Start your freelance career as a data annotator

How to Become a Successful Freelance Data Annotator

Data annotation is an important task in the field of artificial intelligence and machine learning. As a data annotator, you have the opportunity to launch an independent career in this rapidly growing industry. In this article, we will explore the essentials of excelling as a freelance data annotator and offer practical information to help you succeed in this field.

Key takeaways about data annotation

- It is a vital task in artificial intelligence and machine learning.

- Working as a freelance data annotator offers strong career opportunities.

- Developing the right skills and using the right tools is essential to success.

- Networking and creating an online presence will help you find freelance projects.

- Following best practices and continually learning is key to excelling at data annotation.

What is data annotation?

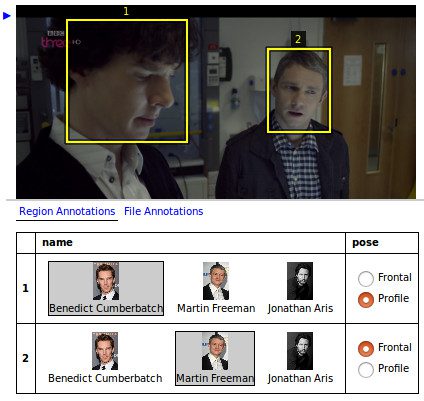

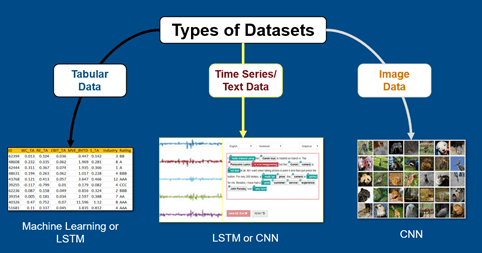

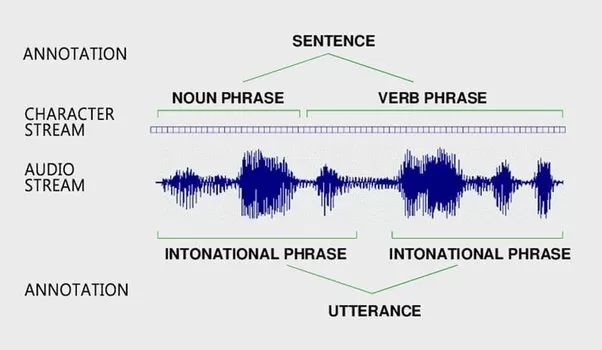

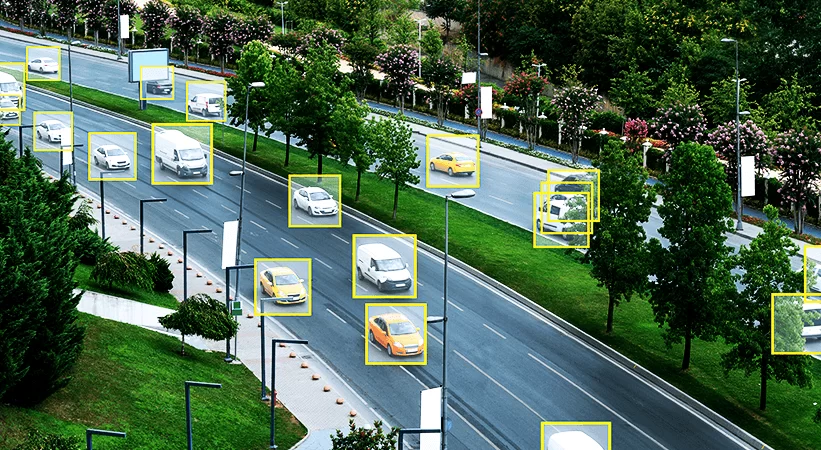

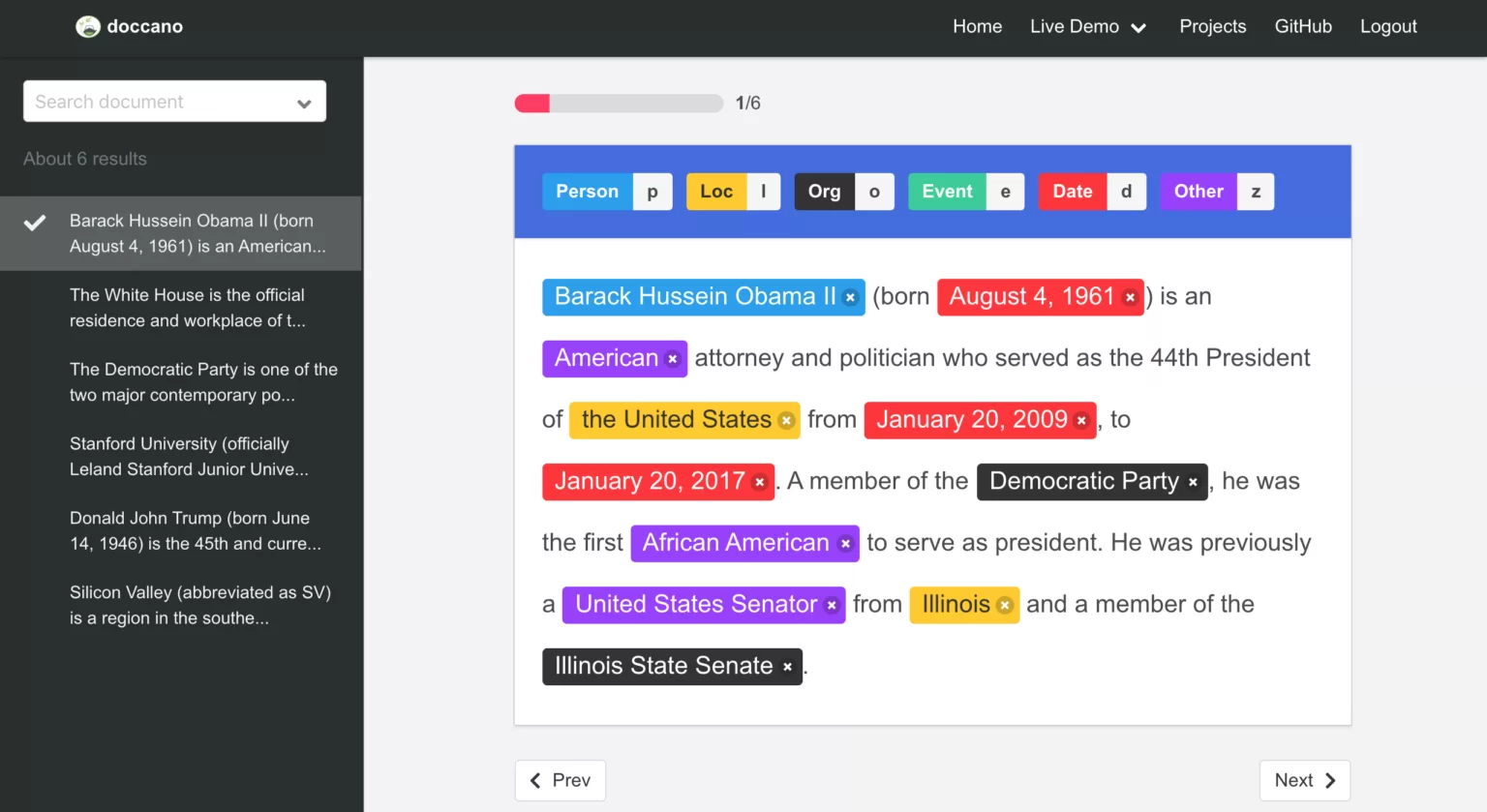

Data annotation is the process of labeling data to train AI and machine learning models. It includes tasks such as image annotation, in which objects or features are marked in images, and text annotation, in which entities or sentiments are labeled in text documents. Data annotation plays an essential role in improving the accuracy and overall performance of AI systems. It requires attention to detail and a solid understanding of the data domain to produce accurate annotations.
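To make the two task types above concrete, here is a minimal sketch of what an image annotation and a text annotation might look like as records. The class names, field names, and sample values are assumptions chosen for illustration; real annotation tools and clients define their own formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """An image annotation: a labeled rectangle in pixel coordinates (hypothetical format)."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def is_valid(self, image_width: int, image_height: int) -> bool:
        # The box must have positive area and lie entirely inside the image.
        return (0 <= self.x_min < self.x_max <= image_width
                and 0 <= self.y_min < self.y_max <= image_height)


@dataclass(frozen=True)
class EntitySpan:
    """A text annotation: a labeled character range [start, end) (hypothetical format)."""
    label: str
    start: int
    end: int

    def text(self, document: str) -> str:
        # Return the characters that this annotation covers.
        return document[self.start:self.end]


# Illustrative sample data, not taken from any real project.
box = BoundingBox(label="car", x_min=40, y_min=60, x_max=200, y_max=180)
sentence = "Acme Corp opened an office in Berlin."
span = EntitySpan(label="LOCATION", start=30, end=36)

print(box.is_valid(image_width=640, image_height=480))
print(span.text(sentence))
```

Checking that a box fits inside the image, or that a span covers the intended words, is the kind of basic sanity check that catches many annotation slips before delivery.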

“Data annotation is the foundation on which AI models are built. It provides the labeled data that machine learning algorithms need to properly understand and classify information.”

The importance of data annotation in AI and machine learning

Data annotation plays an important role in the development and improvement of AI and machine learning models. Through data labeling and annotation, these models can effectively analyze patterns and make accurate predictions and decisions. Data annotation covers tasks such as object detection, sentiment analysis, speech recognition, and more, all of which can be crucial in training AI systems.

Without proper data annotation, AI models can struggle to understand and interpret input data correctly, leading to flawed and unreliable results. Consequently, data annotation is an important step in the machine learning and artificial intelligence pipeline, enabling the creation of reliable, high-performance models.

To excel as a data annotator, certain skills and qualifications are required. These include:

Keen attention to detail: Data annotation requires meticulous focus and precision to ensure accurate annotations.

Knowledge of different data annotation techniques and tools: Familiarity with a variety of annotation methods, including image and text annotation, and skill in using data annotation tools are crucial.

Familiarity with AI and machine learning concepts: Understanding the fundamentals of AI and machine learning helps you appreciate the importance of data annotation in training models.

The ability to work with large data sets: Data annotators often deal with large data sets, so the ability to handle and process large volumes of data is crucial.

Excellent communication skills: Effective communication with clients, team members, and project stakeholders is vital to clarifying requirements and ensuring accurate annotations.

The ability to follow guidelines and instructions: Following the annotation guidelines provided by clients or projects ensures consistency and accuracy in labeling.

Domain knowledge: Depending on the industry you work in, expertise in a particular area (such as medical terminology, e-commerce products, or automotive features) may be beneficial.

Numerous data labeling services, tools, and communities are available to assist data annotators in their work. These resources offer training, guidelines, and frameworks to help annotators carry out their work effectively.

Finding Freelance Data Annotation Opportunities

As a freelance data annotator, there are several ways to find opportunities. One option is to register with data annotation platforms or agencies that match annotators with clients. These platforms offer a steady stream of tasks and handle the administrative aspects of freelancing, such as payments and client communication.

Another option is to network directly with companies and professionals in artificial intelligence and machine learning to find freelance work. By connecting with people and companies in the industry, you can discover potential opportunities and showcase your skills as a data annotator. Building a strong online presence, including through a portfolio or website, can also help attract potential clients.

Essential Tools for Data Annotation

Data annotation is a task that requires a range of tools to tag and annotate data effectively. By leveraging these tools, data annotators can improve their productivity and accuracy during the annotation process.

These tools provide features suited to different types of data annotation tasks, including image annotation, text annotation, and collaboration between annotators. When used effectively, they can greatly streamline the data annotation workflow and improve the quality of annotated data.

Best Practices for Data Annotation

As a data annotator, following best practices is essential to excelling at data annotation. By following these practices, you can ensure the quality and accuracy of your annotations, which in turn supports successful AI and machine learning models.

1. Understand the annotation guidelines

One of the first steps in data annotation is to thoroughly understand the annotation guidelines provided by the client or project. These guidelines describe the specific requirements for labeling data, such as labeling conventions and annotation criteria. By familiarizing yourself with them, you can ensure consistency and alignment with project objectives.

2. Make sure labeling is consistent

Consistency in labeling is critical to maintaining the integrity of the data and training accurate models. When labeling a data set, be sure to apply consistent annotations to comparable data points. This means using the same labels for identical objects or entities, keeping naming conventions consistent, and following the same formatting or labeling requirements throughout.
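A simple automated check can catch some consistency problems before a human review. The sketch below assumes annotations arrive as `(item_id, label)` pairs and that the project has a fixed list of allowed labels; the function names, the normalization rule, and the sample data are all hypothetical.

```python
from collections import defaultdict


def normalize(label: str) -> str:
    """Collapse case and spacing differences, e.g. ' Traffic Light ' -> 'traffic_light'."""
    return "_".join(label.strip().lower().split())


def find_inconsistencies(annotations, allowed_labels):
    """Return (unknown_labels, conflicts) for an iterable of (item_id, label) pairs.

    unknown_labels: normalized labels that are not in the project's label set.
    conflicts: item ids that received more than one distinct normalized label.
    """
    allowed = {normalize(label) for label in allowed_labels}
    labels_by_item = defaultdict(set)
    unknown = set()
    for item_id, label in annotations:
        clean = normalize(label)
        labels_by_item[item_id].add(clean)
        if clean not in allowed:
            unknown.add(clean)
    conflicts = {item: labels for item, labels in labels_by_item.items()
                 if len(labels) > 1}
    return unknown, conflicts


# Illustrative sample data only.
annotations = [
    ("img_001", "Car"),
    ("img_001", "car "),           # same label after normalization
    ("img_002", "Traffic Light"),
    ("img_002", "pedestrian"),     # conflicts with the previous label for img_002
    ("img_003", "automobile"),     # not in the allowed label set
]
unknown, conflicts = find_inconsistencies(
    annotations, ["car", "traffic_light", "pedestrian"]
)
print("Unknown labels:", unknown)
print("Conflicting items:", conflicts)
```

Note that a conflict is only an error when each item is supposed to carry a single label; for multi-label projects the check would need to be adapted.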

3. Maintain high precision

Precision and accuracy are crucial in data annotation. Aim to deliver annotations with a high degree of precision, avoiding errors or misinterpretations. Double-check your annotations for errors or inconsistencies and correct them promptly. Regularly validating annotations through quality checks or peer reviews can also help maintain a high level of accuracy.

4. Review and validate annotations frequently

It is essential to review and validate annotations frequently during the annotation process. By regularly reviewing your annotations, you can spot potential errors, inconsistencies, or ambiguities and take corrective action. Validation techniques, such as cross-validation or inter-annotator agreement testing, can help ensure the accuracy and reliability of the annotated data set.
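One common way to measure inter-annotator agreement between two annotators is Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance. The sketch below is a minimal pure-Python version; the function name and the sample labels are illustrative, and a project may well prescribe a different agreement metric.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items.")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement: chance of a match if each annotator labeled at random
    # according to their own label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Illustrative sentiment labels from two hypothetical annotators.
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
kappa = cohen_kappa(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance; low values often point to ambiguous guidelines rather than careless annotators.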

5. Collaborate and communicate effectively

Collaboration and communication with clients, project managers, or team members are crucial for successful data annotation. Regular communication ensures clarity and alignment with project expectations, and lets you resolve questions or requests for clarification immediately. By actively engaging with project stakeholders, you can foster a collaborative environment and deliver annotations that meet their requirements.

Challenges and Opportunities in Data Annotation

Data annotation, while presenting interesting opportunities for freelancers, also comes with its fair share of challenges. Dealing with large and complex data sets can be overwhelming and requires meticulous attention to detail and a deep knowledge of the data domain.

Additionally, working to tight deadlines and handling varied annotation requirements can put a lot of pressure on data annotators. However, these challenges can also be seen as opportunities for growth and development within the field of data annotation. By constantly learning and staying up to date with new techniques and methodologies, data annotators can overcome these challenges and hone their skills to excel in this dynamic industry.

Challenges in data annotation

Data annotation often involves working with large and complex data sets, requiring meticulous attention and expertise. Ensuring correct and consistent labeling across an entire data set can be a daunting task, especially when dealing with intricate details and ambiguous data. Additionally, meeting tight deadlines while maintaining quality requires a careful balance of efficiency and accuracy. Data annotators must also adapt to different annotation needs, as different projects may require different labeling strategies or guidelines.

Opportunities for advancement

Despite these challenges, data annotation gives freelancers opportunities to hone their skills and increase their knowledge. Continually learning and staying up to date with new techniques and methodologies can increase annotation accuracy and improve the overall performance of AI and machine learning models.

By embracing challenges, data annotators can gain valuable experience and establish themselves as trusted professionals in the field. As demand for AI data annotation continues to grow, there are ample opportunities for freelancers to contribute to innovative projects and have a significant impact on the industry.

Conclusion

Data annotation is a dynamic and thriving field that offers excellent opportunities for freelancers. By developing essential skills, leveraging the right tools, and following best practices, you can launch your freelance career as a data annotator. With the growing demand for machine learning and artificial intelligence solutions, the need for annotated data will continue to grow. So take the leap, embrace the challenges, and embark on your journey as a freelance data annotator in this exciting industry.

Frequently Asked Questions

What is data annotation?

Data annotation is the process of labeling data, such as images or text, to train AI and machine learning models.

Why is data annotation important in AI and machine learning?

Data annotation is essential in artificial intelligence and machine learning because it improves the accuracy and performance of models by providing correctly labeled data for training.

What skills and qualifications are required for data annotation?

Data annotation requires attention to detail, familiarity with artificial intelligence and machine learning principles, and the ability to work with large data sets. Communicating well and following guidelines are also essential.

How can I find freelance data annotation opportunities?

You can join data annotation platforms or agencies, network with professionals, or showcase your skills through a portfolio or website to attract clients.

What are some popular data annotation tools?

What are some best practices for data annotation?

Best practices include understanding the annotation guidelines, maintaining consistency, and frequently reviewing and validating annotations.

What are the challenges and opportunities in data annotation?

The challenges include dealing with large data sets and tight deadlines. However, these challenges can also be opportunities for growth and skill development.

How can I launch my freelance career as a data annotator?

By developing the necessary skills, leveraging the right tools, and following best practices, you can launch your freelance career as a data annotator in this thriving industry.

How to Unlock Success: 7 Tips to Excel as a Freelance Data Annotator

In the fast-paced world of artificial intelligence and machine learning, demand for annotated data is on the rise, fueling the growth of freelance data annotation as a viable career option. Data annotators play a critical role in labeling and structuring data sets, allowing machines to learn and make informed decisions. Whether you’re a seasoned professional or just getting started, mastering the art of data annotation can open doors to rewarding opportunities. Here are seven tips to help you thrive as a freelance data annotator:

1. Build a solid foundation: Before diving into the world of data annotation, it is essential to gain a solid understanding of the underlying concepts and methodologies. Familiarize yourself with common annotation tasks, including image tagging, text tagging, and audio transcription, as well as the tools and software used in the annotation process. Online guides, tutorials, and resources from platforms like 24x7offshoring can serve as useful study material to hone your skills and expand your knowledge base.

2. Hone your annotation skills: Data annotation requires precision, attention to detail, and consistency to produce high-quality labeled data sets. Practice annotating sample data sets and hone your skills in accurately labeling different types of data, whether images, text, audio, or video. Pay close attention to the annotation guidelines, specifications, and quality requirements provided by clients or project managers, and strive to deliver annotations that meet or exceed their expectations. Regular practice and feedback from peers or mentors help you refine your annotation skills and improve your efficiency over time.

3. Stay up to date on industry trends: The field of data annotation is dynamic, and new techniques, tools, and trends are constantly emerging.

Stay abreast of industry developments, advances in annotation technology, and best practices through blogs, forums, webinars, and conferences. Engage with the data annotation community on platforms like 24x7offshoring and specialized forums to exchange ideas, learn from peers, and stay informed about the latest trends shaping the industry. By staying proactive and adaptable, you can position yourself as an informed and sought-after data annotator in the freelance market.

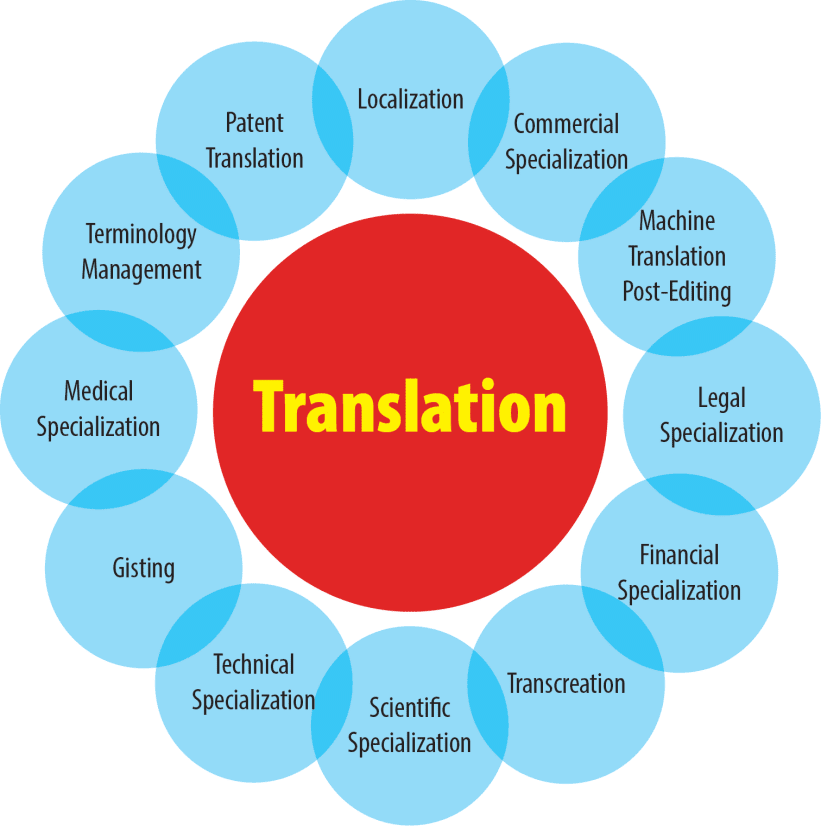

4. Cultivate attention to detail: Attention to detail is paramount in data annotation, as even minor errors or inconsistencies can compromise the quality and reliability of labeled data sets.

Pay meticulous attention to annotation guidelines, ensure accuracy and consistency in all annotations, and review your work for errors or omissions before submitting it. Develop strategies to mitigate common sources of error, including ambiguity, occlusion, and label noise, and adopt a systematic approach to reviewing and validating annotations to maintain quality standards. Cultivating a keen eye for detail will not only improve your annotation skills but also earn you a reputation for delivering accurate and reliable results.

5. Embrace collaboration and feedback: Collaboration and feedback are crucial components of professional growth and development as a freelance data annotator. Engage with clients, project managers, and fellow annotators to solicit feedback, clarify requirements, and address any concerns or challenges that arise during the annotation process.

Actively participate in team meetings, brainstorming sessions, and peer reviews to exchange ideas, share best practices, and learn from others’ experiences. Embrace constructive criticism as an opportunity for learning and improvement, and work feedback into your workflow to improve your skills and overall performance over time.

6. Prioritize time management and organization: Effective time management and organization are essential to maximizing productivity and meeting project deadlines as a freelance data annotator. Develop a systematic workflow and prioritize tasks based on their urgency and importance, allocating sufficient time for annotation, review, and quality assurance activities.

Take advantage of project management tools, task trackers, and calendar apps to schedule and track your daily activities, set achievable goals and milestones, and monitor your progress toward them. Break big projects down into smaller, more manageable tasks, and set a routine that balances productivity with self-care to avoid burnout and sustain long-term success.

7. Build your professional brand: As a freelance data annotator, creating a strong professional brand is key to attracting clients, securing projects, and establishing yourself as a trusted expert in the field. Create an attractive portfolio that showcases your knowledge, experience, and past projects, and highlights your specific strengths and skills as a data annotator.

Take advantage of social media platforms, professional networks, and freelance marketplaces to promote your services, connect with potential clients, and showcase your portfolio to a broader audience. Cultivate client relationships, consistently deliver high-quality results, and ask satisfied clients for testimonials or referrals to build credibility and trust in your brand.

In the end, success as a freelance data annotator requires a combination of technical knowledge, attention to detail, continuous learning, and strong communication skills. By following these seven tips and taking a proactive, collaborative approach to your work, you can excel in the field of data annotation, unlock new opportunities, and carve out a fulfilling career path in the ever-evolving landscape of artificial intelligence and machine learning.

Broadening horizons: elevating your career as a freelance data annotator

In freelance data annotation, there are many avenues for professional growth and fulfillment. Let’s look at further strategies and considerations to advance your career as a freelance data annotator:

8. Specialize in niche domains: While proficiency in general data annotation tasks is crucial, consider specializing in niche or vertical domains to differentiate yourself and attract specialized projects. Whether it’s medical imaging, geospatial data annotation, or financial data labeling, becoming an expert in a specific area can open doors to high-value projects and lucrative opportunities. Invest time in gaining domain-specific expertise, understanding the particular annotation requirements, and honing your skills to become an expert in your chosen niche.

9. Leverage automation and tools: As the field of data annotation evolves, automation and tooling have become increasingly common, offering ways to optimize workflows and improve productivity. Get familiar with annotation tools and software platforms, including 24x7offshoring, which offer features to automate repetitive tasks, manage annotation projects, and ensure quality control. Embrace emerging technologies, such as computer vision models for semi-automated annotation and data augmentation methods for producing synthetic data, so that you can work more efficiently and deliver better annotations at scale.
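As a rough illustration of semi-automated annotation, one widely used pattern is to let a model propose labels and send only its low-confidence predictions to a human annotator. The sketch below assumes predictions arrive as `(item_id, label, confidence)` tuples; the function name, the 0.9 threshold, and the sample predictions are hypothetical and would need tuning against a real project's quality targets.

```python
def route_predictions(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted items and items needing human review.

    predictions: iterable of (item_id, label, confidence) with confidence in [0, 1].
    threshold: assumed cut-off; predictions at or above it are accepted as-is.
    """
    accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            accepted.append((item_id, label))
        else:
            needs_review.append((item_id, label))
    return accepted, needs_review


# Illustrative model output only.
predictions = [
    ("img_101", "cat", 0.98),
    ("img_102", "dog", 0.62),
    ("img_103", "cat", 0.90),
    ("img_104", "bird", 0.41),
]
accepted, needs_review = route_predictions(predictions)
print("Auto-accepted:", accepted)
print("Needs human review:", needs_review)
```

Even auto-accepted items are usually worth spot-checking, since a model can be confidently wrong.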

10. Build long-term client relationships: Cultivating long-term client relationships is critical to sustaining a successful freelance career in data annotation. Focus on delivering excellent results, exceeding client expectations, and demonstrating your commitment to their success.

Proactively communicate with clients, provide regular updates on project progress, and look for opportunities to add value beyond the scope of your initial engagement. By fostering trust, reliability, and professionalism, you can gain repeat business, referrals, and endorsements from satisfied clients, ensuring a steady flow of projects and a stable income over time.

11. Diversify your skill set: In addition to mastering basic data annotation tasks, consider diversifying your skill set to expand your career opportunities and tackle more complex projects. Explore complementary skills such as data preprocessing, feature engineering, and model evaluation, which are important for end-to-end machine learning pipeline development.

Explore related roles such as data curation, data analysis, and machine learning engineering, which build on your annotation experience while providing opportunities to advance your career and increase your earnings. By continually expanding your skills and adapting to changing industry trends, you can future-proof your career and stay competitive in the dynamic field of artificial intelligence.

12. Invest in continuous learning: The data annotation industry is continually evolving, with new techniques, tools, and methodologies emerging regularly. Invest in continued learning and professional development to stay ahead and remain relevant in the competitive freelance market. Sign up for advanced courses, workshops, and certifications to deepen your knowledge of annotation techniques, machine learning algorithms, and domain-specific applications.

Participate in online communities, forums, and hackathons to collaborate with peers, share ideas, and solve challenging real-world annotation problems. By adopting a mindset of lifelong learning and improvement, you can stay at the forefront of the industry and work toward long-term success as a freelance data annotator.

13. Seek mentoring and guidance: Mentoring can be valuable for aspiring freelance data annotators trying to navigate the complexities of the field and accelerate their career growth. Look for experienced mentors or industry veterans who can provide guidance, advice, and support as you embark on your freelancing journey.

Join mentoring programs, 24x7offshoring groups, and mentor-mentee platforms to connect with seasoned professionals willing to share their knowledge and insights. Actively seek feedback from mentors, draw on their experience to overcome challenges, and learn from their successes and mistakes to chart a path to success in your own career as a freelance data annotator.

14. Foster a growth mindset: Embrace a growth mindset characterized by resilience, adaptability, and the willingness to face challenges and overcome setbacks. View obstacles as opportunities for growth, approach new tasks with curiosity and enthusiasm, and be open to constructive feedback and criticism. Cultivate a passion for continued growth, set ambitious goals for your career, and celebrate your achievements along the way.

By fostering a growth mindset, you can build the resilience and determination needed to overcome obstacles, seize opportunities, and succeed as a freelance data annotator in the dynamic and rapidly evolving field of artificial intelligence.

In the end, the path to success as a freelance data annotator is paved with continuous learning, strategic networking, and a constant commitment to excellence. By embracing specialization, automation, and diversification, as well as fostering long-term client relationships and investing in continuous learning and mentorship, you can advance your career, unlock new opportunities, and thrive in the ever-evolving landscape of artificial intelligence and machine learning. With dedication, perseverance, and a growth mindset, there is ample room for professional growth and satisfaction as a freelance data annotator.

What it takes to be a data annotator: Skills and requirements

Becoming a freelance data annotator offers flexibility and the ability to work from home. Data annotators label the data points used to train machine learning models. They perform many types of data annotation tasks, including bounding boxes, video annotation, transcription, translation, and text copying. Freelance data annotators have control over their hours and schedules and are responsible for their own productivity. They are paid according to the amount of data they label and must ensure accuracy and consistency in their work.

Key takeaways:

- Data annotators label the data points used to train machine learning models.

- They perform tasks including bounding boxes, video annotation, transcription, translation, and text copying.

- Freelance data annotators have flexibility in their hours and schedules.

- Accuracy and consistency are vital to your success as a data annotator.

- Data annotators are responsible for their own productivity and for meeting deadlines.

The advantages of freelance data annotation

Freelance data annotators enjoy the flexibility and work-life balance that come with independent work. They have the freedom to choose when and where they work, allowing them to create a schedule that suits their needs. Whether working from the comfort of their homes or from a local coffee shop, freelancers are in control of their work environment.

Working remotely offers convenience and comfort. Freelancers can avoid the stress of commuting and the expenses that come with it. Instead, they can focus on their tasks, making sure they have a quiet, distraction-free space in which to do their data annotation work.

Freelancers also have the opportunity to work on a variety of projects, exposing them to different industries and annotation needs. This not only keeps the work interesting but also broadens their experience and skills. With each project, freelancers learn its objectives and goals and adapt their annotations accordingly to achieve the best results.

Freelance data annotators play a crucial role in the advancement of technology and artificial intelligence. Their annotated data helps train machine learning models, leading to higher accuracy and performance in various applications. By contributing to the improvement of these technologies, freelancers have a real effect on the future of AI and its widespread adoption.

Overall, the benefits of freelance data annotation, including flexibility, work-life balance, and the potential for personal growth, make it an attractive option for those seeking freelance work in the field.

Freelance vs. Employed Data Annotator

Freelance data annotators and employed data annotators differ markedly in the structure and benefits of their work. While freelancers work on a project or task basis, employed annotators follow a traditional employment structure. Let’s look at the important differences between these two roles.

Work Structure

Freelance data annotators enjoy the freedom of setting their own schedules and working on a project-by-project basis. They have the autonomy to choose the tasks they want to take on, which gives them a sense of independence in their work. In contrast, employed data annotators keep regular work schedules and are assigned tasks by their employers. Their schedules and tasks are usually determined by the needs of the company.

Employee Benefits

Freelance data annotators do not receive employee benefits such as paid time off or health insurance. They are responsible for arranging their own time off and covering their own health care needs. Additionally, freelancers are responsible for managing their own taxes, which includes tracking and reporting their income. Employed data annotators, on the other hand, enjoy the benefits their employers provide, including paid time off, health insurance, and the convenience of having taxes withheld from their earnings.

Compensation Structure

Payment for freelance data annotators is usually based on the number of data points labeled. Because they are often paid per data point, freelancers can earn more through speed and accuracy. By comparison, employed data annotators earn a regular salary or hourly wage, regardless of the number of data points they label. Their compensation is set by their employment contracts or agreements.
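To compare per-data-point pay with an hourly wage, it can help to convert one into the other. The sketch below is simple arithmetic under stated assumptions: the rate, throughput, and acceptance figures are made up for illustration, and the idea that only accepted items are paid is a hypothetical policy, not something every platform applies.

```python
def effective_hourly_rate(rate_per_item, items_per_hour, acceptance_rate=1.0):
    """Estimate hourly earnings from per-item pay.

    rate_per_item: payment per labeled data point (any currency).
    items_per_hour: how many data points the annotator labels in an hour.
    acceptance_rate: assumed fraction of items that pass review and are paid.
    """
    if not 0.0 <= acceptance_rate <= 1.0:
        raise ValueError("acceptance_rate must be between 0 and 1.")
    return rate_per_item * items_per_hour * acceptance_rate


# Hypothetical figures: 0.05 per item, 300 items per hour, 96% accepted.
hourly = effective_hourly_rate(rate_per_item=0.05, items_per_hour=300,
                               acceptance_rate=0.96)
print(f"Effective hourly rate: {hourly:.2f}")
```

Running the same calculation with a faster pace but a lower acceptance rate shows why speed alone does not guarantee higher earnings when rejected work goes unpaid.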

In short, freelance data annotators enjoy the freedom and flexibility of project-based work, setting their own schedules and selecting their tasks. However, they do not receive employee benefits such as paid time off or health insurance, and they are responsible for their own taxes. Employed data annotators have the stability of conventional employment, with benefits provided by their employers. The following table compares the key differences between freelance and employed data annotators:

- Independent Statistics Annotator of used statistics

- The scorer works according to the commitment or according to the assignment and meets a normal work schedule

- Set your own hours

- Respect the hours assigned by the employer

No employee benefits Purchase employee benefits (e.g., paid time off, health care, insurance)

handle their own taxes Taxes withheld with the help of the corporation

compensation based on data points called ordinary earnings or hourly wages

Understanding the differences between freelance and employed data annotation can help people choose the work structure and benefits that align with their preferences and goals.

Skills for Successful Freelance Data Annotators

Successful freelance data annotators possess a number of important skills that allow them to excel at their job. These skills include:

Computer Skills: Data annotators need to be comfortable working on computers and have the basic computer skills required to navigate data annotation tools and software.

Attention to Detail: Producing accurate and precise annotations requires a high level of attention to detail. Annotators must carefully examine and label data points according to specific guidelines.

Self-management: As freelancers, data annotators must exercise self-discipline to stay productive and meet the deadlines for each task. They must organize their responsibilities properly and work independently.

Quiet Focus: A quiet environment is essential for data annotators to concentrate and stay focused while performing annotation tasks. Distractions can affect the accuracy and quality of their work.

Meeting Deadlines: Meeting project deadlines is important for maintaining a steady flow of work as a freelance data annotator. Annotators must prioritize tasks and deliver results within established deadlines.

Understanding Strengths: Knowing one’s strengths and limits as a data annotator allows for better task allocation and efficient use of time. Specializing in areas in which you excel can help increase accuracy and productivity.

Organizational Thinking: Effective organizational thinking is crucial for annotators to manage multiple projects, prioritize tasks, and ensure a smooth workflow. Annotators need to strategize and plan their annotation approach based on project needs.

By cultivating these skills, freelance data annotators can excel at their work, meet client expectations, and build a successful career in the data annotation field.

The Importance of Hard Skills in Data Annotation

Data annotators require a combination of hard and soft skills to carry out their responsibilities successfully. While soft skills enable effective communication and problem solving, hard skills provide the essential technical foundation for accurate and efficient data annotation.

“Hard skills are the technical skills that data annotators need to perform their tasks accurately and efficiently.”

Within the field of data annotation, several hard skills stand out as critical to success. These skills include:

SQL Proficiency: The ability to query and manage databases is vital to accessing the relevant data needed for annotation tasks. Knowledge of Structured Query Language (SQL) enables annotators to retrieve and analyze essential information effectively.

Typing Skills: Typing speed and accuracy are critical for data annotators to process large amounts of information quickly and accurately. The ability to enter information quickly keeps annotation workflows efficient.

Programming Languages: Familiarity with programming languages such as Python, R, or Java is an advantage for automating annotation tasks and developing custom annotation pipelines or tools. Annotators with programming skills can streamline the annotation process and improve productivity.

Attention to Detail: Maintaining precision and accuracy is paramount in data annotation. Annotators must have a keen eye for detail to ensure that each annotation is thorough, consistent, and aligned with the specific annotation guidelines.

By honing these hard skills, data annotators can improve their proficiency and effectiveness in carrying out annotation tasks.
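To show how the SQL and programming skills listed above fit together, here is a minimal sketch that uses Python's built-in `sqlite3` module to pull unlabeled items from a database and count the labels assigned so far. The `items` table, its columns, and the sample rows are all assumptions made for illustration; real annotation platforms define their own schemas.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical `items` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE items (id INTEGER PRIMARY KEY, text TEXT NOT NULL, label TEXT)"
    )
    conn.executemany(
        "INSERT INTO items (text, label) VALUES (?, ?)",
        [
            ("Great product, fast delivery", "positive"),
            ("Package arrived damaged", "negative"),
            ("Is this available in blue?", None),  # not labeled yet
            ("Works as described", "positive"),
            ("Still waiting for a refund", None),  # not labeled yet
        ],
    )
    conn.commit()
    return conn


def fetch_unlabeled(conn: sqlite3.Connection, limit: int) -> list[tuple[int, str]]:
    """Return up to `limit` items that have no label yet, lowest id first."""
    cur = conn.execute(
        "SELECT id, text FROM items WHERE label IS NULL ORDER BY id LIMIT ?",
        (limit,),
    )
    return cur.fetchall()


def label_counts(conn: sqlite3.Connection) -> dict[str, int]:
    """Count how many items carry each label, ignoring unlabeled items."""
    cur = conn.execute(
        "SELECT label, COUNT(*) FROM items WHERE label IS NOT NULL GROUP BY label"
    )
    return dict(cur.fetchall())


if __name__ == "__main__":
    conn = build_demo_db()
    for item_id, text in fetch_unlabeled(conn, limit=10):
        print(f"To label: #{item_id} {text}")
    print("Labels so far:", label_counts(conn))
```

The `?` placeholders keep values out of the SQL string itself, which is a good habit whenever a query takes input from a script or a user.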

Data Annotation Specialization Across Industries

The demand for specialized annotators has grown dramatically as industries recognize the importance of data accuracy and relevance. To meet this need, companies like Keymakr Data Annotation Service offer in-house teams of specialized annotators who possess industry-specific knowledge. These annotators understand the nuances of various sectors, allowing them to provide more accurate and effective data annotations.

Having specialized annotators dedicated to particular industries ensures that annotations are tailored to the specific needs of each sector. For example, in waste management, annotators with knowledge of the field can accurately label different types of waste materials, helping organizations improve waste sorting and recycling processes. Similarly, in the retail sector, annotators with knowledge of product categorization and attributes can provide precise annotations for e-commerce platforms, improving product search and recommendation systems.

By leveraging industry-specific expertise, specialized annotators contribute to greater data accuracy, which is essential for training machine learning models. With their deep knowledge of industry context, they can annotate data more accurately, reducing errors and improving the overall quality of labeled datasets.

Benefits of Data Annotation from Specialized Annotators:

Superior Data Accuracy: Specialized annotators possess domain experience and knowledge that allow them to annotate data with precision and relevance.

Industry-Specific Knowledge: These annotators understand the specific requirements and challenges of particular industries, resulting in more effective annotations.

Greater Efficiency: Specialized annotators are familiar with industry-specific annotation guidelines, tools, and techniques, allowing them to work quickly and efficiently.

Improved Data Quality: By leveraging their knowledge, specialized annotators contribute to higher-quality datasets, leading to better machine learning model performance.

Organizations in many sectors are recognizing the value of specialized annotators and investing in partnerships with data annotation service providers. This ensures that their annotation tasks are completed by professionals with the necessary industry-specific experience. Ultimately, the contribution of specialized annotators results in more accurate and relevant data annotations, paving the way for advanced applications of artificial intelligence and machine learning in specific industries.

With the increasing importance of data accuracy and industry-specific expertise, the demand for specialized annotators is expected to keep growing. Their contributions play an important role in advancing numerous industries and optimizing AI-driven processes.

The Role of Soft Skills in Data Annotation

Soft skills are crucial for data annotators to excel in their work. Effective communication, strong teamwork, adaptability, problem-solving skills, interpersonal skills, and critical thinking all play a critical role in the success of data annotation projects.

When working on complex projects, data annotators depend on effective communication to ensure clarity and shared understanding among team members. This is particularly important in remote collaborations, where clear and concise communication is crucial to project success.

Like communication, strong interpersonal skills contribute to successful data annotation outcomes. Collaborative work requires people to interact well with others, listen actively, and offer constructive feedback. This fosters a positive work environment and promotes efficient teamwork.

Effective communication and strong interpersonal skills enhance collaboration and efficiency in data annotation tasks.

Another key skill for data annotators is adaptability. Annotation tasks vary in complexity and require the ability to adapt to new techniques, tools, and guidelines. Adaptable annotators can quickly learn and apply new skills, ensuring accuracy and consistency in their annotations.

Problem-solving skills are essential for data annotators when faced with complex annotation tasks. Being able to analyze and approach challenging situations with critical thinking allows annotators to make informed decisions and deliver accurate annotations.

Ultimately, soft skills play an important role in the success of data annotation projects. Effective communication, strong teamwork, adaptability, problem-solving skills, interpersonal skills, and critical thinking all contribute to accurate, consistent, and impactful data annotation.

Crucial Soft Skills for Data Annotators

In addition to technical skills, data annotators need to possess crucial soft skills. These include the ability to prioritize tasks and manage time effectively. Prioritization allows annotators to determine the order in which tasks should be performed based on their importance or deadline. Time management skills enable annotators to allocate their time effectively, ensuring deadlines are met and productivity is maximized.

Another key skill for data annotators is critical thinking. This skill is necessary to analyze complex datasets and make informed decisions during the annotation process. Annotators must be able to think critically to identify patterns, solve problems, and ensure correct annotations.

Accuracy and attention to detail are crucial for data annotators. They must be detail-oriented to ensure error-free annotations and maintain data integrity. Annotators must pay close attention to every aspect of the data, ensuring that all relevant information is captured appropriately.

Effective communication and teamwork skills are also vital for data annotators. They frequently collaborate with others on annotation projects, and clear communication ensures that everyone is on the same page. Working effectively in a team allows annotators to share ideas, address challenges, and deliver accurate annotations.

Developing and strengthening these soft skills will not only make data annotators more successful in their roles but will also improve their overall performance and contribute to the success of data annotation projects.

Problem-Solving Skills for Data Annotators

Problem-solving skills play an important role in the work of data annotators. These professionals need to analyze complex problems, identify appropriate solutions, and make informed decisions about annotations. By applying their problem-solving skills, data annotators ensure accurate and meaningful data labeling.

Data annotation often involves working with numerical information. Strong numerical skills allow annotators to analyze and manage data effectively. They can interpret patterns, trends, and relationships within the data, allowing them to make informed decisions about annotations and contribute to the overall success of machine learning models.

Data visualization is another crucial skill for data annotators. The ability to present data visually allows annotators to communicate complex data in a clear and insightful way. By using data visualization methods such as charts, graphs, and diagrams, annotators can enhance data insights and facilitate better decision making.

Critical thinking is a fundamental skill for data annotators. It allows them to evaluate and analyze data, detect potential errors or inconsistencies, and make accurate judgments. With critical thinking skills, annotators can ensure the completeness and accuracy of annotations, contributing to more reliable machine learning results.

Attention to detail is paramount for data annotators. They must take a meticulous approach, carefully analyzing each data point, annotation guideline, and labeling requirement. Attention to detail ensures that annotations are accurate, consistent, and aligned with the required guidelines, improving the overall quality of labeled data.

Examples of problem-solving skills for data annotators:

| Problem | Response |
| --- | --- |
| Data inconsistency across multiple sources | Examine and compare information from numerous sources, identify patterns, resolve discrepancies, and create consistent annotations. |
| Recognition of complex data patterns | Apply critical thinking skills to identify and categorize complex patterns, ensuring that annotations are correct and meaningful. |
| Inconsistent annotation guidelines | Use problem-solving skills to investigate and clarify ambiguous guidelines, seek clarification from relevant stakeholders, and establish a standardized approach to annotations. |
| Data anomalies and outliers | Recognize and address anomalies and outliers in data, ensuring they are correctly annotated and do not bias machine learning models. |
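One common way to resolve discrepancies when several sources have labeled the same items is a simple majority vote, with unclear cases sent for manual review. The sketch below illustrates that idea only; the function name, the item identifiers, and the 0.5 agreement threshold are assumptions, not a method prescribed by any particular annotation service.

```python
from collections import Counter


def resolve_labels(
    labels_by_item: dict[str, list[str]],
    min_agreement: float = 0.5,
) -> tuple[dict[str, str], list[str]]:
    """Majority-vote each item's labels.

    An item is resolved when its most common label accounts for more than
    `min_agreement` of the votes; otherwise it is flagged for manual review.
    """
    resolved: dict[str, str] = {}
    needs_review: list[str] = []
    for item_id, labels in labels_by_item.items():
        if not labels:
            # Nothing to vote on, so a person has to look at this item.
            needs_review.append(item_id)
            continue
        top_label, top_count = Counter(labels).most_common(1)[0]
        if top_count / len(labels) > min_agreement:
            resolved[item_id] = top_label
        else:
            needs_review.append(item_id)
    return resolved, needs_review


if __name__ == "__main__":
    # Hypothetical labels collected from three sources for three images.
    votes = {
        "img_1": ["cat", "cat", "dog"],
        "img_2": ["cat", "dog"],
        "img_3": ["dog", "dog", "dog"],
    }
    resolved, needs_review = resolve_labels(votes)
    print("Resolved:", resolved)
    print("Needs review:", needs_review)
```

Items that land in the review list are the ones where guidelines are most likely ambiguous, so they are also good candidates for a clarification request to the project owner.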

Data annotators with strong problem-solving skills, numerical skills, data visualization skills, critical thinking, and attention to detail are well equipped to excel in their work, making valuable contributions to the development of artificial intelligence and machine learning technologies.

Continuous Learning and Self-Development

Data annotation is a continually evolving discipline, with new developments and industry advancements occurring regularly. To remain relevant and meet industry demands, data annotators must prioritize continuous learning and self-development. By actively seeking out training sessions and attending workshops, annotators can improve their skills and stay up to date with the latest tools and techniques.

Feedback is also a crucial part of self-improvement. By seeking feedback from peers and supervisors, annotators can discover areas for development and work to improve their overall performance. This feedback loop allows them to learn from their mistakes and continually refine their annotation skills.

Continuous learning and self-development are not only important for personal growth but also contribute to professional success. As the field of data annotation advances, annotators who prioritize their improvement and acquire relevant skills will stand out and excel in their careers.

Advantages of continuous learning and self-improvement:

- Stay up to date with industry trends and advancements

- Improve annotation skills through training and workshops

- Improve accuracy and performance in data annotation tasks

- Adapt to new tools and techniques

- Position yourself for future career opportunities

Continuous learning and self-development are key ingredients for success in the rapidly developing and constantly changing field of data annotation. By adopting a growth mindset and actively seeking new knowledge and skills, annotators can stay on the cutting edge and unlock new possibilities in their careers.

| Benefits of continuous learning | Benefits of self-development |
| --- | --- |
| 1. Stay updated with industry developments and news | 1. Enhance annotation skills and knowledge |
| 2. Expand professional network through training sessions | 2. Improve accuracy and consistency in annotations |
| 3. Professional growth and career advancement | 3. Adaptability to new tools and techniques |

Becoming a successful freelance data annotator requires a combination of technical skills, attention to detail, and strong soft skills.

Data annotation skills play a crucial role in accurately labeling data points for machine learning models. Attention to detail ensures the accuracy and consistency of annotations, while soft skills such as communication, teamwork, and problem solving contribute to effective collaboration within data annotation projects.

Continuous learning and self-improvement are crucial for freelance data annotators to remain competitive in the field. As technology advances, staying up to date with industry developments and acquiring new skills is essential for career growth. Annotators must actively seek out training sessions, attend workshops, and keep up with the latest tools and techniques.

Freelance data annotation offers a flexible and rewarding career path. As the field of artificial intelligence and machine learning continues to develop, there are ample opportunities for freelance data annotators. Continuous learning and self-development will enable them to adapt to evolving technologies and advance their careers as data annotators.

Frequently Asked Questions

What are the job requirements for a data annotator?

Job requirements for a data annotator generally include experience in data labeling, knowledge of data annotation methods and tools, familiarity with annotation guidelines, data curation skills, and the ability to ensure quality control, accuracy, and consistency in labeling.

What are the advantages of freelance data annotation?

Freelance data annotation offers flexibility, work-life balance, and the ability to work remotely. Freelancers have control over their schedules, can work from home, and can choose projects that interest them.

How is freelance data annotation different from employed data annotation?

Freelance data annotators work on a task or project basis and have the freedom to set their own schedule. They do not receive job benefits and are responsible for their own productivity, while employed data annotators have a traditional job structure with benefits provided by their employer.

What skills are essential for successful freelance data annotators?

Successful freelance data annotators must have computer skills, attention to detail, a talent for self-management, and the ability to work in a quiet, focused environment. Meeting deadlines, understanding their strengths, and organizing tasks effectively are also vital skills.

What are the critical hard skills for data annotation?

Hard skills, such as SQL proficiency, typing skills, and knowledge of programming languages such as Python, R, or Java, are important for data annotators. Attention to detail is vital to maintaining precision in the annotation process.

How does specialization play a role in data annotation?

Specialized annotators who understand the nuances of particular industries contribute to more accurate and effective data annotation. Companies like Keymakr Data Annotation Service offer in-house teams of specialized annotators for various industries.

What soft skills are essential for data annotation?

Effective communication, teamwork, adaptability, problem-solving skills, interpersonal skills, and critical thinking are soft skills vital to successful data annotators.

What are the crucial soft skills of data annotators?

The main soft skills of data annotators include the ability to prioritize tasks, manage time well, think critically, pay attention to detail, and communicate and work well with others.

What problem-solving skills are important for data annotators?

Data annotators need problem-solving skills to analyze complex problems, identify solutions, and make informed decisions about annotations. Numerical skills and data visualization skills also help annotators work with numbers and present data effectively.

How important is continuous learning for data annotators?

Continuous learning is crucial for data annotators to stay up to date on industry trends. They must actively seek out training sessions, attend workshops, and keep up with the latest tools and techniques. Seeking feedback and constantly improving skills is also important for personal and professional growth.

What are the future opportunities in the field of freelance data annotation?

Freelance data annotation offers a flexible and rewarding career path, with future opportunities in the growing field of artificial intelligence and machine learning. Continuous learning and self-improvement in data annotation skills are important to staying competitive in the field.