Everything About Neural Network Activation Functions Is Here

“The entire globe is a massive data problem in neural networks.”

It turns out that this statement applies to both our brains and machine learning.

Our brain is always attempting to categorize incoming data into “useful” and “not-so-useful” categories.

Artificial neural networks in deep learning go through a similar process.

This sorting helps a neural network operate effectively by ensuring that it learns from the useful information rather than getting stuck analyzing the unhelpful part.

What is the Activation Function of a Neural Network?

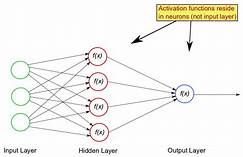

An activation function determines whether or not a neuron is activated. It uses simple mathematical operations to decide whether the neuron’s input to the network is important for the prediction.

The Activation Function’s job is to generate output from a set of input values that are passed into a node (or a layer).

But—

Let’s back up for a moment and define what a node is.

If we compare a neural network to the human brain, a node is a replica of a neuron that receives a set of input signals (external stimuli).

The brain evaluates these incoming signals and decides whether or not the cell should be activated (“fired”) based on their kind and strength.

The activation function’s primary role is to convert the node’s summed weighted input into an output value that is passed to the next hidden layer or used as the final output.
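To make the idea of a "summed weighted input" concrete, here is a minimal sketch of a single node. The names `neuron_output` and `sigmoid`, and the example numbers, are illustrative assumptions rather than anything defined in this article.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation):
    """Compute the weighted sum of the inputs plus a bias, then apply the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical node with two inputs: z = 0.8*0.5 + 0.2*(-1.0) + 0.1 = 0.3
out = neuron_output([0.5, -1.0], [0.8, 0.2], 0.1, sigmoid)
print(out)
```

The value printed is what the node would hand to the next layer. Swapping `sigmoid` for another function changes how the same weighted sum is turned into an output.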

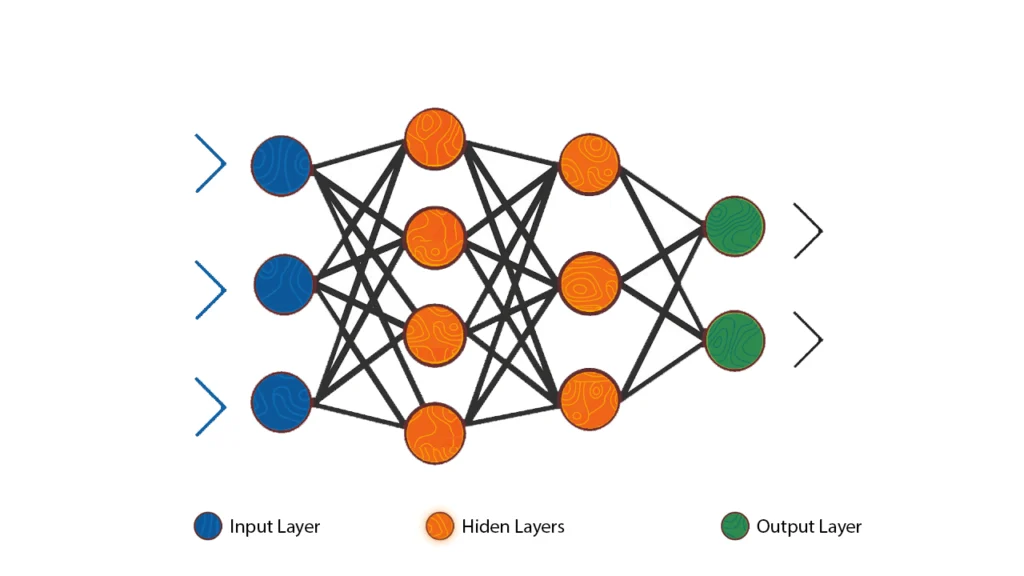

Three Types of Neural Network Activation Functions

Now that we’ve covered the fundamentals, let’s look at some of the most common neural network activation functions.

Binary Step Function

A threshold value determines whether a neuron should be activated or not in a binary step function.

The input to the activation function is compared to a threshold: if it is higher, the neuron is activated; if it is lower, the neuron is deactivated and its output is not passed on to the next hidden layer.

The binary step function has the following drawbacks:

• It cannot provide multi-value outputs, so it cannot be used for multi-class classification problems, for example.

• The step function’s gradient is zero, which hinders the backpropagation process.
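A small sketch can illustrate both the threshold rule and the zero-gradient drawback. The function names are hypothetical. Treating an input exactly equal to the threshold as "activated" is an assumption, since the text above only covers the higher and lower cases.

```python
def binary_step(x, threshold=0.0):
    """Return 1 (activated) at or above the threshold, otherwise 0 (deactivated)."""
    # Assumption: an input equal to the threshold counts as activated.
    return 1 if x >= threshold else 0

def numerical_gradient(f, x, eps=1e-6):
    """Estimate the slope of f at x with a central difference."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

print(binary_step(2.0), binary_step(-0.5))
# Away from the threshold the output does not change, so the slope is zero.
print(numerical_gradient(binary_step, 2.0))
print(numerical_gradient(binary_step, -3.0))
```

Because the slope is zero everywhere except at the threshold itself, a gradient-based update receives no signal about which direction to move the weights.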

Linear Activation Function

The linear activation function, also known as the identity function, is one where the activation is proportional to the input.

However, a linear activation function has two major drawbacks:

• Backpropagation cannot learn anything useful from it, since the function’s derivative is a constant and has no relationship to the input x.

• If a linear activation function is used, all layers of the neural network collapse into one. No matter how many layers there are, the last layer is still a linear function of the first layer. A linear activation function effectively reduces the neural network to a single layer.
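The collapse of stacked linear layers can be checked directly. The sketch below uses made-up weights (`W1`, `b1`, `W2`, `b2`) and small helper functions written for this example. It builds a two-layer network with identity activations and then a single layer that gives the same output.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def add(u, v):
    """Add two vectors element by element."""
    return [a + b for a, b in zip(u, v)]

# Hypothetical weights and biases for two layers.
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [1.0, 0.0]
W2, b2 = [[3.0, 1.0]], [-2.0]

def two_layer(x):
    hidden = add(matvec(W1, x), b1)     # identity activation: output equals input
    return add(matvec(W2, hidden), b2)  # identity activation again

# Equivalent single layer: W = W2 * W1 and b = W2 * b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

def one_layer(x):
    return add(matvec(W, x), b)

x = [2.0, -1.0]
print(two_layer(x), one_layer(x))
```

Both calls return the same value for any input, which is why adding more layers gains nothing unless a non-linear activation sits between them.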

To read more: https://24x7offshoring.com/blog/

Non-Linear Activation Functions

The linear activation function described above is simply a linear regression model.

Because of its limited power, the model cannot create complex mappings between the network’s inputs and outputs.

Non-linear activation functions overcome the following limitations of linear activation functions:

• They allow backpropagation, because the derivative is now related to the input, making it possible to go back and work out which weights in the input neurons can produce a better prediction.

• They allow multiple layers of neurons to be stacked, because the output is a non-linear combination of the input passed through multiple layers. Any output can then be represented as a functional computation in the neural network.

Elements of a Neural Network Architecture

Here’s the deal:

It may be difficult to go deeper into the topic of activation functions if you don’t understand the concept of neural networks and how they work.

That is why it is a good idea to brush up on your knowledge and take a brief look at the structure and components of a neural network architecture.

The figure above shows a neural network made up of interconnected neurons. Each of them is characterized by its weight, bias, and activation function.

Input Layer

The input layer takes raw input from the domain. This layer does not perform any computation. Its nodes simply pass the information (features) on to the hidden layer.

Hidden Layer

As the name implies, the nodes of this layer are not exposed. They provide the network with a layer of abstraction.

The hidden layer performs all kinds of computation on the features entered through the input layer and sends the result to the output layer.

Output Layer

This is the network’s final layer. It takes the information learned through the hidden layer and delivers the final value as a result.
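The three layers can be sketched as one forward pass. Everything here is an illustrative assumption: the layer sizes, the weight values, the `dense` helper, and the choice of ReLU for the hidden layer and sigmoid for the output.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One layer: each row of weights plus its bias defines one node."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical parameters: 2 input features -> 2 hidden nodes -> 1 output node.
HIDDEN_W, HIDDEN_B = [[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]

def forward(features):
    # Input layer: no computation, the features are passed on unchanged.
    hidden = dense(features, HIDDEN_W, HIDDEN_B, relu)       # hidden layer
    output = dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)      # output layer
    return output[0]

print(forward([2.0, 1.0]))
```

For the input `[2.0, 1.0]` the hidden nodes produce 1.0 and 0.5, the output node's weighted sum is 0, and the sigmoid turns that into 0.5.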

Backpropagation vs. Feedforward

When studying neural networks, you’ll come across two terms that describe how information moves: feedforward and backpropagation. In feedforward propagation, the activation function is a mathematical “gate” between the input feeding the current neuron and its output going to the next layer.

Simply put, backpropagation aims to minimize the cost function by adjusting the network’s weights and biases. The gradients of the cost function determine how much each parameter, such as a weight or bias, is adjusted, and those gradients depend on the derivative of the activation function.
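As a rough illustration of how the cost gradient drives the updates, here is a sketch of gradient descent on a single sigmoid neuron. The squared-error cost, the learning rate, the training example, and the name `train_step` are all assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_step(w, b, x, target, lr=0.5):
    """One feedforward pass followed by one backpropagation update."""
    # Feedforward: weighted input, then the activation "gate".
    z = w * x + b
    y = sigmoid(z)
    cost = 0.5 * (y - target) ** 2

    # Backpropagation: chain rule from the cost back to each parameter.
    dcost_dy = y - target
    dy_dz = y * (1.0 - y)          # derivative of the sigmoid
    grad_w = dcost_dy * dy_dz * x
    grad_b = dcost_dy * dy_dz

    # Move each parameter against its gradient.
    return w - lr * grad_w, b - lr * grad_b, cost

w, b = 0.0, 0.0
costs = []
for _ in range(200):
    w, b, cost = train_step(w, b, x=1.0, target=1.0)
    costs.append(cost)

print(costs[0], costs[-1])
```

The cost shrinks over the iterations because each update nudges the weight and bias in the direction that lowers it. Note that the sigmoid's derivative appears in every gradient, which is where the activation function enters backpropagation.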

Elements of a Neural Network

Input Layer: This layer accepts the input features. It brings information from the outside world into the network. No computation is performed at this layer; its nodes simply pass the information (features) on to the hidden layer.

Hidden Layer: The nodes of this layer are not exposed to the outside world; they are part of the abstraction provided by any neural network. The hidden layer performs all kinds of computation on the features entered through the input layer and transfers the result to the output layer.

Output Layer: This layer delivers the information learned by the network to the outside world.

What is an activation function and why use one?

The activation function decides whether or not a neuron should be activated by calculating the weighted sum of its inputs and adding a bias to it. The purpose of the activation function is to introduce non-linearity into the output of a neuron.

Explanation: A neural network has neurons that work according to their weights, bias, and activation function. In a neural network, we update the weights and biases of the neurons based on the error at the output. This process is known as backpropagation. Activation functions make backpropagation possible, since their gradients are supplied along with the error to update the weights and biases.

The Extract, Transform, and Load (ETL) process is a common way to move data from one system to another. It is a three-step process that involves extracting data from the source system, transforming it to fit the destination system, and then loading it into the destination system.

The different steps of the ETL process are:

- Extract: The first step is to extract the data from the source system. This can be done using a variety of methods, such as exporting data from a database, copying files from a file system, or extracting data from a web service.

- Transform: The second step is to transform the data to fit the destination system’s data model. This may involve cleaning the data, removing duplicate records, or converting data types.

- Load: The third step is to load the data into the destination system. This can be done using a variety of methods, such as inserting data into a database, copying files to a file system, or loading data into a data lake.

Here is a more detailed explanation of each step:

Extract: The extract step involves identifying the data that needs to be extracted from the source system. This may involve identifying specific tables, fields, or records. Once the data has been identified, it can be extracted using a variety of methods.

Transform: The transform step involves cleaning the data, removing duplicate records, or converting data types. This ensures that the data is in the correct format and that it is ready to be loaded into the destination system.

Load: The load step involves loading the data into the destination system. This may involve inserting data into a database, copying files to a file system, or loading data into a data lake.
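The three steps can be sketched end to end with Python's standard library. This is only an illustration under stated assumptions: the sample CSV text, the `payments` table, the rule of keeping the first record per `id`, and filling a missing amount with 0.0 are all made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data with a duplicate record and a missing value.
RAW_CSV = """id,name,amount
1,alice,10.5
2,bob,7
2,bob,7
3,carol,
"""

def extract(text):
    """Extract: read rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: remove duplicates, convert types, fill missing amounts."""
    seen, cleaned = set(), []
    for row in rows:
        if row["id"] in seen:
            continue  # keep only the first record for each id
        seen.add(row["id"])
        amount = float(row["amount"]) if row["amount"] else 0.0
        cleaned.append((int(row["id"]), row["name"].title(), amount))
    return cleaned

def load(rows, conn):
    """Load: insert the cleaned rows into the destination database."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments "
                 "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT id, name, amount FROM payments ORDER BY id").fetchall()
print(result)
```

Keeping each step in its own function mirrors the description above and makes it possible to swap the source or destination without touching the transformation rules.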

The ETL process can be a complex and time-consuming process. However, it is a valuable process for consolidating, cleaning, and integrating data from multiple sources.

Here are some of the benefits of using ETL:

- Data consolidation: ETL can be used to consolidate data from multiple sources into a single repository. This makes it easier to manage and analyze the data.

- Data cleaning: ETL can be used to clean data, removing duplicate records, correcting errors, and filling in missing values. This ensures that the data is accurate and consistent.

- Data integration: ETL can be used to integrate data from multiple sources into a single data model. This makes it easier to use the data for reporting and decision-making.

Here are some of the challenges of using ETL:

- Data complexity: ETL can be challenging to implement in complex data environments. This is because the data may be in different formats, or it may be stored in different systems.

- Data volume: ETL can be challenging to implement in data environments with large volumes of data. This is because the ETL process may need to be scaled to handle the large volume of data.

- Data latency: ETL can introduce latency into the data pipeline. This is because the ETL process may need to be run periodically, which can delay the availability of the data.

Overall, ETL is a valuable process for consolidating, cleaning, and integrating data from multiple sources. However, it is important to be aware of the challenges of ETL before implementing it in a data environment.

Activation functions are used in neural networks to introduce non-linearity into the model. This is important because it allows the neural network to learn more complex relationships between the input and output data. Without activation functions, the neural network would be a linear model, which would only be able to learn linear relationships.

There are many different activation functions that can be used in neural networks. Some of the most common activation functions include:

- Sigmoid: The sigmoid function is an S-shaped function with outputs between 0 and 1 that is often used in classification problems.

- Tanh: The tanh function is similar to the sigmoid function, but its outputs lie between -1 and 1 and are centered at zero, which can be useful when outputs should be able to take negative values, as in some regression problems.

- ReLU: The ReLU (rectified linear unit) function outputs the input when it is positive and zero otherwise, and is often used in deep learning models.

- Leaky ReLU: The leaky ReLU function is a variant of the ReLU function that has a small slope for negative values.
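The four functions in the list can be written in a few lines each. This is a plain-Python sketch using the standard definitions. The default `negative_slope` of 0.01 is a commonly used value, not one given in this article.

```python
import math

def sigmoid(x):
    """S-shaped curve with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """S-shaped curve with outputs in (-1, 1), centered at zero."""
    return math.tanh(x)

def relu(x):
    """Pass positive inputs through unchanged, output zero otherwise."""
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs keep a small slope instead of zero."""
    return x if x > 0 else negative_slope * x

for value in (-2.0, 0.0, 3.0):
    print(value, sigmoid(value), tanh(value), relu(value), leaky_relu(value))
```

Comparing the outputs for a negative input shows the practical difference: ReLU returns zero, while leaky ReLU returns a small negative number, so its gradient does not vanish there.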

The choice of activation function depends on the specific problem that the neural network is trying to solve. Some factors to consider when choosing an activation function include:

- The range of the output data.

- The complexity of the relationship between the input and output data.

- The computational complexity of the activation function.

Activation functions are an important part of neural networks. They allow neural networks to learn more complex relationships between the input and output data, which makes them more powerful models.