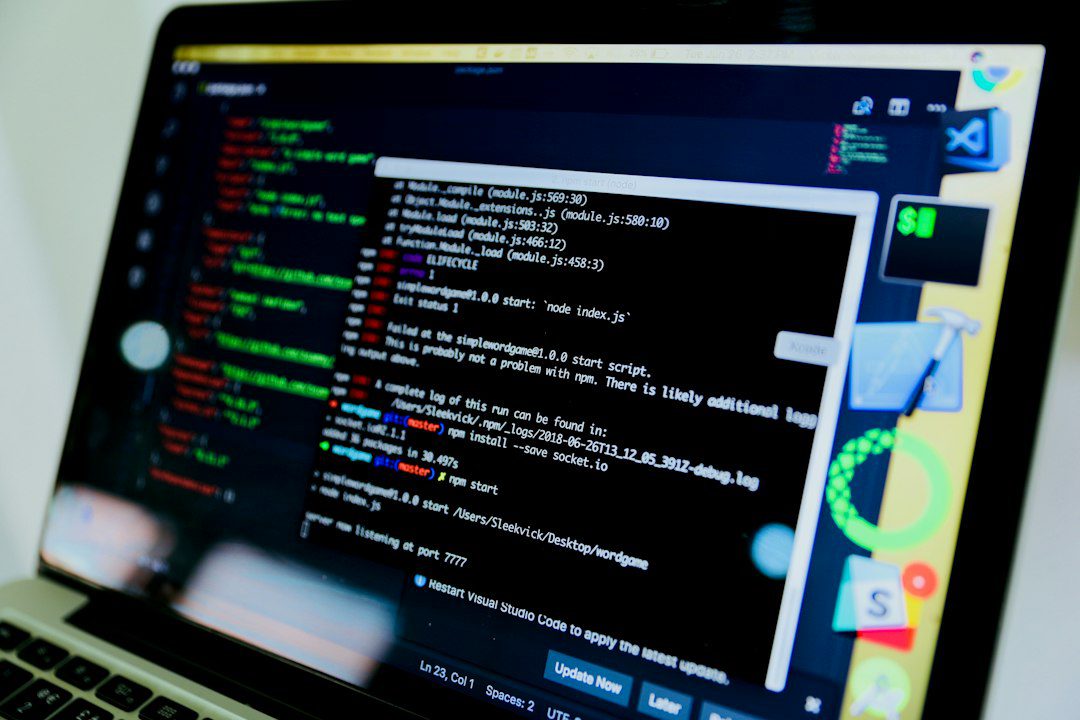

Want to create something great in AI/ML? Let’s talk.

Submit a request through our contact form, and our team will get in touch with you soon.

24x7offshoring is a one-stop solution for multinational organizations around the world, with experience on over 100 medium- to large-scale projects across five continents.
