This article introduces the types of image annotation, their application scenarios, and the advantages and disadvantages of each type.

“Without data analytics, companies are blind and deaf, roaming the web like deer on the highway.”

— Geoffrey Moore

Every data science task requires data, specifically clean, understandable data that can be fed into the system. When it comes to images, computers need to see what human eyes see.

For example, humans can recognize and classify objects. Computer vision aims to give machines the same ability to interpret the visual data they receive. This is where image annotation comes in.

Image annotation plays a vital role in computer vision. Its goal is to provide task-specific labels. These might include text-based labels (classes), labels drawn on images (e.g. bounding boxes), or even pixel-level labels. We explore this range of annotation techniques below.

AI requires more human intervention than we think. To prepare high-precision training data, we must annotate images correctly. Data annotation often requires a high level of domain knowledge that only experts in the field can provide.

Computer vision tasks that require annotation:

Object Detection

Line/Edge Detection

Pose Prediction/Keypoint Recognition

Segmentation

Image Classification

1) Object detection

There are two main techniques for object detection, namely 2D and 3D bounding boxes.

For irregularly shaped objects, the polygon method can be used. Let’s discuss each in detail.

2D bounding box

In this method, the annotator only needs to draw a rectangular box around the object. The box defines the position of the object in the image. It can be specified by the x, y coordinates of the upper-left corner of the rectangle and the x, y coordinates of the lower-right corner.
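
As a minimal sketch of the two-corner representation described above, the following Python class stores a box by its upper-left and lower-right corners and derives width, height, and area from them. The class name `BoundingBox2D` and the `to_xywh` helper are hypothetical names chosen for illustration, not part of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox2D:
    """Axis-aligned box stored as upper-left and lower-right pixel corners."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self):
        # Image coordinates grow to the right and downward, so the
        # lower-right corner must not come before the upper-left one.
        if self.x_max < self.x_min or self.y_max < self.y_min:
            raise ValueError("lower-right corner precedes upper-left corner")

    @property
    def width(self):
        return self.x_max - self.x_min

    @property
    def height(self):
        return self.y_max - self.y_min

    @property
    def area(self):
        return self.width * self.height

    def to_xywh(self):
        """Return the same box as (x, y, width, height)."""
        return (self.x_min, self.y_min, self.width, self.height)


box = BoundingBox2D(x_min=40, y_min=30, x_max=200, y_max=150)
print(box.to_xywh())  # (40, 30, 160, 120)
print(box.area)       # 19200
```

Some datasets store boxes as corner pairs and others as (x, y, width, height), so a conversion helper like this is often useful when mixing sources.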

Pros and Cons :

Labeling is quick and easy.

Cannot capture important information such as the orientation of the object, which is crucial for many applications.

Includes background pixels that are not part of the object. This may affect training.

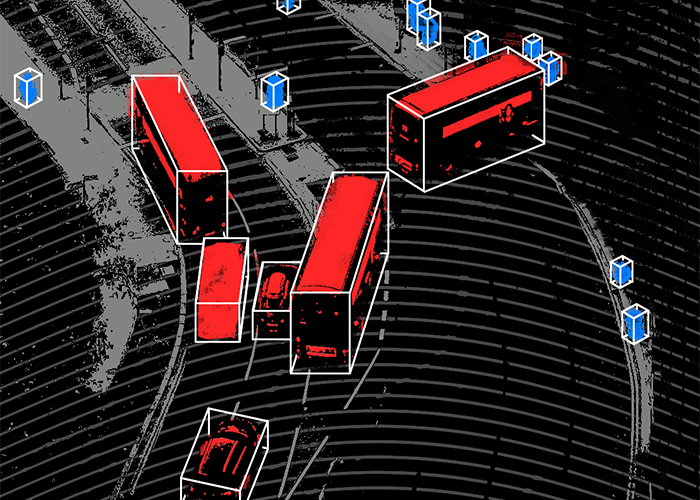

3D bounding box or cuboid

Similar to 2D bounding boxes, except that they also show the depth of the target. This annotation is achieved by back-projecting a bounding box on the 2D image plane to a 3D cuboid. It allows the system to distinguish features such as volume and position in three-dimensional space.
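
To make the idea of a cuboid annotation more concrete, here is a rough sketch that computes the eight corners of a 3D box. It assumes one common parameterization (a center point, length/width/height, and a yaw rotation about the vertical axis); this is an illustrative assumption, not the back-projection procedure itself, and the function name `cuboid_corners` is hypothetical.

```python
import math


def cuboid_corners(center, size, yaw):
    """Return the 8 corners of a cuboid.

    center: (x, y, z) of the cuboid's center
    size:   (length, width, height) along the box's own x, y, z axes
    yaw:    rotation in radians about the vertical (z) axis
    """
    cx, cy, cz = center
    length, width, height = size
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)

    corners = []
    for dx in (-0.5, 0.5):
        for dy in (-0.5, 0.5):
            for dz in (-0.5, 0.5):
                # Corner offset in the box's local frame.
                lx, ly, lz = dx * length, dy * width, dz * height
                # Rotate around z, then translate to the center.
                x = cx + lx * cos_y - ly * sin_y
                y = cy + lx * sin_y + ly * cos_y
                z = cz + lz
                corners.append((x, y, z))
    return corners


corners = cuboid_corners(center=(0.0, 0.0, 1.0), size=(4.0, 2.0, 2.0), yaw=0.0)
print(len(corners))  # 8
```

The yaw angle is what carries the orientation information that a 2D box cannot express.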

Pros and Cons :

Solves the object-orientation problem of 2D bounding boxes.

When the object is occluded, the annotator has to estimate the dimensions of the bounding box, which may affect training.

Such annotations also include background pixels, which may affect training.

Polygon

Occasionally, irregularly shaped objects must be annotated. In this case, polygons are used. By marking points along the edges of the object, the annotator obtains a close outline of the object to be detected.

Pros and Cons :

The main advantage of polygon annotation is that it eliminates background pixels and captures the exact shape of the object.

It is very time-consuming, and objects with complex shapes are difficult to label.

NOTE : The polygon method is also used for object shape segmentation. We’ll discuss segmentation below.
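
The claim that polygons remove background pixels can be illustrated with a small sketch. It computes the area of a polygon with the shoelace formula and compares it with the area of the enclosing axis-aligned box. The function names and the triangle used as sample data are hypothetical.

```python
def polygon_area(vertices):
    """Area of a simple polygon given as [(x, y), ...] (shoelace formula)."""
    twice_area = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to the first vertex
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def enclosing_box_area(vertices):
    """Area of the smallest axis-aligned box containing all vertices."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


triangle = [(0, 0), (10, 0), (0, 10)]
poly = polygon_area(triangle)
box = enclosing_box_area(triangle)
background_fraction = 1 - poly / box
print(poly, box, background_fraction)  # 50.0 100 0.5
```

For this triangle, half of a bounding-box annotation would be background, which the polygon annotation avoids.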

Data acquisition is an ML cold start problem. But even when you have a working dataset, building and testing models can be tricky.

2) Line/Edge Detection (Line and Spline)

Lines and splines are useful for demarcating boundaries. The pixels that distinguish one region from another are labeled.

Pros and Cons :

The advantage of this approach is that the pixels on the line do not need to be all contiguous. This is useful for detecting broken lines or partially occluded objects.

Manually labeling lines in an image is very tiring and time-consuming, especially when there are many lines in the image.

This can give misleading results when objects happen to be aligned.

3) Pose prediction / keypoint recognition

In many computer vision applications, neural networks are often required to identify important points of interest in an input image. We call these points landmarks or keypoints. In this application, we want the neural network to output the coordinates (x, y) of the key points.
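
A keypoint annotation can be sketched as a mapping from a landmark name to its (x, y) pixel coordinates. The example below also scales the coordinates to the range [0, 1], which is one common way (an assumption here, not something the text prescribes) to prepare them as regression targets. The landmark names and image size are hypothetical.

```python
def normalize_keypoints(keypoints, image_width, image_height):
    """Scale pixel coordinates to [0, 1] relative to the image size."""
    return {
        name: (x / image_width, y / image_height)
        for name, (x, y) in keypoints.items()
    }


# Hypothetical facial landmarks annotated on a 320x240 image.
face = {
    "left_eye": (120, 80),
    "right_eye": (200, 80),
    "nose": (160, 120),
}

targets = normalize_keypoints(face, image_width=320, image_height=240)
print(targets["nose"])  # (0.5, 0.5)
```

Normalized coordinates stay valid when the image is resized, which keeps annotations independent of the input resolution.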

4) Segmentation

Image segmentation is the process of dividing an image into multiple parts. Image segmentation is commonly used to localize objects and boundaries in images at the pixel level. There are many methods of image segmentation.

Semantic Segmentation : Semantic segmentation is a machine learning task that requires pixel-level annotation, where each pixel in an image is assigned to a class. Every pixel carries semantic meaning. This is mainly used in situations where the context of the environment is very important.

Instance Segmentation : Instance segmentation is a subtype of image segmentation that identifies each instance of each object in an image at the pixel level. Instance segmentation and semantic segmentation are two levels of granularity in image segmentation.

Panoptic Segmentation : Panoptic segmentation combines semantic and instance segmentation, where all pixels are assigned a class label and all object instances are uniquely segmented.
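
The difference between the three kinds of masks can be sketched on a tiny image. In this illustration, a semantic mask holds one class id per pixel, an instance mask holds one object id per pixel, and a panoptic mask pairs the two. The class ids (0 = background, 1 = car) and the helper names are hypothetical.

```python
# Semantic mask: one class id per pixel (0 = background, 1 = car).
semantic = [
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
]

# Instance mask: one object id per pixel (0 = no object).
# The two cars share a class but get different instance ids.
instance = [
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0, 0],
]


def to_panoptic(semantic_mask, instance_mask):
    """Pair each pixel's class id with its instance id."""
    return [
        [(cls, inst) for cls, inst in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic_mask, instance_mask)
    ]


def count_instances(panoptic_mask, class_id):
    """Number of distinct objects of the given class."""
    return len({inst for row in panoptic_mask
                for cls, inst in row if cls == class_id})


panoptic = to_panoptic(semantic, instance)
car_pixels = sum(row.count(1) for row in semantic)
print(car_pixels)                    # 8
print(count_instances(panoptic, 1))  # 2
```

The semantic mask alone can say that 8 pixels are "car", but only the instance or panoptic mask can say that they belong to two separate cars.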

5) Image classification

https://jwpblog.files.wordpress.com/2018/10/new-doc-2018-10-15-09-35-00_1.jpg?w=768

Image classification is different from object detection. The purpose of object detection is to identify and locate objects, while the purpose of image classification is only to identify which class an image belongs to. A common example of this use case is classifying pictures of cats and dogs. The annotator must assign the class label “dog” to an image of a dog and “cat” to an image of a cat.

Use Cases for Image Annotation

In this section, we discuss how image annotation can help machine learning models perform industry-specific tasks:

Retail: 2D bounding boxes can be used to annotate images of products, which can then be used by machine learning algorithms to predict cost and other attributes. Image classification can also help here.

Medicine : Polygons can be used to label organs in medical X-rays so that deep learning models can be trained to detect deformities or defects. This is one of the most important applications of image annotation, and it requires the domain knowledge of medical experts.

Self-Driving Cars : This is another important area where image annotation can be applied. Labeling every pixel in an image with semantic segmentation lets the vehicle's perception model learn to recognize obstacles on the road. Research in this area is still ongoing.

Emotion Detection : Landmarks (keypoints) can be used to detect a person’s emotion (happy, sad, or neutral). This can be applied to assess subjects’ emotional responses to specific content.

Manufacturing industry : Lines and splines can be used to annotate images of factory floors so that line-following robots can do their work. This can help automate the production process and minimize human labor.

Some Challenges of Image Annotation

Time complexity : Manually labeling images takes a lot of time. Machine learning requires large datasets, so labeling image-based datasets effectively is very time-consuming.

Computational complexity : Machine learning requires precisely labeled data to train models. If the annotator introduces any kind of error when annotating the images, it may affect training, and all the effort may go to waste.

Domain Knowledge : As mentioned earlier, image annotation often requires a high level of domain knowledge. We therefore need annotators who know what to annotate and who are experts in the field.