What considerations are taken into account for the best longitudinal data collection?

Data Collection

Data collection is a systematic process of gathering observations or measurements. Whether you are performing research for business, governmental or academic purposes, data collection allows you to gain first-hand knowledge and original insights into your research problem.

While methods and aims may differ between fields, the overall process of data collection remains largely the same. Before you begin collecting data, you need to consider:

- The aim of the research

- The type of data that you will collect

- The methods and procedures you will use to collect, store, and process the data

To collect high-quality data that is relevant to your purposes, follow these four steps.

LONGITUDINAL STUDIES: CONCEPT AND PARTICULARITIES

WHAT IS A LONGITUDINAL STUDY?

The discussion about the meaning of the term longitudinal was summarized by Chin in 1989: for epidemiologists it is synonymous with a cohort or follow-up study, while for some statisticians it implies repeated measurements. He himself decided not to define the term longitudinal, as it was difficult to find a concept acceptable to everyone, and chose to consider it equivalent to “monitoring”, the most common understanding among professionals of the time.

The longitudinal study in epidemiology

In the 1980s it was very common to use the term longitudinal simply to separate cause from effect, as opposed to the term transversal (cross-sectional). Miettinen defines it as a study whose basis is the experience of the population over time (as opposed to a cross-section of the population). Consistent with this idea, Rothman, in his 1986 text, indicates that the word longitudinal denotes the existence of a time interval between exposure and the onset of the disease. Under this meaning, the case-control study, which is a sampling strategy to represent the experience of the population over time (especially under Miettinen’s ideas), would also be a longitudinal study.

Abramson agrees with this idea and also differentiates longitudinal descriptive studies (studies of change) from longitudinal analytical studies, which include case-control studies. Kleinbaum et al. likewise define the term longitudinal as opposed to transversal but, with a somewhat different nuance, speak of the “longitudinal experience” of a population (versus the “transversal experience”), which for them implies at least two series of observations over a follow-up period. The latter authors exclude case-control studies. Kahn and Sempos do not have a heading for these studies either, and in their keyword index the entry “longitudinal study” reads “see prospective study.”

This is reflected in the Dictionary of Epidemiology directed by Last, which considers the term “longitudinal study” as synonymous with cohort study or follow-up study. In Breslow and Day’s classic text on cohort studies, the term longitudinal is considered equivalent to cohort and is used interchangeably. However, Cook and Ware defined the longitudinal study as one in which the same individual is observed on more than one occasion and differentiated it from follow-up studies, in which individuals are followed until the occurrence of an event such as death or illness (although this event is already the second observation).

Since 1990, several texts consider the term longitudinal equivalent to other names, although most omit it. A reflection of this is the book co-edited by Rothman and Greenland, which has no specific section for longitudinal studies within the chapters dedicated to design. The Encyclopedia of Epidemiological Methods follows the same trend and does not offer a specific entry for this type of study.

The fourth edition of Last’s Dictionary of Epidemiology reproduces his entry from previous editions. Gordis considers it synonymous with a concurrent prospective cohort study. Aday partially follows Abramson’s ideas, already mentioned, and differentiates descriptive studies (several cross-sectional studies sequenced over time) from analytical ones, among which are prospective or longitudinal cohort studies.

In other fields of clinical medicine, the longitudinal sense is considered opposite to the transversal and is equated with cohort, often prospective. This is confirmed, for example, in publications focused on the field of menopause.

The longitudinal study in statistics

Here the ideas are much clearer: a longitudinal study is one that involves more than two measurements throughout a follow-up. There must be more than two, since every cohort study already has two measurements, one at the beginning and one at the end of follow-up. This is the concept found in the text by Goldstein from 1979. In that same year Rosner was explicit in indicating that longitudinal data imply repeated measurements on subjects over time, and proposed a new analysis procedure for this type of data. Since that time, articles in statistics journals and texts have been consistent with the same concept.

Two reference works in epidemiology, although they do not define longitudinal studies in the corresponding section, coincide with the prevailing statistical notion. In the book co-edited by Rothman and Greenland, in the chapter Introduction to regression modeling, Greenland himself states that longitudinal data are repeated measurements on subjects over a period of time and that they can be taken for time-dependent exposures (e.g., smoking, alcohol consumption, diet, or blood pressure) or recurrent outcomes (e.g., pain, allergy, or depression).

In the Encyclopedia of Epidemiological Methods, the “sample size” entry includes a “longitudinal studies” section that provides the same information provided by Greenland.

It is worth clarifying that the statistical view of a “longitudinal study” is based on a particular data analysis (taking repeated measures into account) and that the same would be applicable to intervention studies, which also have follow-up.

To conclude this section, in the monographic issue of Epidemiologic Reviews dedicated to cohort studies, Tager, in his article focused on the outcome variable of cohort studies, broadly classifies cohort studies into two large groups, “life table” and “longitudinal”, clarifying that this classification is somewhat “artificial”. The first are the conventional ones, in which the result is a discrete variable, the exposure and the population-time are summarized, incidences are estimated and the main measure is the relative risk.

The latter incorporate a different analysis that takes advantage of repeated measurements in subjects over time, allowing inference at the individual level, in addition to the population level, about changes in a process over time or about transitions between different states of health and illness.

The previous ideas denote that in epidemiology there is a tendency to avoid the concept of longitudinal study. However, summarizing the ideas discussed above, the notion of longitudinal study refers to a cohort study in which more than two measurements are made over time and in which an analysis is carried out that takes into account the different measurements. The three key elements are: follow-up (monitoring), more than two measurements, and an analysis that takes them into account. This can be done prospectively or retrospectively, and the study can be observational or interventional.

PARTICULARITIES OF LONGITUDINAL STUDIES

When measuring over time, quality control plays an essential role. It must be ensured that all measurements are carried out in a timely manner and with standardized techniques. The long duration of some studies requires special attention to changes in personnel, deterioration of equipment, changes in technologies, and inconsistencies in participant responses over time.

There is a greater probability of dropout during follow-up. The factors involved in this are several:

* The definition of a population according to an unstable criterion. For example, living in a specific geographic area may cause participants with changes of address to be ineligible in later phases.

* Dropout will be greater when responders who could not be contacted on one occasion are not pursued again in subsequent phases of the follow-up.

* The object of the study also has an influence. For example, in a political science study, those not interested in politics will drop out more often.

* The amount of personal attention devoted to responders. Telephone and letter interviews are less personal than those conducted face to face and do not help strengthen ties with the study.

* The time invested by the responder in satisfying the researchers’ demand for information. The greater it is, the more frequent the dropouts.

* The frequency of contact can also play a role, although not everyone agrees. Some studies have documented that an excess of contacts impairs follow-up, while others have found either no relationship or a negative one.

To avoid dropouts, it is advisable to establish strategies to retain and track participants. Their willingness to participate should be assessed at the beginning, and what is expected of them should be made clear. Bridges must be built with the participants by sending congratulatory letters, study updates, etc.

Contact must be made at regular intervals. Study staff must be enthusiastic, easy to communicate with, quick and appropriate in responding to participants’ problems, and adaptable to their needs. Incentives that motivate participants to continue in the study should not be dismissed.
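Whether retention efforts are working can be checked by tracking response at every phase. The following is a minimal sketch under stated assumptions: the participant identifiers, the response patterns, and the function names `retention_by_wave` and `classify_pattern` are all hypothetical, and each participant is assumed to have one True/False response flag per wave.

```python
from typing import Dict, List

# Hypothetical response patterns: True means the participant was measured at that wave.
responses: Dict[str, List[bool]] = {
    "p01": [True, True, True, True],
    "p02": [True, True, False, False],
    "p03": [True, False, True, True],
    "p04": [True, True, True, False],
    "p05": [True, False, False, False],
}


def retention_by_wave(patterns: Dict[str, List[bool]]) -> List[float]:
    """Share of enrolled participants who responded at each wave."""
    n_participants = len(patterns)
    n_waves = len(next(iter(patterns.values())))
    return [
        sum(p[w] for p in patterns.values()) / n_participants
        for w in range(n_waves)
    ]


def classify_pattern(pattern: List[bool]) -> str:
    """Label a pattern as complete, dropout (never returns), or intermittent."""
    if all(pattern):
        return "complete"
    if not any(pattern):
        return "dropout"
    last_seen = max(i for i, seen in enumerate(pattern) if seen)
    # Dropout: every wave up to the last response was observed, then nothing.
    if all(pattern[: last_seen + 1]):
        return "dropout"
    return "intermittent"


retention = retention_by_wave(responses)
labels = {pid: classify_pattern(p) for pid, p in responses.items()}
print(retention)
print(labels)
```

Separating participants who never return from those who miss a wave and come back is useful because the two situations are usually handled differently: the first is loss to follow-up, the second is a missing-data problem.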

Thirdly, another major problem compared to other cohort studies is the existence of missing data. Requiring every participant to have all measurements can produce a problem similar to dropout during follow-up. For this purpose, techniques for imputation of missing values have been developed and, although it has been suggested that they may not be necessary if generalized estimating equations (GEE analysis) are applied, it has been shown that other procedures give better results, even when the losses are completely random.

Frequently, information losses are differential, and more measurements are lost in patients with a worse level of health. In these cases it is recommended that imputation take into account the existing data of the individual whose measurements are missing.
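As a rough illustration of using an individual's own data, the sketch below fills gaps in one subject's series from that subject's observed values only. It is a simple single imputation by linear interpolation, not one of the specific procedures the text alludes to; the function name `impute_within_subject`, the equally spaced visits, and the blood pressure readings are assumptions made for the example.

```python
from typing import List, Optional


def impute_within_subject(values: List[Optional[float]]) -> List[Optional[float]]:
    """Fill gaps in one subject's series using only that subject's observed values.

    Interior gaps are linearly interpolated between the nearest observed
    neighbours; leading and trailing gaps copy the nearest observed value.
    A series with no observed values is returned unchanged.
    """
    observed = [i for i, v in enumerate(values) if v is not None]
    if not observed:
        return list(values)
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        before = [j for j in observed if j < i]
        after = [j for j in observed if j > i]
        if before and after:
            lo, hi = before[-1], after[0]
            weight = (i - lo) / (hi - lo)  # assumes equally spaced visits
            filled[i] = values[lo] + weight * (values[hi] - values[lo])
        elif before:
            filled[i] = values[before[-1]]  # carry the last observation forward
        else:
            filled[i] = values[after[0]]  # carry the first observation backward
    return filled


# Hypothetical systolic blood pressure readings over five visits.
series = [120.0, None, 130.0, None, None]
imputed = impute_within_subject(series)
print(imputed)  # [120.0, 125.0, 130.0, 130.0, 130.0]
```

A single filled-in value understates uncertainty, so in practice this kind of rule is at most a starting point before a formal imputation method is chosen.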

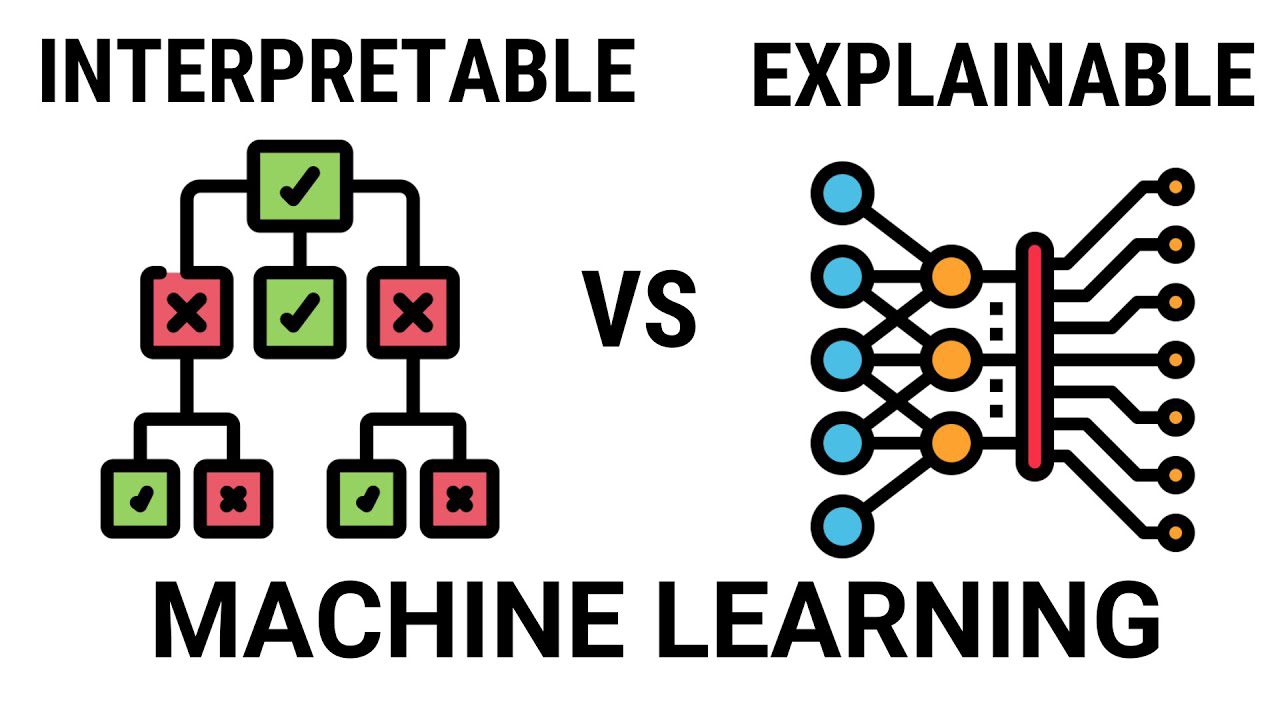

Analysis

In the analysis of longitudinal studies it is possible to handle time-dependent covariates that can both influence the exposure under study and be influenced by it (variables that behave simultaneously as confounders and as intermediates between exposure and effect). In a similar way, it allows control of recurrent outcomes that can act on the exposure and be caused by it (they behave both as confounders and as effects).

Longitudinal analysis can be used when there are measurements of the effect and/or exposure at different moments in time. Suppose that a dependent variable Y is a function of a time-varying variable x and a stable variable z, expressed by the following equation:

$$Y_{it} = b\,x_{it} + a\,z_{i} + e_{it}$$

where the subscript i refers to the individual, t to the moment in time, and e is an error term (z has a single subscript because it is stable and does not change over time). The existence of several measurements allows us to estimate the coefficient b without needing to know the value of the stable variable, by regressing the difference in the effect (Y) on the difference in the values of the independent variable:

$$Y_{it} - Y_{i1} = b\,(x_{it} - x_{i1}) + a\,(z_{i} - z_{i}) + e_{it} - e_{i1} = b\,(x_{it} - x_{i1}) + e_{it} - e_{i1}$$

That is, it is not necessary to know the value of the time-independent (or stable) variables. This is an advantage over other analyses, in which these variables must be known. The above model is easily generalizable to a multivariate vector of factors changing over time.
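The differencing argument can be checked numerically. The sketch below simulates two measurements per individual from the model above and estimates b from the differences alone; the coefficient values, the sample size, the noise levels, and the way x is made to depend on z are all assumptions chosen for illustration.

```python
import random

random.seed(0)

TRUE_B = 2.0  # effect of the time-varying variable x
TRUE_A = 5.0  # effect of the stable variable z (never used in the estimation)
N = 500       # number of simulated individuals

x1s, y1s, dx, dy = [], [], [], []
for _ in range(N):
    z = random.gauss(0, 1)        # stable variable, treated as unknown to the analyst
    x1 = z + random.gauss(0, 1)   # x is correlated with z, so z confounds x
    x2 = z + random.gauss(0, 1)
    y1 = TRUE_B * x1 + TRUE_A * z + random.gauss(0, 0.5)
    y2 = TRUE_B * x2 + TRUE_A * z + random.gauss(0, 0.5)
    x1s.append(x1)
    y1s.append(y1)
    dx.append(x2 - x1)
    dy.append(y2 - y1)

# Regression of the differences (no intercept: the differenced model has none).
b_diff = sum(u * v for u, v in zip(dx, dy)) / sum(u * u for u in dx)

# Naive regression of Y on x at the first measurement only, ignoring z.
mean_x = sum(x1s) / N
mean_y = sum(y1s) / N
b_naive = sum((x - mean_x) * (y - mean_y) for x, y in zip(x1s, y1s)) / sum(
    (x - mean_x) ** 2 for x in x1s
)

print(f"difference estimate of b: {b_diff:.2f}")
print(f"naive single-wave estimate of b: {b_naive:.2f}")
```

Under these assumptions the difference estimate should land close to the true value of 2, while the single-wave estimate is pulled upward because z is left out.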

The longitudinal analysis is carried out within the context of generalized linear models and has two objectives: to adopt conventional regression tools, in which the effect is related to the different exposures, and to take into account the correlation between the repeated measurements of each subject. This last aspect is very important. Suppose you analyze the effect of growth on blood pressure: the blood pressure values of a subject in the different tests performed depend on the initial or basal value, and this dependence must therefore be taken into account.

For example, longitudinal analysis could be performed in a childhood cohort in which vitamin A deficiency (which can change over time) is assessed as the main exposure for the risk of infection (which can occur several times over the follow-up), controlling for the influence of age, weight and height (time-dependent variables). The longitudinal analysis can be classified into three large groups.

a) Marginal models combine the different measurements (which are slices in time) of the prevalence of the exposure to obtain an average prevalence or other summary measure of the exposure over time, and relate it to the frequency of the disease. The longitudinal element is age or duration of follow-up in the regression analysis. The coefficients of this type of model are transformed into a population prevalence ratio; in the example of vitamin A and infection it would be the prevalence of infection in children with vitamin A deficiency divided by the prevalence of infection in children without vitamin A deficiency.

b) Transition models regress the present result on past values and on past and present exposures. Markov models are an example. The model coefficients are directly transformed into a ratio of incidences, that is, into relative risks (RRs); in the example it would be the RR of infection associated with vitamin A deficiency.

c) Random effects models allow each individual to have unique regression parameters, and there are procedures for standardized results, binary data, and person-time data. The model coefficients are transformed into an odds ratio referring to the individual, which is assumed to be constant throughout the population; in the example it would be the odds of infection in a child with vitamin A deficiency versus the odds of infection in the same child without vitamin A deficiency.
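To make the transition idea concrete, the sketch below computes a crude incidence ratio from visit-to-visit transitions in the vitamin A example. It is a simple stratified tabulation rather than a fitted regression or Markov model, and the records, the `Visit` layout, and the function name `transition_risks` are hypothetical.

```python
from typing import List, Tuple

Visit = Tuple[bool, bool]  # (vitamin A deficient at visit, infected at visit)

# Hypothetical follow-up records: one list of consecutive visits per child.
children: List[List[Visit]] = [
    [(True, False), (True, True), (False, False)],
    [(False, False), (False, False), (True, True)],
    [(True, False), (True, False), (True, True)],
    [(False, False), (False, True), (False, False)],
    [(False, False), (False, False), (False, False)],
    [(True, False), (True, True), (True, True)],
]


def transition_risks(records: List[List[Visit]]) -> Tuple[float, float]:
    """Risk of infection at a visit, by current deficiency status, among
    children who were not infected at the previous visit."""
    cases = {True: 0, False: 0}
    at_risk = {True: 0, False: 0}
    for visits in records:
        for previous, current in zip(visits, visits[1:]):
            if previous[1]:
                continue  # infected at the previous visit, so not at risk of a new onset
            deficient, infected = current
            at_risk[deficient] += 1
            cases[deficient] += int(infected)
    # Sketch only: assumes both exposure groups contain at least one at-risk visit.
    return cases[True] / at_risk[True], cases[False] / at_risk[False]


risk_deficient, risk_not_deficient = transition_risks(children)
relative_risk = risk_deficient / risk_not_deficient
print(f"risk if deficient: {risk_deficient:.2f}")          # 0.80
print(f"risk if not deficient: {risk_not_deficient:.2f}")  # 0.25
print(f"relative risk: {relative_risk:.2f}")               # 3.20
```

Conditioning on the previous visit is what distinguishes this from a marginal summary: each transition counts only children who could still develop a new infection.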

Linear, logistic, and Poisson models, and many survival analyses, can be considered particular cases of generalized linear models. There are procedures that accommodate late entries, as well as observations made at different and unequally spaced times, in the follow-up of a cohort.

In addition to the parametric models indicated in the previous paragraph, analysis using non-parametric methods is possible; for example, the use of functional analysis with splines has recently been reviewed.

Several specific texts on longitudinal data analysis have been mentioned. One of them even offers examples of the routines needed to carry out the analysis correctly in different conventional statistical packages (Stata, SAS, SPSS).