Interpretable Differencing for Machine Learning Models

At various stages of the Machine Learning model lifecycle, data scientists make decisions regarding which model to use. For instance, they may choose from a range of pre-built a Machine Learning models, select from a list of candidate models generated from automated tools like Auto Machine Learning, or simply update a model based on new training data to incorporate distributional changes. In these settings, the choice of a model is preceded by an evaluation that typically focuses on accuracy and other metrics, instead of how it differs from other Machine Learning models.

We address the problem of Machine Learning model differencing. Given two models for the same task and a dataset, we seek to learn where in the feature space the models’ predicted outcomes differ. Our objective is to provide accurate and interpretable mechanisms to uncover these differences. The comparison is helpful in several scenarios. In a Machine Learning model marketplace, multiple pre-built models for the same task need to be compared.

The Machine Learning models usually are black-box and possibly trained on different sets of data drawn from the same distribution. During model selection, a data scientist trains multiple models and needs to select one model for deployment. In this setting, the Machine Learning models are white-box and typically trained on the same training data.

For model change, where a model is retrained with updated training data with a goal towards model improvement, the data scientist needs to understand changes in the model beyond accuracy metrics. Finally, decision pipelines consisting of logic and Machine Learning models occur in business contexts where a combination of business logic and the output of Machine Learning models work together for a final output. Changes might occur either due to model retraining or adjustments in business logic which can impact the behavior of the overall pipeline. In this work we address the problem of interpretable model differencing as follows.

First, we formulate the problem as one of predicting the values of a dissimilarity function of

the two Machine Learning models’ outputs. We focus herein on 0-1 dissimilarity for two classifiers, where 0 means “same output” and 1 means “different”, so that prediction quality can be quantified by any binary classification metric such as precision and recall.

Second, we propose a method that learns a Joint Surrogate Tree (JST), composed of two conjoined decision tree surrogates to jointly approximate the two Machine Learning models. The root and lower branches of the conjoined decision trees are common to both models, while higher branches (farther from root) may be specific to one model. A JST thus accomplishes two tasks at once: it provides interpretable surrogates for the two models while also aligning the surrogates for easier comparison and identification of differences.

These aspects are encapsulated in a visualization of JSTs that we present. Third, a refinement procedure is used to grow the surrogates in selected regions, improving the precision of the dissimilarity prediction. Our design of jointly learning surrogates is motivated by the need to place model differences in the context of the overall decision logic.

This can aid users who may already have a mental Machine Learning model of (individual) AI systems, either for debugging [Kulesza et al., 2012] or to understand errors [Bansal et al., 2019]. The main contributions of the paper are (a) a quantitative formulation of the problem of model differencing, and (b) algorithms to learn and refine conjoined decision tree surrogates to approximate two models simultaneously.

A detailed evaluation of the method is presented on several benchmark datasets, showing more accurate or more concise representation of Machine Learning model differences, compared to baselines.

Related Works to Machine Learning

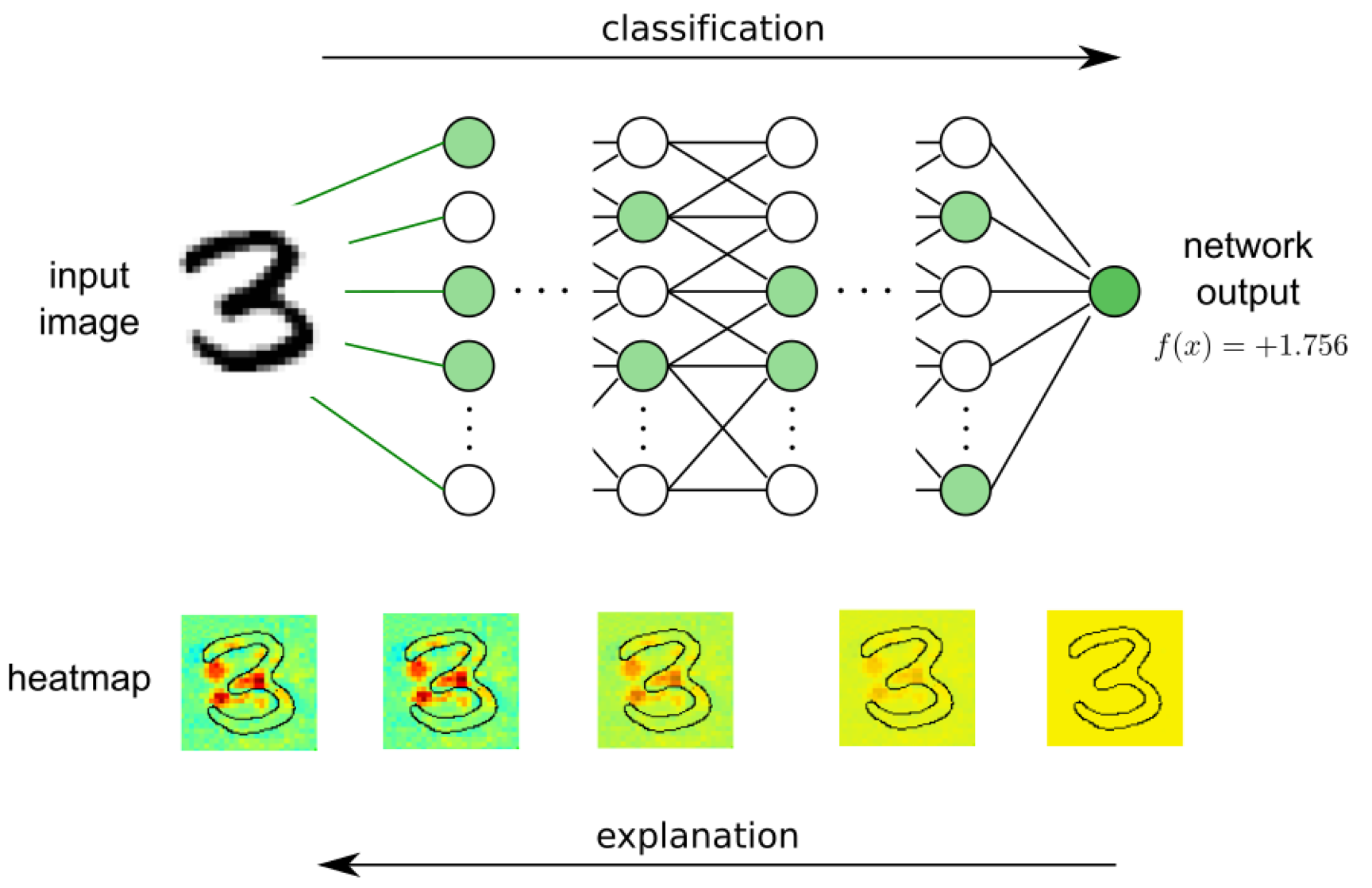

Our work touches upon several active areas of research which we summarize based on key pertinent themes. Surrogate Machine Learning models and model refinement One mechanism to lend interpretability to machine learning models is through surrogates, i.e., simpler human-readable models that mimic a complex model.

Most relevant to this paper are works that use a decision tree as the surrogate. Bastani et al. [2017] showed that interpretable surrogate decision trees extracted from a blackbox Machine Learning model allowed users to predict the same outcome as the original Machine Learning model. Freitas [2014] also discusses interpretability and usefulness of using decision trees as surrogates. None of these works however have considered jointly approximating two black-box Machine Learning models.

Decision tree generation with additional objectives Chen et al. [2019] showed that decision tree generation is not robust and slight changes in the root node can result in a very different tree structure. Chen et al. [2019], Andriushchenko and Hein [2019] focus on improving robustness when generating the decision tree while Moshkovitz et al. [2021] prioritises both robustness and interpretability.

Aghaei et al. [2019] use mixed-integer optimization to take fairness into account in the decision tree generation. However, none of these solutions consider the task of comparing

two decision trees. Predicting disagreement or shift Prior work has focused on identifying statistically whether models have significantly changed [Bu et al., 2019, Geng et al., 2019, Harel

et al., 2014], but not on where they have changed. Cito et al. [2021] present a model-agnostic rule-induction algorithm to produce interpretable rules capturing instances that are

mispredicted with respect to their ground truth.

Comparing Machine Learning models The “distill-and-compare” approach of Tan et al. [2018] uses generalized additive models (GAMs) and fits one GAM to a black-box model and a second GAM to ground truth outcomes. While differences between the GAMs are studied to uncover insights, there is only one black-box model. Demšar and Bosnic [2018] ´ study concept drift by determining feature contributions to a model and observing the changes in contributions over time. Similarly, Duckworth et al. [2021] investigated changes in feature importance rankings pre- and post-COVID. This approach however does not localize changes to regions of the feature space.

Chouldechova and G’Sell [2017] compare Machine Learning models in terms of fairness metrics and identify groups in the data where two models have maximum disparity. Prior work by Nair et al. [2021], which is most similar to our own, uses rule-based surrogates for two models and derives rules for where the models behave similarly. Their method biases the learning of the second surrogate based on inputs from the first model, a step they call grounding, and imposes a one-to-one mapping between rules in the two surrogates.

This is a strict condition that may not hold in practice. Additionally, their method does not evaluate the accuracy of resulting rules in predicting model similarities or differences.

Our approach addresses these limitations.

PROPOSED ALGORITHM

We propose a technique called IMD, which shows the differences between two Machine Learning models by constructing a novel representation called a Joint Surrogate Tree or JST. A JST is composed of two conjoined decision tree surrogates that jointly approximate the two models, intuitively capturing similarities and differences between them.

It overcomes the drawbacks of the direct and separate surrogate approaches mentioned in Section 3: it avoids the non-smoothness of direct difference modelling, aligns and promotes similarity between surrogates for the two models, and shows differences in the context of each model’s decision logic. Our method has a single hyperparameter, tree depth, which controls the trade-off between accuracy and interpretability.

IMD performs two steps as shown in Figure 1. In the first step, IMD builds a JST for models M1, M2 using data samples X, and then extracts diff regions from the JST. Interpretability is ensured by restricting the height of the JST. The IMD algorithm treats M1, M2 as black boxes and can handle any pair of classification models. It is also easy to implement as it requires a simple modification to popular greedy decision tree algorithms.

The second (optional) step, discussed at the end of Section 4.1, refines the JST by identifying diff regions where the two decision tree surrogates within the JST differ but the original models do not agree with the surrogates on their predictions. The refinement process aims to increase the fidelity of the surrogates in the diff regions, thereby generating more precise diff regions where the true models also differ.

JOINT SURROGATE TREE

Representation Figure 2 shows an example of a JST for Logistic Regression and Random Forest models on the Breast cancer dataset [Dua and Graff, 2017] (feature names are omitted to save space). The JST consists of two conjoined decision tree surrogates for the two models. The white oval nodes of the JST are shared decision nodes where both surrogates use the same split conditions.

We refer to the subtree consisting of white nodes as the common prefix tree. In contrast, the colored nodes represent separate decision nodes, pink for surrogate Mˆ 1 corresponding to M1, and orange for surrogate Mˆ 2 for M2. The rectangular nodes correspond to the leaves, and are colored differently to represent class labels — cyan for label 1, and beige for label 0. The leaves are marked as pure/impure depending on whether all the samples falling there have the same label or not.

The JST intuitively captures diff regions, i.e., local regions of feature space where the two input models diverge, and also groups them into a two-level hierarchy. As with any surrogate-based diff model, we have Dˆ(x) = 1 if and only if the constituent decision tree surrogates disagree, Mˆ 1(x) ̸= Mˆ 2(x).

Thus, diff regions can be identified by first focusing on an or-node (the dotted circle nodes in Figure 2 where the surrogates diverge) and then enumerating pairs of leaves under it with different labels. For example, considering the rightmost or-node vo1 in Figure 2, with path condition X[22] ≥ 116.05, Mˆ2 classifies all the samples to label 0 whereas Mˆ1 classifies to label 1 in the region X[22] < 118.85 ∧ X[29] < 0.1. Therefore the diff region is 118.85 > X[22] ≥ 116.05 ∧ X[29] < 0.1.

While in this case vo1 yields a single diff region, in general multiple diff regions could be grouped under a single ornode, resulting in a hierarchy. By processing all the or-nodes

of the JST, one obtains all diff-regions. Formally, JST = (V = Vdt ∪ Vo, E = Edt ∪ Eo). Vdt is a

set of decision nodes similar to decision trees (oval shaped in figure) with each outgoing edge ∈ Edt (solid arrows) representing True or False decisions as in a regular decision tree. Vo are the set of or-nodes (circular nodes) representing the diverging points where the decision trees no longer share the same split conditions.

Each child of vo ∈ Vo is denoted as v I o , i = 1, 2, with dashed edges (vo, vi o) ∈ Eo. Each v I o represents the root of an individual surrogate decision sub-tree for model i. The height of a JST is the maximum number of decision edges (solid edges) in any root-to-leaf path.

Formally, a diff region is defined by the non-empty intersection of path-conditions of differently labelled leaves l1, l2 from two decision sub-trees rooted at the same or-node vo.

The collection of all diff regions specifies the diff ruleset: R = pc(l1) ∧ pc(l2) : li ∈ leaves(v

I o ), i = 1, 2, label(l1) ̸= label(l2), vo ∈ Vo} .

Construction The objective of JST construction is twofold: (a) Maximize comparability: To achieve maximal sharing of split conditions between the two decision tree surrogates, and (b) Interpretability: Achieve the above objective under the constraint of interpretability. We have chosen the height of the JST as the interpretability constraint. The construction of a JST corresponding to the inputs M1, M2, X starts with evaluating y1 = M1(X) and y2 = M2(X).

Starting from the root, at each internal node v ∈ Vdt, with inputs (Xv, y1v = M1(Xv), y2v = M2(Xv)) filtered by the node’s path condition, the key choice is whether to create a joint decision node or an or-node for the surrogates to diverge. The choice of node type signifies whether the two surrogates will continue to share their split conditions or not. Once divergence happens at an or-node, the two sub-trees rooted at the or-node do not share any split nodes thereafter. Below we present a general condition for divergence and a simplified one implemented in our experiments.

In general, a divergence condition should compare the cost of a joint split to that of separate splits for the two Machine Learning models. In the context of greedy decision tree algorithms considered in this work, the comparison is between the sum of impurities

CONCLUSION

We addressed the problem of interpretable model differencing, localizing and representing differences between Machine Learning models for the same task. We proposed JST to provide a unified view of the similarities and dissimilarities between the models as well as a succinct ruleset representation. Experimental results indicate that the proposed IMD approach yields a favorable trade-off between accuracy and interpretability in predicting model differences.

The current work is limited to comparing classifiers in terms of 0-1 dissimilarity. Since IMD is based on decision trees, its interpretability is limited to domains where the features are interpretable. While we have chosen to extend greedy decision tree algorithms due to ease and scalability, the resulting JSTs accordingly have no guarantees of optimality.

Future work could seek to address the above limitations. To extend the framework to regression tasks, a potential avenue is to threshold the difference function D(M1(x), M2(x))

and apply the classification framework presented herein. The problem of interpretable Machine Learning model differencing for images and language remains open. The constituent features for these modalities are generally not interpretable making the diff rulesets uninterpretable without additional considerations.