Introduction to Random Forest in Machine Learning

Random forest is a supervised machine learning algorithm built from decision tree algorithms. It is used in various industries, such as banking and e-commerce, to predict behavior and outcomes.

This article gives an overview of the random forest algorithm and how it works. The article will introduce the features of the algorithm and how it is used in real-life applications. It also highlights the pros and cons of this algorithm.

What is a random forest?

Random forest is a machine learning technique used to solve regression and classification problems. It uses ensemble learning, a technique that combines many models to provide solutions to complex problems.

A random forest algorithm consists of many decision trees. The "forest" generated by the random forest algorithm is trained through bagging, also called bootstrap aggregating. Bagging is an ensemble meta-algorithm that improves the accuracy of machine learning algorithms.

The random forest algorithm determines the outcome based on the predictions of its decision trees. It predicts by taking the average (for regression) or the majority vote (for classification) of the outputs of the individual trees. Increasing the number of trees generally improves the precision of the outcome, although the gains level off.

A random forest overcomes several limitations of the decision tree algorithm. It reduces overfitting to the dataset and increases precision. It also generates predictions without requiring much configuration in packages (like scikit-learn).
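As a minimal sketch of that last point, the snippet below trains a forest with scikit-learn's default settings. The Iris dataset, the 75/25 split, and the fixed `random_state` are assumptions chosen only for illustration; they are not part of the article.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Small built-in dataset, used here only as a stand-in for real data.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Default hyperparameters; only the seed is fixed for reproducibility.
forest = RandomForestClassifier(random_state=0)
forest.fit(X_train, y_train)

accuracy = forest.score(X_test, y_test)
print(f"Number of trees: {len(forest.estimators_)}")
print(f"Test accuracy: {accuracy:.2f}")
```

The exact accuracy depends on the split, so treat the printed number as indicative rather than as a benchmark.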

Features of Random Forest Algorithm

• Typically more accurate than a single decision tree.

• Provides an effective way to handle missing data.

• Can produce a reasonable prediction without extensive hyperparameter tuning.

• Reduces the overfitting problem of decision trees.

• In every tree of the forest, a random subset of features is considered at each node split.
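The last feature in the list can be seen in scikit-learn through the `max_features` parameter. The sketch below is only an illustration: the Iris dataset (4 features), the choice of 50 trees, and the `"sqrt"` setting are assumptions, not values prescribed by the article.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)  # 4 features per sample

# max_features="sqrt" asks each split to consider sqrt(n_features) randomly
# chosen features, which is 2 of the 4 features for this dataset.
forest = RandomForestClassifier(
    n_estimators=50, max_features="sqrt", random_state=0
).fit(X, y)

# Each fitted tree records how many features it examines per split.
features_per_split = {tree.max_features_ for tree in forest.estimators_}
print(f"Trees in the forest: {len(forest.estimators_)}")
print(f"Features considered per split: {features_per_split}")
```

Restricting each split to a random subset is what makes the trees differ from one another, which in turn is what makes combining them worthwhile.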

How the random forest algorithm works

Understanding decision trees

Decision trees are the building blocks of a random forest algorithm. A decision tree is a decision support technique that forms a tree-like structure. An overview of decision trees will help us understand how random forest algorithms work.

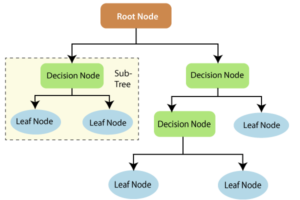

A decision tree consists of three components: decision nodes, leaf nodes, and a root node. The decision tree algorithm divides the training dataset into branches, which are then split into further branches. This sequence continues until a leaf node is reached. A leaf node cannot be split any further.

The nodes in a decision tree represent the attributes used to predict the outcome. Decision nodes provide a link to the leaves. The accompanying diagram shows the three types of nodes in a decision tree.

Information theory can shed more light on how decision trees work. Entropy and information gain are the building blocks of decision trees. A closer look at these two concepts will improve our understanding of how decision trees are built.

Entropy is a metric for calculating uncertainty. Information gain is a measure of how much the uncertainty in the target variable is reduced, given a set of independent variables.

The information gain concept involves using independent variables (features) to gain information about a target variable (class). The entropy of the target variable (Y) and the conditional entropy of Y (given X) are used to estimate the information gain. In this case, the conditional entropy is subtracted from the entropy of Y.
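The calculation can be sketched in a few lines of plain Python. The function names and the tiny phone-style dataset below are hypothetical and exist only to show the arithmetic: information gain = entropy of Y minus the conditional entropy of Y given X.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(feature_values, labels):
    """Entropy of the labels minus their conditional entropy given the feature."""
    total = len(labels)
    conditional = 0.0
    for value in set(feature_values):
        subset = [y for x, y in zip(feature_values, labels) if x == value]
        conditional += (len(subset) / total) * entropy(subset)
    return entropy(labels) - conditional


# Hypothetical toy data: does the customer buy the phone?
buys = ["yes", "yes", "no", "no"]
ram = ["high", "high", "low", "low"]      # separates the classes perfectly
color = ["red", "blue", "red", "blue"]    # tells us nothing about the class

gain_ram = information_gain(ram, buys)
gain_color = information_gain(color, buys)
print(f"Entropy of target: {entropy(buys):.2f} bits")
print(f"Gain from RAM: {gain_ram:.2f} bits")
print(f"Gain from color: {gain_color:.2f} bits")
```

A tree builder that uses this criterion would split on RAM first, because that split removes all of the uncertainty while the color split removes none.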

Information gain is used when training decision trees. It helps reduce the uncertainty in these trees. A high information gain means that a high degree of uncertainty (information entropy) has been removed. Entropy and information gain are important for splitting branches, which is a key step in the construction of decision trees.

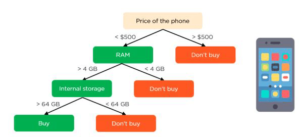

Let's take a simple example of how a decision tree works. Suppose we want to predict whether a customer will buy a mobile phone or not. The features of the phone form the basis of the customer's decision. This analysis can be presented as a decision tree diagram.

The root node and decision nodes represent the phone features mentioned above. A leaf node represents the final outcome, either buying or not buying. The main features that drive the decision include price, internal storage, and random access memory (RAM). The decision tree will appear as follows.
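Separately from the article's diagram, a tree of this kind can be sketched with scikit-learn. The eight phones, their feature values, and the buy or not-buy labels below are invented purely for illustration and do not come from any real dataset.

```python
from sklearn.tree import DecisionTreeClassifier, export_text

# Hypothetical phones: [price in USD, internal storage in GB, RAM in GB]
feature_names = ["price_usd", "storage_gb", "ram_gb"]
X = [
    [900, 64, 4],
    [850, 128, 8],
    [300, 64, 4],
    [350, 128, 8],
    [400, 32, 2],
    [950, 256, 12],
    [250, 32, 2],
    [500, 128, 6],
]
# Hypothetical outcome for each phone.
y = ["no", "no", "yes", "yes", "no", "yes", "no", "yes"]

# criterion="entropy" makes the tree choose splits by information gain.
tree = DecisionTreeClassifier(criterion="entropy", random_state=0).fit(X, y)

# Text rendering: the first split is the root node, inner splits are
# decision nodes, and lines ending in "class: ..." are leaf nodes.
print(export_text(tree, feature_names=feature_names))
n_leaves = tree.get_n_leaves()
print(f"Leaf nodes: {n_leaves}")
```

Because the tree is grown without a depth limit, it fits these eight training rows exactly, which is the kind of overfitting that a random forest is meant to reduce.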

Applying decision trees in a random forest

The main difference between the decision tree algorithm and the random forest algorithm is that, in the latter, establishing root nodes and splitting nodes is done randomly. The random forest uses the bagging method to generate the required prediction.

Bagging involves using different samples of the training data rather than just one sample. A training dataset comprises the observations and features that are used to make predictions. The decision trees produce different outputs, depending on the training data fed to them by the random forest algorithm. These outputs are ranked, and the highest-ranked one is selected as the final output.
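The sampling step of bagging can be sketched with NumPy. The sizes used here (10 observations, 4 trees) and the fixed seed are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 10   # hypothetical number of training observations
n_trees = 4      # hypothetical number of trees in the forest
indices = np.arange(n_samples)

# Each tree gets its own bootstrap sample: n_samples row indices drawn
# with replacement, so some rows repeat and others are left out.
bootstrap_samples = [
    rng.choice(indices, size=n_samples, replace=True) for _ in range(n_trees)
]

for tree_id, sample in enumerate(bootstrap_samples, start=1):
    out_of_bag = np.setdiff1d(indices, sample)
    print(f"Tree {tree_id}: rows {sorted(sample.tolist())}, "
          f"left out {out_of_bag.tolist()}")
```

Because every tree sees a slightly different version of the training data, the trees disagree in places, and combining them smooths out their individual errors.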

Our first example can still be used to explain how random forests work. Instead of a single decision tree, the random forest will have many decision trees. Let's assume we have only four decision trees. In this case, the training data comprising the phone's observations and features will be divided into four root nodes.

The root nodes could represent four features that may influence the customer's choice (price, internal storage, camera, and RAM). The random forest will split the nodes by selecting features randomly. The final prediction will be selected based on the outcome of the four trees.

The outcome chosen by most of the decision trees will be the final choice. If three trees predict buying and one tree predicts not buying, then the final prediction will be buying. In this case, it is predicted that the customer will buy the phone.
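The vote itself is simple to express in code. This sketch hard-codes the four tree outputs from the example (three trees voting to buy, one voting not to buy); the label strings are illustrative.

```python
from collections import Counter

# Outputs of the four hypothetical decision trees for one customer.
tree_predictions = ["buy", "buy", "buy", "not buy"]

# Count the votes and take the most common class as the forest's answer.
votes = Counter(tree_predictions)
final_prediction, vote_count = votes.most_common(1)[0]

print(f"Votes: {dict(votes)}")
print(f"Final prediction: {final_prediction} "
      f"({vote_count} of {len(tree_predictions)} trees)")
```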

Classification in random forests

Classification in random forests uses an ensemble methodology to reach the outcome. The training data is fed to train various decision trees. This dataset consists of observations and features that will be selected randomly during the splitting of nodes.

A random forest system relies on various decision trees. Every decision tree consists of decision nodes, leaf nodes, and a root node. The leaf node of each tree is the final output produced by that specific decision tree. The selection of the final output follows a majority-voting system. In this case, the output chosen by the majority of the decision trees becomes the final output of the random forest system.

Let's take the example of a training dataset that consists of various fruits such as bananas, apples, pineapples, and mangoes. The random forest classifier divides this dataset into subsets. These subsets are given to every decision tree in the random forest system. Each decision tree produces its own output. For example, the prediction of trees 1 and 2 is apple.

Another decision tree (n) has predicted banana as the outcome. The random forest classifier collects the votes to provide the final prediction. The majority of the decision trees have chosen apple as their prediction, so the classifier selects apple as the final prediction.
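The whole classification procedure can be sketched as a small hand-rolled forest. Everything specific here is an assumption for illustration: the synthetic "fruit" measurements from `make_blobs`, the 25 trees, and the helper name `forest_predict`. Note also that scikit-learn's own `RandomForestClassifier` averages class probabilities across trees rather than counting hard votes, so this sketch follows the article's description rather than that library's internals.

```python
from collections import Counter

import numpy as np
from sklearn.datasets import make_blobs
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for fruit measurements: 4 numeric features, 4 classes.
fruit_names = np.array(["banana", "apple", "pineapple", "mango"])
X, y_idx = make_blobs(n_samples=200, centers=4, n_features=4, random_state=0)
y = fruit_names[y_idx]

rng = np.random.default_rng(0)
n_trees = 25
trees = []
for seed in range(n_trees):
    # Bootstrap sample: draw row indices with replacement.
    rows = rng.integers(0, len(X), size=len(X))
    # max_features="sqrt" selects a random feature subset at each split.
    tree = DecisionTreeClassifier(max_features="sqrt", random_state=seed)
    trees.append(tree.fit(X[rows], y[rows]))


def forest_predict(sample):
    """Return the majority-vote class and the full vote tally."""
    tally = Counter(str(t.predict(sample.reshape(1, -1))[0]) for t in trees)
    return tally.most_common(1)[0][0], tally


prediction, votes = forest_predict(X[0])
print(f"Votes: {dict(votes)}")
print(f"Final prediction: {prediction}")
```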

Regression in random forests

Regression is the other task performed by a random forest algorithm. A random forest regression follows the concept of simple regression. Values of the independent variables (features) and of the dependent variable are passed to the random forest model.

We can run random forest regressions in various programs such as SAS, R, and Python. In a random forest regression, each tree produces a specific prediction. The mean prediction of the individual trees is the output of the regression. This is in contrast to random forest classification, whose output is determined by the mode of the classes predicted by the decision trees.
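The averaging can be checked directly in Python with scikit-learn. The noisy sine data, the 20 trees, and the two query points below are assumptions made only for this sketch.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Hypothetical data: a noisy sine curve on the interval [0, 10].
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

forest = RandomForestRegressor(n_estimators=20, random_state=0).fit(X, y)

X_new = np.array([[2.5], [7.0]])

# One row of predictions per tree, one column per query point.
per_tree = np.array([tree.predict(X_new) for tree in forest.estimators_])
mean_of_trees = per_tree.mean(axis=0)
forest_prediction = forest.predict(X_new)

print(f"Mean of the individual trees: {mean_of_trees}")
print(f"Forest prediction:            {forest_prediction}")
```

The two printed rows should agree up to floating-point rounding, since the forest's regression output is the mean of its trees' predictions.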

Although random forest regression and linear regression follow the same concept, they differ in terms of their functions. The function of linear regression is y = bx + c, where y is the dependent variable, x is the independent variable, b is the estimated parameter (the slope), and c is a constant. The function of a complex random forest regression is like a black box.
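For comparison, the linear function can be recovered explicitly from data. In this sketch the data are generated from assumed values b = 2 and c = 1, and NumPy's `polyfit` estimates them back.

```python
import numpy as np

# Hypothetical data that follows y = 2x + 1 exactly.
x = np.arange(0, 11, dtype=float)
y = 2.0 * x + 1.0

# A degree-1 polynomial fit returns the slope b and the constant c.
b, c = np.polyfit(x, y, 1)
print(f"y = {b:.2f}x + {c:.2f}")
```

A linear model is fully described by these two numbers, whereas a random forest has no comparably compact formula, which is why it is described as a black box.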

Random forest applications

Some of the random forest applications may include:

Banking

Random forest is used in banking to predict the creditworthiness of a loan applicant. This helps the lending institution make a good decision on whether or not to give the customer a loan. Banks also use the random forest algorithm to detect fraudsters.

Health care

Health professionals use random forest systems to diagnose patients. Patients are diagnosed by assessing their previous medical history. Past medical records are reviewed to establish the right dosage for each patient.

Stock market

Financial analysts use it to identify potential markets for stocks. It also enables them to identify the behavior of stocks.

E-commerce

With random forest algorithms, e-commerce vendors can predict customer preferences based on past consumption behavior.

When to avoid using random forests

Random forest algorithms are not ideal in the following situations:

Extrapolation

Random forest regression is not ideal for extrapolating data. Unlike linear regression, which uses existing observations to estimate values beyond the observed range, a random forest cannot predict values outside the range of targets it saw during training. This explains why most applications of random forest relate to classification.
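A small experiment makes the limitation visible. The data below are an assumption for illustration: training points follow y = 2x + 1 for x from 0 to 10, and both models are then asked about x = 20, well outside that range.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

# Hypothetical training data: y = 2x + 1 for x in 0..10 (largest y is 21).
X_train = np.arange(0, 11, dtype=float).reshape(-1, 1)
y_train = 2.0 * X_train[:, 0] + 1.0

# Query point far outside the training range; the true value would be 41.
X_far = np.array([[20.0]])

linear_pred = LinearRegression().fit(X_train, y_train).predict(X_far)[0]
forest_pred = (
    RandomForestRegressor(n_estimators=50, random_state=0)
    .fit(X_train, y_train)
    .predict(X_far)[0]
)

print(f"Linear regression at x=20: {linear_pred:.1f}")
print(f"Random forest at x=20:     {forest_pred:.1f}")
print(f"Largest training target:   {y_train.max():.1f}")
```

The forest's answer cannot exceed the largest training target, because every tree predicts an average of training targets that landed in one of its leaves.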

Sparse data

A random forest does not produce good results when the data is very sparse. In this case, the subset of features and the bootstrapped sample will produce an invariant space. This will lead to unproductive splits, which will affect the outcome.

Advantages of random forest

- It can perform both regression and classification tasks.

- The random forest produces good predictions that can be easily understood.

- It can handle large datasets efficiently.

- The random forest algorithm provides a higher level of accuracy in predicting outcomes than the decision tree algorithm.

Disadvantages of random forest

- When using a random forest, more resources are required for computation.

- It consumes more time than a decision tree algorithm.

Conclusion

The random forest algorithm is an easy-to-use and flexible machine learning algorithm. It uses ensemble learning, which enables organizations to solve regression and classification problems.

This is an ideal algorithm for developers because it addresses the problem of overfitting to datasets. It is a very resourceful tool.
