Data distillation

Data distillation is a significant reduction of a sample, achieved by creating artificial objects that aggregate the useful information stored in the data and allow machine learning algorithms to be tuned no less efficiently than on the full data.

This article discusses two things: a deep learning approach that solves the problem of reducing the sample size, and the even more ambitious task of creating synthetic data that stores all the useful information about the sample.

History and terminology of data distillation

Explore the concept of data distillation, a powerful technique that enables a significant reduction of sample size while preserving the information needed for efficient machine learning.

As described above, data distillation is a significant reduction of the sample achieved by creating artificial objects.

The term “distillation” first appeared in Hinton's work; the term “knowledge distillation” was then established and is now widely recognized. Knowledge distillation means constructing a simple model based on several models. Although the terms sound similar, data distillation is a completely different topic that solves a different problem.

There are many classic methods for selecting objects, ranging from selection in metric algorithms to the selection of support vectors in SVM. Distillation, however, is not a selection but a synthesis of objects, carried out in the era of deep learning.

History and Terminology of Data Distillation: Extracting Insights from Complex Data

Introduction: Data distillation, also known as data cleansing or data refinement, is a process of transforming and filtering raw data to extract valuable insights and remove irrelevant or noisy information. This article explores the history and terminology of data distillation, highlighting its importance in dealing with complex data and enabling organizations to make informed decisions based on reliable information.

- Historical Context: The concept of data distillation can be traced back to the early days of data processing and analysis. As technology advanced and the volume of data grew exponentially, researchers and practitioners realized the need for methods to cleanse and refine data to extract meaningful information. Over the years, data distillation techniques have evolved and become more sophisticated, leveraging advanced algorithms and automation to handle complex datasets.

- Terminology of Data Distillation:

a. Data Cleansing: Data cleansing refers to the process of identifying and correcting errors, inconsistencies, and inaccuracies in the dataset. This includes tasks such as removing duplicate entries, correcting misspelled or inaccurate data, and standardizing data formats. Data cleansing ensures data accuracy and improves the quality of the dataset.

b. Data Filtering: Data filtering involves the removal of irrelevant or redundant data from the dataset. It focuses on extracting the most relevant and valuable information while discarding noise, outliers, or data points that do not contribute to the analysis. Filtering criteria can be based on specific attributes, time frames, or predefined rules.

c. Data Integration: Data integration involves combining data from multiple sources into a unified dataset. During the integration process, redundant or duplicate data is eliminated, and data is standardized to ensure consistency. Data integration enhances data quality and provides a comprehensive view for analysis and decision-making.

d. Data Transformation: Data transformation involves converting data from one format or representation to another to meet specific requirements. This can include scaling or normalizing numerical data, converting categorical data into numerical values, or aggregating data at different levels of granularity. Data transformation enables data compatibility and enhances analysis capabilities.

e. Feature Selection: Feature selection focuses on identifying the most relevant and informative features or variables in the dataset. This process helps reduce dimensionality, eliminate irrelevant or redundant features, and improve model performance. Feature selection techniques consider factors such as feature importance, correlation, or statistical significance.
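The cleansing, filtering, and transformation steps listed above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the record layout (a name and a numeric value), the function names, and the outlier bounds are assumptions made for the example, not part of any particular tool.

```python
def cleanse(rows):
    """Standardize the text field and drop duplicates, keeping first occurrences."""
    seen, out = set(), []
    for name, value in rows:
        key = (name.strip().lower(), value)  # standardized form of the record
        if key not in seen:
            seen.add(key)
            out.append(key)
    return out


def filter_rows(rows, low, high):
    """Keep rows whose value lies in [low, high]; the rest are treated as outliers."""
    return [(n, v) for n, v in rows if low <= v <= high]


def min_max_scale(rows):
    """Transform values linearly so that they fall in [0, 1]."""
    values = [v for _, v in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [(n, (v - lo) / span) for n, v in rows]


# Hypothetical raw records: one duplicate (after standardization) and one outlier.
raw = [(" Alice", 10.0), ("alice ", 10.0), ("Bob", 20.0),
       ("Carol", 9999.0), ("Dan", 30.0)]
clean = min_max_scale(filter_rows(cleanse(raw), 0.0, 100.0))
print(clean)
```

The order matters in this sketch: scaling before filtering would let the outlier stretch the range and squeeze the remaining values toward zero.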

- Importance of Data Distillation: Data distillation plays a crucial role in extracting valuable insights from complex datasets. By cleansing and refining the data, organizations can:

a. Improve Data Accuracy: Data distillation techniques ensure data accuracy by eliminating errors, inconsistencies, and duplicate entries. Clean and accurate data is essential for making reliable and informed decisions.

b. Enhance Data Quality: By filtering out noise, irrelevant information, and outliers, data distillation improves the quality of the dataset. This leads to more meaningful analysis and better understanding of underlying patterns and trends.

c. Reduce Bias and Variance: Data distillation helps address bias and variance issues by eliminating skewed or noisy data points. This ensures that analysis and models are more representative of the true underlying patterns in the data.

d. Optimize Analysis and Decision-Making: By providing a refined and reliable dataset, data distillation enables organizations to conduct more accurate and meaningful analysis. This, in turn, leads to better decision-making and more successful outcomes.

e. Improve Efficiency: Data distillation automates the process of data cleaning and refinement, saving time and effort for data professionals. This allows them to focus on higher-level analysis and interpretation of the data.

Conclusion: Data distillation, with its various techniques and terminology, has a rich history in the field of data processing and analysis. It has evolved to meet the growing challenges of dealing with complex datasets and has become an integral part of data-driven decision-making. By employing data cleansing, filtering, integration, transformation, and feature selection techniques, organizations can extract valuable insights, improve data accuracy and quality, reduce bias, and optimize analysis. Data distillation plays a vital role in enabling organizations to make informed decisions and unlock the full potential of their data.

History and Terminology of Data Distillation: Uncovering Insights from Complex Data Sets

Introduction: Data distillation, also known as data condensation or data reduction, is a process that aims to extract valuable insights from complex and voluminous data sets by simplifying or summarizing the information. This article delves into the history and terminology of data distillation, exploring its evolution, techniques, and significance in the realm of data analysis and decision-making.

- Historical Context: The concept of data distillation has roots in the field of information theory, which emerged in the mid-20th century. Pioneers such as Claude Shannon and Norbert Wiener contributed to the development of mathematical models for information transmission and storage. Their work laid the foundation for understanding the importance of extracting relevant information from noisy and redundant data.

- Data Distillation Techniques:

a. Sampling: Sampling is a common technique in data distillation, where a representative subset of data is selected for analysis. By analyzing a sample, researchers can infer insights about the entire data set, saving computational resources and time.

b. Dimensionality Reduction: Dimensionality reduction techniques aim to reduce the number of variables or features in a data set while preserving its key characteristics. Techniques such as Principal Component Analysis (PCA) and Singular Value Decomposition (SVD) are commonly used to capture the most informative aspects of the data while discarding redundant or less significant features.

c. Clustering: Clustering algorithms group similar data points together based on their attributes or characteristics. By identifying clusters, data distillation techniques can summarize the data set by representing it with a smaller number of representative cluster centroids or prototypes.

d. Feature Selection: Feature selection methods aim to identify the most relevant features or variables that contribute significantly to the data set’s overall information. This process helps eliminate noise and redundancy, focusing only on the most informative aspects of the data.
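Two of the techniques above, sampling and clustering, can be contrasted in a small sketch. It uses only the Python standard library; the toy 2D points, the fixed seed, and the choice of initial centroids are assumptions made for illustration. Note that a sample consists of original points, whereas cluster centroids are new points that need not coincide with any original one.

```python
import random


def random_sample(points, k, seed=0):
    """Sampling: pick k of the original points without replacement."""
    return random.Random(seed).sample(points, k)


def kmeans(points, centroids, iterations=10):
    """Clustering: plain k-means on 2D points, returning the final centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            # Assign each point to the nearest centroid (squared Euclidean distance).
            nearest = min(
                range(len(centroids)),
                key=lambda i: (p[0] - centroids[i][0]) ** 2
                + (p[1] - centroids[i][1]) ** 2,
            )
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
            if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids


# Two well-separated groups of four points each.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
subset = random_sample(points, 2)
prototypes = kmeans(points, centroids=[points[0], points[-1]])
print(subset, prototypes)
```

Here eight points are summarized by two prototypes, one per group, which is the kind of representation by centroids that the clustering item describes.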

- Terminology in Data Distillation:

a. Data Reduction: Data reduction refers to the process of transforming a large and complex data set into a simplified representation that retains its essential information. It involves techniques such as sampling, dimensionality reduction, and feature selection.

b. Data Condensation: Data condensation is another term used interchangeably with data distillation. It emphasizes the extraction of essential information and the compression of data sets into a more manageable form, facilitating analysis and decision-making.

c. Information Loss: Data distillation involves a trade-off between reducing complexity and retaining important information. Information loss refers to the loss of detail or precision that occurs during the distillation process. It is crucial to strike a balance between data reduction and preserving the data’s critical insights.

d. Data Abstraction: Data abstraction is the process of representing complex data in a simplified and more general form. It involves summarizing or aggregating data to capture its essential characteristics while omitting intricate details.

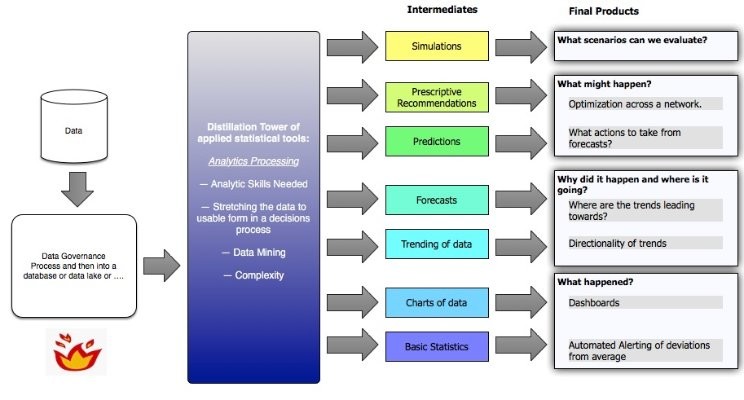

- Significance in Data Analysis: Data distillation plays a significant role in various domains where dealing with large and complex data sets is essential:

a. Data Mining: Data distillation helps in discovering patterns, trends, and associations within large data sets. By simplifying the data, researchers can focus on the most informative aspects and derive meaningful insights.

b. Machine Learning: Data distillation is critical in machine learning, where processing vast amounts of data is common. Distilled data sets provide the necessary input for training models, reducing computational requirements and improving efficiency.

c. Decision-Making: Distilled data sets provide decision-makers with a concise and actionable representation of complex information. By distilling the data, decision-makers can make informed choices more efficiently and effectively.

Conclusion: Data distillation has a rich history rooted in information theory and has evolved into a critical process for extracting insights from complex data sets. With techniques such as sampling, dimensionality reduction, clustering, and feature selection, data distillation simplifies data analysis, improves efficiency, and facilitates decision-making. By understanding the history and terminology of data distillation, organizations can leverage its techniques to distill valuable insights from their data, leading to better-informed decisions and driving innovation.

Distillation method

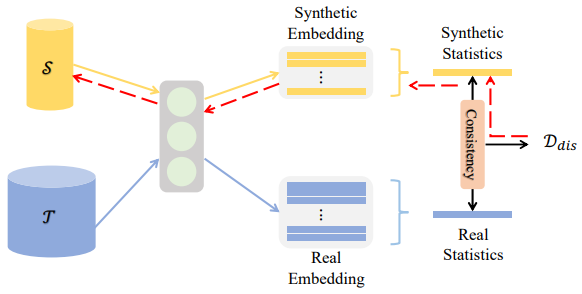

The idea of distilling data with deep learning was proposed in a research paper in which the MNIST dataset of 60,000 images was reduced to 10: the synthetic training set contained one representative image for each class.

LeNet trained on the synthetic data reached approximately the same quality as on the full sample and converged in several steps. However, training a differently initialized network already degraded the quality of the solution. The figure from the research paper shows the main result and the obtained synthetic data. For the MNIST dataset, the synthetic data does not resemble real digits at all, although it is logical to expect that a synthetic representative of class 'zero' would look like a zero.
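The mechanism can be illustrated on a toy problem. The sketch below is not the paper's implementation: it replaces the image classifier with a one-parameter linear model `w * x`, and the function names, learning rates, and data are assumptions chosen for the example. It learns a single synthetic point such that one gradient step on it, starting from a fixed initial weight, fits the real data.

```python
def inner_step(w, xs, ys, lr):
    """One gradient-descent step on the synthetic point for the loss (w*xs - ys)**2."""
    return w - lr * 2.0 * xs * (w * xs - ys)


def distill_one_point(real, w0=0.0, inner_lr=0.5, outer_lr=0.05, steps=2000):
    """Learn one synthetic (xs, ys) pair by gradient descent on the real-data loss
    measured after a single training step from the fixed initial weight w0."""
    xs, ys = 1.0, 0.0  # arbitrary starting values for the synthetic point
    n = len(real)
    for _ in range(steps):
        w1 = inner_step(w0, xs, ys, inner_lr)
        # Gradient of the mean squared error on the real data with respect to w1.
        dl_dw1 = sum(2.0 * x * (w1 * x - y) for x, y in real) / n
        # Chain rule through the inner training step.
        dw1_dxs = -inner_lr * 2.0 * (2.0 * w0 * xs - ys)
        dw1_dys = inner_lr * 2.0 * xs
        xs, ys = (xs - outer_lr * dl_dw1 * dw1_dxs,
                  ys - outer_lr * dl_dw1 * dw1_dys)
    return xs, ys


# Real data: 21 points on the line y = 3x (toy stand-in for the full sample).
real = [(i / 10.0, 3.0 * i / 10.0) for i in range(-10, 11)]
xs, ys = distill_one_point(real)

w_after = inner_step(0.0, xs, ys, 0.5)  # same initialization as during distillation
w_other = inner_step(1.0, xs, ys, 0.5)  # different initialization
print(w_after, w_other)
```

In this toy setting, one step from the initialization used during distillation recovers the slope of the real data, while the same synthetic point applied to a different starting weight lands far from it. This mirrors the degradation noted above for differently initialized networks.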

Method development

The research gave impetus to a new direction of research. Follow-up work showed how to improve data compression during distillation. In many problems, an artificial data object can belong to several classes at once, with different probabilities. So not only the data were distilled but also their labels, and as a result learning became possible on a sample of only five distilled images.
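A label that assigns an object to several classes with different probabilities is usually called a soft label. The sketch below shows how a training loss can be computed against such a label; the ten-class setup and the specific probabilities are hypothetical, chosen only to illustrate the idea.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_cross_entropy(logits, soft_label):
    """Cross-entropy between a soft label (a probability vector) and the model output."""
    probs = softmax(logits)
    return -sum(q * math.log(p) for q, p in zip(soft_label, probs) if q > 0.0)


# Hypothetical distilled object that is 60% class 6 and 40% class 9.
soft_label = [0.0] * 10
soft_label[6], soft_label[9] = 0.6, 0.4

# A model with no preference among the ten classes (all logits equal).
loss_uniform = soft_cross_entropy([0.0] * 10, soft_label)
print(loss_uniform)
```

With a one-hot label this reduces to the ordinary cross-entropy, so a single distilled object with a soft label can carry information about more than one class.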

| Distillation in AI | Distillation in machine learning |
| --- | --- |
| Knowledge distillation is a model compression technique whereby a small network is taught by a larger trained neural network. The smaller network is trained to behave like the large neural network. This enables the deployment of such models on small devices such as smartphones. | In machine learning, knowledge distillation is the process of transferring knowledge from a large model to a smaller one. While large models have a higher knowledge capacity than small models, this capacity may not be fully utilized. A model can be just as computationally expensive to evaluate even if it uses little of its knowledge capacity. |

Distillation in neural networks

Knowledge distillation in machine learning is an architecture-agnostic approach in which the generalization learned by one neural network is used to train another neural network.
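One common way to train a student network to behave like a teacher is to match temperature-softened output distributions. The sketch below assumes that formulation; the function names, the temperature value, and the example logits are illustrative and are not taken from the text. Because the loss depends only on the two networks' outputs, it does not depend on either architecture.

```python
import math


def softmax_t(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's softened output to the student's,
    scaled by temperature squared as is conventional for this loss."""
    p_teacher = softmax_t(teacher_logits, temperature)
    p_student = softmax_t(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0.0)
    return temperature ** 2 * kl


# Hypothetical outputs for a three-class problem.
teacher = [4.0, 1.0, 0.5]
matching_student = [4.0, 1.0, 0.5]
differing_student = [0.5, 1.0, 4.0]

print(distillation_loss(matching_student, teacher))
print(distillation_loss(differing_student, teacher))
```

The loss is zero when the student reproduces the teacher's outputs and grows as the two distributions diverge. In practice it is often combined with the ordinary loss on the true labels.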

Importance

Most importantly, it helps at a time when very large-scale models are being trained.

It helps especially in natural language processing.

It can reduce the size of a model by 87% while retaining 96% of its overall performance.

Difficulties with neural networks

The aim of every learner is to optimize its performance on the training data, but this does not exactly translate into generalizing the knowledge contained in the given dataset.

The training labels never explicitly state that the shapes of some digits are similar, for example that a 6 resembles a 9, so the network cannot learn this similarity directly.

Hence, a neural network is never explicitly asked to generalize over the training data. As a result, in a normally trained neural network, the information held by each neuron is not equally relevant to the desired output.
