With the rapid development of artificial intelligence, speech recognition technology has become part of daily life. Voice assistants on mobile phones convert speech into text, and voice control functions now appear in self-driving cars; all of these interactions rely on automatic speech recognition (ASR). In speech recognition technology, the most direct type of data annotation is speech annotation.

What is Voice Annotation?

Speech annotation is a common type of annotation in the data annotation industry. Its main job is to “extract” the text information and the various sounds contained in a recording, and then transcribe or synthesize them. The annotated data is used mainly to train machine learning models for speech recognition, dialogue robots and other fields. It is equivalent to installing “ears” on a computer system: the system gains the ability to “listen”, which is what makes accurate speech recognition possible.

Speech annotation classification:

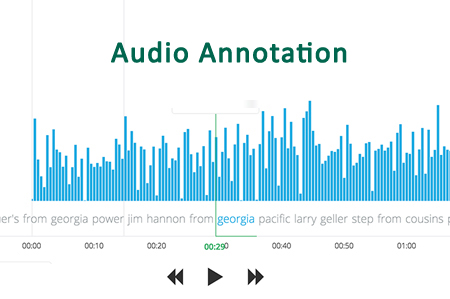

ASR speech transcription and semantic segmentation. Speech transcription mainly converts speech into text. Many annotation platforms can already recognize part of the content automatically, so the annotator only needs to check the machine output and segment it as required.

1. ASR voice transcription

ASR is automatic speech recognition, the technology that converts human speech into text or commands by processing and interpreting the speech signal. Speech transcription is the process of transcribing speech data into text data, and it is a relatively common form of annotation in the data labeling field. Transcription should not be confused with transliteration, which is the process of converting characters in one alphabet into characters in another alphabet. Simply put, transliteration is a character-to-character mapping. Each character is converted only to its counterpart in the other alphabet, which is intended to guarantee a complete, unambiguous and reversible conversion between the two alphabets. Transliteration therefore applies to conversion between phonetic writing systems.

ASR speech transcription is often used in customer service, education and training institutions, medical care, finance and other fields.

Preprocessing:

1. Silence trimming: the silence at the beginning and end of the recording is cut off to reduce interference. This operation is generally called VAD (voice activity detection).

2. Framing: the sound is cut into small segments, each of which is called a frame. This is done with a moving window function rather than a simple cut, so adjacent frames generally overlap.
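The two preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production VAD: the function names `trim_silence` and `frame_signal`, the energy threshold, and the choice of a Hamming window are all assumptions made for the example, and real systems typically use more robust voice activity detectors.

```python
import math


def trim_silence(samples, frame_len, hop_len, threshold):
    """Energy-based stand-in for VAD (an assumption, not a full detector).

    Drops leading and trailing frames whose mean energy is at or below
    `threshold` and returns the remaining samples.
    """
    starts = list(range(0, len(samples) - frame_len + 1, hop_len))
    active = []
    for start in starts:
        chunk = samples[start:start + frame_len]
        energy = sum(s * s for s in chunk) / frame_len
        if energy > threshold:
            active.append(start)
    if not active:
        return []  # nothing above the threshold: treat it all as silence
    return samples[active[0]:active[-1] + frame_len]


def frame_signal(samples, frame_len, hop_len):
    """Cut samples into overlapping frames with a moving Hamming window.

    Frames overlap whenever hop_len < frame_len.
    """
    if frame_len < 2 or hop_len < 1:
        raise ValueError("frame_len must be >= 2 and hop_len >= 1")
    window = [
        0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
        for n in range(frame_len)
    ]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        chunk = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
    return frames


# Toy signal: silence, a burst of "speech", then silence again.
signal = [0.0] * 100 + [1.0] * 200 + [0.0] * 100
speech = trim_silence(signal, frame_len=20, hop_len=10, threshold=0.01)
frames = frame_signal(speech, frame_len=20, hop_len=10)
```

With a hop of 10 samples and a frame length of 20, each frame shares half of its samples with the next one, which is the overlap the framing step describes.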

The Importance of Data Labeling to ASR

In essence, ASR is a pattern recognition system built from three basic units: feature extraction, pattern matching, and reference patterns. On the labeling side, feature extraction corresponds to attribute classification annotation. The input speech is first preprocessed, and its features are then extracted. On this basis, the templates required for speech recognition are built. During recognition, the speech templates stored in the computer are compared with the features of the input speech signal to find the template that best matches the input. The recognition result is then obtained by looking up the definition of that template in a table. The quality of this result depends directly on the choice of features, the quality of the speech model, and the accuracy of the templates, and achieving it requires continuous training on a large amount of labeled data.
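The template comparison described above can be illustrated with a small sketch. It assumes dynamic time warping (DTW) as the matching method, which is a classic choice for template-based recognition but is not named in this article; the function names and the toy one-dimensional features are likewise hypothetical.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two feature sequences.

    Each sequence is a list of equal-length feature vectors. DTW lets the
    two sequences stretch or compress in time before they are compared.
    """
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # Euclidean frame distance
            cost[i][j] = d + min(
                cost[i - 1][j],      # stay on the same frame of b
                cost[i][j - 1],      # stay on the same frame of a
                cost[i - 1][j - 1],  # advance in both sequences
            )
    return cost[n][m]


def recognize(features, templates):
    """Return the label of the stored template closest to the input."""
    return min(templates, key=lambda label: dtw_distance(features, templates[label]))


# Hypothetical reference templates, keyed by the label each one stands for.
templates = {
    "yes": [[0.0], [1.0], [2.0]],
    "no": [[5.0], [5.0], [4.0]],
}
# A slower rendition of the "yes" pattern: same shape, more frames.
utterance = [[0.0], [0.0], [1.0], [2.0], [2.0]]
best = recognize(utterance, templates)
```

The dictionary lookup plays the role of the table in the description: once the best template is found, its key is the recognition result. Modern ASR systems replace stored templates with statistical or neural models, but the dependence on accurately labeled reference data is the same.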

ASR data support

Jinglianwen Technology has collected “1000 hours of adult Chinese voice mobile phone collection data”, “1200 hours of Korean mobile phone voice data collection”, “1000 hours of Japanese mobile phone voice data collection”, “200 hours of German language data collection”, “id20000 Chinese wake-up word data set” and other data sets that can be used directly for algorithm research, saving algorithm developers research and development time.

As a professional data collection and labeling company, Jinglianwen Technology holds about 100T of its own copyrighted voice data sets, including data sets of English spoken by people from various countries, Mandarin Chinese data sets, and local dialect data sets. All of them were collected with the authorization of the speakers and can help optimize speech recognition algorithms. Jinglianwen also has a professional voice collection and recording studio with strong scene construction capabilities. It maintains a reserve of nearly 10,000 speakers nationwide and supports voice collection in multiple languages, dialects, and environments. With its self-built data labeling platform and a mature labeling, review, and quality inspection process, it supports speech tasks including voice segmentation, ASR voice transcription, voice emotion judgment, voiceprint recognition labeling and other types of data labeling.

Jinglianwen Technology has always focused on various needs in artificial intelligence scenarios such as smart driving, smart home, public safety, smart city, smart medical care, smart finance, smart education, and smart justice, providing underlying technical support for AI technology.

The future direction of speech annotation

The main development directions of speech annotation are TTS and ASR. TTS (text-to-speech) is speech synthesis: it converts text, such as files or web pages stored on a computer, into natural speech quickly and in real time. Its voice controller smooths the rhythm of the spoken output so that it sounds natural to listeners, which makes up for the bluntness and indifference of machine-generated speech. TTS not only helps people with visual impairments read information on computers, but also makes text documents easier to consume. TTS applications include voice-driven mail and voice-sensitive systems, and they are often used together with speech recognition programs.

ASR is speech recognition technology, which converts sound into text. Speech recognition is a prime application of mathematical probability. A recognition system with a high accuracy rate generally depends on a large amount of manually labeled data, so labeling work is, in effect, the process of turning human intelligence into machine intelligence. If voice lights up life, then labeling gathers the wisdom that makes life smarter. The performance of a speech recognition system is roughly determined by four factors: the size of the recognition vocabulary and the complexity of the speech, the quality of the speech signal, whether there is a single speaker or multiple speakers, and the hardware.

For a machine to hold a dialogue with a human, it needs to complete three steps: listening, understanding and answering. None of these is possible without speech recognition technology.

As science and technology continue to develop, many people in the industry believe that voice may become the next important technology platform, and speech recognition (ASR) and speech synthesis (TTS) keep making breakthroughs. Although the underlying theory has made a lot of progress, practical applications still cannot do without data labeling, and the accuracy of the training data greatly affects the performance of the algorithm model.