Introduction:

In an era of data-driven decision-making, the ethics of datasets has become a central concern in technology development. The datasets used to train machine learning models shape outcomes in domains from finance to healthcare and beyond. When those datasets are biased, the resulting models can produce unfair outcomes, which poses significant ethical challenges. This article examines the ethical dimensions of datasets. It covers how to identify and mitigate bias, and why fairness must be built into the development and deployment of machine learning models.

The Ethical Imperative in Datasets:

1. Impact on Decision-Making:

- Datasets influence the decisions and outcomes generated by machine learning models. Whether it’s in hiring processes, lending decisions, or healthcare diagnostics, biased datasets can perpetuate and amplify existing inequalities, affecting individuals and communities.

2. Algorithmic Fairness:

- Algorithmic fairness is the goal of ensuring that a machine learning model's predictions, and its errors, do not systematically favor or disadvantage individuals or groups based on attributes such as race, gender, or age. Ethical datasets are a foundation for achieving algorithmic fairness.

3. Social Implications:

- Biased datasets can have far-reaching social implications, reinforcing stereotypes, exacerbating inequalities, and leading to unjust outcomes. Recognizing the ethical implications of biased datasets is crucial for fostering a technology-driven future that aligns with principles of equity and justice.

Identifying Bias in Datasets:

1. Sampling Bias:

- Sampling bias occurs when the dataset is not representative of the broader population it aims to model. This can lead to skewed predictions and outcomes that do not accurately reflect the realities of diverse groups within the population.

2. Labeling Bias:

- Labeling bias occurs when the labels assigned to data instances are influenced by subjective judgments or systemic prejudices. If training data is labeled in a biased manner, the model will learn and perpetuate those biases during training.

3. Historical Biases:

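- Datasets may encode historical inequities even when the data is collected and measured accurately, because the data reflects a world in which those inequities existed. For example, records of past hiring decisions can capture past discrimination against certain groups. Machine learning models trained on such datasets may inadvertently learn and perpetuate these biases.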

4. Representation Bias:

- Representation bias occurs when certain groups are underrepresented or overrepresented in the dataset. This lack of diversity can result in models that are less accurate, and less fair, when applied to underrepresented groups.

5. Algorithmic Bias:

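- Algorithmic bias refers to biases introduced by the learning algorithm itself, which often magnify biases already present in the training data. For example, a model trained to minimize average error across all records can achieve a low overall error while still performing poorly on a small group. This can lead to discriminatory outcomes and reinforce existing inequalities.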
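A simple first check for sampling and representation bias is to compare each group's share of the dataset with its share of the population the model is meant to serve. The sketch below assumes the reference shares are already known (for example, from census figures). The function name `representation_report` and the 0.8 flagging threshold are illustrative choices, not a standard.

```python
from collections import Counter


def representation_report(groups, reference_shares, min_ratio=0.8):
    """Compare each group's share of a dataset with its share of a reference population.

    Hypothetical helper for illustration. Assumes reference_shares (group -> expected
    population share) is known, e.g. from census data. min_ratio = 0.8 is an
    illustrative flagging threshold, not a standard.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        # Ratio < 1 means the group is rarer in the dataset than in the population.
        ratio = observed / expected if expected > 0 else float("inf")
        report[group] = {
            "dataset_share": observed,
            "reference_share": expected,
            "ratio": ratio,
            "underrepresented": ratio < min_ratio,
        }
    return report


if __name__ == "__main__":
    # Toy data: group "B" is 40% of the reference population but only 20% of the dataset.
    sample = ["A"] * 80 + ["B"] * 20
    population = {"A": 0.6, "B": 0.4}
    for group, row in representation_report(sample, population).items():
        print(group, round(row["ratio"], 2), row["underrepresented"])
```

In the toy data, group B makes up 20% of the dataset but 40% of the reference population. Its ratio is therefore 0.5, and it is flagged. A check like this only catches under-sampling. Labeling bias and historical bias require inspecting the labels and where they came from.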

Mitigating Bias in Datasets:

1. Diverse and Representative Data Collection:

- Ensuring diversity and representation in data collection is foundational to mitigating bias. Deliberately sampling from every group the model will serve, including groups that are harder to reach, makes datasets more comprehensive and more reflective of the complexities within the population.

2. Continuous Monitoring and Auditing:

- Regularly monitoring and auditing datasets for biases is essential. This involves scrutinizing the data for patterns that may lead to biased outcomes and making adjustments as needed throughout the model development lifecycle.

3. Ethical Guidelines for Labeling:

- Establishing clear ethical guidelines for labeling data helps prevent labeling bias. Human annotators should be trained to avoid introducing subjective judgments and to adhere to ethical principles that prioritize fairness and impartiality.

4. De-biasing Techniques:

- De-biasing techniques adjust the data, the training process, or the model's outputs to reduce bias. Examples include re-sampling or reweighting records to address representation bias, re-labeling to correct labeling bias, and adding fairness constraints to the training objective or adjusting decision thresholds to counteract algorithmic bias.

5. Community Engagement and Feedback:

- Engaging with the communities represented in the data is crucial. Seeking feedback and input from diverse stakeholders helps in understanding the nuances of biases and ensures that the dataset is continually refined to be more inclusive and fair.
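Re-sampling, one of the de-biasing techniques listed above, can be sketched in a few lines. This is a minimal illustration of random oversampling. It assumes records are dictionaries with a group field, and `oversample_by_group` is a hypothetical helper. Undersampling the largest group and reweighting records are common alternatives.

```python
import random
from collections import Counter, defaultdict


def oversample_by_group(records, group_key, seed=0):
    """Randomly duplicate records from smaller groups until every group has as
    many records as the largest one.

    Hypothetical helper for illustration: records are assumed to be dicts, and
    group_key names the field that holds the group label.
    """
    if not records:
        return []
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)

    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Sample extra rows with replacement to close the gap to the target size;
        # copy each one so later edits to a duplicate do not affect the original.
        extra = rng.choices(rows, k=target - len(rows))
        balanced.extend(dict(row) for row in extra)
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    data = [{"group": "A", "label": 1} for _ in range(6)]
    data += [{"group": "B", "label": 0} for _ in range(2)]
    balanced = oversample_by_group(data, "group")
    print(Counter(row["group"] for row in balanced))
```

Duplicating records changes how much weight each group receives during training. It adds no new information about that group, though, so it can encourage overfitting to the few original examples. It also does nothing to correct labeling bias.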

Fairness in Machine Learning Models:

1. Fairness Metrics:

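- Fairness metrics provide quantitative measures of whether a model treats groups differently. Demographic parity compares the rate of positive predictions across groups. Disparate impact expresses the same comparison as a ratio: one group's positive-prediction rate divided by another's. Equalized odds compares true-positive and false-positive rates across groups. These metrics help in identifying and addressing disparities in model predictions.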

2. Explainability and Transparency:

- Transparent and interpretable models facilitate the identification of biases. When models are explainable, stakeholders can understand how decisions are made, making it easier to identify and rectify biased patterns in the predictions.

3. Fairness-Aware Algorithms:

- Fairness-aware algorithms are designed to explicitly account for fairness considerations during the training process. These algorithms aim to mitigate biases by incorporating fairness constraints and ensuring equitable treatment across different demographic groups.

4. Model Evaluation across Demographics:

- Evaluating models across diverse demographic groups is essential. Understanding how a model performs for different subgroups helps in uncovering disparities and refining the model to ensure equitable outcomes.

5. Ethical Review Boards:

- Establishing ethical review boards or committees to evaluate the impact of machine learning models on different communities adds an additional layer of oversight. These boards can provide guidance on ethical considerations, especially in sensitive domains like healthcare and criminal justice.
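The group-level metrics described above can be computed directly from a model's predictions. The sketch below assumes binary labels and predictions encoded as 0/1 and one group label per record, over a non-empty input. The function names are hypothetical. It reports four values:

- the demographic parity difference (the largest gap in positive-prediction rate);
- the disparate impact ratio (the lowest rate divided by the highest);
- the true-positive rate gap;
- the false-positive rate gap.

Equalized odds asks for both rate gaps to be zero. The per-group rates also serve as the evaluation across demographics described under "Model Evaluation across Demographics."

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate, and false-positive rate.

    Assumes binary labels and predictions encoded as 0/1 (1 = positive outcome).
    """
    stats = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["pred_pos"] += p
        if t == 1:
            s["pos"] += 1
            s["tp"] += p
        else:
            s["neg"] += 1
            s["fp"] += p
    rates = {}
    for g, s in stats.items():
        rates[g] = {
            "selection_rate": s["pred_pos"] / s["n"],
            # TPR/FPR are undefined (None) when a group has no positives/negatives.
            "tpr": s["tp"] / s["pos"] if s["pos"] else None,
            "fpr": s["fp"] / s["neg"] if s["neg"] else None,
        }
    return rates


def fairness_summary(y_true, y_pred, groups):
    """Hypothetical summary of group fairness metrics over a non-empty input."""
    rates = group_rates(y_true, y_pred, groups)
    sel = [r["selection_rate"] for r in rates.values()]
    tprs = [r["tpr"] for r in rates.values() if r["tpr"] is not None]
    fprs = [r["fpr"] for r in rates.values() if r["fpr"] is not None]
    return {
        # Demographic parity difference: highest minus lowest selection rate.
        "demographic_parity_diff": max(sel) - min(sel),
        # Disparate impact ratio: lowest selection rate divided by highest.
        "disparate_impact_ratio": min(sel) / max(sel) if max(sel) > 0 else None,
        # Equalized odds asks for equal TPR and equal FPR; report both gaps.
        "tpr_gap": max(tprs) - min(tprs) if tprs else None,
        "fpr_gap": max(fprs) - min(fprs) if fprs else None,
    }


if __name__ == "__main__":
    # Toy data: four records per group.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A"] * 4 + ["B"] * 4
    for name, value in fairness_summary(y_true, y_pred, groups).items():
        print(name, None if value is None else round(value, 2))
```

In the toy example, group A receives positive predictions at a rate of 0.75 and group B at 0.25. That gives a disparate impact ratio of about 0.33. A ratio below 0.8 is commonly flagged under the "four-fifths rule" from US employment selection guidelines. For production use, maintained libraries such as Fairlearn and AIF360 offer tested implementations of these metrics.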

Challenges in Ensuring Ethical Datasets:

1. Limited Data Access:

- In certain domains, obtaining diverse and representative datasets may be challenging due to limited access to certain populations or sensitive information. Striking a balance between data access and privacy is crucial in such cases.

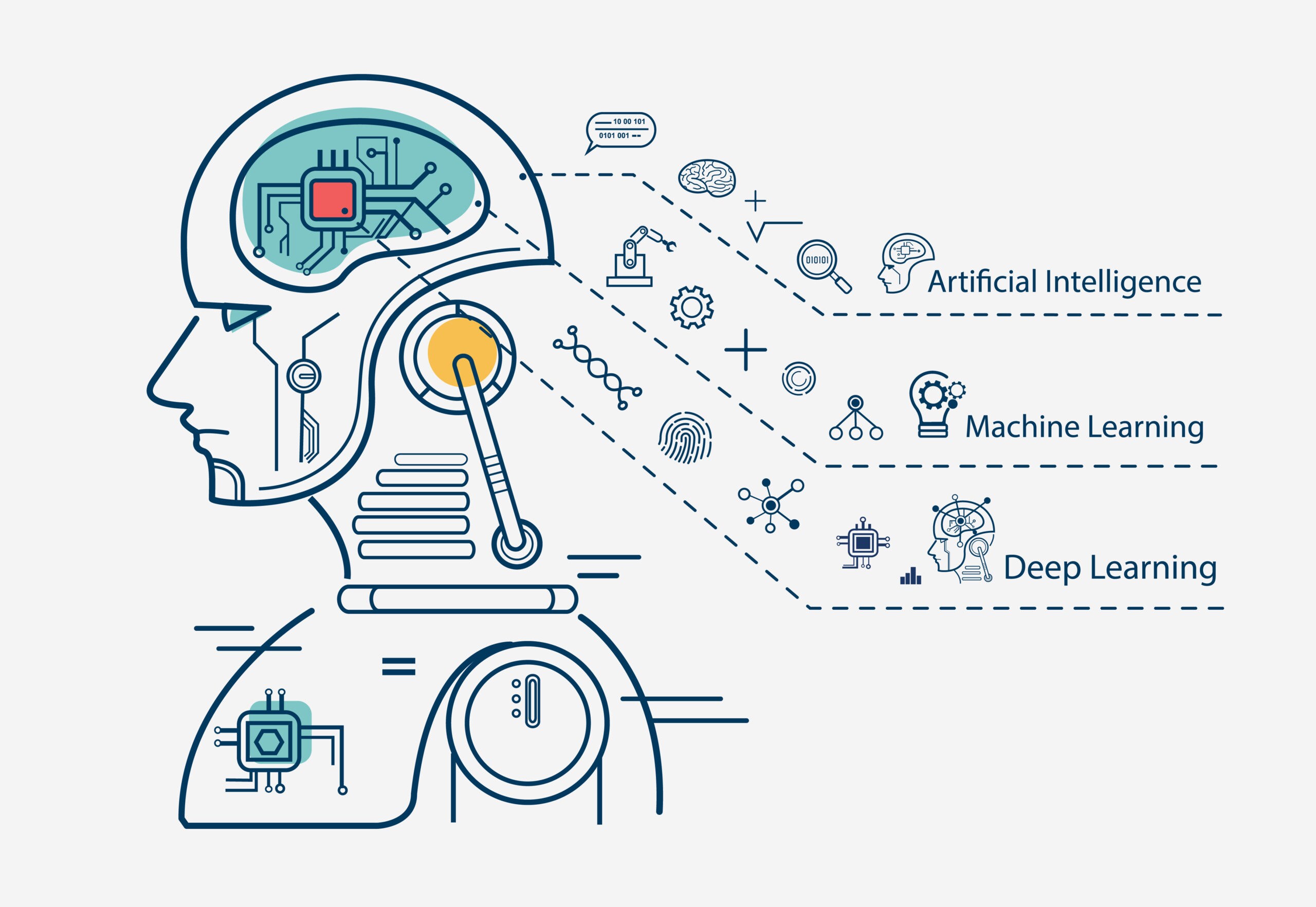

2. Algorithmic Complexity:

- The inherent complexity of machine learning algorithms, especially deep learning models, can make it challenging to identify and understand how biases emerge. Ensuring transparency in algorithmic decision-making remains a persistent challenge.

3. Dynamic Nature of Bias:

- Bias in datasets and models is dynamic and can evolve over time. New biases may emerge, or existing biases may become more pronounced as societal norms shift. Continuous monitoring and adaptability are required to address these challenges.

4. Interdisciplinary Collaboration:

- Addressing ethical considerations in datasets requires collaboration between data scientists, domain experts, ethicists, and community representatives, and coordinating these groups is itself a challenge. When it succeeds, interdisciplinary collaboration enables a holistic approach to identifying and mitigating biases.

5. Lack of Standardization:

- The lack of standardized practices for addressing bias in datasets poses challenges. Developing industry-wide standards and guidelines for ethical dataset collection, annotation, and model development is essential for fostering a consistent and ethical approach.
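Because bias can shift over time, fairness checks such as the metrics discussed under "Fairness in Machine Learning Models" need to be repeated on fresh data rather than run once. The following is a minimal sketch under several assumptions. Predictions are collected in time windows (for example, one week of production traffic per window). An alert is raised when the gap in positive-prediction rates between groups exceeds a chosen tolerance. The 0.1 tolerance and the function names are illustrative, not established values.

```python
from collections import defaultdict


def selection_rate_gap(preds, groups):
    """Largest difference in positive-prediction rate (0/1 predictions) between groups."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for p, g in zip(preds, groups):
        totals[g] += 1
        positives[g] += p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates) if rates else 0.0


def monitor_windows(windows, tolerance=0.1):
    """Hypothetical monitor: recompute the gap for each time window and flag
    windows where it exceeds the tolerance.

    windows: list of (window_id, preds, groups) tuples.
    tolerance: illustrative alert threshold, not a standard value.
    """
    alerts = []
    for window_id, preds, groups in windows:
        gap = selection_rate_gap(preds, groups)
        if gap > tolerance:
            alerts.append((window_id, gap))
    return alerts


if __name__ == "__main__":
    windows = [
        ("week-1", [1, 0, 1, 0], ["A", "A", "B", "B"]),  # both groups at 0.5
        ("week-2", [1, 1, 1, 0], ["A", "A", "B", "B"]),  # A at 1.0, B at 0.5
    ]
    print(monitor_windows(windows))
```

A window in which only one group appears yields a gap of 0 and is never flagged. In practice, it is therefore worth also tracking how many records each group contributes per window.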

Real-World Implications:

1. Criminal Justice System:

- Biases in criminal justice datasets have been known to contribute to discriminatory outcomes. From predictive policing algorithms to sentencing models, biased datasets can reinforce systemic injustices within the criminal justice system.

2. Healthcare Disparities:

- Biased healthcare datasets can contribute to disparities in diagnostics and treatment. For example, if a dataset is not representative of diverse demographic groups, the resulting models may not accurately predict disease risks or treatment outcomes for all populations.

3. Financial Services:

- Biases in financial datasets can lead to discriminatory lending practices. Models trained on biased data may result in unequal access to financial services, impacting marginalized communities and perpetuating economic disparities.

4. Employment and Hiring:

- Bias in hiring datasets can perpetuate inequalities in employment opportunities. If historical biases are present in the training data, machine learning models may inadvertently favor certain demographics over others, reinforcing existing disparities.

5. Education Systems:

- Biases in educational datasets can impact the fairness of educational assessments and interventions. Unfair outcomes in educational models can contribute to disparities in access to resources and opportunities for different student groups.

Future Directions in Ethical Datasets:

1. Explainable AI and Interpretability:

- The integration of explainable AI and interpretability features in machine learning models is crucial for understanding and addressing biases. Future models should prioritize transparency to enhance accountability and trust.

2. Cross-Domain Collaboration:

- Cross-domain collaboration between technologists, ethicists, policymakers, and community representatives is essential. Collaborative efforts can lead to the development of standardized ethical guidelines and practices that transcend individual sectors.

3. AI Ethics Education:

- Integrating AI ethics education into the training of data scientists and machine learning practitioners is crucial. Building awareness of ethical considerations and providing the tools to address biases should be a fundamental component of AI education.

4. Community-Centric Approaches:

- Emphasizing community-centric approaches in data collection and model development ensures that diverse voices are heard. Involving communities in the decision-making processes related to datasets fosters inclusivity and reduces the risk of perpetuating biases.

5. Regulatory Frameworks:

- Establishing robust regulatory frameworks for ethical AI practices is a key consideration for the future. Regulatory bodies can play a crucial role in ensuring adherence to ethical guidelines, promoting accountability, and safeguarding against the misuse of AI technologies.

Conclusion:

As datasets continue to power the evolution of machine learning, addressing ethical considerations has become paramount. Mitigating bias and fostering fairness in datasets are foundational to building responsible AI systems that align with principles of equity and justice. The identification and correction of biases in datasets require a concerted effort from interdisciplinary teams, involving data scientists, domain experts, ethicists, and community representatives. The real-world implications of biased datasets underscore the urgency of adopting ethical practices and standards in the development and deployment of machine learning models. Looking ahead, a commitment to transparency, community engagement, and continuous vigilance is essential to navigating the ethical landscape of datasets and ensuring the responsible and equitable use of AI technologies in diverse societal contexts.