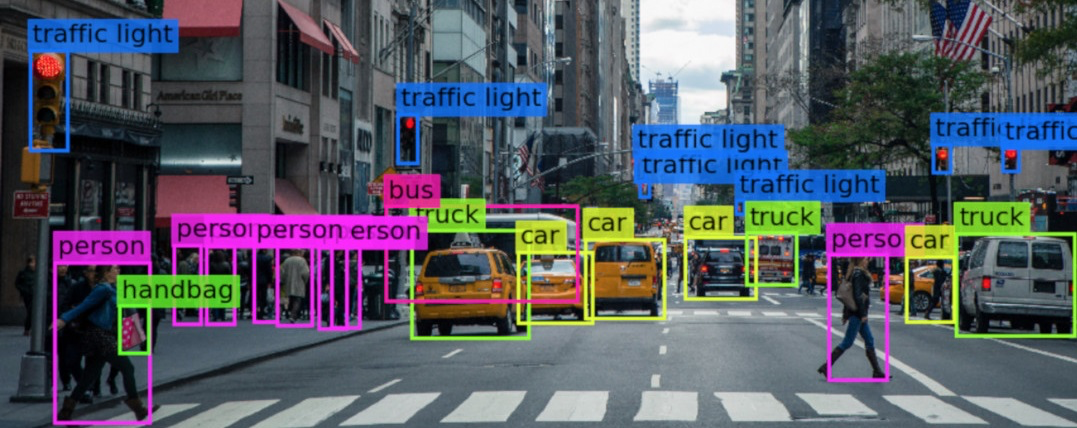

How can we achieve the highest quality in our AI/ML projects? The answer, many scientists believe, is high-quality training data. But securing such high-quality data is not always easy. So the question is: “What are the best practices for data labeling?”

One might think of data labeling as a tedious job that requires no strategic thinking. Annotators simply process their data and submit it!

However, the reality is different. Data labeling can be long and repetitive, but it is never trivial, especially when it comes to managing labeling projects. In fact, many AI projects fail because of poor training data quality and inefficient management.

1. Define and plan labeling projects

Every technology project needs to start with a definition and planning step, even for a task as seemingly simple as data labeling.

First, clearly identify the key elements of the project, including:

Key stakeholders

Overall goals

Methods of communication and reporting

Characteristics of the data to be labeled

How the data should be labeled

Data Labeling Best Practices – Training Dataset

Key stakeholders

There are three main types of key stakeholders:

Product manager of the overall AI product: The product manager must determine the practical application of the project and what kind of data needs to go into the AI/ML model.

Annotation project manager: Their main responsibilities are day-to-day operations, and they are responsible for the quality of the output. They work directly with the annotators and conduct the necessary training. If you have annotation project managers, make sure they have subject matter expertise so they can start working on projects right away.

Annotators: Ideally, annotators are well trained in the labeling tools (including any automated data labeling tools).

Once you have identified your stakeholders, you can assign their responsibilities. For example, the annotation project manager is responsible for the overall quality of the dataset, while how the data is used in the AI/ML model is entirely the responsibility of the product manager.

Each stakeholder brings their own responsibilities, skills, and perspectives that contribute to the best possible outcome. If your project lacks any of these stakeholders, it risks performing poorly.

Overall objective

In any data labeling project, you need to know what output you want so that you can define the measures needed to achieve it. Once the key stakeholders are identified, project managers can combine their input into overall goals.

2. Manage the project timeline

The timeline is another element that needs careful attention. Every stakeholder must be involved in defining the expectations, constraints, and dependencies that affect the timeline, because these factors can have a big impact on the project’s budget and schedule.

Data Labeling Best Practices – Managing Timelines

Here are some ground rules to help teams develop an appropriate schedule:

All stakeholders must be involved in creating the timeline.

The schedule should be stated clearly (dates, times, etc.).

The schedule must also include time for training and for creating guidelines.

Any issues or uncertainties related to the data or the labeling process should be communicated to all stakeholders and documented as a risk where applicable.

During this process, each stakeholder contributes to the schedule as follows:

Product managers must take into account the overall needs of the project. What is the deadline? What are the requirements and the expected user experience? Since product managers are not directly involved in the data labeling process, they need to understand the complexities of the project in order to set reasonable expectations.

Annotation managers need to understand the complexity of the project in order to assign annotators with the right knowledge to it. What subject knowledge does the project require? How many people are needed? How will they ensure high quality and keep to the schedule? These are the questions they need answered.

Data annotators need to understand what type of data they are working with, what type of annotation is required, and what knowledge the job requires. If they lack that knowledge, they must be trained by specialists.
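The staffing and timeline questions above often come down to simple arithmetic. The sketch below is a minimal illustration, assuming a constant per-annotator throughput and a single "review overhead" fraction for QA and rework; the function names, parameters, and figures (50,000 items, 250 items per annotator per day, and so on) are hypothetical placeholders, not data from a real project.

```python
import math


def estimate_working_days(total_items: int, items_per_annotator_per_day: float,
                          annotators: int, training_days: int,
                          review_overhead: float = 0.0) -> int:
    """Training days plus labeling days, with labeling inflated by a review/rework fraction."""
    if annotators <= 0 or items_per_annotator_per_day <= 0:
        raise ValueError("annotators and throughput must be positive")
    daily_capacity = annotators * items_per_annotator_per_day
    labeling_days = total_items * (1 + review_overhead) / daily_capacity
    return training_days + math.ceil(labeling_days)


def annotators_needed(total_items: int, items_per_annotator_per_day: float,
                      available_days: int, training_days: int,
                      review_overhead: float = 0.0) -> int:
    """Smallest team that finishes within `available_days`, training included."""
    labeling_days = available_days - training_days
    if labeling_days <= 0 or items_per_annotator_per_day <= 0:
        raise ValueError("need positive labeling time and throughput")
    items_per_day_required = total_items * (1 + review_overhead) / labeling_days
    return math.ceil(items_per_day_required / items_per_annotator_per_day)


# Hypothetical inputs: 50,000 items, 250 items/annotator/day, 3 training days, 25% review overhead.
print(estimate_working_days(50_000, 250, annotators=10, training_days=3, review_overhead=0.25))  # 28
print(annotators_needed(50_000, 250, available_days=23, training_days=3, review_overhead=0.25))  # 13
```

Real throughput varies by annotator and by data difficulty, so numbers like these are a starting point for the stakeholder discussion rather than a commitment.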

3. Create guidelines and train the workforce

Before jumping into the labeling process, you must consider guidelines and training so the team can achieve the highest quality in their work.

Create guidelines

To keep labeled data consistent, teams need complete guidelines for each specific data labeling project.

The guidelines should draw on all available information about the project. If you have run a similar project before, you can base the new guidelines on its guidelines as well.

Data Labeling Best Practices – Creation Guide

Here are some basic rules for creating data annotation guidelines:

Annotation project managers need to take into account the complexity and length of the project; a more complex project usually calls for more detailed guidelines.

The guidelines should include instructions for the tools and for the annotation itself. They must clearly explain what each tool does and how to use it.

There must be examples of each label the annotator must use. This helps annotators understand data scenarios and expected outputs more easily.

Labeling project managers should consider including end-goals or downstream goals in labeling guidelines to provide context and motivation for staff.

The annotation project manager needs to ensure that the guidelines are consistent with the rest of the project’s documentation to avoid conflicts and confusion.
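Some of these rules, such as having an example for every label, can be checked mechanically if the guideline's label list is kept in a structured form. The sketch below assumes a hypothetical `LabelSpec` structure (name, definition, example items); the labels and file paths are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LabelSpec:
    """One entry in a labeling guideline (hypothetical structure)."""
    name: str
    definition: str
    examples: list[str] = field(default_factory=list)  # e.g. paths to example items


def guideline_problems(labels: list[LabelSpec]) -> list[str]:
    """Return human-readable problems; an empty list means these checks passed."""
    problems = []
    seen = set()
    for spec in labels:
        if spec.name in seen:
            problems.append(f"duplicate label: {spec.name}")
        seen.add(spec.name)
        if not spec.definition.strip():
            problems.append(f"missing definition: {spec.name}")
        if not spec.examples:
            problems.append(f"no examples: {spec.name}")
    return problems


guideline = [
    LabelSpec("car", "Any passenger vehicle, even if partly occluded.", ["examples/car_01.jpg"]),
    LabelSpec("pedestrian", "A person on foot.", []),
]
print(guideline_problems(guideline))  # ['no examples: pedestrian']
```

A check like this only catches structural gaps; whether the definitions and examples are actually clear still needs human review.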

Train the workforce

With the guidelines in place, labeling team managers can run training based on them.

Again, don’t think of labeling as an easy job. It can be repetitive, but it also requires a lot of training and subject matter knowledge. Training data annotators requires attention to several considerations, including:

Nature of project: Is the project complex? Does the data require subject matter knowledge?

Time frame of the project: The length of the project determines how much total time can be spent on training.

Resources: The individuals or groups available to manage the workforce.

After training, annotators should fully understand the task and produce annotations that are both valid (accurate) and reliable (consistent).
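Reliability is often measured by having two annotators label the same items and computing an agreement statistic. Cohen's kappa, sketched below, is one common choice (our choice for illustration, not one prescribed here); it corrects raw agreement for the agreement expected by chance. The example labels are hypothetical. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic if you prefer a library.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for every item; kappa is undefined,
        # so this sketch reports 1.0 by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.333
```

Here the annotators agree on 4 of 6 items (about 0.67), but since chance agreement is 0.5, kappa is only about 0.33, a signal that the guidelines or training may need another pass. Validity is measured separately, for example by comparing annotations with a small set of items whose correct labels are already known.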

Data Labeling Best Practices – Training the Workforce

During training, the annotation manager needs to ensure that:

Training is based on a single set of guidelines to ensure consistency.

If a new annotator joins after the project has started, the training is repeated for them, either in person or through recorded video.

All questions are answered before the project begins.

Any confusion or misunderstanding is addressed at the beginning of the project to avoid mistakes later.

Output quality criteria are clearly defined during training, and any quality assurance methods are disclosed to the annotators.

Annotators receive written feedback so they know which quality indicators their work will be measured against.

During the annotation process, the quality of the training dataset depends on how the annotation manager leads the annotation team. To ensure the best results, you can take the following steps:

Once the project requirements are clear, set reasonable goals and timetables for the annotators.

Complete the estimation and testing phases before full-scale annotation begins.

Define the quality assurance process and who will be involved in it.

Annotation managers need to organize collaboration among annotators. Who will help whom? Who will check whose work?

Break the project into smaller stages and give feedback on incorrect work after each stage.

The annotation manager ensures technical support for the annotation tools throughout the annotation process to prevent project delays. If a problem cannot be solved within the team, they should seek a workable solution from the tool provider or the project manager.
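The stage-by-stage feedback loop in the steps above can be sketched as follows. This assumes the team seeds each stage with a few "gold" items whose correct labels are already known (a common QA technique, not one the steps above prescribe); the record shape, the 0.9 threshold, and all item ids and labels are hypothetical.

```python
from collections.abc import Iterator
from typing import Optional

Annotation = tuple[str, str]  # hypothetical shape: (item_id, label)


def split_into_stages(annotations: list[Annotation], stage_size: int) -> Iterator[list[Annotation]]:
    """Yield consecutive chunks of at most `stage_size` annotations."""
    if stage_size <= 0:
        raise ValueError("stage_size must be positive")
    for start in range(0, len(annotations), stage_size):
        yield annotations[start:start + stage_size]


def review_stage(stage: list[Annotation], gold: dict[str, str]) -> tuple[Optional[float], list[str]]:
    """Score one stage against the gold items it contains.

    Returns (accuracy on gold items, ids of wrongly labeled gold items);
    accuracy is None if the stage contains no gold items.
    """
    checked = [(item_id, label) for item_id, label in stage if item_id in gold]
    wrong = [item_id for item_id, label in checked if label != gold[item_id]]
    if not checked:
        return None, wrong
    return (len(checked) - len(wrong)) / len(checked), wrong


def stages_needing_feedback(annotations: list[Annotation], gold: dict[str, str],
                            stage_size: int, threshold: float = 0.9):
    """List (stage_number, accuracy, wrong_ids) for stages scoring below `threshold`."""
    flagged = []
    for number, stage in enumerate(split_into_stages(annotations, stage_size), start=1):
        accuracy, wrong = review_stage(stage, gold)
        if accuracy is not None and accuracy < threshold:
            flagged.append((number, accuracy, wrong))
    return flagged


annotations = [("a1", "cat"), ("a2", "dog"), ("a3", "cat"),
               ("a4", "cat"), ("a5", "dog"), ("a6", "dog")]
gold = {"a1": "cat", "a2": "cat", "a4": "cat", "a6": "dog"}
print(stages_needing_feedback(annotations, gold, stage_size=3))  # [(1, 0.5, ['a2'])]
```

Reviewing in stages means a misunderstanding like the one on item `a2` is caught and corrected early, before it spreads through the rest of the dataset.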

For more details on how to manage the data labeling process, check out: The Essential Guide to Ensuring Data Labeling Quality

4. Feedback and change

After labeling is done, it is important to evaluate the overall results and how the team got the job done. By doing this, you can confirm the validity and reliability of your annotations before delivering them to other teams or clients.

If more rounds of annotation are planned, revisit the project definition, training process, and workforce and make strategic adjustments so that the next round of annotation can be more efficient.

It is also important to implement processes to detect data drift and anomalies that may require additional labeling.
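One lightweight way to detect drift is to compare the distribution of some property of newly collected data with the same property in data that has already been labeled. The sketch below uses the population stability index (PSI) on a categorical property; PSI is our choice of technique for illustration, and the "day/night" property and sample counts are hypothetical. The 0.25 threshold is a commonly quoted rule of thumb, not a universal standard.

```python
import math
from collections import Counter


def population_stability_index(reference: list[str], current: list[str], eps: float = 1e-4) -> float:
    """PSI between two categorical samples; 0.0 means identical distributions."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref_counts, cur_counts = Counter(reference), Counter(current)
    psi = 0.0
    for category in ref_counts.keys() | cur_counts.keys():
        # Clip proportions at eps so a category missing from one sample does not cause log(0).
        p = max(ref_counts[category] / len(reference), eps)
        q = max(cur_counts[category] / len(current), eps)
        psi += (q - p) * math.log(q / p)
    return psi


reference = ["day"] * 80 + ["night"] * 20  # e.g. lighting conditions in already-labeled images
incoming = ["day"] * 50 + ["night"] * 50   # the same property in newly collected images
score = population_stability_index(reference, incoming)
if score > 0.25:  # rule-of-thumb threshold; tune for your project
    print(f"PSI {score:.3f}: distribution shift, consider labeling more of the new data")
```

With these hypothetical counts the score is about 0.416, so the check would flag the new data as a candidate for additional labeling.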