Approach to Interpretable Machine Learning

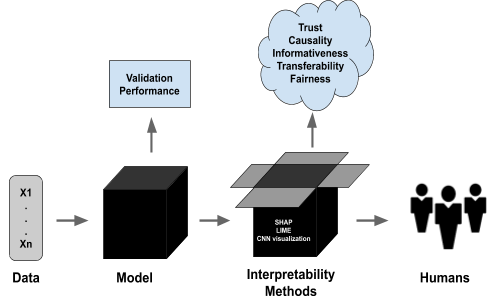

Although modern machine learning and deep learning methods allow for complex and in-depth data analytics, the predictive models they generate are often highly complex and lack transparency. Explainable machine learning (XAI) methods are used to improve the interpretability of these complex models, and in doing so improve transparency. However, the inherent fitness of these explainable methods can be hard to evaluate.

In particular, methods to evaluate the fidelity of an explanation to the underlying black box require further development, especially for tabular data. In this paper, we (a) propose a three-phase approach to developing an evaluation method; (b) adapt an existing evaluation method, designed primarily for image and text data, to evaluate explanations of machine learning models trained on tabular data; and (c) evaluate two popular explainable methods using this evaluation method.

Our evaluations suggest that the internal mechanism of the underlying predictive machine learning model, the internal mechanism of the explainable method used, and model and data complexity all affect explanation fidelity. Given that explanation fidelity is so sensitive to the context, tools, and data used, we could not identify either explainable method as superior to the other.

Modern machine learning and deep learning techniques have enabled the analysis of complex data and supported decision-making (Tan et al, 2020). However, the models produced by these advanced techniques are often difficult for humans to interpret. The resulting lack of transparency affects both the safety of stakeholders and fairness towards them.

Explainable machine learning (XAI) methods are used to improve the interpretability of these complex “black box” models, thereby increasing transparency and enabling informed decision-making. Despite this, methods to assess the quality of explanations generated by such explainable methods are so far under-explored. In particular, functionally grounded evaluation methods, which measure the inherent ability of explainable methods in a given situation, are often specific to a particular type of dataset or explainable method.

A key measure of functionally grounded explanation fitness is explanation fidelity, which assesses the correctness and completeness of the explanation with respect to the underlying black box predictive model.

Evaluations of fidelity in the literature can generally be classified as one of the following: external fidelity evaluation, which assesses how well the prediction of the underlying model and the explanation agree, and internal fidelity evaluation, which assesses how well the explanation matches the decision-making processes of the underlying model.
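As an illustration of the external case only, agreement can be sketched as the fraction of instances on which two sets of predicted labels match. This is a minimal sketch under assumptions that are not taken from this paper: the explanation is assumed to be a surrogate that can itself produce a label for each instance, and the function name `external_fidelity` and the labels below are hypothetical.

```python
def external_fidelity(model_labels, explanation_labels):
    """Fraction of instances where the explanation's label matches the model's.

    Both arguments are equal-length sequences of predicted labels.
    The agreement-rate definition is an illustrative assumption.
    """
    if len(model_labels) != len(explanation_labels):
        raise ValueError("label sequences must have the same length")
    if not model_labels:
        raise ValueError("at least one instance is required")
    matches = sum(m == e for m, e in zip(model_labels, explanation_labels))
    return matches / len(model_labels)


# Hypothetical predictions for five instances.
black_box = ["Positive", "Negative", "Positive", "Positive", "Negative"]
surrogate = ["Positive", "Negative", "Negative", "Positive", "Negative"]

agreement = external_fidelity(black_box, surrogate)
print(agreement)
```

A high agreement rate says only that the outputs coincide. It does not show that the explanation reflects the model's internal decision process, which is the concern of internal fidelity.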

While methods to evaluate external fidelity are relatively common in the literature, methods to evaluate internal fidelity for black box models are generally limited to text and image data, rather than tabular data.

In this paper, we propose a novel evaluation method based on a three-phase approach: (1) the creation of a fully transparent, inherently interpretable white box model, and the evaluation of explanations against this model; (2) the use of the white box as a proxy to refine and improve the evaluation of explanations generated for a black box model; and (3) the testing of the fidelity of explanations for a black box model using the refined method from the second phase.

The main contributions of this work are as follows:

- The design of a three-phase approach to developing a fidelity evaluation method.
- The development of a fidelity evaluation method for black box models trained on tabular data.
- The evaluation of two common and popular local feature attribution explainable methods (LIME and SHAP) using the proposed evaluation approach and method.

This paper is structured as follows. The following section (section 2) will provide a more detailed introduction to the field of XAI, as well as evaluations of explanations. Section 3 will outline the fidelity evaluation undertaken as part of this work, and section 4 will describe the experimental setup used to implement this approach. The results of the experiments are outlined in section 5 and section 6 will conclude this work.

Background and Related Works

Explainable AI

The “black box problem” of machine learning arises from the inherent complexity and sophisticated internal data representations of many modern machine learning and deep learning algorithms. While prediction models created by such algorithms may produce more accurate results, this accuracy comes at the cost of human interpretability. As such, the research theme of XAI has arisen to provide the ability to interpret the decision-making of black box prediction models, to ensure system quality and facilitate informed decision-making.

Evaluating Explanation Fidelity

The evaluation method developed in this work is a functionally-grounded evaluation. Specifically, we develop an evaluation method to assess explanation fidelity with respect to the original predictive model. The evaluation of fidelity in many ways remains an open question in the field of XAI.

The term “fidelity” is generally used to refer to a measure of how faithful and relevant an explanation is to the underlying black box model. As such, fidelity is an integral requirement of explanations, given that it is a measure of explanation accuracy, and a lack of fidelity in explanations could hamper decision-making or enable misinformed decision-making.

Fidelity Evaluation Approach

We used a three-phase approach to develop an appropriate method to evaluate the fidelity of explanations for a black box model trained on tabular data. This approach begins with the creation of a fully transparent, inherently interpretable white box model (in this case, a Decision Tree), and the evaluation of explanations directly against this white box model.

Following this, the white box is used as a proxy for a black box model to develop and refine a method to evaluate explanations for a black box model, which ensures that the method is suitable and accurate. In the final phase, this developed method is applied to test the fidelity of explanations for a black box model.

Phase 1: Determining Fidelity using White Box Model

The extraction of the true features and explanation features differs depending on the predictive model and explainable methods used. For a standard, single decision tree predictive model, the most appropriate approach to extract the true features for any given instance is to identify the set of unique features that fall along the decision path for that instance. For example, for the tree in Figure 1, the model has returned a result of “Positive” (diabetic) based on an individual’s test results because their glucose level is under 127.5 and they have experienced more than 5 pregnancies.

Thus, the “true features” for this instance are “Glucose” and “Pregnancies”. For feature attribution explanations, or predictive models that apply a coefficient to each feature, the features can be sorted by this weighting and the most appropriate number of features can be chosen. To test the recall of the most relevant features when using a decision tree, an appropriate sample of explanation features is required.
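The extraction and comparison described above can be sketched as follows. This is a minimal illustration, not the implementation used in this work: the tree below is a hypothetical toy whose first two splits follow the example in the text, and the `BMI` split, the `Age` feature, the attribution weights, and the choice of taking as many explanation features as there are true features are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    feature: Optional[str] = None    # None marks a leaf
    threshold: float = 0.0
    left: Optional["Node"] = None    # branch taken when value <= threshold
    right: Optional["Node"] = None   # branch taken when value > threshold
    label: Optional[str] = None      # prediction stored at a leaf


def true_features(root, instance):
    """Return the prediction and the unique features on the decision path."""
    node, seen = root, []
    while node.feature is not None:
        if node.feature not in seen:
            seen.append(node.feature)
        go_left = instance[node.feature] <= node.threshold
        node = node.left if go_left else node.right
    return node.label, seen


def top_k_features(attributions, k):
    """Sort features by absolute attribution weight and keep the k largest."""
    ranked = sorted(attributions, key=lambda f: abs(attributions[f]), reverse=True)
    return ranked[:k]


def recall(true_feats, explanation_feats):
    """Share of true features that also appear among the explanation features."""
    if not true_feats:
        return 0.0
    return len(set(true_feats) & set(explanation_feats)) / len(true_feats)


# Hypothetical toy tree; thresholds other than 127.5 and 5 are invented.
tree = Node("Glucose", 127.5,
            left=Node("Pregnancies", 5,
                      left=Node(label="Negative"),
                      right=Node(label="Positive")),
            right=Node("BMI", 30.0,
                       left=Node(label="Negative"),
                       right=Node(label="Positive")))

instance = {"Glucose": 110.0, "Pregnancies": 7, "BMI": 28.0, "Age": 45}
prediction, path_feats = true_features(tree, instance)

# Hypothetical feature attribution weights for the same instance.
attributions = {"Glucose": 0.42, "Age": -0.31, "Pregnancies": 0.12, "BMI": 0.05}
explanation_feats = top_k_features(attributions, k=len(path_feats))
score = recall(path_feats, explanation_feats)
print(prediction, path_feats, explanation_feats, score)
```

In this toy case the explanation ranks “Age” above “Pregnancies”, so only one of the two true features is recovered. Choosing a larger sample of explanation features would raise recall, which is why the sample size needs to be chosen with care.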

Phase 2: White Box as Proxy for Black Box

The general approach of this method is to identify the features deemed to be important by the explanation and perturb or remove these features from the original input. A prediction is then generated from this new input, and the original and new predictions are compared (Du et al, 2019; Fong and Vedaldi, 2017; Nguyen, 2018; Samek et al, 2017).

When evaluating predictive models trained on image or text data, the relevant features can simply be removed from the original input or blurred, and the degree of change in the prediction probability for the originally predicted class is used as an indicator of the correctness of the explanation (Du et al, 2019; Fong and Vedaldi, 2017; Nguyen, 2018; Samek et al, 2017). While this “removal” of features is relatively simple for image or text data, it does not hold for tabular data, where “gaps” in the input are automatically imputed by the predictive model, or are otherwise treated as some improbable value, such as infinity.

Thus, in previous work (Velmurugan et al, 2021), rather than removing features, we attempted to alter them through perturbation to reflect a value outside of the range of values considered to be relevant by the explanation.
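The perturbation idea can be sketched as below. This is an illustrative sketch under stated assumptions rather than the procedure from the cited work: `predict_proba` is a hypothetical stand-in for a black box, the relevant intervals and observed value ranges are invented, and averaging the absolute change in predicted probability over random perturbations is one possible way to summarise the comparison.

```python
import math
import random


def predict_proba(x):
    """Hypothetical stand-in for a black box: probability of the positive class."""
    z = 0.04 * (x["Glucose"] - 120.0) + 0.3 * (x["Pregnancies"] - 3.0)
    return 1.0 / (1.0 + math.exp(-z))


def perturb_outside(value_range, relevant, rng):
    """Sample a value within the observed range but outside the relevant interval."""
    lo, hi = value_range
    r_lo, r_hi = relevant
    segments = []
    if r_lo > lo:
        segments.append((lo, r_lo))
    if r_hi < hi:
        segments.append((r_hi, hi))
    if not segments:
        raise ValueError("relevant interval covers the whole observed range")
    a, b = rng.choice(segments)
    return rng.uniform(a, b)


def probability_shift(instance, relevant_ranges, value_ranges, n_samples=50, seed=0):
    """Mean absolute change in predicted probability after perturbing the
    features named by the explanation to values outside their relevant ranges."""
    rng = random.Random(seed)
    original = predict_proba(instance)
    shifts = []
    for _ in range(n_samples):
        altered = dict(instance)
        for feat, relevant in relevant_ranges.items():
            altered[feat] = perturb_outside(value_ranges[feat], relevant, rng)
        shifts.append(abs(original - predict_proba(altered)))
    return sum(shifts) / len(shifts)


# Hypothetical instance, observed value ranges, and explanation intervals.
instance = {"Glucose": 150.0, "Pregnancies": 6, "Age": 45.0}
value_ranges = {"Glucose": (50.0, 200.0), "Age": (20.0, 90.0)}

# An explanation claiming "Glucose > 127.5" is relevant to the prediction.
glucose_shift = probability_shift(instance, {"Glucose": (127.5, 200.0)}, value_ranges)

# A control: an explanation naming a feature the stand-in model ignores.
age_shift = probability_shift(instance, {"Age": (40.0, 90.0)}, value_ranges)
print(glucose_shift, age_shift)
```

Under these assumptions, moving a feature that the model relies on out of its claimed range changes the prediction, while moving a feature the model ignores leaves it unchanged. A larger shift is therefore read here as evidence that the explanation identified a feature and range that matter to the model.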

Phase 3: Determining Fidelity using Black Box Model

The parameter heuristics identified in phase 2 are applied during this phase to evaluate explanations for a black box model. As noted, the general approach of the fidelity evaluation method proposed in this work mirrors ablation-based methods used for text and image data, wherein the data is altered through the “removal” of relevant features and the prediction probabilities for the original and altered inputs are compared (Du et al, 2019; Fong and Vedaldi, 2017; Nguyen, 2018; Samek et al, 2017). Two major changes are made to adapt this method for tabular data.

Conclusion

Given the increasing use of explainable machine learning methods to understand the decision-making of complex, opaque predictive models, it becomes necessary to understand how well an explainable method can interpret any given model. However, evaluation methods to achieve this remain an open question in the field of XAI, particularly for tabular data.

In this work, we proposed the following: a three-phase approach to developing an evaluation method for XAI; an evaluation method to assess the explanations provided by feature attribution explainable methods for tabular data; and an evaluation of LIME and SHAP, two popular feature attribution methods, for machine learning models trained on six well-known, open-source datasets.

As part of our study, we refined existing evaluation approaches into an evaluation method for assessing the fidelity of explanations for black box models trained on tabular data, and defined measures of fidelity. Our evaluations of LIME and SHAP using this method revealed that the internal mechanism of the underlying predictive machine learning model, the internal mechanism of the explainable method, and model and data complexity all affected explanation fidelity.

We also found that, because explanation fidelity is so sensitive to context, neither explainable method was superior to the other, and that the boundaries set by explanations were rarely firm. We also highlight the future work needed to further refine and extend the proposed approach and method.