What is Image Annotation?

Image annotation is the process of labeling the images in a dataset to train a machine learning model. The annotations mark the features that you need the system to recognize. Training ML models with labeled data is called supervised learning.

Labeling tasks often involve manual work, sometimes with computer assistance. Machine learning engineers predetermine labels, called “classes,” and provide image-specific information to computer vision models. After the model is trained and deployed, it will predict and recognize those predetermined features in new images that have not yet been labeled.

The role of image annotation

Labeling images is necessary for functional datasets because it tells the model being trained which parts of the image are important (the classes), so that it can later use what it learned to identify those classes in new, never-before-seen images.

The role of image annotation for computer vision

Image annotation plays an important role in computer vision, a field that enables computers to gain high-level understanding from digital images or videos and to see and interpret visual information as humans do.

Computer vision technology has enabled several amazing artificial intelligence applications, such as self-driving cars, tumor detection, and drones. However, most notable applications of computer vision are not possible without image annotation.

Image annotation is a major step in creating most computer vision models. Annotated datasets are essential components of machine learning and deep learning for computer vision.

This article details the purpose of image annotation, the types of image annotation, and the techniques used to make image annotation possible.

In particular, this article will discuss:

1. Definition of image annotation. What is image annotation and why is it needed?

2. The process of labeling images. How to successfully annotate image datasets.

3. Types of image annotation. Popular algorithms and unique strategies for annotating images.

Types of Image Annotation

Image annotation is frequently used for image classification, object detection, object recognition, image segmentation, and other machine learning and computer vision models. It is a technique for creating a reliable dataset for a model to train on, and thus is useful for supervised and semi-supervised machine learning models.

For more information on the difference between supervised and unsupervised machine learning models, we recommend Semi-supervised Machine Learning Models and Introduction to Self-Supervised Learning: What It Is, Examples and Methods for Computer Vision. In these articles, we discuss their differences and why some models require annotated datasets while others do not.

Different purposes of image annotation (image classification, object detection, etc.) require different annotation techniques to develop effective datasets.

1. Image Classification

Image classification is a type of machine learning model that requires each image to have a single label identifying the entire image. The image annotation process for image classification models aims to identify the presence of similar objects in the images of a dataset.

It is used to train an AI model to recognize an object in an unlabeled image that looks similar to a class in the annotated images used to train the model. Labeling images for image classification is also called tagging. Image classification therefore aims simply to recognize the presence of a specific object and assign it to a predefined class.

An example of an image classification model is to “detect” different animals in an input image. In this example, annotators are given a set of images of different animals and asked to classify each image based on a label for a specific animal species. In this case, the animal species is the category and the image is the input.

Feeding the annotated images as data to a computer vision model allows the model to be trained on each animal’s unique visual characteristics. The model will then be able to classify new, unlabeled images of animals into the relevant species.
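To make the "one label per image" idea concrete, here is a minimal sketch of how classification annotations might be stored and checked before training. The class names, file names, and the `encode_labels` helper are hypothetical and chosen only for illustration; real projects usually follow whatever format their annotation tool exports.

```python
from collections import Counter

# Hypothetical predefined classes (animal species), fixed before labeling starts.
CLASSES = ["cat", "dog", "horse"]
CLASS_TO_INDEX = {name: index for index, name in enumerate(CLASSES)}

# Hypothetical annotations: exactly one label for each whole image.
annotations = {
    "img_001.jpg": "cat",
    "img_002.jpg": "dog",
    "img_003.jpg": "cat",
    "img_004.jpg": "horse",
}


def encode_labels(annotations):
    """Map each image to its integer class index, rejecting unknown labels."""
    encoded = {}
    for filename, label in annotations.items():
        if label not in CLASS_TO_INDEX:
            raise ValueError(f"{filename}: unknown class {label!r}")
        encoded[filename] = CLASS_TO_INDEX[label]
    return encoded


encoded = encode_labels(annotations)
class_counts = Counter(annotations.values())  # quick check of class balance

print(encoded)
print(class_counts)
```

Counting labels per class is a cheap way to spot an unbalanced dataset before any model is trained.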

2. Object detection and object recognition

Object detection or recognition models take image classification a step further to find the presence, location, and number of objects in the image. For this type of model, the image annotation process requires drawing boundaries around each detected object in each image, allowing us to localize the exact location and number of objects present in the image. So the main difference is detecting classes in an image instead of classifying the whole image into a class (image classification).

The location of each class is a parameter in addition to the class itself, whereas in image classification the location within the image is irrelevant because the entire image is assigned one class. Objects within an image can be annotated with shapes such as bounding boxes or polygons.

One of the most common examples of object detection is person detection. It requires computing devices to constantly analyze frames to identify specific object characteristics and identify the current object as a person. Object detection can also be used to detect any anomalies by tracking changes in features over a specific time period.
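As a rough illustration of how a detection annotation differs from a classification label, the sketch below stores a class and a location for every object in one image and then counts the objects per class. The dictionary layout, the `(x_min, y_min, x_max, y_max)` box convention, and the `validate_and_count` helper are assumptions made for this example, not a standard format.

```python
from collections import Counter

# Hypothetical annotation for one image: every object has a class AND a location.
image_annotation = {
    "file": "street_001.jpg",
    "width": 640,
    "height": 480,
    "objects": [
        {"label": "person", "box": (50, 120, 130, 400)},
        {"label": "person", "box": (300, 100, 380, 410)},
        {"label": "car", "box": (400, 250, 630, 420)},
    ],
}


def validate_and_count(annotation):
    """Check that each box lies inside the image, then count objects per class."""
    counts = Counter()
    for obj in annotation["objects"]:
        x_min, y_min, x_max, y_max = obj["box"]
        inside = (
            0 <= x_min < x_max <= annotation["width"]
            and 0 <= y_min < y_max <= annotation["height"]
        )
        if not inside:
            raise ValueError(f"box {obj['box']} is outside the image or empty")
        counts[obj["label"]] += 1
    return counts


object_counts = validate_and_count(image_annotation)
print(object_counts)
```

The same image can contain several classes and several objects of one class, which is exactly the information a single classification label cannot carry.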

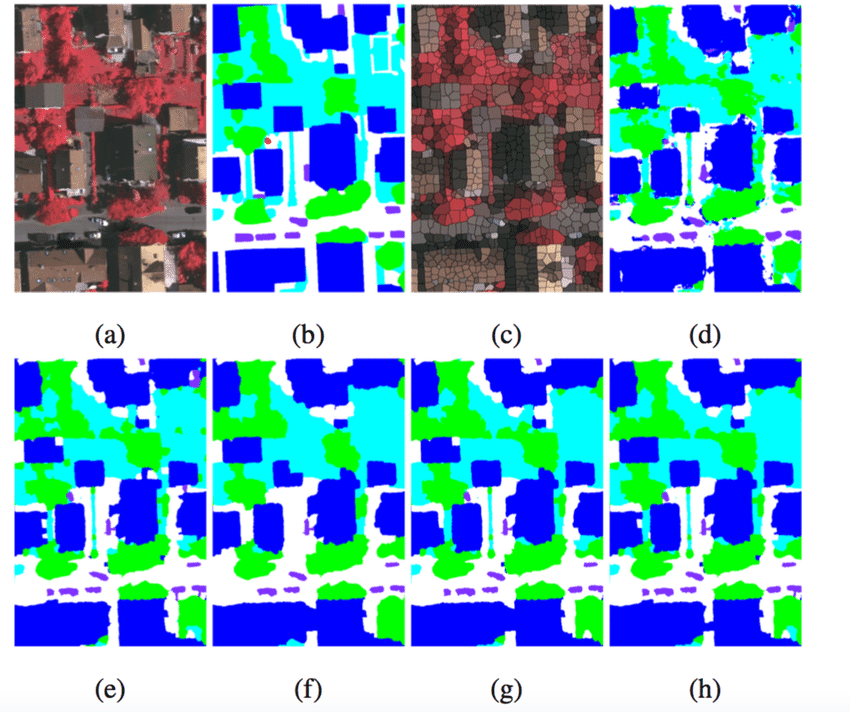

3. Image Segmentation

Image segmentation is a type of image annotation that involves partitioning an image into multiple segments. It is used to locate objects and boundaries (lines, curves, etc.) in an image. It operates at the pixel level, assigning each pixel in an image to a specific object or class. It is used in projects that require higher accuracy in classifying inputs.

Image segmentation is further divided into the following three categories:

Semantic segmentation delineates the boundaries between similar objects, assigning every pixel a class without separating individual objects of the same class. This method is used when very precise knowledge of the presence, location, and size or shape of objects in an image is required.

Instance segmentation identifies the presence, location, number, and size or shape of objects in an image. Instance segmentation therefore helps to mark each individual object in an image separately.

Panoptic segmentation combines semantic and instance segmentation. Panoptic segmentation thus provides data labeled for background (semantic segmentation) and objects (instance segmentation) within an image.
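The difference between the three categories is easiest to see on a tiny mask. The following sketch uses a hypothetical 3x6 image with a background class and two cars; the class ids, instance ids, and helper names are assumptions for illustration only.

```python
# Semantic mask: one class id per pixel (0 = background, 1 = car).
semantic_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
]

# Instance mask: one object id per pixel (0 = no countable object, 1 and 2 = two cars).
instance_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
]


def to_panoptic(semantic, instance):
    """Combine both masks into (class_id, instance_id) pairs per pixel."""
    return [
        [(class_id, instance_id) for class_id, instance_id in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic, instance)
    ]


def pixel_counts(mask):
    """Count how many pixels carry each distinct value in a mask."""
    counts = {}
    for row in mask:
        for value in row:
            counts[value] = counts.get(value, 0) + 1
    return counts


panoptic_mask = to_panoptic(semantic_mask, instance_mask)

print(pixel_counts(semantic_mask))   # both cars share class id 1
print(pixel_counts(instance_mask))   # the two cars are counted separately
print(pixel_counts(panoptic_mask))   # class and instance together
```

In the semantic mask the two cars are indistinguishable, in the instance mask they are separate objects, and the panoptic mask keeps both the background class and the per-object ids.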

4. Boundary identification

This type of image annotation identifies the lines or boundaries of objects within the image. Boundaries can include the edges of specific objects or areas of terrain present in the image.

Once images are properly labeled, a model trained on them can identify similar patterns in unlabeled images. Boundary recognition plays an important role in the safe operation of autonomous vehicles.

5. Shape annotation

In image annotation, images are annotated using different annotation shapes depending on the chosen technique. In addition to shapes, annotation techniques such as lines, splines, and landmarks can also be used for image annotation.

The following are popular image annotation techniques, chosen according to the use case.

5.1 Bounding box

Bounding boxes are the most commonly used annotation shapes in computer vision. A bounding box is a rectangular box that defines the position of an object in an image. Bounding boxes can be two-dimensional (2D) or three-dimensional (3D).
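As a small sketch of working with 2D bounding boxes, the code below converts a box from an assumed `(x, y, width, height)` layout to corner coordinates and computes intersection over union (IoU), a common way to measure how closely two boxes agree, for example when comparing two annotators. The function names and sample boxes are hypothetical.

```python
def xywh_to_corners(box):
    """Convert (x, y, width, height) to (x_min, y_min, x_max, y_max)."""
    x, y, width, height = box
    return (x, y, x + width, y + height)


def iou(box_a, box_b):
    """Intersection over union of two boxes given as corner coordinates."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


box_a = xywh_to_corners((10, 10, 20, 20))  # corners (10, 10, 30, 30)
box_b = xywh_to_corners((20, 20, 20, 20))  # corners (20, 20, 40, 40)
overlap = iou(box_a, box_b)
print(round(overlap, 3))
```

An IoU of 1.0 means the boxes are identical and 0.0 means they do not overlap at all.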

5.2 Polygons

Polygons are used to label irregularly shaped objects in images. The annotator marks each vertex of the intended object, and the edges connecting the vertices outline its shape.
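A polygon annotation is essentially an ordered list of vertices. As a minimal sketch, the code below computes the enclosed area with the shoelace formula and derives the tightest enclosing bounding box; the sample polygon and function names are made up for illustration.

```python
def polygon_area(vertices):
    """Area of a simple polygon from its ordered vertices (shoelace formula)."""
    total = 0.0
    count = len(vertices)
    for i in range(count):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % count]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_to_box(vertices):
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the polygon."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical house-shaped object: a 4x3 rectangle with a triangular roof.
polygon = [(0, 0), (4, 0), (4, 3), (2, 5), (0, 3)]
area = polygon_area(polygon)
box = polygon_to_box(polygon)
print(area, box)
```

Comparing the polygon area with the area of its bounding box gives a feel for how much background a box annotation would include for the same object.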

5.3 Landmarks

Landmark annotation marks the basic points of interest in an image. These points are called landmarks or keypoints. Landmarking is very important in face recognition.
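Keypoints are often stored as named pixel coordinates. The sketch below normalizes them by the image size so that the annotation stays valid if the image is resized; the keypoint names, coordinates, and the `normalize_keypoints` helper are hypothetical.

```python
def normalize_keypoints(keypoints, width, height):
    """Scale pixel coordinates into the range [0, 1] relative to the image size."""
    return {
        name: (x / width, y / height)
        for name, (x, y) in keypoints.items()
    }


# Hypothetical facial landmarks in pixel coordinates on a 320x240 image.
face_keypoints = {
    "left_eye": (120, 80),
    "right_eye": (200, 80),
    "nose_tip": (160, 130),
}

normalized = normalize_keypoints(face_keypoints, width=320, height=240)
print(normalized["nose_tip"])
```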

5.4 Lines and splines

Lines and splines annotate images with straight or curved lines. They are important in boundary recognition for labeling sidewalks, road markings, and other boundary indicators.
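As an illustration, a straight-line annotation can be stored as a polyline, and a curved one as control points joined by a spline. The sketch below measures a polyline and evaluates a uniform Catmull-Rom segment, which is one common spline choice and not necessarily what a given annotation tool uses. All coordinates and function names are hypothetical.

```python
import math


def polyline_length(points):
    """Total length of a polyline given as a list of (x, y) points."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )


def catmull_rom_point(p0, p1, p2, p3, t):
    """Point on the uniform Catmull-Rom segment between p1 and p2, t in [0, 1]."""
    def coordinate(a, b, c, d):
        return 0.5 * (
            2 * b
            + (-a + c) * t
            + (2 * a - 5 * b + 4 * c - d) * t ** 2
            + (-a + 3 * b - 3 * c + d) * t ** 3
        )
    return (
        coordinate(p0[0], p1[0], p2[0], p3[0]),
        coordinate(p0[1], p1[1], p2[1], p3[1]),
    )


# Hypothetical lane marking annotated as a straight polyline.
lane_line = [(0, 0), (3, 4), (6, 8)]
lane_length = polyline_length(lane_line)

# Hypothetical curved curb annotated with four control points.
curb = [(0, 0), (10, 5), (20, 5), (30, 0)]
start = catmull_rom_point(*curb, t=0.0)  # passes through the second control point
middle = catmull_rom_point(*curb, t=0.5)
end = catmull_rom_point(*curb, t=1.0)    # passes through the third control point
print(lane_length, start, middle, end)
```

The spline lets an annotator describe a smooth curve with a few control points instead of clicking many short straight segments.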

How long does image annotation take?

The time required to annotate an image depends largely on the complexity of the image, the number of objects, the complexity of the annotation (polygons vs. boxes), and the required level of accuracy and detail.

Usually, even image annotation companies find it hard to say how long annotation will take until they have labeled some samples and can estimate from the results. Even then, there is no guarantee that annotation quality and consistency will allow a precise estimate. While automatic image annotation and semi-automated tools help speed up the process, a human element is still required to ensure a consistent level of quality (hence “supervised”).
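A sample-based estimate of this kind can be sketched in a few lines. The timings and dataset size below are invented purely for illustration, and the `estimate_annotation_hours` helper is hypothetical; the spread of the sample times is reported alongside the mean because, as noted above, the estimate is not guaranteed to be precise.

```python
import statistics


def estimate_annotation_hours(sample_seconds, total_images):
    """Extrapolate total labeling time from timings of a few labeled samples."""
    mean_seconds = statistics.mean(sample_seconds)
    # Sample standard deviation needs at least two timings.
    stdev_seconds = statistics.stdev(sample_seconds) if len(sample_seconds) > 1 else 0.0
    return {
        "mean_seconds_per_image": mean_seconds,
        "stdev_seconds_per_image": stdev_seconds,
        "estimated_hours": mean_seconds * total_images / 3600,
    }


# Hypothetical timings (seconds per image) measured on four sample images.
sample_seconds = [30, 45, 60, 45]
estimate = estimate_annotation_hours(sample_seconds, total_images=7200)
print(estimate)
```

A large standard deviation relative to the mean is a hint that more samples are needed before committing to a schedule.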

In general, simple objects with fewer control points (windows, doors, signs, lights) require much less labeling time than region-based objects with more control points (forks, wine glasses, sky). Tools that create preliminary annotations with semi-automatic image annotation and deep learning models help to improve both annotation quality and speed.

Video annotation is based on the concept of image annotation. For video annotation, features are manually labeled on each video frame (image) to train a machine learning model for video detection. Therefore, the dataset for the video detection model consists of images of individual video frames.
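The idea that a video dataset is a collection of annotated frames can be sketched as follows. The clip name, frame indices, labels, and the `video_to_samples` helper are hypothetical; the box layout `(x_min, y_min, x_max, y_max)` is an assumption for this example.

```python
def video_to_samples(video_name, frame_annotations):
    """Turn per-frame annotations into a list of image-level training samples."""
    samples = []
    for frame_index in sorted(frame_annotations):
        samples.append({
            "image_id": f"{video_name}_frame{frame_index:05d}",
            "frame_index": frame_index,
            "objects": frame_annotations[frame_index],
        })
    return samples


# Hypothetical manual labels for three frames of one clip.
frame_annotations = {
    0: [{"label": "person", "box": (50, 120, 130, 400)}],
    1: [{"label": "person", "box": (54, 120, 134, 400)}],
    2: [{"label": "person", "box": (58, 121, 138, 401)}],
}

samples = video_to_samples("clip01", frame_annotations)
print(samples[0]["image_id"], len(samples))
```

Each sample can then be handled exactly like an annotated still image.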

What’s next

Image annotation is the task of annotating images with data labels. Labeling tasks usually involve manual work with computer-aided help.