Introduction:

In the ever-evolving landscape of technology, two paradigms, Edge Computing and Machine Learning (ML), are converging to redefine the way we process and analyze data. Edge Computing, with its focus on decentralized processing at the network’s periphery, pairs naturally with ML, enabling more efficient processing and real-time decision-making. In this blog, we will investigate the integration of machine learning models with edge computing devices, exploring how this synergy enables more efficient and responsive processing without relying solely on centralized cloud servers.

Understanding Edge Computing:

What is Edge Computing?

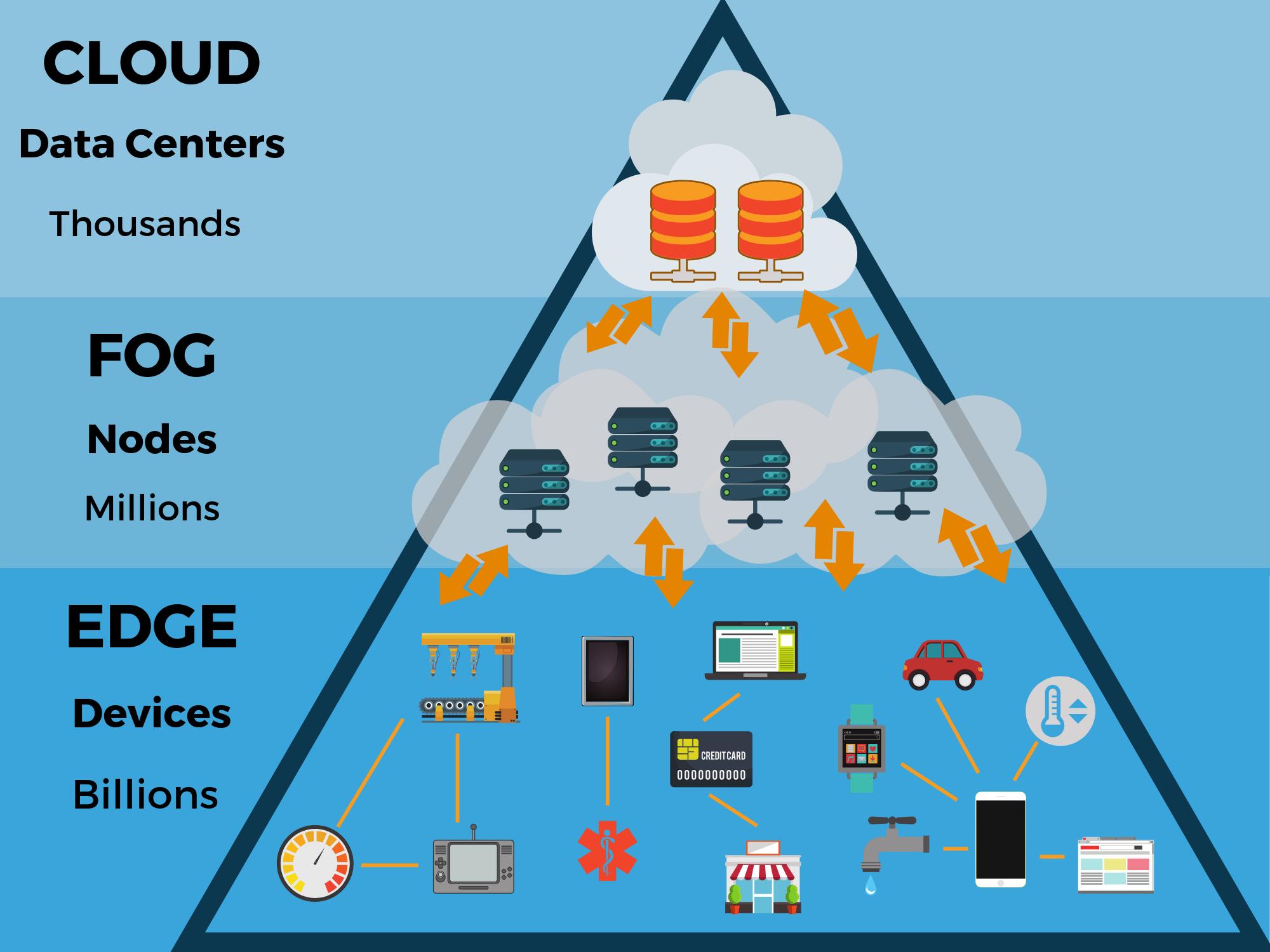

Edge Computing represents a distributed computing paradigm that brings computation closer to the data source or “edge” of the network. Unlike traditional cloud computing, where data is sent to centralized servers for processing, edge computing processes data locally, reducing latency and enabling real-time analysis. This paradigm shift is driven by the increasing demand for faster response times, reduced bandwidth usage, and enhanced privacy.

Core Characteristics of Edge Computing:

- Proximity to Data Source: Edge Computing systems are deployed close to the data source, minimizing the physical distance between where data is generated and where it is processed. This proximity reduces latency and improves the overall performance of applications.

- Decentralized Processing: Edge devices have computing capabilities, allowing them to perform data processing locally. This decentralized approach reduces the reliance on centralized servers, distributing the computational load and increasing system efficiency.

- Real-Time Processing: The proximity and decentralized nature of edge computing facilitate real-time data processing. This is particularly crucial for applications requiring instantaneous decision-making, such as autonomous vehicles, industrial automation, and augmented reality.

- Bandwidth Efficiency: By processing data locally, edge computing minimizes the need to transmit large volumes of raw data to centralized servers. This not only conserves bandwidth but also reduces the associated costs and improves the overall network efficiency.
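To make the bandwidth point concrete, here is a minimal sketch of local aggregation: instead of uploading every raw sample, the device sends a short summary of each window. The sensor values, the window size, and the `summarize_window` helper are assumptions made for illustration, not part of any particular platform.

```python
import json
import statistics


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "count": len(readings),
        "mean": round(statistics.fmean(readings), 3),
        "min": min(readings),
        "max": max(readings),
    }


# Hypothetical window: 600 temperature samples collected on the device.
raw_window = [20.0 + (i % 10) * 0.1 for i in range(600)]

# Compare the size of what would be uploaded in each case.
raw_payload = json.dumps(raw_window).encode("utf-8")
summary_payload = json.dumps(summarize_window(raw_window)).encode("utf-8")

print(len(raw_payload), "bytes raw vs", len(summary_payload), "bytes summarized")
```

How much is saved depends on the window size and on which statistics the application actually needs; the raw samples can still be kept locally for a while if they might be requested later.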

Integration of Machine Learning with Edge Computing:

Leveraging ML in Edge Computing:

1. On-Device Machine Learning:

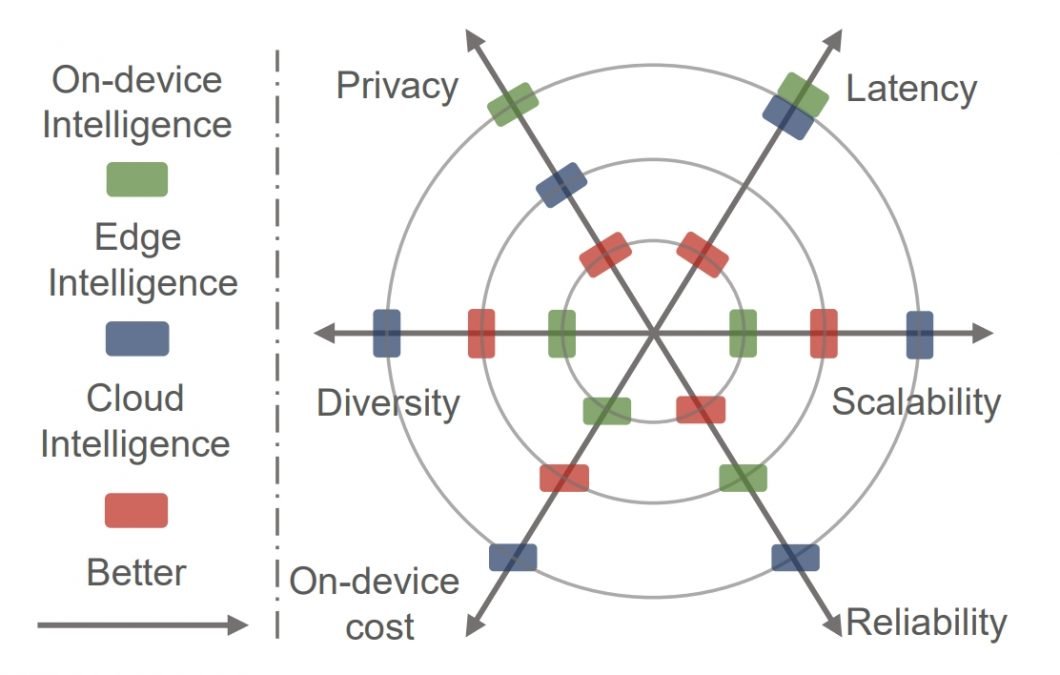

Edge devices, including smartphones, IoT devices, and edge servers, increasingly have enough computational power to execute machine learning models locally. On-device machine learning enables real-time inference without relying on a continuous connection to the cloud, which improves both user experience and privacy.

2. Reduced Latency for Critical Applications:

Certain applications, such as autonomous vehicles and industrial automation, demand ultra-low latency for decision-making. By deploying machine learning models at the edge, critical tasks can be processed locally, ensuring minimal delay and improving overall system responsiveness.

3. Enhanced Privacy and Security:

Edge Computing, coupled with machine learning, addresses privacy concerns by processing sensitive data locally. This reduces the need to transmit raw data to the cloud, mitigating potential security risks associated with data transfer and storage on external servers.

4. Offline ML Capabilities:

Edge devices often operate in environments with intermittent or limited connectivity. On-device machine learning models enable these devices to perform inference offline, ensuring continuous functionality even when a reliable network connection is not available.
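One way to picture offline operation is a store-and-forward pattern: the device keeps classifying locally and queues its results until a connection returns. The sketch below assumes a hypothetical `OfflineBuffer` class and uses a fixed threshold rule as a stand-in for a real on-device model.

```python
from collections import deque


class OfflineBuffer:
    """Keep recent inference results locally until an uplink is available."""

    def __init__(self, max_items=1000):
        # Bounded queue: when full, the oldest result is dropped first.
        self._queue = deque(maxlen=max_items)

    def record(self, result):
        self._queue.append(result)

    def flush(self, send, is_online):
        """Send buffered results in order while the device is online."""
        sent = 0
        while self._queue and is_online():
            send(self._queue[0])   # An item is removed only after send returns.
            self._queue.popleft()
            sent += 1
        return sent


def classify(temperature_c, threshold_c=75.0):
    # Stand-in for a real on-device model: a fixed threshold rule.
    return "overheat" if temperature_c > threshold_c else "normal"


# Inference keeps running while the device is disconnected.
buffer = OfflineBuffer(max_items=3)
for reading in (70.0, 80.0, 72.0, 90.0):
    buffer.record({"reading": reading, "label": classify(reading)})

# Once connectivity returns, the buffered results are uploaded.
uploaded = []
sent = buffer.flush(send=uploaded.append, is_online=lambda: True)
print(sent, [item["label"] for item in uploaded])
```

The bounded queue is a deliberate trade-off: on a device with little storage, dropping the oldest results is usually preferable to running out of memory during a long outage.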

Real-World Applications:

1. Smart Cities:

Edge Computing and ML find extensive applications in creating smart cities. From intelligent traffic management and surveillance to waste management optimization, deploying machine learning models at the edge allows for real-time decision-making to enhance city infrastructure and services.

2. Healthcare:

In healthcare, edge devices equipped with ML capabilities can analyze patient data in real time. This enables quicker diagnosis, personalized treatment plans, and continuous monitoring of chronic conditions without relying solely on cloud servers, ensuring timely responses to critical health indicators.

3. Retail:

Edge Computing facilitates real-time inventory management and customer analytics in the retail sector. Machine learning models at the edge can analyze customer behavior, optimize shelf stocking, and enhance the overall shopping experience without causing delays associated with centralized cloud processing.

4. Industrial Internet of Things (IIoT):

Edge Computing and ML are pivotal in industrial settings. By deploying ML models on edge devices, factories can perform predictive maintenance, monitor equipment health, and optimize production processes in real time, leading to increased efficiency and reduced downtime.
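As a rough illustration of equipment health monitoring on an edge device, the sketch below flags readings that deviate sharply from recent history using a rolling z-score. This is a simple statistical stand-in rather than a trained ML model, and the `VibrationMonitor` class, window size, and threshold are all assumed values.

```python
from collections import deque
import statistics


class VibrationMonitor:
    """Flag readings that deviate strongly from a rolling window of history."""

    def __init__(self, window=50, z_threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value):
        """Return True if the value looks anomalous compared with recent data."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline.
            self.history.append(value)
        return anomalous


monitor = VibrationMonitor()
# Hypothetical vibration amplitudes during normal operation.
normal_flags = [monitor.update(1.0 + 0.1 * (i % 2)) for i in range(40)]
# A sudden spike, such as a failing bearing might produce.
spike_flag = monitor.update(5.0)
print(any(normal_flags), spike_flag)
```

Because the check needs only a small window of numbers, it can run on very constrained hardware and raise an alert without sending the raw signal anywhere.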

Challenges and Considerations:

1. Resource Constraints:

Edge devices typically have limited computational resources compared to powerful cloud servers. Deploying ML models on resource-constrained devices requires optimization and consideration of model size, complexity, and inference speed.
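A common optimization for model size is quantization, which stores weights as small integers instead of floating-point values. The following is a minimal sketch of symmetric 8-bit quantization in plain Python; the weight values are made up, and real toolchains apply this per layer or per channel with more care.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to the integer range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


# Hypothetical weights from one layer of a model.
weights = [0.82, -1.27, 0.05, 0.0, 1.10]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q, scale, max_error)
```

Stored as one byte each, the quantized weights take a quarter of the space of 32-bit floats, at the cost of a rounding error of at most half the scale per weight. Whether that error is acceptable has to be checked against the model's accuracy on real data.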

2. Data Privacy and Security:

While edge computing enhances privacy by processing data locally, it also introduces security challenges. Edge devices may be more susceptible to physical tampering or unauthorized access, requiring robust security measures to protect sensitive information.

3. Model Updates and Maintenance:

Managing machine learning models on edge devices involves challenges related to updating and maintaining the models. Ensuring that edge devices are equipped with the latest models and patches while minimizing disruptions to ongoing operations is a complex task.
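One piece of this problem, replacing a model file without leaving the device in a broken state, can be sketched as follows. The example verifies a SHA-256 digest before installing and swaps the file in with `os.replace`; the `install_model` function, file names, and model bytes are hypothetical, and a real update pipeline would also need signing, versioning, and rollback.

```python
import hashlib
import os
import tempfile


def install_model(new_bytes, expected_sha256, target_path):
    """Install a model file only if its digest matches, swapping it in atomically."""
    actual = hashlib.sha256(new_bytes).hexdigest()
    if actual != expected_sha256:
        return False  # Keep the current model; the download is corrupt or altered.

    # Write to a temporary file in the same directory, then rename over the target.
    directory = os.path.dirname(os.path.abspath(target_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as tmp_file:
            tmp_file.write(new_bytes)
            tmp_file.flush()
            os.fsync(tmp_file.fileno())
        os.replace(tmp_path, target_path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
    return True


# Demonstration with placeholder bytes standing in for real model files.
with tempfile.TemporaryDirectory() as workdir:
    model_path = os.path.join(workdir, "model.bin")
    with open(model_path, "wb") as f:
        f.write(b"model-v1")

    update = b"model-v2"
    good_digest = hashlib.sha256(update).hexdigest()

    rejected = install_model(update, "0" * 64, model_path)
    accepted = install_model(update, good_digest, model_path)
    with open(model_path, "rb") as f:
        installed = f.read()

print(rejected, accepted, installed)
```

Writing to a temporary file first means that inference code reading `model.bin` sees either the old model or the new one, never a half-written file.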

4. Interoperability and Standardization:

The diverse landscape of edge devices and platforms necessitates interoperability and standardization efforts. Ensuring that ML models can seamlessly run across different edge devices and environments is crucial for widespread adoption.

The Future of Edge Computing and ML:

1. Edge-Cloud Synergy:

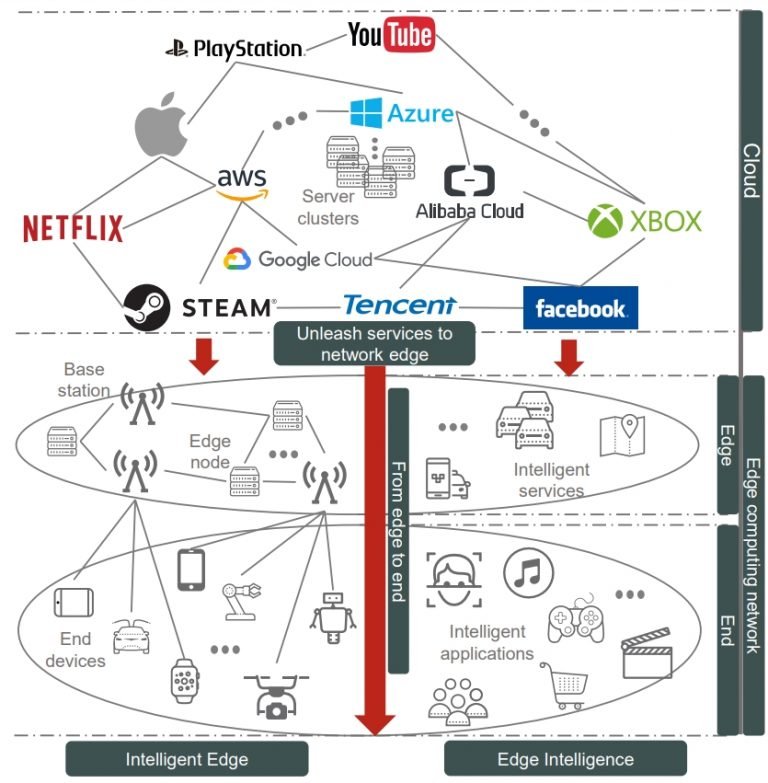

The future of computing lies in a seamless integration of edge and cloud resources. Edge devices will work collaboratively with centralized cloud servers, leveraging the strengths of both paradigms to deliver efficient and scalable solutions.
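One simple form this collaboration could take is confidence-based offloading: the edge model answers when it is confident and defers to the cloud otherwise. The sketch below assumes a hypothetical `route_prediction` function, an arbitrary threshold of 0.8, and a `cloud_predict` callable standing in for a network request.

```python
def route_prediction(edge_scores, cloud_predict, confidence_threshold=0.8):
    """Use the edge result when confident; otherwise defer to the cloud model."""
    label, confidence = max(edge_scores.items(), key=lambda item: item[1])
    if confidence >= confidence_threshold:
        return label, "edge"
    # Low confidence: pay the network round trip for a larger model's answer.
    return cloud_predict(), "cloud"


# Hypothetical class scores produced by a small on-device model.
confident = route_prediction({"cat": 0.95, "dog": 0.05}, cloud_predict=lambda: "cat")
uncertain = route_prediction({"cat": 0.55, "dog": 0.45}, cloud_predict=lambda: "dog")

print(confident, uncertain)
```

The threshold controls the trade-off: a higher value sends more requests to the cloud, increasing latency and bandwidth use, while a lower value keeps more decisions local at some risk to accuracy.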

2. Advancements in Edge Device Capabilities:

As edge devices become more powerful, with enhanced processing capabilities and improved energy efficiency, the scope for deploying complex machine learning models at the edge will expand. This evolution will drive innovation across various industries.

3. 5G Connectivity Impact:

The deployment of 5G networks will further enhance the capabilities of edge computing. The high data transfer speeds and low latency offered by 5G will enable more sophisticated machine learning applications at the edge, transforming industries like augmented reality and autonomous vehicles.

4. Edge AI Ecosystem Growth:

The growth of the Edge AI ecosystem will be driven by collaborations between hardware manufacturers, software developers, and AI researchers. This collaborative effort will result in optimized ML models, edge devices with specialized AI accelerators, and innovative applications across diverse domains.

Conclusion:

The integration of machine learning with edge computing is a transformative force shaping the future of technology. By decentralizing data processing and enabling real-time decision-making at the network’s periphery, this synergy addresses critical challenges associated with latency, privacy, and bandwidth efficiency.

As we move forward, overcoming challenges related to resource constraints, security, and interoperability will be pivotal. The collaborative efforts of researchers, developers, and industry leaders will play a crucial role in unlocking the full potential of edge computing and machine learning, paving the way for a future where intelligent, real-time applications are an integral part of our daily lives. The convergence of Edge Computing and ML is not just a technological evolution; it is a paradigm shift that promises a more responsive, efficient, and decentralized computing landscape.