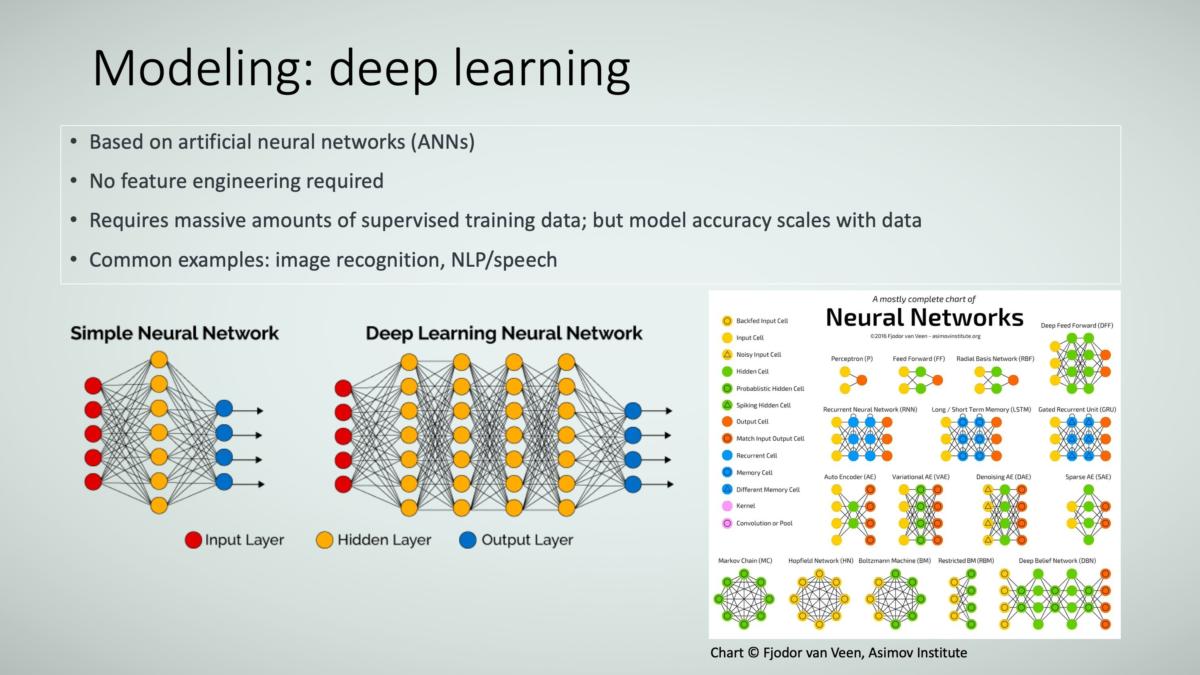

Hey there, fellow data enthusiasts! Are you looking to build your own image deep-learning model? If so, one of the most important steps you’ll need to take is creating a large-scale image dataset to train your model. But where do you start? How do you collect, label, and store images in a way that’s efficient and effective?

Fear not, because we’ve got you covered! In this comprehensive guide, we’ll take you through the step-by-step process of creating your image dataset, including everything from planning and preparation to the nitty-gritty details of annotation and organization. So grab a cup of coffee (or tea, if that’s your thing), and let’s dive into the world of image data collection.

Understanding Your Image Dataset Needs

When it comes to building an image dataset, the first step is to understand the purpose that the dataset will serve. Whether the purpose is for research, an AI or machine learning model, or just for fun, knowing the purpose will help determine what type of data is needed and how much of it.

For instance, if the purpose of the image dataset is for a research project, then the data must be of high quality and have a specific level of detail that will aid in the study.

On the other hand, if the purpose is just for fun, then the dataset can be more relaxed, varied, and open-ended. If an AI or machine learning model will use the dataset, then it must be structured to make it easy for the system to learn and recognize patterns in the images.

Therefore, clarity on the image dataset’s purpose is crucial in building a successful and effective dataset.

When building an image dataset, one of the first things to consider is the size and complexity of your data. As such, you need to determine how many images you require and what types of images meet your needs. Are you looking for a massive set of thousands of photographs or just a handful of well-curated ones? Additionally, you must decide on the kind of images needed – simple or more complex ones with multiple objects.

It’s important to remember that the type of images in your dataset will significantly impact how accurate and effective any machine learning model you train on it will be. Therefore, spending time researching and planning the optimal size and complexity of your image dataset is crucial in obtaining reliable results.

Next, when making an image dataset, you need to consider the quality requirements the images need to meet to give accurate results.

The resolution of the images is a big part of figuring out how good the dataset is. High or low-resolution images may be required depending on the focus of the research or project. Additionally, including specific features such as color, orientation, and other visual characteristics is critical.

These features help to create a more comprehensive and detailed dataset that can be utilized for various purposes. Developing an image dataset requires careful consideration of its quality requirements and features, which helps ensure that the dataset meets its intended purpose.
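
To make the resolution requirement concrete, here is a minimal sketch that reads the width and height from a PNG file's header and checks them against a minimum size. It uses only the Python standard library. The function names and the 224-pixel threshold are assumptions for illustration, not values this guide prescribes, and other formats such as JPEG would need a different header parser or an imaging library.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_dimensions(data: bytes) -> tuple[int, int]:
    """Return (width, height) read from the IHDR chunk at the start of PNG bytes."""
    # Layout: 8-byte signature, 4-byte chunk length, 4-byte chunk type "IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a valid PNG header")
    width, height = struct.unpack(">II", data[16:24])
    return width, height

def meets_min_resolution(data: bytes, min_width: int, min_height: int) -> bool:
    """True if the PNG is at least min_width x min_height pixels."""
    width, height = png_dimensions(data)
    return width >= min_width and height >= min_height

# Hypothetical usage: only the first 24 bytes of each file are needed.
# with open("photo.png", "rb") as f:
#     ok = meets_min_resolution(f.read(24), 224, 224)
```

Running a check like this over every incoming file is a cheap way to reject images that are too small before they reach the annotation stage.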

Preparing Resources for Scaling

As the importance of image datasets continues to grow across different research areas, it is essential to prepare resources for scaling these datasets. This involves understanding the various tools and techniques available to manage and store large amounts of data.

Image datasets require proper management to ensure their practical use, whether for computer vision, machine learning, or other applications. One crucial aspect of this process is selecting the most suitable storage methods, such as distributed file systems or cloud storage, which can efficiently handle the volume and complexity of the data. Additionally, managing and preparing image data requires careful consideration of factors like annotation, labeling, and data cleaning.

Scaling image datasets requires a comprehensive understanding of the tools and techniques required to optimize data storage, processing, and preparation for analysis.
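
One common way to keep a large collection manageable on a file system or in an object store is to spread files across subdirectories, so that no single folder holds millions of entries. The sketch below derives a stable subdirectory from a hash of the file name. The `dataset` root, the two-level depth, and the function name are all assumptions chosen for illustration; your storage system may call for a different layout.

```python
import hashlib
from pathlib import PurePosixPath

def shard_path(filename: str, root: str = "dataset", depth: int = 2) -> PurePosixPath:
    """Map a file name to root/<xx>/<yy>/filename using its SHA-256 digest."""
    digest = hashlib.sha256(filename.encode("utf-8")).hexdigest()
    # Each shard level uses two hex characters, giving 256 folders per level.
    shards = [digest[2 * i : 2 * i + 2] for i in range(depth)]
    return PurePosixPath(root, *shards, filename)

# The same name always maps to the same location, so no lookup table is needed.
print(shard_path("cat_0001.jpg"))
```

Because the path depends only on the file name, any worker can compute where an image lives without consulting a central index.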

Cloud storage not only makes data easier to find and access but can also make it more secure and reliable. Most cloud storage providers support encryption and access controls that protect data from unauthorized access, which lowers the risk of data breaches.

Cloud storage platforms also offer solutions that can be scaled up as storage needs grow. Collaboration among researchers and stakeholders becomes easier too, because cloud storage enables sharing within a secure environment. As the volume of image datasets increases, leveraging cloud storage remains crucial for managing and storing large files while maintaining data security and accessibility.

Image Acquisition Strategies

Acquiring high-quality images is an essential step in building an image dataset. It requires careful consideration of several factors, such as the images’ source, format, and size. The source of the images should be reliable to ensure authenticity and credibility. Additionally, the format should be compatible with the dataset and the tools that will be used for analysis.

The size of the images is another critical factor that must be considered. Large images may consume significant memory resources, while small images may not provide enough detail for analysis. Therefore, it is necessary to balance image quality and file size. Image selection must be comprehensive and representative to ensure the dataset accurately represents the intended population or phenomena.
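
A little arithmetic shows why image size matters so much. Once decoded, an uncompressed image takes roughly width × height × channels × bytes per channel of memory, regardless of how small the compressed file was on disk. The helper names below are hypothetical, and the sizes are example values rather than recommendations.

```python
def decoded_size_bytes(width: int, height: int, channels: int = 3,
                       bytes_per_channel: int = 1) -> int:
    """Memory needed for one uncompressed image."""
    return width * height * channels * bytes_per_channel

def batch_size_mib(width: int, height: int, batch: int, channels: int = 3,
                   bytes_per_channel: int = 1) -> float:
    """Memory needed for a batch of uncompressed images, in MiB."""
    total = decoded_size_bytes(width, height, channels, bytes_per_channel) * batch
    return total / (1024 ** 2)

print(decoded_size_bytes(4000, 3000))  # 36000000 bytes for one 12-megapixel RGB image
print(decoded_size_bytes(224, 224))    # 150528 bytes after downsizing to 224x224
print(batch_size_mib(224, 224, batch=32, bytes_per_channel=4))  # 18.375 MiB as 32-bit floats
```

The full-resolution photo needs about 239 times the memory of the 224×224 version, which is why many pipelines store originals but train on downsized copies.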

Acquiring images for an image dataset is a critical process that requires careful deliberation and attention to detail to ensure that the dataset is accurate and reliable.

Image datasets are crucial in many fields, such as computer vision and machine learning. Building one can be done through the manual or automated acquisition of images from diverse sources like online databases, repositories, structured web searches, or customized camera setups. The acquisition process must cover variations in angles, lighting conditions, and objects.

It is important to note that creating an image dataset is not only about collecting images but also about ensuring they are high quality, diverse, and relevant to the task. Once the image dataset is created, it can be used for various applications like object recognition, image classification, and scene analysis. Overall, creating an image dataset is critical in advancing the field of computer vision and machine learning.

Image Pre-Processing and Preparation

When working on a project with an image dataset, it is essential to set up a reliable way to handle the data. Pre-processing and preparation are integral steps toward achieving this goal. These steps involve manipulating and optimizing the images to ensure that they are in a format that is conducive to the project’s requirements.

During the pre-processing phase, tasks like filtering, resizing, and normalization are usually done to ensure that all images have the same quality and format. This step ensures that the data is ready for analysis, allowing researchers to draw insights from the data more effectively.
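
Normalization is the easiest of these steps to show in code. The sketch below works on a flat list of 8-bit pixel values in pure Python so that it runs anywhere. In practice you would more likely apply the same arithmetic with an array library. The function names are hypothetical, and whether you scale to [0, 1], standardize, or do both depends on your model.

```python
from statistics import fmean, pstdev

def scale_to_unit(pixels: list[int]) -> list[float]:
    """Map 8-bit pixel values (0-255) onto the range [0.0, 1.0]."""
    return [p / 255.0 for p in pixels]

def standardize(values: list[float]) -> list[float]:
    """Shift and scale values to zero mean and unit (population) standard deviation."""
    mean = fmean(values)
    std = pstdev(values)
    if std == 0:
        # A constant image carries no contrast; avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

pixels = [0, 64, 128, 255]
unit = scale_to_unit(pixels)
print(unit[0], unit[-1])  # 0.0 1.0
print(standardize(unit))
```

Whichever scheme you choose, apply the same one to training, validation, and inference data so the model always sees inputs on the same scale.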

Pre-processing also tends to make machine learning models more accurate, which makes them better at tasks like recognition. Good pre-processing is one of the most critical steps in making sure that an image dataset project meets its goals and its quality and accuracy standards.

Besides, it is imperative to note that adequately handling an image dataset is crucial for its successful use in machine learning and computer vision applications. The resizing and cropping of images during the preparation phase must be carried out with great care to ensure that the data accurately represents the objects and scenes it was initially taken from.

This is particularly important when using the dataset for tasks like object detection or classification, as the integrity of the images directly affects the accuracy of the algorithms. To make a reliable and helpful dataset, you must pay close attention to every detail during preparation.
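
One way to resize without distorting objects is to scale the shorter side to a target length while keeping the aspect ratio, then take a center crop. The sketch below only computes the target dimensions and the crop box, so it needs no imaging library. The function names and the 256/224 sizes are assumptions for illustration. Note that a center crop can cut off objects near the edges, so for object detection you would also need to adjust or drop the affected annotations.

```python
def resize_shorter_side(width: int, height: int, target: int) -> tuple[int, int]:
    """New (width, height) with the shorter side scaled to `target`, aspect ratio kept."""
    scale = target / min(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))

def center_crop_box(width: int, height: int, crop_w: int, crop_h: int) -> tuple[int, int, int, int]:
    """(left, top, right, bottom) of a crop centered in a width x height image."""
    if crop_w > width or crop_h > height:
        raise ValueError("crop is larger than the image")
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h

new_w, new_h = resize_shorter_side(640, 480, 256)
print(new_w, new_h)                               # 341 256
print(center_crop_box(new_w, new_h, 224, 224))    # (58, 16, 282, 240)
```

The resulting box uses the left, top, right, bottom convention, which is what many imaging libraries expect for a crop call.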

Validation and Quality Assurance Steps

Image datasets are crucial to many industries and research fields, including machine learning, computer vision, and artificial intelligence. Validation and quality assurance mean thoroughly vetting and checking every dataset for accuracy before use.

In this process, each image is inspected, its metadata is checked, and it is confirmed to meet specific standards. The dataset must be accurate and consistent for machine learning models to be reliable and robust.

Poor quality data may lead to inaccurate predictions, which can be detrimental to the overall system’s performance. Therefore, it is essential to prioritize data quality when working with datasets to achieve accurate and reliable results.

When dealing with datasets, ensuring that the data within them meets the expected quality standards is crucial. One way to ensure this is by running automated tests on the dataset.

This helps to identify any anomalies or inconsistencies in the data, which can then be addressed before they become significant issues. It is also recommended to carry out manual tests to verify the accuracy of any additional details included in the dataset, such as labels or annotations.

This is especially important for sensitive or essential applications, like medical imaging or self-driving cars. Taking the time to test and confirm the accuracy of an image dataset can save time and money in the long run and make the dataset more reliable and trustworthy overall.

After that, it’s essential to check the dataset often to ensure its accuracy stays the same over time.

Changes can happen to datasets for several reasons, such as when data gets corrupted, or new technologies emerge. Validation and quality assurance tests should be done regularly, and any necessary changes should be made to keep accurate results. Moreover, it is recommended to establish a protocol for regular dataset maintenance and update. This practice ensures that dataset users have access to reliable and updated data.
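
A simple way to notice corruption or unexpected changes between maintenance runs is to keep a manifest of checksums and compare against it later. The sketch below is one possible approach using SHA-256 from the standard library. The function names and the in-memory `files` dictionary are assumptions for illustration. In practice the manifest would be saved alongside the dataset and the bytes read from storage.

```python
import hashlib

def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file name to the SHA-256 hex digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}

def find_changes(manifest: dict[str, str], files: dict[str, bytes]) -> dict[str, list[str]]:
    """Compare current files against a saved manifest."""
    current = build_manifest(files)
    return {
        "missing": sorted(set(manifest) - set(current)),
        "added": sorted(set(current) - set(manifest)),
        "modified": sorted(
            name for name in manifest.keys() & current.keys()
            if manifest[name] != current[name]
        ),
    }

snapshot = build_manifest({"a.jpg": b"aaa", "b.jpg": b"bbb"})
later = {"a.jpg": b"aaa", "b.jpg": b"bbX", "c.jpg": b"ccc"}
print(find_changes(snapshot, later))
# {'missing': [], 'added': ['c.jpg'], 'modified': ['b.jpg']}
```

Running the comparison on a schedule gives you a concrete report to act on during each maintenance cycle.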

By following a well-planned maintenance process, organizations can use their datasets as a reliable tool to gain insights and make intelligent decisions. Ultimately, the success of any dataset depends on the commitment to its continuous improvement and maintenance.

Post-Processing and Finalizing the Dataset

An image dataset is a collection of images used for various purposes, including training machine learning algorithms or conducting research. Post-processing of the dataset is the final and crucial step in preparing the dataset for use. It involves a set of quality assurance steps that ensure data correctness, accuracy, and reliability. Post-processing includes various techniques such as image correction, data normalization, filtering, and segmentation.

These methods help ensure that the images are high quality, free of noise, and consistent. Because of this, post-processing plays a significant part in ensuring that the dataset is ready to be used, reliable, and helpful in working toward the goal set out for it.

Then, if you have an image dataset that meets industry standards, you can use it in many different ways. The tedious and time-consuming post-processing tasks such as labeling, categorizing, and creating metadata are critical in making the images a valuable dataset for machine learning and computer vision applications.

The dataset improves in both quality and reliability once duplicate images are eliminated and quality checks are run. Metadata provides essential information about the images and contributes to more accurate and helpful search results. Post-processing is an essential step in creating an accurate and valuable dataset that can be used in various contexts and applications.
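
Eliminating duplicates can start with the simplest case: files whose bytes are identical. The sketch below keeps the first file (in name order) for each distinct content hash and records which file each dropped duplicate matched. The function name and the in-memory `files` dictionary are hypothetical. This approach will not catch near-duplicates such as resized or re-encoded copies, which need a perceptual comparison that is not shown here.

```python
import hashlib

def drop_exact_duplicates(files: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, str]]:
    """Return (kept files, {dropped name: name of the kept file it duplicates})."""
    first_seen: dict[str, str] = {}   # content digest -> first file name with that content
    kept: dict[str, bytes] = {}
    dropped: dict[str, str] = {}
    for name in sorted(files):        # sorted so the result is deterministic
        digest = hashlib.sha256(files[name]).hexdigest()
        if digest in first_seen:
            dropped[name] = first_seen[digest]
        else:
            first_seen[digest] = name
            kept[name] = files[name]
    return kept, dropped

kept, dropped = drop_exact_duplicates({"a.jpg": b"x", "b.jpg": b"x", "c.jpg": b"y"})
print(sorted(kept))  # ['a.jpg', 'c.jpg']
print(dropped)       # {'b.jpg': 'a.jpg'}
```

Keeping the `dropped` mapping is useful when labels were attached to a duplicate, since it tells you which surviving file should inherit them.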

Conclusion

Creating a large-scale image dataset for your deep-learning models may initially seem daunting. Still, if you employ the appropriate strategy, you can complete the task promptly and efficiently. If you follow this detailed guide, you will end up with a dataset that helps you develop more dependable machine-learning models, and you will keep everything associated with annotating and storing images clean and orderly. If you keep these guidelines in mind, you can construct an image dataset that will significantly boost your deep learning endeavors. First, brew yourself a cup of coffee or tea, and then dive right into that dataset.