What Makes a Good Dataset

There is a great deal of data in the world. The hard part is making sense of it. How can a dataset be taken from merely existing to being a valuable asset?

Data should be Findable, Accessible, Interoperable, and Reusable (FAIR). Data that follows the FAIR data principles can have a real impact on the research community. Let’s push science forward with FAIR!

That all sounds great, but how exactly do you make data FAIR? We have a few tips to get you started.

Describe wherever you can

Metadata matters. If you’re submitting your data to a platform and there is suggested metadata, use it! This is a great way to provide information that you might not think to include on your own. Users often drive the inclusion of specific fields, so you can make your dataset more useful and discoverable by filling in fields that have a proven track record of improving datasets.

Informative title

Let’s move past final_FINAL_forRealThisTime_v5. That doesn’t tell me anything about your dataset except that you’ve struggled with exporting the right version. Keep your title concise and informative. Remember that people looking at your dataset don’t have all of the context that you have.

Clear keys

Have you ever looked at a dataset and not been able to figure out what’s going on? Let’s avoid that with your dataset. The best way to do that is to use keys and names that make sense to people other than yourself. Are there standard names in your field? Use them! Again, think of the people who don’t have all the context you have. Help them out so your data can be useful to everyone.
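
As a minimal sketch of what this can look like in practice, the snippet below renames terse internal keys to descriptive ones that carry their units. The key names, the mapping, and the sample record are all made up for illustration; use whatever names are standard in your field.

```python
# Hypothetical mapping from terse internal keys to descriptive names with units.
KEY_MAP = {
    "T": "temperature_K",
    "bg": "band_gap_eV",
    "comp": "composition",
}


def rename_keys(record, key_map):
    """Return a copy of record with keys renamed; keys not in key_map are kept as-is."""
    return {key_map.get(key, key): value for key, value in record.items()}


# Made-up record using the terse keys.
raw = {"T": 300, "bg": 1.12, "comp": "Si", "source": "notebook 4"}
print(rename_keys(raw, KEY_MAP))
```

Keeping the mapping in one place also gives you a ready-made list of fields to describe in your documentation.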

Description and documentation

A general description of your dataset is very helpful. This can be included in a README or whatever documentation you have available. If someone wasn’t part of collecting the data, they won’t be familiar with your process and therefore won’t know the ins and outs of the dataset (e.g., that clever shorthand you came up with or what unit of measurement you used). Consider including:

- When and how the data was collected

- Size of the dataset

- Any processing you’ve done to the data

- Data fields and a description explaining each field

- Explanations of any acronyms and abbreviations

- Author names, institutions, and contact information
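
One way to make sure none of these items gets forgotten is to generate the README from a small template. The sketch below is only an illustration: the function name, the dictionary keys, and every value are placeholders, not a required format.

```python
def build_readme(info):
    """Render a plain-text README from a dict of dataset facts."""
    lines = [
        info["title"],
        "",
        f"Collected: {info['collected']}",
        f"Size: {info['size']}",
        f"Processing: {info['processing']}",
        "",
        "Fields:",
    ]
    lines += [f"- {name}: {desc}" for name, desc in info["fields"].items()]
    lines += ["", "Abbreviations:"]
    lines += [f"- {abbr}: {meaning}" for abbr, meaning in info["abbreviations"].items()]
    lines += ["", "Authors:"]
    lines += [f"- {author}" for author in info["authors"]]
    return "\n".join(lines) + "\n"


# All values below are placeholders; replace them with facts about your dataset.
info = {
    "title": "Example dataset title",
    "collected": "when and how the data was collected",
    "size": "number of records and total file size",
    "processing": "any processing applied to the data",
    "fields": {"temperature_K": "sample temperature in kelvin"},
    "abbreviations": {"K": "kelvin"},
    "authors": ["Author Name, Institution, contact address"],
}

readme_text = build_readme(info)
print(readme_text)
```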

Consider other users

Get your dataset to a place where someone other than yourself can use it. Look at it from their perspective: if you were seeing this dataset for the first time, how would you most likely be using it? Set up the data in a way that makes that possible.

Clean the data

Are you running any kind of preprocessing step before using the data yourself? That might mean removing duplicate values, removing incomplete entries, converting values to the same unit of measurement, and so on. Consider whether this preprocessing would be useful to others and would make the dataset more usable.
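
To make those three steps concrete, here is a small sketch in plain Python. It assumes rows with hypothetical `sample`, `temperature`, and `unit` columns, where the unit is either `C` or `K`; the rows themselves are made up.

```python
def clean_rows(rows):
    """Drop incomplete rows, convert temperatures to kelvin, and remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        # Skip incomplete entries (any missing or empty value).
        if any(value in (None, "") for value in row.values()):
            continue
        temperature = float(row["temperature"])
        # Convert Celsius to kelvin so every row uses the same unit.
        if row["unit"] == "C":
            temperature += 273.15
        record = (row["sample"], round(temperature, 2))
        # Remove duplicates, including ones that only differed by unit.
        if record in seen:
            continue
        seen.add(record)
        cleaned.append({"sample": record[0], "temperature_K": record[1]})
    return cleaned


# Made-up rows for illustration.
rows = [
    {"sample": "A", "temperature": "25", "unit": "C"},
    {"sample": "A", "temperature": "298.15", "unit": "K"},  # same measurement in kelvin
    {"sample": "B", "temperature": "", "unit": "C"},  # incomplete entry
    {"sample": "C", "temperature": "300", "unit": "K"},
]
print(clean_rows(rows))
```

If you share the cleaned data, it is worth mentioning steps like these in your documentation so users know what was removed or converted.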

Structure the data

If applicable, adding structure to your dataset can be incredibly useful. If you are adding structure to your data in order to use it, consider keeping that structure when sharing the data with others.

Data Prep Checklist

At the Materials Data Facility, we’ve seen what makes a dataset good and FAIR, and what doesn’t. Here is a checklist that summarizes the points above more concisely and comes straight from our dataset guidelines. We’ve found that these guidelines have a huge effect on usability and interpretability.

Our Dataset Guidelines at the Materials Data Facility

- Provide a README file and description. This should describe the contents of the dataset, the layout of the directories, file naming schemes, the size of the dataset, and links to any related publications, code, etc.

- Describe Data Provenance: Include in the description information about who, what, where, when, how, and why the data was collected.

- Detail Data Quality: Document data collection methods, validation procedures, and any known biases or limitations to provide context and support for users.

- Use Open File Formats: Whenever possible, data should be shared in formats that are open and readable by common software packages.

- Provide Examples: Whenever possible, include examples of how to load, explore, and plot your data. These examples could be included in the repository or linked in, e.g., a GitHub repository.

- Detail Data Privacy and Ethical Considerations: Address any privacy concerns or ethical considerations related to the dataset, and ensure compliance with relevant regulations.

- Add Licensing Information: Specify the license under which the dataset is distributed, detailing any usage restrictions or requirements.
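
As an illustration of the open-format and example guidelines above, a loading example shipped with a dataset can be as short as the sketch below. It uses only Python’s standard library; the column names and values are invented, and an in-memory string stands in for a real CSV file.

```python
import csv
import io
import statistics

# Stand-in for a CSV file shipped with the dataset; columns and values are made up.
CSV_TEXT = """sample,temperature_K,band_gap_eV
A,298.15,1.10
B,300.00,1.25
C,310.00,1.40
"""


def load_rows(handle):
    """Read CSV rows and convert the numeric columns to floats."""
    rows = []
    for row in csv.DictReader(handle):
        rows.append({
            "sample": row["sample"],
            "temperature_K": float(row["temperature_K"]),
            "band_gap_eV": float(row["band_gap_eV"]),
        })
    return rows


rows = load_rows(io.StringIO(CSV_TEXT))
mean_gap = statistics.mean(row["band_gap_eV"] for row in rows)
print(f"{len(rows)} rows; mean band gap = {mean_gap:.2f} eV")
```

With a real file, `io.StringIO(CSV_TEXT)` would be replaced by `open(path, newline="")` for whatever path your dataset uses.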

Your dataset is prepped and ready for the world! Now how do you share it?

Congrats on getting your dataset prepared and documented. That’s hard work! Time to pick a platform to share your work.

Find a platform that makes sense for sharing and accessing data in your community. Not sure where to start? Don’t worry, we have a few we recommend.

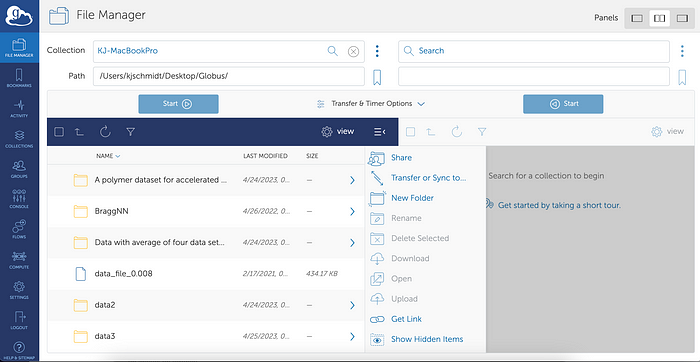

Globus

For easily sharing data with others, Globus is a great option. All you need to do is set up an endpoint on your laptop (or whatever computer the data is stored on) and transfer the data to another endpoint via Globus. This requires the person you’re sharing the data with to set up their own endpoint where they would like the data to live.

Required:

- To publish data: A (free) Globus account

- To use data: A (free) Globus account

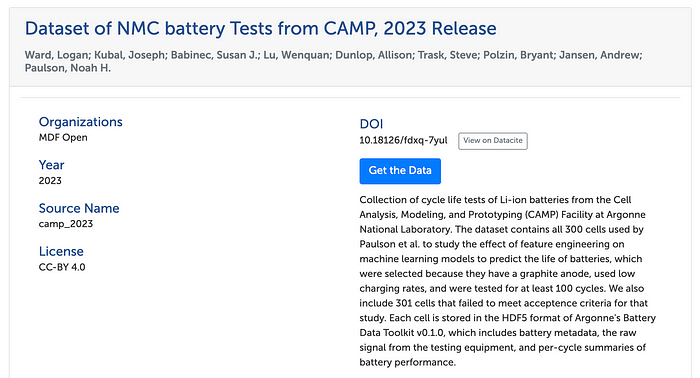

Materials Data Facility (MDF)

If you’d like to make your data more discoverable and accessible, consider using the Materials Data Facility (MDF). MDF uses Globus to transfer data but doesn’t require the people using the data to create their own endpoints. Each dataset on MDF can be found through the search page, which allows your data to reach more people. Each dataset also gets its own page on the website, making it easy to share with others by simply sharing the link.

Required:

- To publish data: A (free) Globus account

- To use data: Nothing

Foundry-ML

Is your data structured and ready to be used programmatically? Sounds like a fit for Foundry-ML!

Foundry-ML is built with MDF, so Foundry-ML datasets get all the same benefits as MDF datasets (appearing in MDF search and getting their own page on the website) with even more accessibility features. Foundry-ML datasets can be loaded directly into a DataFrame with a Python SDK. They also get a more detailed page on the Foundry-ML website, with step-by-step instructions on how to use them.

Required:

- To publish data: A (free) Globus account

- To use data: Nothing

The FAIRest data of them all

Making a good dataset is all about following FAIR data principles: describing and documenting your data, making it usable to others, and hosting it on platforms that make it accessible. Use these tips with your data and let us know how it goes!