Are you wondering what data labeling is and what it can do for you? Are you considering annotated datasets for your AI and machine learning projects? Would you like to learn more about data labeling, the benefits it can bring to your projects, and how to integrate it into your machine learning workflow?

Machine learning is increasingly an integral part of day-to-day products and operations, and the performance of these models depends on the quality of the data they use. This dependency highlights the importance of datasets in machine learning and the methods by which we collect them.

Often, the data we use already has high-quality labels. For example, when predicting stock prices from past values, the price series serves as both the input features (past prices) and the target label (the next price).
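To make the stock-price example concrete, the following minimal Python sketch shows how a single price series supplies both inputs and targets without any manual labeling. The function name `make_supervised_pairs`, the window length, and the prices are hypothetical, chosen only for illustration.

```python
def make_supervised_pairs(prices, window):
    """Turn a price series into (features, target) pairs.

    Each feature vector holds `window` consecutive past prices, and the
    target is the price that immediately follows them, so the labels come
    from the data itself.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    for t in range(window, len(prices)):
        features = prices[t - window:t]  # past values as input features
        target = prices[t]               # next value as the target label
        pairs.append((features, target))
    return pairs


prices = [100.0, 101.5, 99.8, 102.3, 103.1]  # hypothetical daily closing prices
for features, target in make_supervised_pairs(prices, window=3):
    print(features, "->", target)
```

A longer window gives the model more history per sample but yields fewer training pairs from the same series.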

However, our data is not always labeled, or its labels are not of the required quality. Labels can be noisy, limited, or biased (e.g., user-added labels and categories), or missing entirely (e.g., in object detection).

To obtain labels or improve their quality, we can perform data annotation. In data labeling, we label or relabel our data with the help of human labelers supported by labeling tools and algorithms. This enables model training and improves data quality or model performance. Among other uses, data annotation can help us estimate prediction uncertainty (by approximating probabilities) or validate our models.

Data labeling is a widely used practice.

If you label data, you should do it well. Data labeling can be a complex, slow, and expensive process that requires evaluation and quality assessment. If you need to label data on a regular basis, you may want to do this as an integral part of your machine learning workflow. Fortunately, there are a few things you can do to make labeling efficient and less error-prone.

In this article, you’ll learn about data labeling, its types, and how including humans in the process can benefit your machine learning models. We’ll also share some guidelines to consider when labeling data for your project.

Data labeling usually occurs with the help of human annotators, but algorithms or a combination of both can also be used. In this article, we focus on human annotation and highlight the options you can use for both.

What is Data Labeling for AI and Machine Learning?

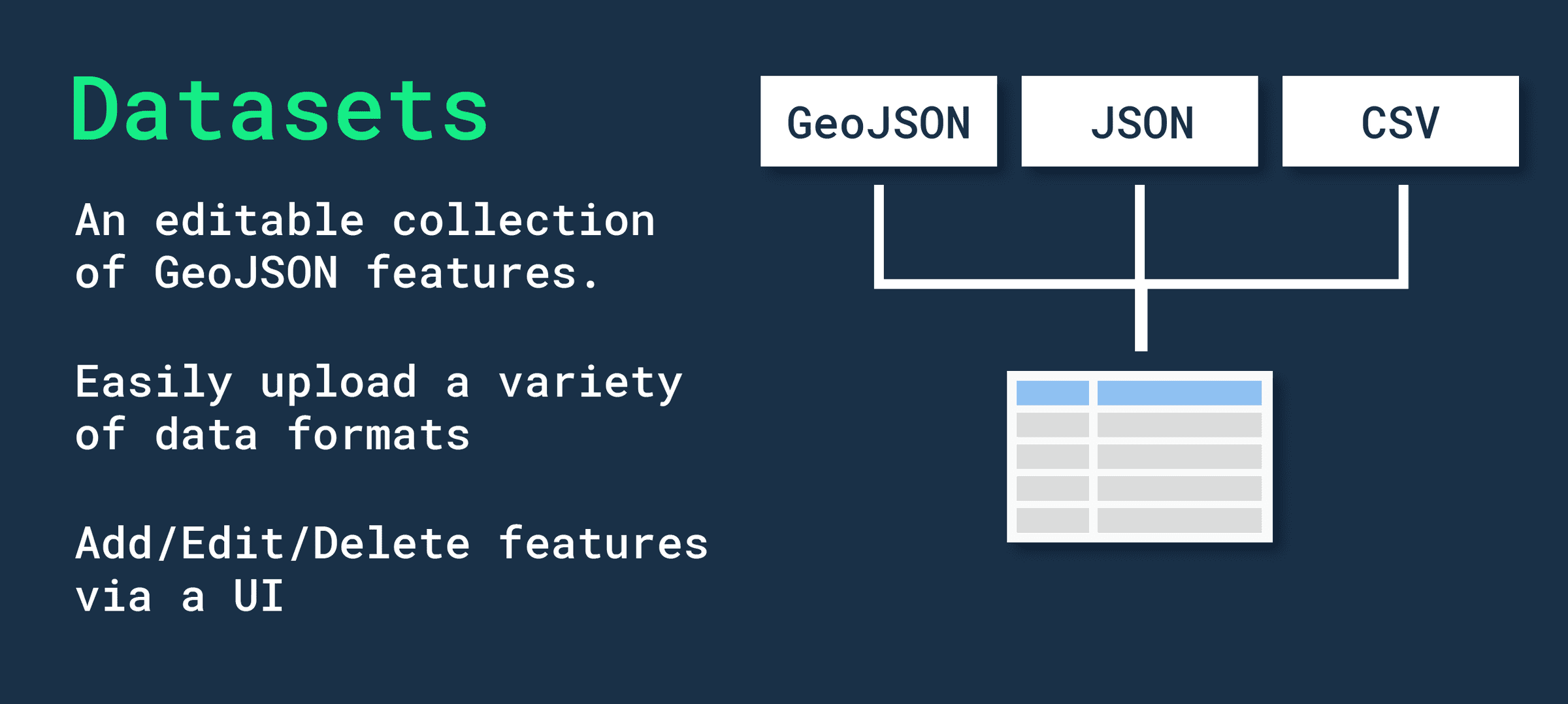

Data annotation (sometimes called "data labeling") is the active labeling of a training dataset for a machine learning model. Most often this means adding target labels, but it can also mean adding feature values or metadata. In some contexts, human validation of model predictions is also called data labeling, since it requires annotators to (re)label the data.

Depending on the context, this activity may also be called "tagging," "classifying," or "transcribing." Here, all of these terms mean extending the data with labels that carry information used in the modeling process.

The following are the main use cases for data labeling:

Generate labels: In some cases, annotation is the only way to record the target label or feature. For example, training a model to classify cats and dogs requires a dataset of images that contain the explicit labels “cat” and “dog”. We need taggers to label these samples.

Generate features: Annotated features can capture relationships that a model cannot automatically identify from noisy real-world data.

Improve label quality: Relabel noisy, limited, inaccurate, or biased labels.

Validate model performance: Compare model-generated labels with human-annotated labels.

Convert unsupervised to supervised: Turn an unsupervised or one-class problem into a supervised problem (for example, in anomaly detection).
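As a minimal sketch of the validation use case, the Python function below compares model-generated labels with human-annotated labels for the same samples. The function name `compare_labels` and the example labels are hypothetical; the disagreeing indices it returns could, for instance, be sent back to annotators for a closer look.

```python
from collections import Counter


def compare_labels(model_labels, human_labels):
    """Compare model predictions with human annotations for the same samples.

    Returns the agreement rate, the indices where the two disagree, and a
    Counter of (human_label, model_label) pairs for those disagreements.
    """
    if not human_labels or len(model_labels) != len(human_labels):
        raise ValueError("need two non-empty label lists of equal length")
    disagreements = [
        i for i, (m, h) in enumerate(zip(model_labels, human_labels)) if m != h
    ]
    n = len(human_labels)
    agreement = (n - len(disagreements)) / n
    confusions = Counter((human_labels[i], model_labels[i]) for i in disagreements)
    return agreement, disagreements, confusions


model = ["cat", "dog", "dog", "cat", "dog"]
human = ["cat", "dog", "cat", "cat", "cat"]
agreement, disagreements, confusions = compare_labels(model, human)
print(agreement)      # 0.6
print(disagreements)  # [2, 4]
print(confusions)     # Counter({('cat', 'dog'): 2})
```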

What is human-labeled data in machine learning?

Manually annotated data means that humans are the main source of data annotation.

As it stands, humans can recognize and understand things that machine learning models cannot. It’s not always clear what those things are because of the variety of models, humans, and business problems. In certain contexts, humans may be better than models at identifying the following:

Subjectivity and intent

Uncertainty, vague concepts and irregular categories

The context relevant to the business problem and whether the data point “makes sense” in that context

Human validation of model predictions increases trust in our data and modeling process. Because models are often opaque, humans can spot "unrealistic" predictions and relate results to their context.

Complying with regulations may also require human validators to participate in machine learning workflows.

How, and at which step, you rely on human or automatic labeling depends on the specific problem.

In a semi-automatic labeling approach, you combine machine learning techniques with manual labeling. For example, you can use models to pre-label data and reduce labeling time. Alternatively, you can interactively select samples for human labeling based on the model's classification confidence.
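One way to sketch the semi-automatic approach, assuming the model outputs a confidence score per prediction: accept high-confidence predictions as labels and route the rest to human annotators, least confident first. The threshold of 0.9, the function name `route_predictions`, and the sample IDs are hypothetical, and this only works well if the confidence scores are reasonably calibrated.

```python
def route_predictions(predictions, threshold=0.9):
    """Split predictions into auto-accepted labels and a human review queue.

    `predictions` is a list of (sample_id, label, confidence) tuples.
    Predictions with confidence >= threshold are accepted as labels;
    the rest go to annotators, least confident first.
    """
    auto_labeled = {}
    needs_review = []
    for sample_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_labeled[sample_id] = label
        else:
            needs_review.append((sample_id, confidence))
    # Least confident samples are reviewed first.
    needs_review.sort(key=lambda item: item[1])
    review_queue = [sample_id for sample_id, _ in needs_review]
    return auto_labeled, review_queue


predictions = [
    ("img_1", "cat", 0.97),
    ("img_2", "dog", 0.55),
    ("img_3", "cat", 0.91),
    ("img_4", "dog", 0.72),
]
auto_labeled, review_queue = route_predictions(predictions)
print(auto_labeled)  # {'img_1': 'cat', 'img_3': 'cat'}
print(review_queue)  # ['img_2', 'img_4']
```

Raising the threshold sends more samples to humans, trading labeling cost for label quality.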

Types of data annotations

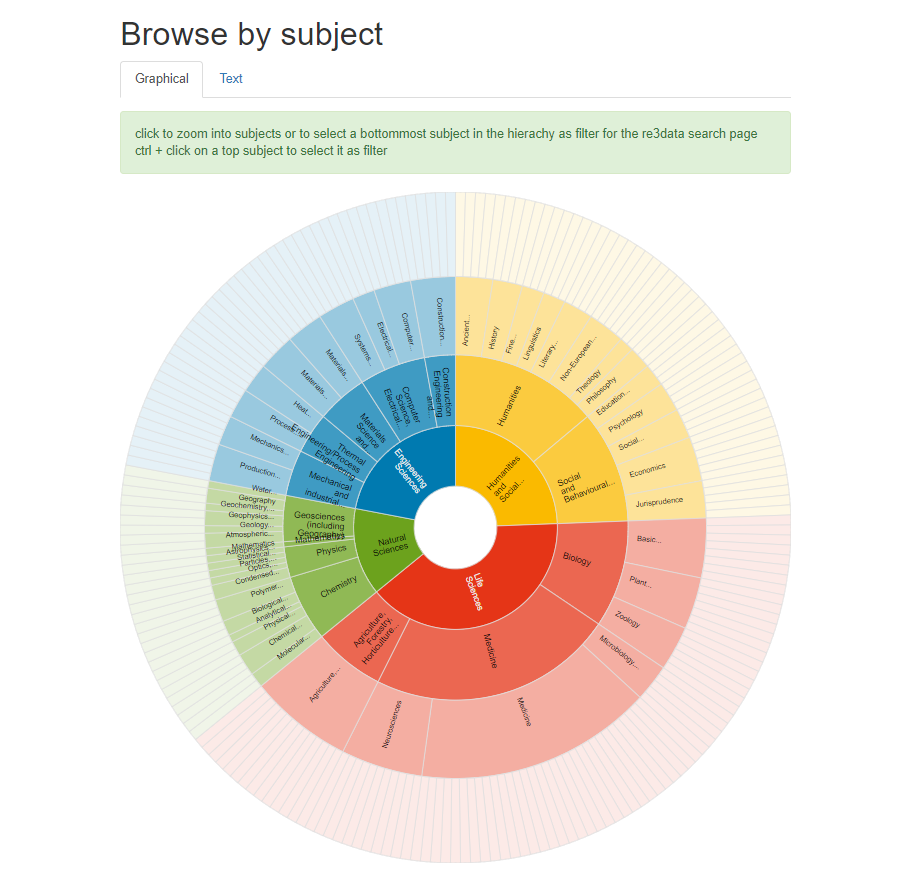

We can distinguish different types of data labeling problems and approaches based on the type of data being labeled or whether the data labeling is internal or external to the organization.

Data labeling categories by data type

Classifications based on data types are relatively straightforward because they follow common data types used in machine learning:

Text

Images

Video

Audio

These data types represent formats that humans perceive relatively directly. Human annotation of tabular, network, or time-series data is less common, because human annotators typically have less of an advantage in these domains.

Different data formats require different labeling methods. For example, to generate high-quality computer vision datasets, you can choose between different types of image annotation techniques.

Regardless of the data format used, labeling projects tend to work in the following phases:

1. Identify the entities in the data and distinguish them from one another.

2. Identify each entity's metadata attributes.

3. Store those attributes in a consistent, predefined format.
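These three phases can be illustrated with a minimal Python data structure for image annotations, assuming bounding-box entities: each entity is located and given an ID (phase 1), assigned a label and attributes (phase 2), and serialized to JSON (phase 3). The class names, fields, and JSON layout are hypothetical and do not follow any particular standard annotation format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Tuple


@dataclass
class EntityAnnotation:
    # Phase 1: locate the entity and give it an ID that distinguishes it
    # from other entities in the same image.
    entity_id: int
    bbox: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    # Phase 2: the entity's metadata attributes.
    label: str
    attributes: Dict[str, object] = field(default_factory=dict)


@dataclass
class ImageAnnotation:
    image_id: str
    annotator: str
    entities: List[EntityAnnotation] = field(default_factory=list)

    def to_json(self) -> str:
        # Phase 3: store everything in a fixed, machine-readable format.
        return json.dumps(asdict(self), sort_keys=True)


annotation = ImageAnnotation(
    image_id="img_001",
    annotator="annotator_a",
    entities=[
        EntityAnnotation(1, (10, 20, 110, 200), "cat", {"occluded": False}),
        EntityAnnotation(2, (150, 40, 300, 220), "dog"),
    ],
)
print(annotation.to_json())
```

Recording the annotator alongside each record makes it easier to audit labels or measure agreement between annotators later.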

Internal and external data annotation

Another classification depends on whether the labeling occurs inside or outside the organization.

In the internal case, data annotation can be part of internal training data creation or model validation. Most of the resources we discuss here describe this situation.

In the external case, an organization uses an external source to label its data. There are different sources for doing this, such as annotation contests or professional annotation services.

In some cases, certain components of a data labeling workflow are “internal” while other components are “external”. For example, we can hire external annotators who will work in our internal annotation workflow.

A Guide to Data Labeling in Machine Learning

To do data labeling well, you need to think of it as part of your machine learning workflow and build it as a combination of labelers, algorithms, and software components.

Two major problems in data labeling projects are how to effectively use limited labeling resources and how to evaluate the quality of labeling.

There are different techniques to solve these problems. In this section, we’ll discuss both:

Active learning: sampling data for labeling

Quality assessment: validating annotation performance

Active Learning: Sampling Data for Labeling

In the context of data annotation, active learning is a method for selecting which data samples to label.

When you combine human annotation with a machine learning model, a key decision you need to make is which part of the data the human annotator will annotate. You have limited time and money to spend on data labeling, so you need to be selective.

Different active learning strategies can help you select only the most relevant samples for labeling, saving time and cost. Here are three popular ones:

Random sampling

Uncertainty sampling

Diversity sampling

1. Random sampling

Random sampling is the simplest sampling strategy. It serves as a good baseline against which you can compare other strategies.

However, depending on the distribution of the incoming data, a truly random sample is not always easy to obtain, and random sampling may miss rare problem cases that other strategies actively look for.
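A random-sampling baseline takes only a few lines. This sketch assumes the unlabeled pool is a list of sample IDs; the function name `random_sample` and the pool are hypothetical, and the optional seed makes the baseline reproducible when you compare it against other strategies.

```python
import random


def random_sample(unlabeled_ids, batch_size, seed=None):
    """Select `batch_size` IDs uniformly at random, without replacement."""
    if batch_size > len(unlabeled_ids):
        raise ValueError("batch_size exceeds the number of unlabeled samples")
    rng = random.Random(seed)  # a dedicated generator keeps runs reproducible
    return rng.sample(list(unlabeled_ids), batch_size)


pool = [f"doc_{i}" for i in range(1000)]
batch = random_sample(pool, batch_size=10, seed=42)
print(batch)
```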

2. Uncertainty sampling

In uncertainty sampling, you choose the unlabeled samples that are closest to the model's decision boundary.

The value of this approach is that these samples are the most likely to be misclassified, so labeling them manually corrects the model's most probable errors.

A possible problem with uncertainty sampling is that the samples it selects may be concentrated in the same region of the feature space and cover only one part of the decision boundary.

Uncertainty sampling is most useful for models that reliably estimate their prediction uncertainty. For other model types (e.g., deep neural networks, whose raw confidence scores are often overconfident), we can improve uncertainty estimates with other confidence estimation methods, such as those provided by monitoring and model validation tools.

Uncertainty Sampling in Active Learning
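The sketch below scores each unlabeled sample by how uncertain the model's predicted class probabilities are and returns the most uncertain ones. It uses three common scores (least confidence, the margin between the top two classes, and entropy) as proxies for closeness to the decision boundary. It assumes each sample has a list of at least two class probabilities; the function names and the example pool are hypothetical.

```python
import math


def least_confidence(probs):
    # 1 minus the top-class probability: higher means less confident.
    return 1.0 - max(probs)


def margin(probs):
    # Gap between the two most likely classes: smaller means closer to the boundary.
    first, second = sorted(probs, reverse=True)[:2]
    return first - second


def entropy(probs):
    # Shannon entropy of the predicted distribution: higher means more uncertain.
    return -sum(p * math.log(p) for p in probs if p > 0)


# Each score is oriented so that a higher value means "more uncertain".
UNCERTAINTY_SCORES = {
    "least_confidence": least_confidence,
    "margin": lambda probs: -margin(probs),
    "entropy": entropy,
}


def uncertainty_sample(pool_probs, batch_size, strategy="margin"):
    """Return the IDs of the `batch_size` most uncertain samples.

    `pool_probs` maps sample ID -> predicted class probabilities.
    """
    score = UNCERTAINTY_SCORES[strategy]
    ranked = sorted(pool_probs, key=lambda sid: score(pool_probs[sid]), reverse=True)
    return ranked[:batch_size]


pool_probs = {
    "a": [0.95, 0.05],
    "b": [0.55, 0.45],
    "c": [0.70, 0.30],
    "d": [0.50, 0.50],
}
print(uncertainty_sample(pool_probs, batch_size=2))  # ['d', 'b']
```

For two classes the three scores produce the same ranking; with more classes they can disagree, and picking the top-k by any of them can still return clustered samples, which is the weakness noted above.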

3. Diversity sampling

Diversity sampling selects for labeling the samples whose feature values are underrepresented, or entirely absent, in the model's training data. Related names for this approach include anomaly or outlier detection, representative sampling, and stratified sampling.

The main benefit of this strategy is that it teaches your model to account for information it might otherwise ignore because that information rarely occurs in the training dataset.

We can use diversity sampling to prevent performance loss due to data drift. When data drifts, the data our model receives can contain many samples from regions that were underrepresented in training and are therefore poorly predicted. Diversity sampling helps us identify and label these underrepresented regions and improve predictive power on them, which limits the impact of data drift.
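Diversity sampling can be implemented in several ways. The sketch below uses greedy farthest-point (k-center) selection, one common technique: it repeatedly picks the unlabeled sample farthest from everything already labeled or selected. It assumes samples are numeric feature vectors (for example, embeddings) compared with Euclidean distance; the function name `diversity_sample` and the example points are hypothetical.

```python
import math


def diversity_sample(labeled, unlabeled, batch_size):
    """Greedy farthest-point (k-center) selection.

    Returns indices into `unlabeled`. Each step picks the unlabeled point
    whose distance to its nearest labeled-or-selected point is largest,
    so the batch covers regions the labeled data does not.
    """
    # Distance from each unlabeled point to its nearest labeled point.
    nearest = [
        min((math.dist(u, x) for x in labeled), default=math.inf)
        for u in unlabeled
    ]
    selected = []
    remaining = set(range(len(unlabeled)))
    for _ in range(min(batch_size, len(unlabeled))):
        # Iterate in index order so ties resolve to the lowest index.
        best = max(sorted(remaining), key=lambda i: nearest[i])
        selected.append(best)
        remaining.remove(best)
        # The newly selected point now counts as covered territory.
        for i in remaining:
            nearest[i] = min(nearest[i], math.dist(unlabeled[i], unlabeled[best]))
    return selected


labeled = [(0.0, 0.0), (1.0, 0.0)]
unlabeled = [(0.5, 0.1), (5.0, 5.0), (0.9, 0.2), (-4.0, 3.0)]
print(diversity_sample(labeled, unlabeled, batch_size=2))  # [1, 3]
```

The two far-away points are chosen first, while the points near the existing labeled data are left for later. Because outliers are always maximally "far", this method can also favor noisy samples, so it is often combined with other strategies.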

How to Assess the Quality of Data Labeling Projects

Your annotators can make mistakes, and you need to introduce checks and verification points to catch them systematically.

Here are a few areas that can help you improve your labeling performance:

Expertise: Experienced annotators and subject matter experts can provide high-quality labels and conduct the final review.

Team: Sometimes more than one annotator per sample is needed to improve annotation accuracy and reach "consensus" on the correct label.

Diversity: Insights from team members with different backgrounds, skills, and levels of expertise complement each other and help prevent systematic bias.
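When several annotators label the same samples, two common ways to measure consensus are majority voting and Cohen's kappa, which corrects the raw agreement between two annotators for the agreement expected by chance: `kappa = (p_o - p_e) / (1 - p_e)`. The minimal Python sketch below implements both. The function names are hypothetical, and returning `None` on a tied vote (so the sample can be escalated to an expert reviewer) is an assumed policy, not a standard.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most common label, or None if the top labels are tied."""
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # no consensus: escalate, e.g. to a subject matter expert
    return ranked[0][0]


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n  # p_o
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)  # p_e
    if expected == 1:
        # Both annotators used one identical label throughout; kappa is
        # undefined here, and treating it as perfect agreement is a convention.
        return 1.0
    return (observed - expected) / (1 - expected)


print(majority_vote(["cat", "cat", "dog"]))  # cat
print(majority_vote(["cat", "dog"]))         # None

annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.333
```

Here the annotators agree on 4 of 6 items (p_o ≈ 0.67), but with balanced labels half of that agreement is expected by chance (p_e = 0.5), so kappa is only about 0.33.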

In this article, you learned what data annotation is and how it can benefit your machine learning models.

Annotation can provide you with labeled datasets, improve data quality, or validate models. It can also help reduce bias in your machine learning model and help it learn relationships that it cannot learn from the available data alone.

Even if you have a well-trained model, you must watch for data drift and concept drift, which can degrade its performance. Data annotation helps here: you can recheck the model against fresh human labels as part of a continuous model validation process.
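A minimal sketch of such a continuous check, assuming each review cycle produces a batch of model predictions alongside fresh human labels: track the agreement rate per batch and flag batches that fall noticeably below a baseline. The function name `flag_degradation`, the baseline, the tolerance, and the example batches are hypothetical. A drop only signals that something changed; telling data drift apart from concept drift still requires investigation.

```python
def flag_degradation(batches, baseline, tolerance=0.05):
    """Flag review batches where model-vs-human agreement drops below baseline.

    `batches` is a list of (batch_name, model_labels, human_labels) tuples.
    Returns (batch_name, agreement) for each batch whose agreement is below
    baseline - tolerance.
    """
    flagged = []
    for name, model_labels, human_labels in batches:
        if not human_labels or len(model_labels) != len(human_labels):
            raise ValueError(f"batch {name!r} needs equal, non-empty label lists")
        matches = sum(m == h for m, h in zip(model_labels, human_labels))
        agreement = matches / len(human_labels)
        if agreement < baseline - tolerance:
            flagged.append((name, agreement))
    return flagged


batches = [
    ("week_1", ["spam", "ham", "ham", "spam"], ["spam", "ham", "ham", "spam"]),
    ("week_2", ["spam", "ham", "ham", "ham"], ["spam", "spam", "spam", "ham"]),
]
print(flag_degradation(batches, baseline=0.9))  # [('week_2', 0.5)]
```

The tolerance keeps ordinary batch-to-batch noise from triggering alerts; with small review batches, a wider tolerance is usually needed.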