What Is a Dataset in Machine Learning

A dataset in machine learning and artificial intelligence refers to a collection of data that is used to train and test algorithms and models. These datasets are crucial to the development and success of machine learning and AI systems, as they provide the necessary input and output data for the algorithms to learn from.

Datasets of all kinds, including both structured and unstructured data, can be used in machine learning and AI. Data that has been organized in a specific way, such as a spreadsheet or database table, is referred to as structured data.

Because it is already in a usable form, structured data is easy to analyze and manage. Unstructured data, on the other hand, is data that isn’t laid out in a specific format, such as text or images. Before being used in machine learning and AI systems, this kind of data must be further processed and analyzed.
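To make the difference concrete, here is a minimal Python sketch. The CSV contents, the column names, and the review text are all made up for illustration. The structured rows can be queried by column name right away, while the free text first has to be turned into something countable.

```python
import csv
import io
import re
from collections import Counter

# Structured data: a tiny, hypothetical table with named columns.
table = io.StringIO("customer_id,product,price\n1,laptop,900\n2,phone,500\n")
rows = list(csv.DictReader(table))
total_revenue = sum(float(row["price"]) for row in rows)  # direct column access

# Unstructured data: free text has no columns, so it needs processing first.
review = "Great phone, great battery. The screen is great too!"
tokens = re.findall(r"[a-z]+", review.lower())  # crude tokenization into words
word_counts = Counter(tokens)

print(total_revenue)
print(word_counts.most_common(1))
```

The tokenization here is deliberately crude; real text or image pipelines usually involve far more preparation before a model can use the data.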

Datasets from different sources, such as public datasets, proprietary datasets, and generated datasets, can also be used in machine learning and AI. Datasets that are freely available to everyone are known as “public datasets”, and researchers and developers frequently use them to test and evaluate machine learning and AI algorithms.

Proprietary datasets are those that belong to a specific business or organization and are only accessible to selected individuals or groups. Generated datasets, which are sometimes used in the development of new systems, are datasets that are created specifically to train and test machine learning and AI algorithms.

Machine learning is at the peak of its popularity today. Despite this, many decision-makers are unaware of what exactly is needed to design, train, and successfully deploy a machine learning algorithm. The details of collecting the data, building a dataset, and annotating it are dismissed as supporting tasks.

However, reality shows that working with datasets is the most time-consuming and laborious part of any AI project, sometimes taking up to 70% of the overall time. In addition, building a high-quality dataset requires experienced, trained professionals who know how to handle the real data that can be collected.

Let’s start from the beginning by defining what a dataset for machine learning is and why you need to pay closer attention to it.

What Is a Dataset in Machine Learning and Why Is It Essential for Your AI Model?

The Oxford Dictionary defines a dataset as “a collection of data that is treated as a single unit by a computer”. This means that a dataset contains many separate pieces of data but can be used to train an algorithm with the goal of finding predictable patterns inside the whole dataset.

Data is an essential component of any AI model and, basically, the sole reason for the spike in the popularity of machine learning that we witness today. Thanks to the availability of data, scalable ML algorithms became viable as actual products that can bring value to a business, rather than being a by-product of its main processes.

Your business has always been based on data. Factors such as what the customer bought, the popularity of the products, and the seasonality of the customer flow have always been important in business decision-making. However, with the advent of machine learning, it is now important to collect this data into datasets.

Sufficient volumes of data allow you to analyze trends and hidden patterns and to make decisions based on the dataset you’ve built. However, while it may look rather simple, working with data is more complicated. It requires proper treatment of the data you have, from deciding on the purposes of using a dataset to preparing the raw data so that it is actually usable.

Splitting Your Data: Training, Testing, and Validation Datasets in Machine Learning

Usually, a dataset is used not only for training. A single dataset that has already been processed is usually split into several parts, which is needed to check how well the training of the model went. For this purpose, a testing dataset is usually separated from the rest of the data. A validation dataset, while not strictly necessary, is also quite helpful: it keeps you from tuning and checking your algorithm on the same data it was trained on, which would lead to biased predictions.
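A three-way split of this kind can be sketched in a few lines of plain Python. The function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are assumptions chosen for illustration, not a recommendation from this article. Libraries such as scikit-learn offer their own helpers for the same job.

```python
import random

def split_dataset(records, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle records and split them into training, validation, and test lists."""
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(records)                # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)   # fixed seed keeps the split reproducible
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    validation = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]       # everything left over is training data
    return train, validation, test

# Hypothetical dataset of 100 records, represented here by their indices.
records = list(range(100))
train, validation, test = split_dataset(records)
print(len(train), len(validation), len(test))
```

Shuffling before splitting matters when the records are stored in some meaningful order, such as by date or by class. Without it, one of the parts could end up containing only one kind of example.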

Features of the Data: How to Build a Proper Dataset for a Machine Learning Project

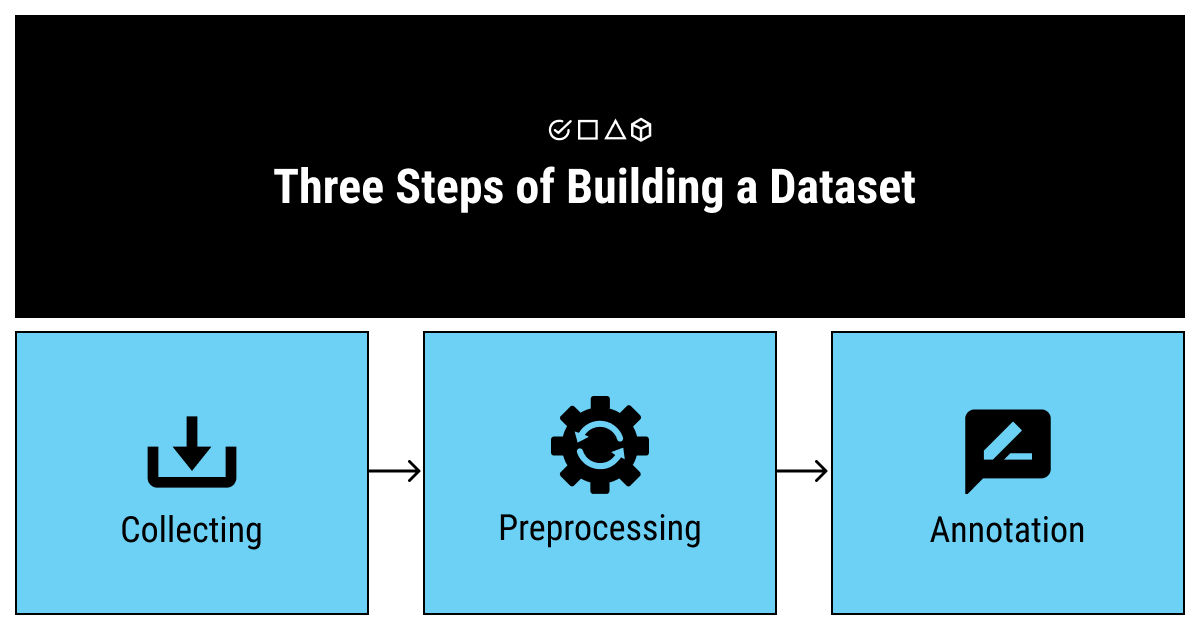

Raw data is a good place to start, but you obviously can’t just shove it into a machine learning algorithm and hope it offers you valuable insights into your customers’ behavior. There are quite a few steps you need to take before your dataset becomes usable.

Collect. The first thing to do when you’re looking for a dataset is to decide on the sources you’ll use to collect the data. Usually, there are three types of sources you can choose from: freely available open-source datasets, the Internet, and generators of artificial data. Each of these sources has its pros and cons and should be used for specific cases.

There’s a rule in data science that every experienced professional adheres to. Start by answering this question: has the dataset you’re using been used before? If not, assume this dataset is flawed. If yes, there’s still a high probability you’ll need to re-adapt the set to fit your specific goals.

Annotate. After you’ve ensured your data is clean and relevant, you also need to make sure it’s understandable for a computer to process. Machines don’t understand data the same way humans do (they can’t assign the same meaning to images or words as we do).

This step is where a lot of businesses decide to outsource, since keeping a trained annotation professional on staff isn’t always viable. We have a great article on building an in-house labeling team versus outsourcing this task to help you understand which way is best for you.

The sources for collecting a dataset vary and strongly depend on your project, budget, and the size of your business. The best option is to collect data that directly correlates with your business goals. However, while this way you have the most control over the data that you collect, it may prove complicated and demanding in terms of financial, time, and human resources.

Other ways, like automatically generated datasets, require significant computational power and are not suitable for every project. For the purposes of this article, we’d like to specifically single out the free datasets for machine learning. There are large, comprehensive repositories of public datasets that can be freely downloaded and used for the training of your machine learning algorithm.

The obvious advantage of free datasets is that they’re, well, free. On the other hand, you’ll most likely need to tune any such downloadable dataset to fit your project, since it was built for other purposes initially and won’t fit precisely into your custom-built ML model. Still, this is the option of choice for many startups, as well as small and medium-sized businesses, since it requires fewer resources to collect a proper dataset.

The Features of a Proper, High-Quality Dataset in Machine Learning

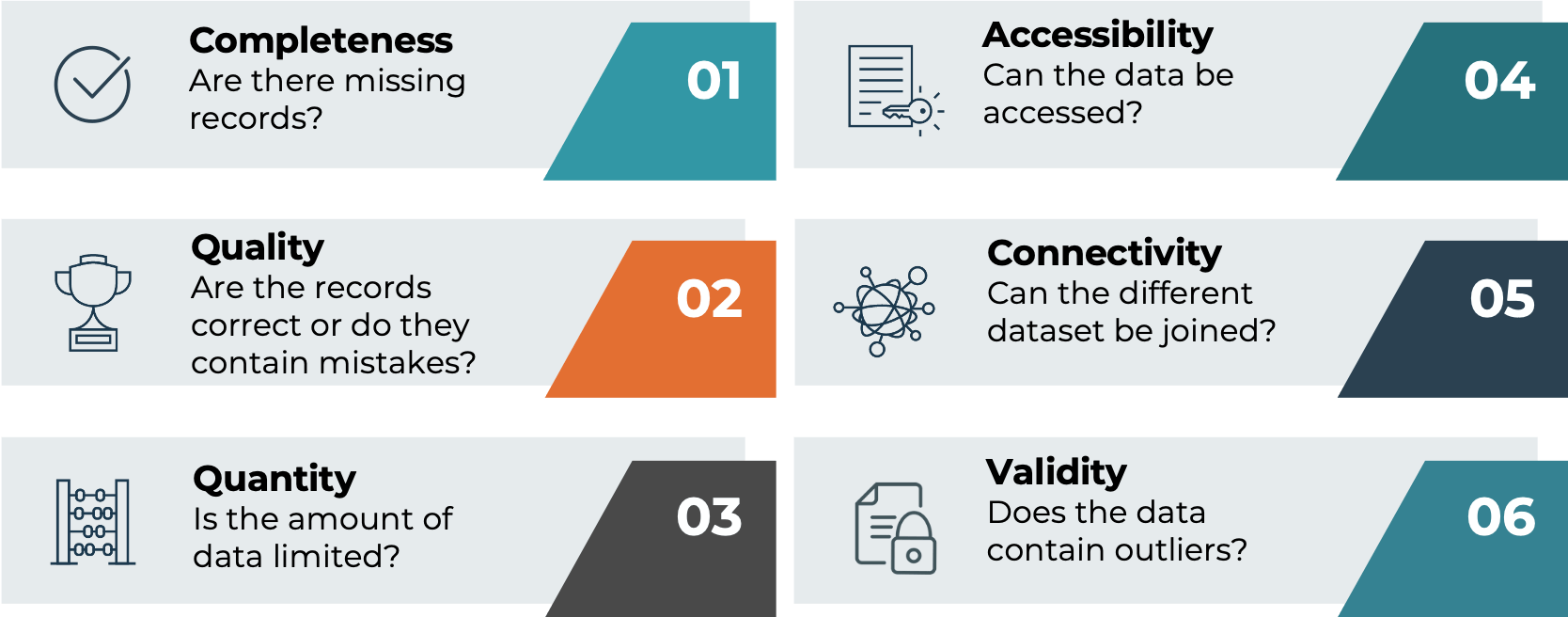

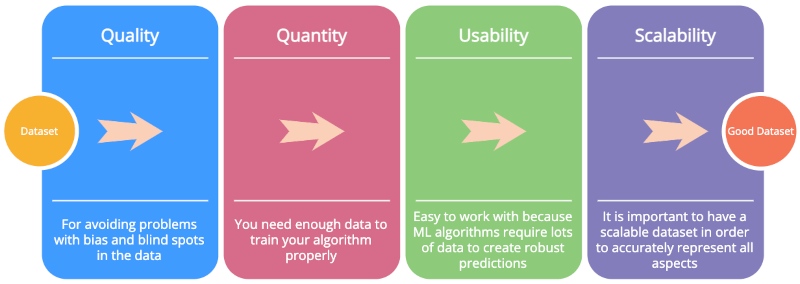

Quality of a Dataset: Relevance and Coverage

High quality is the essential thing to take into consideration when you collect a dataset for a machine learning project. But what does this mean in practice? First of all, the data pieces should be relevant to your goal. If you are designing a machine learning algorithm for an autonomous vehicle, you will have no need even for the best of datasets if they consist of celebrity photos.

Furthermore, it’s important to ensure the pieces of data are of sufficient quality. While there are ways of cleaning the data and making it uniform and manageable before the annotation and training processes, it’s best to have the data correspond to a list of required features. For example, when building a facial recognition model, you will need the training photos to be of good enough quality.
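Checking records against a list of required features can be automated early on. The sketch below is only an illustration: the field names, the minimum image side of 224 pixels, and the sample records are all hypothetical, and a real project would define its own requirements.

```python
# Hypothetical requirements for a photo dataset.
REQUIRED_FIELDS = ("image_path", "width", "height")
MIN_SIDE = 224  # assumed minimum size in pixels for the shorter side

def is_usable(record):
    """Return True if the record has every required field and is large enough."""
    if any(record.get(field) is None for field in REQUIRED_FIELDS):
        return False
    return min(record["width"], record["height"]) >= MIN_SIDE

# Made-up sample records: one good, one too small, one missing a field.
photos = [
    {"image_path": "a.jpg", "width": 640, "height": 480},
    {"image_path": "b.jpg", "width": 120, "height": 90},
    {"image_path": "c.jpg", "width": 800},
]
usable = [photo for photo in photos if is_usable(photo)]
print(len(usable), "of", len(photos), "records pass the checks")
```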

In addition, even relevant and high-quality datasets can suffer from blind spots and biases. An imbalanced dataset in machine learning poses the danger of throwing off the prediction results of your carefully built ML model.

Let’s say you’re planning to build a text classification model to arrange a database of texts by topic. If you only use texts that don’t cover enough topics, your model will likely fail to recognize the rarer ones.
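A quick way to spot this kind of gap is to count how often each label occurs before training. The following sketch uses made-up topic labels and an arbitrary 5% threshold, both of which are assumptions for illustration only.

```python
from collections import Counter

def find_rare_labels(labels, min_share=0.05):
    """Return each label whose share of the dataset is below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }

# Hypothetical topic labels for 100 texts.
labels = ["sports"] * 60 + ["politics"] * 37 + ["science"] * 3
rare = find_rare_labels(labels)
print(rare)
```

Labels flagged this way are candidates for collecting more examples. Note that a topic missing from the dataset entirely will not show up in the counts at all, so the label list itself is worth reviewing too.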

Tip: try to use live data. Fake data might seem like a good idea when you’re building your model (it is cheaper, cleaner, and available in large volumes). But if you try to cut costs by using a fake dataset, you might end up with a weirdly trained algorithm. Fake data might turn out to be too predictable or not predictable enough. Either way, it’s not a great start for your AI project.

Sufficient Quantity of a Dataset in Machine Learning

Not only quality but quantity matters, too. It’s important to have enough data to train your algorithm properly. There’s also a possibility of overtraining an algorithm (known as overfitting), but it’s more likely that you won’t have enough high-quality data.
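Overfitting is easiest to see by comparing accuracy on the training data with accuracy on data the model has never seen. The toy sketch below is an assumption-laden illustration rather than a realistic model: the labels are pure random noise, and the "model" is a lookup table that simply memorizes the training pairs.

```python
import random
from collections import Counter

rng = random.Random(0)
# Hypothetical toy data: each example is (id, label), and labels are pure noise.
examples = [(i, rng.randint(0, 1)) for i in range(200)]
train, held_out = examples[:100], examples[100:]

memory = dict(train)  # the "model" memorizes every training pair
# For unseen inputs, fall back to the most common training label.
fallback = Counter(label for _, label in train).most_common(1)[0][0]

def predict(x):
    return memory.get(x, fallback)

def accuracy(data):
    return sum(predict(x) == y for x, y in data) / len(data)

train_accuracy = accuracy(train)
held_out_accuracy = accuracy(held_out)
print("train:", train_accuracy, "held out:", held_out_accuracy)
```

The memorizing model is perfect on the data it has seen and no better than guessing on new data. A large gap between the two numbers is the usual warning sign of overfitting.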