Everything You Need to Know About Text Annotation

Every day, we interact with different media such as text, audio, images, and video, relying on our brains to process what we see and hear and to derive the meaning that shapes our behavior. One of the most common types of media is text, which makes up the language we use to communicate. Because text is so widely used, text annotation needs to be done accurately and comprehensively.

Through machine learning (ML), machines are taught to read, understand, analyze, and generate text in ways that are useful for interacting with humans. As machines get better at interpreting human language, the importance of training them on high-quality text data becomes increasingly clear. In every case, preparing accurate training data starts with accurate and comprehensive text annotation.

What is text annotation?

Algorithms use large amounts of labeled data to train AI models as part of a larger data labeling workflow. In the labeling process, metadata tags are used to mark the characteristics of the dataset. With text annotation, the data includes labels that highlight criteria such as keywords, phrases, or sentences. In some applications, annotation can also include labeling various emotions in the text, such as “anger” or “sarcasm,” to teach machines how to recognize the human intent or emotion behind the words.

Annotated data, called training data, is what the machine learns from. The goal is to help machines understand natural human language. The field concerned with teaching machines to process and understand human language, which depends on steps such as data preprocessing and labeling, is called natural language processing (NLP).

These labels must be accurate and comprehensive. Poor annotation can lead to grammatical errors or to problems with clarity or context in the machine’s responses. If you ask a bank’s chatbot, “How do I suspend my account?” and it responds, “Your account doesn’t hold it,” the machine has clearly misunderstood the question and needs to be retrained on more accurately annotated data.

After being trained on accurately labeled data, the machine can learn to communicate effectively in natural language and take over repetitive, mundane tasks that humans would otherwise perform. This frees up time, money, and resources in the organization to focus on more strategic efforts.

The applications of natural language-based AI systems are wide-ranging: intelligent chatbots, improved e-commerce experiences, voice assistants, machine translation, more efficient search engines, and more. The ability to simplify transactions by leveraging high-quality textual data has profound implications for customer experience and for an organization’s bottom line across all major industries.

Types of text annotation

Text annotation comes in several types, such as sentiment, intent, semantic, and relationship annotation. These options are available for many human languages.

1. Sentiment labeling

Sentiment annotation evaluates the attitude and emotion behind a text by labeling it as positive, negative, or neutral.
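A sentiment-labeled dataset can be as simple as a list of text/label pairs. The sketch below assumes a hypothetical schema (a `SentimentExample` record with `text` and `label` fields, with made-up example sentences) and checks that every label is one of the three classes named above before counting how the labels are distributed.

```python
from collections import Counter
from dataclasses import dataclass

# The three sentiment classes described above.
SENTIMENT_LABELS = {"positive", "negative", "neutral"}


@dataclass(frozen=True)
class SentimentExample:
    text: str
    label: str


def validate(examples):
    """Raise ValueError if any example uses a label outside SENTIMENT_LABELS."""
    for i, ex in enumerate(examples):
        if ex.label not in SENTIMENT_LABELS:
            raise ValueError(f"example {i} has unknown label {ex.label!r}")
    return examples


def label_distribution(examples):
    """Count how many examples carry each sentiment label."""
    return Counter(ex.label for ex in examples)


examples = validate([
    SentimentExample("The new app update is fantastic.", "positive"),
    SentimentExample("My card was charged twice.", "negative"),
    SentimentExample("The branch opens at 9 a.m.", "neutral"),
    SentimentExample("Support resolved my issue quickly.", "positive"),
])
print(label_distribution(examples))
```

A skewed distribution, such as almost no negative examples, is a signal to annotate more data for the under-represented class before training.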

2. Intent labeling

Intent annotation analyzes the need or desire behind a text, grouping it into categories such as request, order, or confirmation.
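Intent labels are often produced by a human who accepts or corrects a machine suggestion. The sketch below assumes that workflow; the `IntentAnnotation` record, the `CUES` keyword lists, and the `suggest_intent`/`finalize` helpers are all hypothetical, and keyword matching is only a stand-in for whatever pre-labeling model a real project would use.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Intent categories taken from the text; a real project defines its own taxonomy.
INTENTS = ("request", "order", "confirmation")

# Hypothetical keyword cues. They only *suggest* a label for a human
# annotator to accept or correct; they are not a reliable classifier.
CUES = {
    "request": ("how do i", "can you", "could you", "please"),
    "order": ("i want to buy", "order", "purchase"),
    "confirmation": ("yes", "confirm", "that's right"),
}


@dataclass
class IntentAnnotation:
    text: str
    suggested: Optional[str]      # machine suggestion, None if no cue matched
    label: Optional[str] = None   # final label chosen by a human annotator


def suggest_intent(text: str) -> Optional[str]:
    """Return the first intent (in INTENTS order) with a whole-word cue match."""
    lowered = text.lower()
    for intent in INTENTS:
        for cue in CUES[intent]:
            if re.search(r"\b" + re.escape(cue) + r"\b", lowered):
                return intent
    return None


def finalize(annotation: IntentAnnotation, human_label: str) -> IntentAnnotation:
    """Record the annotator's decision, rejecting labels outside INTENTS."""
    if human_label not in INTENTS:
        raise ValueError(f"unknown intent {human_label!r}")
    annotation.label = human_label
    return annotation


text = "How do I suspend my account?"
item = IntentAnnotation(text, suggest_intent(text))
finalize(item, "request")  # the annotator confirms the suggestion
print(item)
```

Keeping the suggestion and the final label as separate fields makes it easy to measure how often annotators overrule the machine.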

3. Semantic labeling

Semantic annotation attaches tags to text that refer to concepts and entities such as people, places, or topics.
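Semantic annotations are commonly stored as character-offset spans over the original text. The sketch below assumes a hypothetical `EntitySpan` record and illustrative tag names (`PERSON`, `PLACE`, `TOPIC`). It builds spans from phrases and rejects spans that fall outside the text or overlap, two frequent annotation mistakes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    start: int  # character offset where the span begins (inclusive)
    end: int    # character offset where the span ends (exclusive)
    label: str  # hypothetical tag name, e.g. "PERSON", "PLACE", "TOPIC"


def annotate(text, phrase, label):
    """Create a span for the first occurrence of `phrase` in `text`."""
    start = text.find(phrase)
    if start == -1:
        raise ValueError(f"{phrase!r} not found in text")
    return EntitySpan(start, start + len(phrase), label)


def check_spans(text, spans):
    """Return spans sorted by start, after checking bounds and overlaps."""
    ordered = sorted(spans, key=lambda s: s.start)
    for s in ordered:
        if not (0 <= s.start < s.end <= len(text)):
            raise ValueError(f"span out of range: {s}")
    for a, b in zip(ordered, ordered[1:]):
        if b.start < a.end:
            raise ValueError(f"overlapping spans: {a} and {b}")
    return ordered


text = "Ada Lovelace wrote about the Analytical Engine in London."
spans = check_spans(text, [
    annotate(text, "Ada Lovelace", "PERSON"),
    annotate(text, "London", "PLACE"),
    annotate(text, "Analytical Engine", "TOPIC"),
])
for s in spans:
    print(s.label, text[s.start:s.end])
```

Storing offsets rather than copied strings keeps each tag tied to one exact position, which matters when the same word appears more than once.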

4. Relationship labeling

Relationship annotation marks the relationships between different parts of a document. Typical tasks include dependency parsing and coreference resolution.
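Coreference and relation labels can both be expressed as links between mentions in the text. The sketch below assumes hypothetical `Mention` and `Relation` records and an illustrative relation name (`works_for`). Coreference is stored as clusters of mentions that refer to the same entity, and each mention is resolved to the earliest mention in its cluster.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    start: int  # inclusive character offset
    end: int    # exclusive character offset


@dataclass(frozen=True)
class Relation:
    head: Mention
    label: str  # hypothetical relation name, e.g. "works_for"
    tail: Mention


def mention(text, phrase, after=0):
    """Locate `phrase` in `text` at or after offset `after`."""
    start = text.index(phrase, after)
    return Mention(start, start + len(phrase))


def resolve(text, clusters):
    """Map every mention in a coreference cluster to the text of the
    cluster's earliest mention (its antecedent)."""
    resolved = {}
    for cluster in clusters:
        ordered = sorted(cluster, key=lambda m: m.start)
        antecedent = text[ordered[0].start:ordered[0].end]
        for m in ordered:
            resolved[m] = antecedent
    return resolved


text = "Maria joined Acme in 2020. She leads its data team."
maria, acme = mention(text, "Maria"), mention(text, "Acme")
she, its = mention(text, "She"), mention(text, "its")

# Coreference: "She" refers to Maria, "its" refers to Acme.
clusters = [[maria, she], [acme, its]]
# A relation linking two parts of the document.
relations = [Relation(maria, "works_for", acme)]

resolved = resolve(text, clusters)
print(resolved[she], resolved[its])  # Maria Acme
```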

The type of project and its use case determine which annotation technique to choose.

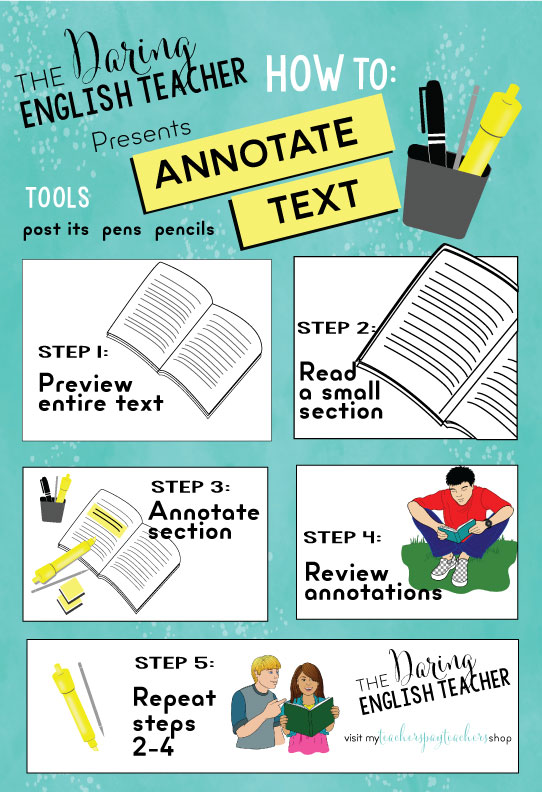

How is text annotated?

Most organizations rely on human annotators to label data. Human annotators are especially valuable for sentiment data, which is often subtle and depends on current trends in slang and other language usage.

Nonetheless, existing large-scale annotation and classification tools can help you deploy your AI models more quickly and cheaply. The route you take will depend on the complexity of the problem you are trying to solve and on the resources and budget your organization is willing to commit.

Understand your current goals and long-term vision

What kind of data do you need

Define what types of annotation your model needs as training data: document-level or token-level labels, and whether the work involves collecting data from scratch, labeling existing data, or checking machine predictions. This is an important first step in defining your goals.
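The difference between document-level and token-level labels is easiest to see on one sentence. The sketch below assumes whitespace tokenization (real tokenizers usually split punctuation too) and the widely used BIO tagging convention, where `B-` marks the first token of an entity, `I-` marks the tokens that continue it, and `O` marks tokens outside any entity. The helper names and labels are hypothetical.

```python
def whitespace_tokens(text):
    """Split on whitespace, keeping each token's character offsets."""
    tokens, pos = [], 0
    for tok in text.split():
        start = text.index(tok, pos)
        tokens.append((tok, start, start + len(tok)))
        pos = start + len(tok)
    return tokens


def spans_to_bio(text, spans):
    """Turn (start, end, label) character spans into token-level BIO tags.

    A token is tagged only if it lies entirely inside a span; the first such
    token gets "B-<label>", later ones "I-<label>", all others "O".
    """
    tokens = whitespace_tokens(text)
    tags = ["O"] * len(tokens)
    for span_start, span_end, label in spans:
        first = True
        for i, (_, tok_start, tok_end) in enumerate(tokens):
            if span_start <= tok_start and tok_end <= span_end:
                tags[i] = ("B-" if first else "I-") + label
                first = False
    return [(tok, tag) for (tok, _, _), tag in zip(tokens, tags)]


def span_of(text, phrase, label):
    """Build a (start, end, label) span for the first occurrence of `phrase`."""
    start = text.index(phrase)
    return (start, start + len(phrase), label)


text = "Please send 200 dollars to Ana Silva today"

# Document-level: one label for the whole text (an intent, here).
document_label = {"text": text, "label": "request"}

# Token-level: one tag per token, derived from entity spans.
token_labels = spans_to_bio(text, [
    span_of(text, "200 dollars", "MONEY"),
    span_of(text, "Ana Silva", "PERSON"),
])
for tok, tag in token_labels:
    print(f"{tok:10} {tag}")
```

Token-level labels take noticeably more annotator effort per item, which is one reason to settle this choice before estimating volume and cost.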

How much data you need and for how long

Data volume and required throughput are important factors in choosing a data labeling strategy. When your needs are modest, it may make sense to start with an open-source annotation tool or subscribe to a self-service platform. However, if you expect your team’s need for labeled data to grow rapidly, take the time to evaluate your options and choose a platform or service partner that will work in the long run.
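Turning volume and throughput into a staffing question takes only simple arithmetic. The helper below is a hypothetical sketch; its inputs (items per week, seconds per item, productive hours per annotator, share of items reviewed twice) are illustrative assumptions that you would replace with measurements from a pilot batch.

```python
import math


def annotators_needed(items_per_week, seconds_per_item,
                      hours_per_annotator_week, review_fraction=0.0):
    """Estimate how many full-time annotators a weekly labeling target needs.

    review_fraction is the share of items labeled a second time for quality
    checks (e.g. 0.2 means 20% of items are annotated twice).
    """
    total_seconds = items_per_week * seconds_per_item * (1 + review_fraction)
    capacity_seconds = hours_per_annotator_week * 3600
    return math.ceil(total_seconds / capacity_seconds)


# Illustrative numbers only: 50,000 items a week at 20 seconds each,
# 30 productive hours per annotator, 20% double-checked.
print(annotators_needed(50_000, 20, 30, review_fraction=0.2))  # 12
```

Rerunning the estimate with projected future volumes shows how quickly the requirement grows, which is the point at which a longer-term platform or partner evaluation starts to pay off.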

Whether your data is in a specialized domain or in a non-English language

Data in specialized domains or in languages other than English may require annotators with relevant domain knowledge or language skills. This can become a bottleneck when you scale your data labeling efforts, so it becomes crucial to choose a partner who can meet these special needs.

What resources do you have

You may already have an experienced engineering team working on your data and building models. You may also have a team of expert annotators, or even your own annotation tool. Whatever resources you have, you want to maximize their value when bringing in external help.

Go beyond text-based data

Text data can also be extracted from image, audio, and video files. If that need arises, your annotation platform or service provider must be able to handle transcription tasks for this non-text data, so consider this when choosing a labeling solution.