What is self-supervised learning?

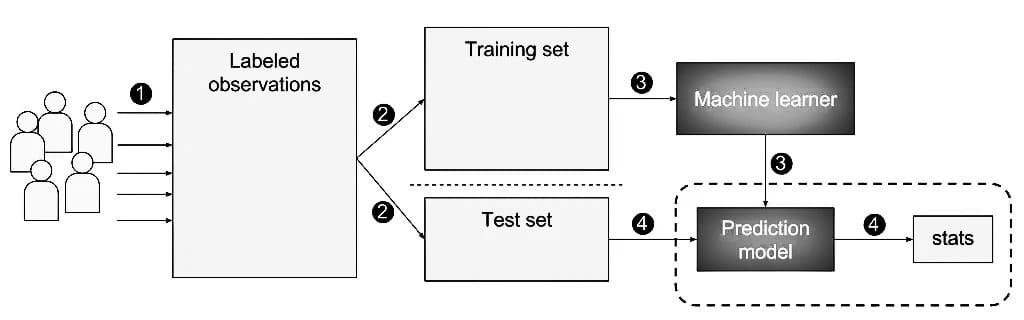

Self-supervised learning (SSL) is a form of machine learning that learns from unlabeled sample data. It can be regarded as an intermediate form between supervised and unsupervised learning, and it is based on an artificial neural network. [1] The neural network learns in two steps. First, a task is solved based on pseudo-labels, which helps to initialize the network weights. [2] [3] Second, the actual task is performed with supervised or unsupervised learning. [4] [5] [6] Self-supervised learning has produced promising results in recent years and has found practical application in audio processing, where it is used by Facebook and others for speech recognition. [7] The main appeal of SSL is that training can be done with lower-quality data, rather than that it improves the final results. Self-supervised learning greatly imitates
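To make the idea of pseudo-labels concrete, here is a minimal sketch of one possible pretext task: predicting how far an image has been rotated. The choice of rotation prediction, the function name `make_rotation_task`, and the toy data are assumptions made for illustration; the text above does not prescribe a particular pretext task.

```python
import numpy as np

def make_rotation_task(images):
    """Build a pretext dataset from unlabeled square images.

    Each image is rotated by 0, 90, 180 and 270 degrees. The pseudo-label
    is the number of quarter turns (0..3), so no human annotation is needed.
    """
    rotated, pseudo_labels = [], []
    for image in images:
        for quarter_turns in range(4):
            rotated.append(np.rot90(image, k=quarter_turns))
            pseudo_labels.append(quarter_turns)
    return np.stack(rotated), np.array(pseudo_labels)

# Two toy 4x4 "images" stand in for real unlabeled data (hypothetical).
unlabeled = np.arange(2 * 4 * 4).reshape(2, 4, 4)
inputs, labels = make_rotation_task(unlabeled)
print(inputs.shape, labels.tolist())
```

A network trained to predict `labels` from `inputs` would supply the initial weights for the second step, the actual task.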

Types of self-supervised learning

Training data can be divided into positive and negative examples. Positive examples are those that match the target. For example, if you are learning to identify birds, the positive training data are the pictures that contain birds. Negative examples are those that do not.

Contrastive SSL

Contrastive SSL uses both positive and negative examples. The loss function of contrastive learning minimizes the distance between positive samples while maximizing the distance between negative samples.
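As a rough illustration of such a loss, the sketch below implements a classic margin-based pairwise contrastive loss on two embedding vectors. This is one common formulation among several, not necessarily the one used by any particular method, and the function name and `margin` parameter are assumptions chosen for the example.

```python
import numpy as np

def contrastive_pair_loss(a, b, is_positive, margin=1.0):
    """Pairwise contrastive loss for two embedding vectors.

    Positive pairs are penalized by their squared distance. Negative pairs
    are penalized only while they are closer than `margin`.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    distance = np.linalg.norm(a - b)
    if is_positive:
        return distance ** 2
    return max(0.0, margin - distance) ** 2

anchor = [0.0, 0.0]
# A close positive pair costs little.
print(contrastive_pair_loss(anchor, [0.1, 0.0], is_positive=True))
# A close negative pair costs a lot, which pushes it apart.
print(contrastive_pair_loss(anchor, [0.1, 0.0], is_positive=False))
# A negative pair beyond the margin costs nothing.
print(contrastive_pair_loss(anchor, [2.0, 0.0], is_positive=False))
```

Minimizing this quantity over many pairs pulls positive samples together and pushes negative samples at least `margin` apart.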

Non-contrastive SSL

Non-contrastive SSL (NCSSL) uses only positive examples. Counterintuitively, NCSSL converges on a useful local minimum rather than reaching a trivial solution with zero loss. Effective NCSSL requires an extra predictor on the online side that does not back-propagate on the target side.
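The online/target asymmetry can be sketched with plain NumPy and hand-written gradients. Everything here is a simplifying assumption made for illustration: the encoders are single linear layers, the loss is an unnormalized squared error, and the target is updated as a moving average of the online weights (a device borrowed from BYOL-style methods). The names `ncssl_step`, `tau` and `lr` are hypothetical.

```python
import numpy as np

def ncssl_step(W_online, P, W_target, view_a, view_b, lr=0.01, tau=0.99):
    """One update of a linear online encoder and predictor against a target encoder."""
    n = view_a.shape[0]
    z_online = view_a @ W_online      # online embedding of the first view
    prediction = z_online @ P         # extra predictor, online side only
    target = view_b @ W_target        # target embedding, treated as a constant
    diff = prediction - target
    loss = 0.5 * np.sum(diff ** 2) / n

    # Gradients flow through the predictor and the online encoder only.
    grad_prediction = diff / n
    grad_P = z_online.T @ grad_prediction
    grad_W = view_a.T @ (grad_prediction @ P.T)

    W_online = W_online - lr * grad_W
    P = P - lr * grad_P
    # The target never receives a gradient; it slowly tracks the online weights.
    W_target = tau * W_target + (1.0 - tau) * W_online
    return W_online, P, W_target, loss

rng = np.random.default_rng(0)
x = rng.normal(size=(32, 8))
view_a = x + 0.1 * rng.normal(size=x.shape)  # two noisy views of the same samples
view_b = x + 0.1 * rng.normal(size=x.shape)
W_online = 0.1 * rng.normal(size=(8, 4))
W_target = W_online.copy()
P = np.eye(4)

for _ in range(50):
    W_online, P, W_target, loss = ncssl_step(W_online, P, W_target, view_a, view_b)
```

Both views are positive examples of the same samples, so no negatives appear anywhere in the update. Real methods add output normalization and nonlinear networks, which this sketch leaves out.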

Types of Self-Supervised Learning: Unleashing the Power of Unlabeled Data

Introduction: Self-supervised learning has emerged as a powerful approach in machine learning, enabling models to learn from unlabeled data and extract valuable representations without the need for explicit supervision. By leveraging the inherent structure and patterns in the data, self-supervised learning algorithms aim to uncover meaningful representations that can benefit downstream tasks. In this article, we will explore various types of self-supervised learning methods, highlighting their unique approaches and applications in the field of artificial intelligence.

- Contrastive Learning: Contrastive learning is a popular self-supervised learning technique that aims to learn representations by contrasting similar and dissimilar samples in the data. The algorithm learns to map similar samples closer in the representation space while pushing dissimilar samples further apart. This is achieved by creating positive and negative pairs from the unlabeled data and optimizing a contrastive loss function. Contrastive learning has shown promising results in various domains, including computer vision and natural language processing.

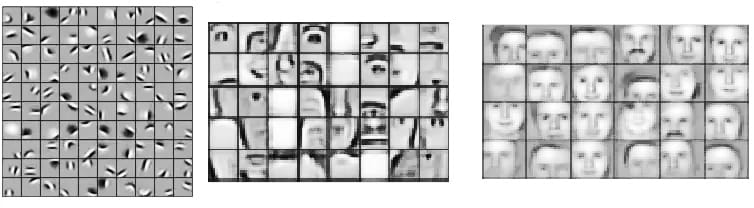

- Predictive Coding: Predictive coding is a self-supervised learning method inspired by theories of predictive coding in neuroscience. It involves training models to predict missing or corrupted parts of the input data. By learning to reconstruct the original input from partial or corrupted versions, the model learns meaningful representations that capture relevant features and patterns in the data. Predictive coding has been successfully applied in image and video analysis tasks, as well as speech recognition and generation tasks.

- Generative Models: Generative models, such as autoencoders and generative adversarial networks (GANs), can also be used for self-supervised learning. Autoencoders learn to encode the input data into a low-dimensional representation and reconstruct it back to its original form. By training on the reconstruction error, the model learns to capture salient features in the data. GANs, on the other hand, learn to generate realistic samples by competing against a discriminator network that distinguishes between real and generated samples. Generative models can learn meaningful representations by training on large amounts of unlabeled data, enabling them to generate new samples and capture the underlying data distribution.

- Temporal Learning: Temporal learning methods leverage the temporal structure in sequential data to learn representations. For example, in video analysis tasks, models can be trained to predict the next frame in a video given a sequence of preceding frames. By learning to capture the dynamics and temporal dependencies in the data, the model can extract meaningful representations that capture motion and temporal patterns. Temporal learning has been successfully applied in video action recognition, video prediction, and language modeling tasks.

- Contextual Representation Learning: Contextual representation learning focuses on learning representations that capture contextual relationships between different elements in the data. For instance, in natural language processing, models can be trained to predict missing words in a sentence based on the surrounding context. By understanding the contextual relationships between words, the model learns to capture semantic and syntactic properties of the language. Contextual representation learning methods, such as masked language models and sequence-to-sequence models, have revolutionized natural language understanding tasks.

- Spatial Relationship Learning: Spatial relationship learning is particularly relevant in computer vision tasks, where models need to understand the spatial relationships between objects or parts within an image. By training models to predict relative positions, orientations, or relationships between objects or image patches, the model can learn representations that capture spatial dependencies and geometric properties. Spatial relationship learning has applications in object detection, image segmentation, and scene understanding tasks.
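The contextual representation learning item above, where a model predicts missing words from their context, can be sketched as a data-preparation step. The helper `mask_tokens`, the `[MASK]` placeholder string and the masking probability are assumptions for illustration, not the recipe of any specific model.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Hide a random subset of tokens and keep the originals as targets."""
    rng = random.Random(seed)
    masked, targets = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets.append(token)   # the model must recover this word
        else:
            masked.append(token)
            targets.append(None)    # no loss is computed at this position
    return masked, targets

sentence = "the bird sat on the branch".split()
masked, targets = mask_tokens(sentence, mask_prob=0.3, seed=0)
print(masked)
print(targets)
```

The supervision signal comes entirely from the sentence itself: the hidden words serve as the labels.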

Conclusion

Self-supervised learning offers a powerful paradigm for leveraging unlabeled data to learn meaningful representations. Through contrastive learning, predictive coding, generative models, temporal learning, contextual representation learning, and spatial relationship learning, models can extract valuable features and patterns from unannotated data. These self-supervised learning methods have demonstrated remarkable performance in various domains, including computer vision, natural language processing, and sequential data analysis. As research in self-supervised learning continues to advance, we can expect further innovations in unsupervised representation learning, enabling machines to unlock the hidden knowledge within vast amounts of unlabeled data.

Types of Self-Supervised Learning: Expanding the Boundaries of Unsupervised Learning

Introduction: Self-supervised learning has gained significant attention in the field of machine learning as a powerful approach to learning representations from unlabeled data. Unlike traditional supervised learning, which relies on labeled data for training, self-supervised learning leverages the inherent structure or context within the data to create supervision signals. In this article, we will explore various types of self-supervised learning methods, highlighting their unique approaches and applications in unlocking the potential of unsupervised learning.

- Contrastive Learning: Contrastive learning is a popular self-supervised learning technique that aims to learn representations by contrasting positive and negative samples. The objective is to maximize the similarity between different augmented views of the same data instance (positive samples) while minimizing the similarity between views of different instances (negative samples). Contrastive learning methods, such as SimCLR and MoCo, have demonstrated impressive results in various domains, including image recognition and natural language processing.

- Generative Modeling: Generative modeling is another type of self-supervised learning that focuses on modeling the underlying distribution of the data. By learning to generate samples that resemble the training data, the model can capture meaningful representations. Autoencoders, Variational Autoencoders (VAEs), and Generative Adversarial Networks (GANs) are commonly used generative models. These models learn to reconstruct input data or generate synthetic data, providing useful representations that capture the essential characteristics of the data.

- Predictive Learning: Predictive learning is based on the principle of predicting missing or future information within the data. This approach utilizes temporal or spatial dependencies in the data to create self-supervision signals. For example, in video understanding, predicting the next frame or future frames given the past frames can enable the model to learn temporal dependencies. Similarly, in natural language processing, predicting the next word in a sentence can capture semantic relationships. Models such as BERT, which predicts masked words, and GPT, which predicts the next word, have achieved remarkable results in various tasks with this paradigm. Deep InfoMax, which maximizes mutual information between inputs and their representations, is often grouped with them.

- Inpainting and Completion: Inpainting and completion methods focus on reconstructing or completing missing parts of the data. By training a model to fill in the gaps in an input image, video, or sequence, it learns to capture the underlying structure and context of the data. Inpainting techniques have been widely used in image processing and computer vision tasks, where missing or occluded regions need to be filled in. Similarly, completion methods in natural language processing can fill in missing words or phrases in a sentence, enabling the model to understand the context and meaning of the text.

- Relational Learning: Relational learning approaches focus on capturing relationships and interactions between different elements within the data. This type of self-supervised learning aims to learn representations that encode the associations between various entities, such as objects in images or words in sentences. By training the model to predict relationships or infer connections between different data elements, it can acquire rich and meaningful representations. Relational learning has found applications in visual question answering, knowledge graph completion, and recommendation systems.

- Temporal and Spatial Context Modeling: Temporal and spatial context modeling involves learning representations that capture the temporal or spatial dependencies within the data. This type of self-supervised learning is particularly relevant in sequential or time-series data, such as speech, videos, or sensor data. By training the model to predict future time steps or infer spatial relationships, it learns to encode the contextual information and dependencies present in the data. Recurrent Neural Networks (RNNs), Transformers, and Convolutional Neural Networks (CNNs) with temporal or spatial attention mechanisms are commonly used for temporal and spatial context modeling.
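The predictive learning item above, where a model predicts the next element from the preceding ones, boils down to slicing a sequence into context and target pairs. The sketch below shows that step only. The function name `make_next_step_pairs` and the window length are illustrative assumptions.

```python
def make_next_step_pairs(sequence, context_len):
    """Slide a window over `sequence`; each window predicts the element after it."""
    contexts, targets = [], []
    for start in range(len(sequence) - context_len):
        contexts.append(sequence[start:start + context_len])
        targets.append(sequence[start + context_len])
    return contexts, targets

words = "self supervised learning needs no labels".split()
contexts, targets = make_next_step_pairs(words, context_len=3)
for context, target in zip(contexts, targets):
    print(context, "->", target)
```

The same slicing applies to video frames or sensor readings: any ordered sequence yields its own training targets.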

Conclusion

Self-supervised learning has emerged as a promising avenue in machine learning, enabling the learning of meaningful representations from unlabeled data. The various types of self-supervised learning methods, including contrastive learning, generative modeling, predictive learning, inpainting and completion, relational learning, and temporal and spatial context modeling, offer diverse approaches to leverage the inherent structure and context within the data. These methods have demonstrated impressive results across different domains and tasks, providing valuable insights and expanding the boundaries of unsupervised learning. As self-supervised learning continues to advance, it holds great potential for unlocking the latent knowledge within unlabeled data and driving further advancements in artificial intelligence.

Types of Self-Supervised Learning: Exploring Unsupervised Learning Methods

Introduction: Self-supervised learning is a branch of unsupervised learning that leverages the inherent structure or patterns in data to learn representations without explicit human annotations. In self-supervised learning, the model learns from the data itself, extracting meaningful features that can be used for downstream tasks. This article explores various types of self-supervised learning methods, highlighting their key principles and applications in machine learning.

- Contrastive Learning: Contrastive learning is a popular approach in self-supervised learning, where the model learns by contrasting positive samples with negative samples. The objective is to pull positive samples closer together in the learned feature space while pushing negative samples farther apart. This forces the model to capture meaningful differences and similarities in the data. Contrastive learning has been successfully applied to various domains, including computer vision and natural language processing, enabling tasks such as image recognition, object detection, and document classification.

- Temporal Prediction: Temporal prediction is a self-supervised learning method that leverages the sequential nature of data. The model is trained to predict the next element in a sequence given the previous elements. By learning to predict future states, the model implicitly captures the underlying temporal dependencies and can extract useful representations. This approach has found applications in video analysis, speech recognition, and natural language processing tasks such as language modeling and machine translation.

- Autoencoders: Autoencoders are neural network architectures that learn to reconstruct input data from a compressed representation. The model consists of an encoder that maps the input to a lower-dimensional latent space and a decoder that reconstructs the input from the latent representation. By forcing the model to learn to compress and reconstruct the input, autoencoders learn meaningful representations that capture the salient features of the data. Autoencoders have been used for tasks such as dimensionality reduction, anomaly detection, and generative modeling.

- Generative Modeling: Generative modeling is a self-supervised learning approach that focuses on learning the underlying data distribution. Models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are trained to generate new samples that resemble the training data. By learning to generate realistic samples, these models implicitly capture the data’s inherent structure and learn meaningful representations. Generative models have applications in image synthesis, text generation, and data augmentation.

- Inpainting: Inpainting is a self-supervised learning method that involves filling in missing parts of data. The model is trained to predict the missing regions based on the surrounding context. By learning to fill in missing regions, the model learns to capture the structure and semantics of the data. Inpainting has applications in image editing, restoration, and denoising, including image completion and the repair of corrupted images.

- Context Prediction: Context prediction is a self-supervised learning approach that focuses on capturing contextual relationships in the data. The model is trained to predict missing or masked parts of the input based on the remaining context. By learning to infer missing information, the model captures meaningful dependencies and relationships between different elements in the data. Context prediction has applications in natural language processing tasks such as sentiment analysis, text classification, and named entity recognition.

- Cluster Assignments: Cluster assignments involve organizing data into meaningful groups without any explicit labels. The model learns to assign similar samples to the same cluster and dissimilar samples to different clusters. By capturing similarities and dissimilarities, the model learns representations that group similar instances together. Cluster assignments have applications in data clustering, anomaly detection, and recommendation systems.
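The autoencoder item above describes an encoder that compresses the input and a decoder that reconstructs it. As a minimal sketch, the code below uses a linear autoencoder with tied weights, whose squared-error optimum can be obtained in closed form from PCA via SVD. This swaps gradient training of a neural network for a closed-form solution, purely to keep the example short. The function names and random data are hypothetical.

```python
import numpy as np

def fit_linear_autoencoder(data, latent_dim):
    """Return (mean, components) for a tied-weight linear autoencoder.

    The components are the top right-singular vectors of the centered data,
    which is the PCA solution.
    """
    mean = data.mean(axis=0)
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:latent_dim]

def encode(data, mean, components):
    return (data - mean) @ components.T   # compress to the latent space

def decode(latent, mean, components):
    return latent @ components + mean     # reconstruct the input

rng = np.random.default_rng(0)
data = rng.normal(size=(200, 5))
errors = {}
for k in (1, 3, 5):
    mean, components = fit_linear_autoencoder(data, k)
    reconstruction = decode(encode(data, mean, components), mean, components)
    errors[k] = np.mean((data - reconstruction) ** 2)
    print(k, errors[k])  # error shrinks as the latent space grows
```

The reconstruction error is the only training signal, so no labels are involved. A nonlinear autoencoder follows the same encode, decode and compare pattern with learned networks in place of the matrix products.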

Conclusion: Self-supervised learning has emerged as a powerful approach for training machine learning models without the need for explicit supervision. By leveraging the inherent structure and patterns in data, self-supervised learning methods enable the extraction of meaningful representations that can be used for downstream tasks. The various types of self-supervised learning, including contrastive learning, temporal prediction, autoencoders, generative modeling, inpainting, context prediction, and cluster assignments, have shown promising results in different domains and applications. As research in self-supervised learning continues to evolve, these methods hold great potential for advancing the field of unsupervised learning and enabling models to learn from vast amounts of unlabeled data.

Comparison with other forms of machine learning

SSL belongs to the supervised learning methods insofar as the goal is to generate a classified output from the input. At the same time, however, it does not require the explicit use of labeled input-output pairs. Instead, correlations, metadata embedded in the data, or domain knowledge present in the input are implicitly and autonomously extracted from the data. [10] These supervisory signals, generated from the data, can then be used for training.

SSL is similar to unsupervised learning in that it does not require labels in the sample data. Unlike unsupervised learning, however, learning is not done using inherent data structures.

Semi-supervised learning combines supervised and unsupervised learning, requiring only a small portion of the training data to be labeled.

In transfer learning, a model designed for one task is reused for a different task.

Examples

Self-supervised learning is particularly suitable for speech recognition. For example, Facebook developed wav2vec, a self-supervised algorithm, to perform speech recognition using two deep convolutional neural networks that build on each other.

Google’s Bidirectional Encoder Representations from Transformers (BERT) model is used to better understand the context of search queries.

OpenAI’s GPT-3 is an autoregressive language model that can be used in language processing. It can be used to translate texts or answer questions, among other things.

Bootstrap Your Own Latent (BYOL) is a NCSSL method that produced excellent results on ImageNet and on transfer and semi-supervised benchmarks.

DirectPred is a NCSSL method that directly sets the predictor weights instead of learning them via gradient updates.

What about supervised learning?

If you are learning a task under supervision, someone is there to judge whether you are getting the right answer. Similarly, supervised learning means having a complete set of labeled data while training the algorithm.

Fully labeled means that each example in the training dataset is tagged with the answer the algorithm should come up with on its own. Thus, a labeled dataset of flower photos tells the model which images show which flowers, such as daisies and daffodils. When a new image is shown, the model compares it with the training examples to predict the appropriate label.
