In the previous issue, we briefly introduced artificial intelligence data labeling (2) text labeling. In this issue, we will introduce image labeling for image recognition . What is Image Annotation? (easy to understand guide)

Image data annotation/acquisition

First of all, let’s quote a simple explanation of machine learning from a post on Zhihu:

Recognize the handwritten number “8”

Image recognition is realized when we have a certain amount of data, so first of all we have to have a large number of handwritten “8”, just as MINIST provides a picture library of handwritten numbers, and each picture is 18*18 picture:

The digit “8” in the MNIST database

Neural networks can’t recognize images, but neural networks will take numbers as input, but for computers, pictures happen to be a series of numbers representing the color of each pixel:

Handwritten numeral “8”

We treat a 18×18 pixel picture as a series of 324 numbers, and we can input it into our neural network:

An example of handwritten “8” input into the neural network

To better manipulate our input data, we scale up the neural network to have 324 input nodes:

The first output will predict the probability that the picture is “8” and the second will output the probability that it is not “8”. In a nutshell, we can use the neural network to group the items to be identified, relying on a variety of different outputs.

image data training

The only thing left to do now is to train our neural network. First, mark a large number of various “8” and non-“8” pictures, which is equivalent to telling it that the probability of our input picture being “8” is 100% for the pictures we judge as “8”, not The probability of “8” is 0, and the corresponding non-“8” picture, we clearly tell it that the probability of our input picture is “8” is 0, and the probability of not “8” is 100%.

Here is some training data:

Well… that’s the training data…

We can now train this neural network in minutes on our laptops. After completion, we can get a neural network with a high recognition rate of “8” pictures.

(The content has been edited by me, and I personally feel that I can better understand this simple process. The original post address: https://www.zhihu.com/question/27790364, which is more in-depth on machine learning, and you can take it yourself if you need it. )

Well, now let’s go back to our image data annotation. The mainstream applications of image annotation include automatic driving, portrait recognition, object recognition in pictures, and the non-mainstream application scope of medical image recognition.

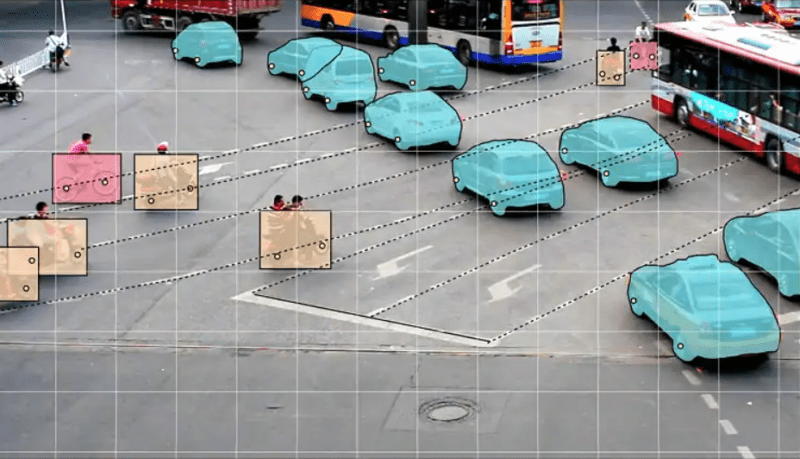

Autopilot image drawing frame labeling

The labeling principles of automatic driving and object recognition in pictures are similar. There are two main labeling methods, one is drawing frame labeling, and the other is fine cutting labeling.

First, let’s look at the drawing box annotation:

This is the drawing frame of the vehicle.

This is an example of a vehicle frame labeling. Cars, SUVs, vans, and minivans are marked in the picture. When labeling, we put the edge of the frame close to the edge of the vehicle and indicate the attributes of each frame. For the algorithm , each box is a small picture, and each small picture corresponds to a kind of car.

When labeling, you need to pay special attention to the tangent between the frame and the edge of the vehicle. If it is not tangent, such as selecting a part that does not belong to the vehicle, then the machine may recognize the part selected by multiple frames as a vehicle during learning. , resulting in inaccurate or even wrong machine recognition; in addition, the same is true when selecting the attributes of the box. If it is originally a car, but it is marked as a truck, it is equivalent to telling the machine that the probability of this car being a truck is 100%. , When machine learning, use a more vivid sentence. At this time, the machine is confused, and it doesn’t know whether it is correct or not.

The reason why we need a lot of data is that the machine algorithm will summarize the high-dimensional features of these objects by itself during the learning of a large amount of data. When recognizing a new image, it can use the high-dimensional features summarized by itself to judge the new image. Each possible outcome is given a probability.

Face Recognition Image Annotation

Compared with the object recognition of objects, the recognition of portraits uses a different principle. Now the more widely used method is to locate multiple landmarks on the face, which is what we are doing now. Labeling, each point corresponds to a feature position, from the most basic five-point labeling to hundreds of points:

This is the 27-point annotation legend from the face key point recognition on CSND

Take the above picture as an example, we can see that the three points 1-3 correspond to the two ends and the center of the eyebrows respectively, and the points 7-11 correspond to the center of the upper and lower eyelids and the center of the eyeball at the left and right corners of the eyes respectively. The remaining points are the same as we can see in the picture and will not go into details.

What is clear is that each point represents a key point, which corresponds to a key position of the facial features, and when connected together, the facial features of a person are formed. At present, there are 240 face key point annotations with more points, which can include human face contour, lip shape, nose shape, eye contour, eyebrow contour, etc., forming a complete distribution map of face key points .

It is relatively cumbersome to mark the key points of portrait recognition. It is necessary to move the default point to each point in the corresponding position. However, it is precisely because this mark is a little more troublesome that the unit price of marking a face is relatively high.

In addition, if you need the principle of portrait recognition algorithm, here is the portal: https://blog.csdn.net/amds123/article/details/72742578

Medical Image Annotation

Image annotation is taken out separately because this field is medical. At present, medical image recognition is not very mature, and the entry threshold is relatively high. Annotators are also concentrated on full-time doctors.

It just so happens that we are doing medical treatment ourselves, so use our own data as an example:

Lumbar MRI data

This is an MRI of the lumbar spine. From the picture, we can see that T12, L2, and L3 show signs of compression fractures. The degree of T12 is relatively mild, while L2 and L3 show signs of more severe compression fractures. Therefore, T12 is marked as a mild compression fracture, and L2\L3 is a severe compression fracture, which are represented by boxes of different colors.

This kind of labeling is similar to the way of drawing frame labeling on vehicles, but because it involves more professional medical knowledge, the requirements for labeling accuracy are extremely high. If you make a mistake, it will cause serious consequences, so you usually choose on-the-job Doctors and postgraduates do it in their spare time. For some complex films, even these postgraduates who are interns in tertiary hospitals can’t do it. Therefore, the resources for labeling are very scarce, and the cost of labeling is relatively higher, but it is the next one after all. In the field of artificial intelligence, the competition is actually fierce. I don’t know which companies will be left after the smart medical industry has been reshuffled for a period of time.

Beep softly, the current smart medical care needs to be combined with the existing mature business model to realize its implementation. It is simply a fantasy to make something like a castle in the air and then directly apply it to the medical industry. Most of the current smart medical care In the initial stage, there is no mature business model and sufficient financial support for landing.

Industry evaluation image annotation

Some time ago, a reporter talked with me about labeling this industry, so let me also share my views on this industry here.

The labeling industry actually started relatively early. The earliest companies I have come into contact with have specialized in data processing since the last century. Now they process data from governments and universities in various countries. The professionalism of data processing makes me Jaw-dropping. But the line of data labeling really became popular when deep neural networks began to be applied in the artificial intelligence industry.

The learning principle of the deep neural network is to use a large amount of data to pile up the training, just like the principle of drawing the frame of the image I introduced earlier, the larger the amount of data, the more accurate the robot’s response.

Image Annotation Price Quotes

Therefore, in the past two years, a large number of teams and companies that do data collection and labeling have emerged in China. It can be roughly divided into the following categories:

Small teams : Mostly 5-20 to pick up a project together, basically exists in the form of part-time jobs, the quality of labeling is difficult to guarantee, usually pick up some projects that are collected and resold, and there are also those who recruit a group of part-time people from the Internet. Of course, this is also the part with the lowest income.

Small companies : Most of them exist in the form of 5-20 full-time employees + a group of part-time employees. The quality of labeling is uneven. Some labeling teams have good labeling quality, but some even find it difficult to test the label. These people are The main force of price reduction is mostly in fourth- and fifth-tier cities.

Small-scale data labeling companies : There are already 50-100 full-time teams, the labeling quality has been recognized by Party A, the income is relatively balanced, and generally there is a relatively fixed Party A and business.

Crowdsourcing companies : Undertake large-scale collection and labeling needs, have their own labeling platform and collection tools, and the quality platform will do better control. Big crowdsourcing platforms: Baidu Crowdsourcing, Totoro Crowdsourcing, JD Zhongzhi, etc., and some new annotation platforms will not go into details one by one.

Enterprise-owned platforms : JD.com, Baidu, Tencent, and Ali all have their own labeling platforms and tools, and collect and process data through internal personnel or some outsourced operations.

Differences in Data Labeling Quality

Generally speaking, large-scale companies will have a better grasp of the labeling quality.

In addition, the distribution of domestic labeling teams is also relatively concentrated. Small teams are mainly located in fourth- and fifth-tier cities in Henan, Hebei, and Hubei. They are characterized by low labor costs, and slightly larger teams are distributed throughout the country, such as Shanghai, Hangzhou, Chengdu, and Guiyang. There are many places such as Beijing, Tianjin, etc., which are characterized by a relatively good ratio of resources.

The prospect of the data labeling industry, to be honest, when I look at many teams now, I don’t see it very well. Now the industry’s labeling is mostly concentrated in low-end data labeling, such as the drawing of ordinary images, sound transcription, Simple labeling of text, etc. These are the basic data for the start of artificial intelligence. The characteristics of the presentation are that the barriers to entry are very low. A normal person can use a computer to label, but few teams can undertake things involving professional fields.

There is a more realistic problem: most of the teams now can only undertake this kind of labeling, especially the small labeling teams in the fourth and fifth tier cities. I once released an entity recognition labeling of medical records, and the rules are a bit complicated. , but in fact, you only need to memorize the rules, and then practice more. If you are serious, you can get started in about a week. But when I assigned this labeling task to some small teams, I found that they failed to master the rules after a week. The reason , or because they can’t calm down to study the rules and understand the rules repeatedly in the connection, so although the income of this label is more than 4 times their current label income, they still can’t do it.

In fact, these basic data annotations are gradually being replaced by machines. What is needed in the future must be professional data annotations, such as annotations of speech mixed with foreign languages, medical image recognition of images, and professional sentence annotations of texts.

So in the end, I still hope that our domestic labeling team will gradually start to accumulate resources in the professional direction. I am still very optimistic about crowdsourcing in the professional direction.