How Much Data Is Required for Machine Learning?

If you ask any data scientist how much data is required for machine learning, you’ll most likely hear either “It depends” or “The more, the better.” And the truth is, both answers are correct.

It really depends on the type of project you’re working on, and it’s always a good idea to have as many relevant and reliable examples in your datasets as you can get in order to obtain accurate results. But the question remains: how much is enough? And if there isn’t enough data, how can you deal with the shortage?

Our experience with various projects involving artificial intelligence (AI) and machine learning (ML) has allowed us at Postindustria to come up with the best ways of approaching the data quantity issue. This is what we’ll talk about in the article below.

Factors that influence the size of datasets you need

Each machine learning project has a set of specific factors that influence the size of the AI training datasets required for successful modeling. Here are the most essential of them.

The complexity of a model

Simply put, it’s the number of parameters that the algorithm should learn. The more features, size, and variability of the expected output it has to take into account, the more data you need to input. For example, say you want to train a model to predict housing prices. You are given a table where each row is a house, and the columns are the location, the neighborhood, the number of bedrooms, floors, bathrooms, etc., and the price. In this case, you train the model to predict prices based on changes in the variables in the columns. And to learn how each additional input feature influences the predicted price, you’ll need more data examples.

The complexity of the learning algorithm

More complex algorithms generally require a larger amount of data. If your project needs standard machine learning algorithms that use structured learning, a smaller amount of data will be enough. Even if you feed the algorithm more data than is sufficient, the results won’t improve drastically.

The situation is different when it comes to deep learning algorithms. Unlike traditional machine learning, deep learning doesn’t require feature engineering (i.e., constructing input values for the model to fit into) and is still able to learn the representation from raw data. Such algorithms work without a predefined structure and figure out all the parameters themselves. In this case, you’ll need more data that is relevant for the algorithm-generated categories.

Labeling needs

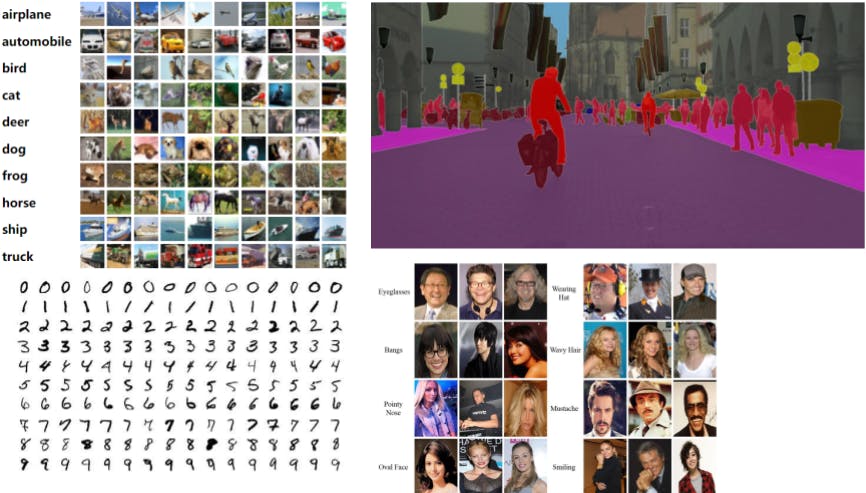

Depending on how many labels the algorithm has to predict, you may need different amounts of input data. For example, if you want to sort pictures of cats from pictures of dogs, the algorithm needs to learn some representations internally, and to do so, it converts input data into these representations. But if it’s only finding images of squares and triangles, the representations that the algorithm has to learn are simpler, so the amount of data it’ll require is much smaller.

Acceptable error margin

The type of project you’re working on is another factor that influences how much data you need, since different projects have different levels of tolerance for errors. For example, if your task is to predict the weather, the algorithm’s prediction can be off by some 10 or 20%. But when the algorithm has to tell whether or not a patient has cancer, an error may cost the patient their life. So you need more data to get more accurate results.

Input diversity

In some cases, algorithms should be taught to function in unpredictable situations. For example, when you develop an online virtual assistant, you naturally want it to understand what a visitor to a company’s website asks. But people don’t usually write perfectly correct sentences with standard requests. They may ask thousands of different questions, use different styles, make grammar mistakes, and so on. The more uncontrolled the environment is, the more data you need for your machine learning project.

Based on the factors above, you can define the size of the datasets you need to achieve good algorithm performance and reliable results. Now let’s dive deeper and find an answer to our main question: how much data is required for machine learning?

What is the optimal size of AI training datasets?

When planning a machine learning project, many worry that they don’t have a lot of data and that the results won’t be as reliable as they could be. But only a few actually know how much data is “too little,” “too much,” or “enough.”

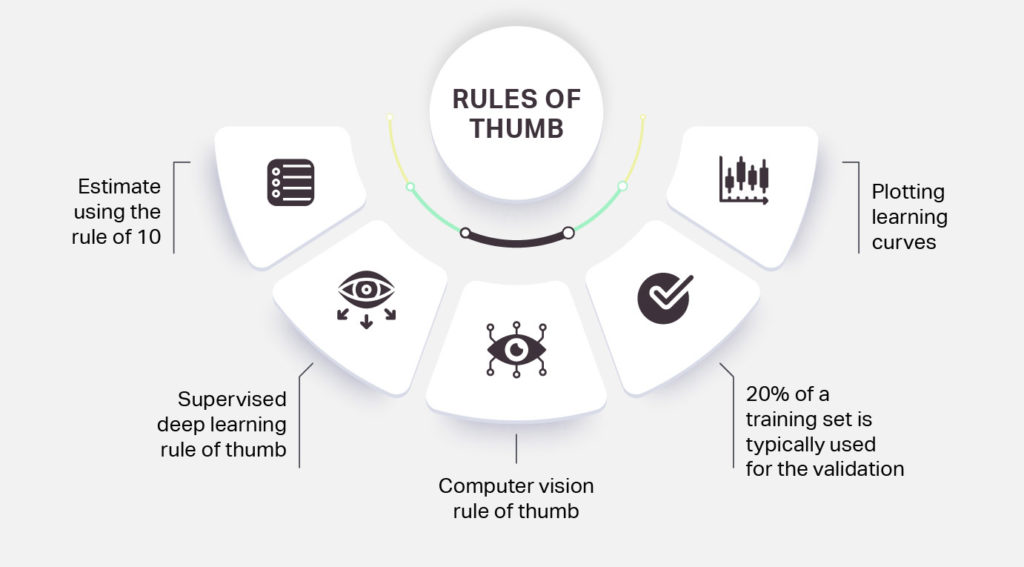

The most common way to define whether a dataset is sufficient is to apply the 10 times rule. This rule means that the amount of input data (i.e., the number of examples) should be ten times greater than the number of degrees of freedom a model has. Usually, degrees of freedom mean parameters in your dataset.

So, for example, if your algorithm distinguishes images of cats from images of dogs based on 1,000 parameters, you need 10,000 pictures to train the model.
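As a quick illustration of the arithmetic, here is a minimal sketch of the 10 times rule. The function name `min_examples_10x` and its `factor` argument are hypothetical, chosen only for this example; the rule itself is a rule of thumb, not a guarantee.

```python
def min_examples_10x(num_parameters: int, factor: int = 10) -> int:
    """Rough lower bound on training examples under the 10 times rule."""
    if num_parameters < 0:
        raise ValueError("num_parameters must be non-negative")
    return factor * num_parameters


# The cats-vs-dogs example from the text: 1,000 parameters.
print(min_examples_10x(1_000))  # 10000
```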

Although the 10 times rule in machine learning is quite popular, it only works for small models. Larger models don’t follow this rule, as the number of collected examples doesn’t necessarily reflect the actual amount of training data. In our case, we’ll need to count not only the number of rows but also the number of columns. The right approach is to multiply the number of images by the size of each image and by the number of color channels.
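The multiplication described above can be sketched as follows. The helper name `image_data_points` and the 64×64 RGB image size are assumptions made for illustration; they do not come from a specific project.

```python
def image_data_points(num_images: int, height: int, width: int, channels: int = 3) -> int:
    """Total number of values in an image dataset: images x pixels per image x channels."""
    return num_images * height * width * channels


# 10,000 images, assuming each is 64x64 pixels with 3 color channels (RGB).
print(image_data_points(10_000, 64, 64, 3))  # 122880000
```

The same 10,000 pictures look very different depending on whether you count examples or individual values, which is why the example count alone can mislead for larger models.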

You can use the rule as a rough estimate to get the project off the ground. But to figure out how much data is required to train a particular model within your specific project, you have to find a technical partner with relevant expertise and consult with them.

On top of that, you should always remember that AI models don’t study the data itself but rather the relationships and patterns behind the data. So it’s not only quantity that will influence the results, but also quality.

But what can you do if the datasets are scarce? There are a few strategies to deal with this issue.

How to deal with the lack of data

A lack of data makes it impossible to establish the relations between the input and output data, thus causing what’s known as “underfitting.” If you lack input data, you can either create synthetic datasets, augment the existing ones, or apply the knowledge and data generated earlier to a similar problem. Let’s review each case in more detail below.

Data augmentation

Data augmentation is a process of expanding an input dataset by slightly changing the existing (original) examples. It’s widely used for image segmentation and classification. Typical image alteration techniques include cropping, rotation, zooming, flipping, and color modifications.
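A minimal sketch of three of these alterations (flipping, rotation, cropping) is shown below. It assumes a grayscale image stored as a plain list of pixel rows; the function names are hypothetical, and a real project would more likely rely on an image library.

```python
from typing import List

Image = List[List[int]]  # grayscale image: a list of rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def augment(img: Image) -> List[Image]:
    """Return the original image plus three slightly altered copies."""
    h, w = len(img), len(img[0])
    return [
        img,
        flip_horizontal(img),
        rotate_90_clockwise(img),
        crop(img, 0, 0, max(1, h - 1), max(1, w - 1)),  # drop last row and column
    ]
```

One original example yields four training examples here, all sharing the original label.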

In general, data augmentation helps solve the problem of limited data by scaling the available datasets. Besides image classification, it can be used in a number of other cases. For example, here’s how data augmentation works in natural language processing (NLP):

- Back translation: translating the text from the original language into a target one and then from the target one back to the original

- Easy data augmentation (EDA): synonym replacement, random insertion, random swap, random deletion, and shuffling the sentence order to get new samples and exclude duplicates

- Contextualized word embeddings: training the algorithm to use a word in different contexts (e.g., when you need to understand whether “mouse” means an animal or a device)
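Some of the EDA operations listed above can be sketched in a few lines. This is an illustration only: the `SYNONYMS` table is a hypothetical toy stand-in for a real thesaurus, and the function names are invented for this example.

```python
import random

# Hypothetical toy synonym table; a real project would use a proper thesaurus.
SYNONYMS = {"quick": ["fast", "speedy"], "happy": ["glad", "cheerful"]}


def synonym_replacement(words, rng):
    """Replace every word that has an entry in the toy synonym table."""
    return [rng.choice(SYNONYMS[w]) if w in SYNONYMS else w for w in words]


def random_swap(words, rng):
    """Swap two randomly chosen positions."""
    if len(words) < 2:
        return list(words)
    i, j = rng.sample(range(len(words)), 2)
    out = list(words)
    out[i], out[j] = out[j], out[i]
    return out


def random_deletion(words, rng, p=0.2):
    """Drop each word with probability p, but never return an empty sentence."""
    if not words:
        return []
    kept = [w for w in words if rng.random() > p]
    return kept or [rng.choice(words)]


def eda_variants(sentence, n=5, seed=0):
    """Generate up to n new, unique variants of a sentence."""
    rng = random.Random(seed)
    words = sentence.split()
    original = " ".join(words)
    ops = [synonym_replacement, random_swap, random_deletion]
    variants = set()  # a set excludes duplicates
    for _ in range(n):
        candidate = " ".join(rng.choice(ops)(words, rng))
        if candidate != original:
            variants.add(candidate)
    return sorted(variants)
```

Calling `eda_variants("the quick dog is happy", n=10)` returns a list of altered sentences that excludes both the original and any repeats.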

Data augmentation adds more versatile data to the models, helps resolve class imbalance issues, and increases generalization ability. However, if the original dataset is biased, so will be the augmented data.

Synthetic data generation

Synthetic data generation in machine learning is sometimes considered a type of data augmentation, but these concepts are different. During augmentation, we change the qualities of data (e.g., blur or crop an image so we can have three images instead of one), while synthetic generation means creating new data with similar but not identical properties (e.g., creating new images of cats based on the previous images of cats).

During synthetic data generation, you can label the data right away and then generate it from the source, knowing exactly what data you’ll get, which is useful when not much data is available. When working with real datasets, however, you need to first collect the data and then label each example. The synthetic data generation approach is widely applied when developing AI-based healthcare and fintech solutions, since real-life data in these industries is subject to strict privacy laws.
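To illustrate the “label first, then generate” idea, here is a toy sketch that produces tiny binary images of squares and triangles. The shapes, the 8×8 canvas, and the function names are all assumptions made for this example; real synthetic pipelines are far more elaborate.

```python
import random


def draw_shape(label, size, extent, top, left):
    """Render a filled square or right triangle on a size x size grid of 0s."""
    image = [[0] * size for _ in range(size)]
    for r in range(extent):
        # A square fills the whole row; a right triangle fills r + 1 pixels.
        filled = extent if label == "square" else r + 1
        for c in range(filled):
            image[top + r][left + c] = 1
    return image


def generate_dataset(n, size=8, seed=0):
    """Create n (image, label) pairs; the label is chosen before the image exists."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.choice(["square", "triangle"])  # label is known up front
        extent = rng.randint(2, size // 2)          # random shape size
        top = rng.randint(0, size - extent)         # random position
        left = rng.randint(0, size - extent)
        data.append((draw_shape(label, size, extent, top, left), label))
    return data
```

Because every image is rendered from a label that was picked first, no manual annotation step is needed afterwards.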

At Postindustria, we also apply a synthetic data approach in machine learning. Our recent virtual jewelry try-on is a prime example of it. To develop a hand-tracking model that would work for various hand sizes, we’d need to get a sample of 50,000-100,000 hands. Since it would be unrealistic to get and label that many real images, we created them synthetically by drawing images of different hands in various positions in a special visualization program. This gave us the necessary datasets for training the algorithm to track the hand and make the ring fit the width of the finger.

While synthetic data may be a great solution for many projects, it has its flaws.

Synthetic data vs real data problem

One of the problems with synthetic data is that it can lead to results that have little application in solving real-life problems once real-life variables step in. For example, if you develop a virtual makeup try-on using photos of people with one skin color and then generate more synthetic data based on the existing samples, the app won’t work well on other skin colors. The result? The clients won’t be satisfied with the feature, so the app will cut the number of potential buyers instead of growing it.

Another issue with having predominantly synthetic data is that it produces biased outcomes. The bias can be inherited from the original sample or arise when other factors are overlooked. For example, if we take ten people with a certain health condition and create more data based on those cases to predict how many people out of 1,000 can develop the same condition, the generated data will be biased because the original sample is biased by the choice of number (ten).

Transfer learning

Transfer learning is another technique for solving the problem of limited data. This method is based on applying the knowledge gained while working on one task to a new, similar task. The idea of transfer learning is that you train a neural network on a particular dataset and then use the lower “frozen” layers as feature extractors. Then, the top layers are trained on other, more specific datasets. For example, say a model was trained to recognize photos of wild animals (e.g., lions, giraffes, bears, elephants, tigers). It can then extract features from further images to do more specific analysis and recognize animal species (i.e., it can be used to distinguish photos of lions from photos of tigers).

The transfer learning technique accelerates the training stage since it allows you to use the backbone network output as features in further stages. But it can be used only when the tasks are similar; otherwise, this method can hurt the effectiveness of the model.
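The frozen-layers idea can be sketched with a toy model in plain Python. Everything here is an assumption for illustration: `FROZEN_WEIGHTS` stands in for a pretrained backbone with made-up values, and the new top layer is a simple logistic regression rather than a real neural network head.

```python
import math

# Stand-in for a pretrained backbone: hypothetical fixed weights, never updated.
FROZEN_WEIGHTS = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]


def extract_features(x):
    """Frozen lower layers: a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def train_head(samples, labels, epochs=200, lr=0.5):
    """Train only the new top layer (logistic regression) on the frozen features."""
    feats = [extract_features(x) for x in samples]  # backbone output reused as features
    w, b = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            grad = 1.0 / (1.0 + math.exp(-z)) - y  # prediction minus target
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(x, w, b):
    """Classify a new input with the frozen backbone plus the trained head."""
    f = extract_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0
```

Only `w` and `b` change during training; the backbone weights stay untouched, which is what makes the training stage cheaper than learning every layer from scratch.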

Importance of quality data in healthcare projects

The availability of big data is one of the biggest drivers of machine learning advances, including in healthcare. The potential it brings to the domain is evidenced by some high-profile deals that closed over the past decade. In 2015, IBM purchased a company called Merge, which specialized in medical imaging software, for $1bn, acquiring huge amounts of medical imaging data for IBM. In 2018, the pharmaceutical giant Roche acquired a New York-based company focused on oncology, called Flatiron Health, for $2bn, to fuel data-driven personalized cancer care.

However, the availability of data itself is often not enough to successfully train a machine learning model for a medtech solution. The quality of data is of utmost importance in healthcare projects. Heterogeneous data types are a challenge to analyze in this field. Data from lab tests, medical images, vital signs, and genomics all come in different formats, making it hard to apply machine learning algorithms to all the data at once.

Another issue is the lack of widely accessible medical datasets. MIT, for instance, which is considered one of the pioneers in the field, claims to have the only substantially sized database of critical care health records that is publicly accessible. Its MIMIC database stores and analyzes health data from over 40,000 critical care patients. The data include demographics, laboratory tests, vital signs collected by patient-worn monitors (blood pressure, oxygen saturation, heart rate), medications, imaging data, and notes written by clinicians. Another solid dataset is the Truven Health Analytics database, which contains data from 230 million patients collected over 40 years based on insurance claims. However, it’s not publicly available.

Another problem is the small amount of data available for some diseases. Identifying disease subtypes with AI requires a sufficient amount of data for each subtype to train machine learning models. In some cases, the data are too scarce to train an algorithm. In these cases, scientists try to develop machine learning models that learn as much as possible from healthy patient data. We must use care, however, to make sure we don’t bias algorithms towards healthy patients.