Introduction:

As machine learning continues to weave its way into various aspects of our lives, privacy has taken center stage. Traditional centralized data processing and model training pose significant privacy risks, which has led to the emergence of innovative approaches such as Federated Learning. In this blog, we will delve into the growing significance of Federated Learning as a privacy-preserving paradigm and explore how it enables machine learning models to be trained across decentralized devices without exchanging raw data.

Understanding Federated Learning:

What is Federated Learning?

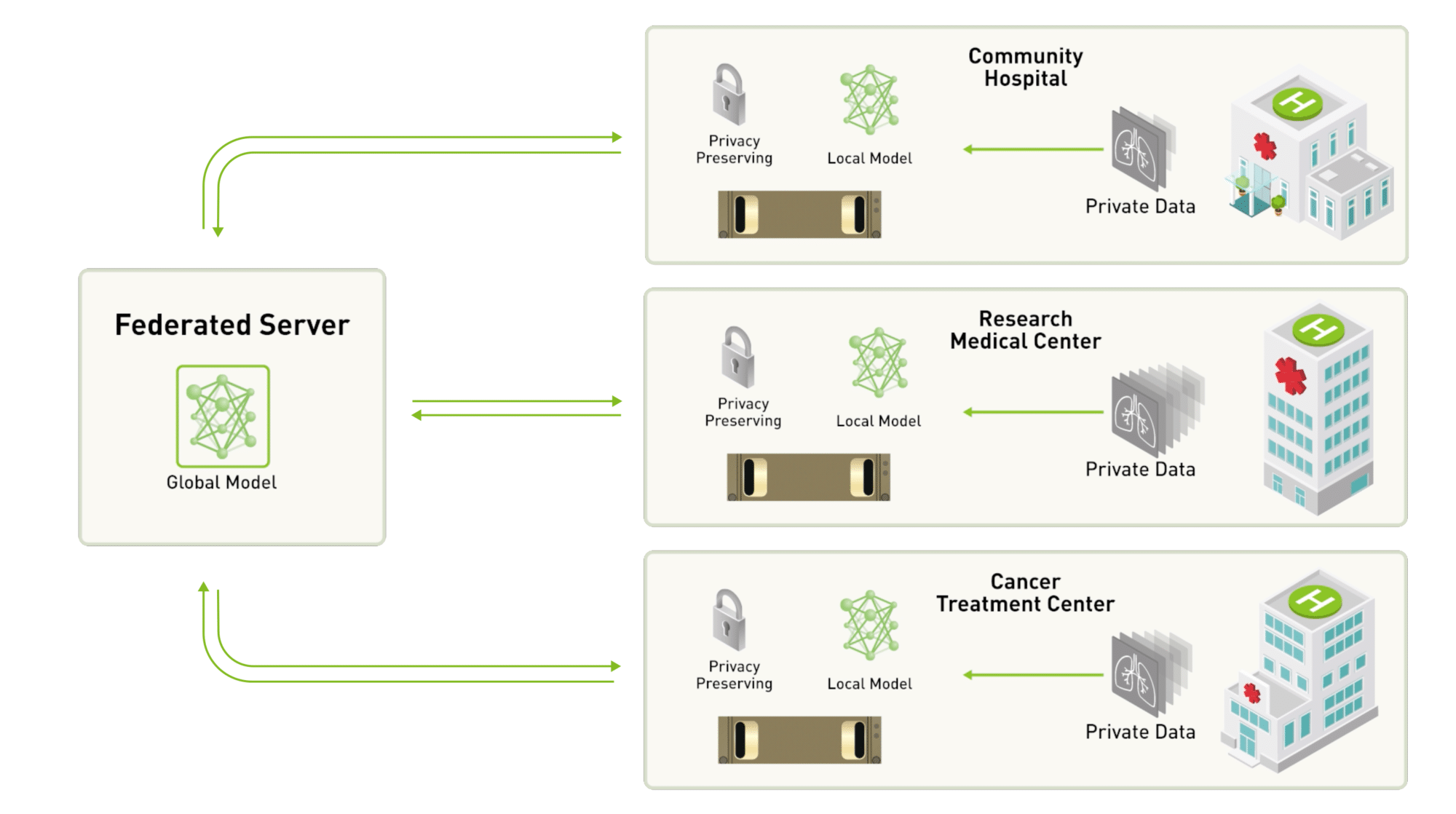

Federated Learning is a decentralized machine learning approach in which model training takes place across multiple devices or servers, each holding its own local data samples. Unlike conventional methods, where data is gathered on a central server, Federated Learning distributes the training process so that raw data never leaves the user’s device. This privacy-centric approach aims to harness the collective intelligence of many devices while preserving individual privacy.

Core Components of Federated Learning:

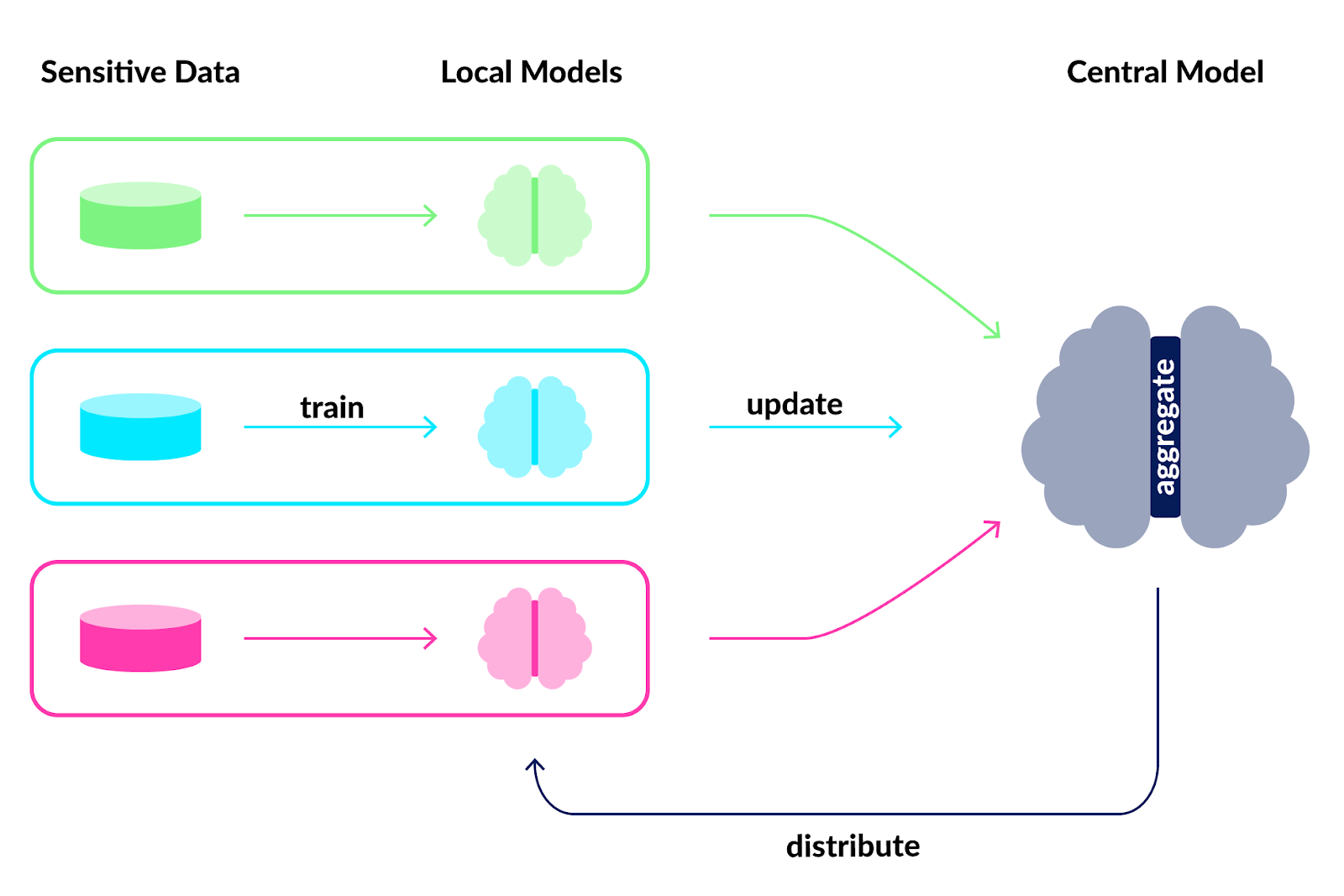

- Local Models: In Federated Learning, each device maintains a local model that is trained on its respective dataset. The local model’s parameters are updated iteratively using local data.

- Central Server: A central server coordinates the training process by aggregating the local model updates from participating devices. The server then computes a global model update that encapsulates knowledge from all devices.

- Model Aggregation: Model updates from local devices are combined into a global model, which is then shared with all devices. This global model reflects what was learned from all of the distributed datasets without any raw data being shared.
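To make the aggregation step concrete, here is a minimal sketch of Federated Averaging (FedAvg), a widely used aggregation rule in which each client's parameters are weighted by the number of local training examples it used. The function name `federated_average` and the client values are hypothetical, and real systems work with tensors rather than plain Python lists.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      num_samples: Sequence[int]) -> List[float]:
    """Combine client parameter vectors into one global parameter vector.

    Each client's parameters are weighted by its share of the total number
    of training examples, as in FedAvg.
    """
    if not client_weights or len(client_weights) != len(num_samples):
        raise ValueError("need one sample count per client")
    total = sum(num_samples)
    global_weights = [0.0] * len(client_weights[0])
    for weights, n in zip(client_weights, num_samples):
        for i, w in enumerate(weights):
            global_weights[i] += (n / total) * w
    return global_weights


# Three hypothetical clients, each holding a two-parameter model.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 30, 60]
print(federated_average(clients, sizes))
```

The client with 60 examples pulls the result strongly toward its own parameters, so the global model ends up near (4, 5) rather than the unweighted mean (3, 4).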

The Federated Learning Process:

- Initialization: The central server initializes a global model, which is then sent to all participating devices.

- Local Training: Each device trains the global model on its local data, generating a local model update based on its unique dataset.

- Model Update Transmission: Devices send only the model updates (not raw data) to the central server.

- Aggregation: The central server aggregates the received model updates to create an improved global model.

- Iteration: Local training, update transmission, and aggregation are repeated over many rounds, refining the global model with insights from all participating devices.
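The steps above can be sketched as a small, self-contained simulation. This is an illustrative toy under several assumptions: a one-feature linear model (y = w * x + b), three hypothetical clients whose data follow y = 2x + 1 over different x ranges, plain SGD for local training, and FedAvg for aggregation. The helper names are our own, not those of any particular framework.

```python
import random
from typing import List, Tuple

Model = Tuple[float, float]          # (w, b) for y = w * x + b
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a client


def local_train(model: Model, data: Dataset, lr: float = 0.05, epochs: int = 5) -> Model:
    """Local Training: a few epochs of SGD on squared error, using only local data."""
    w, b = model
    for _ in range(epochs):
        for x, y in data:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b


def federated_training(clients: List[Dataset], rounds: int = 100) -> Model:
    model: Model = (0.0, 0.0)                    # Initialization on the server
    total = sum(len(d) for d in clients)
    for _ in range(rounds):                      # Iteration
        # Local Training + Model Update Transmission: only (w, b) is sent back.
        updates = [local_train(model, d) for d in clients]
        # Aggregation: FedAvg, weighting each client by its number of examples.
        w = sum(len(d) / total * u[0] for d, u in zip(clients, updates))
        b = sum(len(d) / total * u[1] for d, u in zip(clients, updates))
        model = (w, b)
    return model


def make_client(lo: float, n: int = 20) -> Dataset:
    """Hypothetical client whose x values all fall in [lo, lo + 1]."""
    xs = [random.uniform(lo, lo + 1) for _ in range(n)]
    return [(x, 2 * x + 1) for x in xs]


random.seed(0)
clients = [make_client(lo) for lo in (0.0, 1.0, 2.0)]
w, b = federated_training(clients)
print(f"global model: w={w:.3f}, b={b:.3f}")
```

Only the pair `(w, b)` crosses the client/server boundary in each round; the `(x, y)` pairs stay inside their client's list. Because each client sees only a narrow slice of x, the averaged model benefits from all three, and it should end up close to w = 2, b = 1.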

Importance of Federated Learning in Preserving Privacy:

1. Data Privacy:

a. Personalized Learning:

Federated Learning allows models to be personalized to individual users without exposing their raw data. This ensures that sensitive information, such as user behavior or preferences, remains on the user’s device.
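One common way to personalize, sketched here under simple assumptions, is to start from the shared global model and fine-tune it for a few steps on the user's own data, keeping the resulting personalized model on the device. The linear model, the `personalize` helper, and the user data are all hypothetical.

```python
from typing import List, Tuple

Model = Tuple[float, float]  # (w, b) for y = w * x + b


def personalize(global_model: Model, local_data: List[Tuple[float, float]],
                lr: float = 0.05, steps: int = 300) -> Model:
    """Fine-tune a copy of the global model on one user's data; nothing is uploaded."""
    w, b = global_model
    for step in range(steps):
        x, y = local_data[step % len(local_data)]  # cycle through local examples
        error = (w * x + b) - y
        w -= lr * error * x
        b -= lr * error
    return w, b


global_model = (2.0, 1.0)                                  # shared by all users
user_data = [(x, 2.0 * x + 1.5) for x in (0.0, 0.5, 1.0)]  # this user's offset is 1.5
w, b = personalize(global_model, user_data)
print(f"personalized model: w={w:.2f}, b={b:.2f}")
```

The global model stays unchanged for everyone else, while this user's copy shifts toward their own offset of 1.5.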

b. Healthcare Applications:

In healthcare, Federated Learning enables hospitals, research institutions, and medical devices to train models collaboratively without centralizing sensitive patient data. This makes it possible to develop robust healthcare models while respecting patient privacy.

2. Security and Confidentiality:

a. Financial Services:

Financial institutions can employ Federated Learning to develop fraud detection models without centralizing transaction details. This protects the confidentiality of financial data while enhancing security measures.

b. Corporate Training:

Companies can leverage Federated Learning for employee training without sharing individual performance data centrally. This helps keep proprietary information secure while still improving overall training effectiveness.

3. Regulatory Compliance:

a. GDPR Compliance:

Federated Learning aligns with privacy regulations like the General Data Protection Regulation (GDPR) by minimizing the need to transfer personal data across networks. This data minimization can reduce the legal risks associated with data handling.

b. Education Sector:

In the education sector, Federated Learning supports compliance with student data protection laws. Schools and institutions can collaboratively improve educational models without compromising the privacy of student information.

4. Decentralized Nature:

a. Edge Devices:

Federated Learning is well-suited for edge computing environments, where data is generated on many devices with limited computational resources. Because training happens on the edge devices themselves, models can be improved without streaming raw data to a central server; devices only need to exchange model updates periodically.

b. Internet of Things (IoT):

IoT devices benefit from Federated Learning by participating in collaborative model training without compromising the privacy of the data they collect. This is particularly valuable in smart homes, healthcare wearables, and industrial IoT applications.

5. User Control:

a. Consent and Transparency:

Federated Learning empowers users by giving them more control over their data. Users can choose to participate or withdraw from the collaborative learning process, promoting transparency and informed consent.

b. Personal Devices:

Personal devices, such as smartphones, can contribute to Federated Learning while keeping user data on the device. This approach aligns with user expectations of privacy and control over personal information.

Real-World Applications of Federated Learning:

1. Predictive Text and Keyboard Suggestions:

Federated Learning is widely used on mobile devices to improve predictive text and keyboard suggestions. Local models on individual devices learn user-specific language patterns without sending raw text data to a central server.

2. Healthcare and Medical Research:

In healthcare, Federated Learning is applied to collaborative research without sharing patient data. Medical institutions can collectively improve diagnostic models, treatment recommendations, and drug discovery without compromising patient privacy.

3. Collaborative Traffic Prediction:

Federated Learning is employed in traffic prediction models for smart cities. By aggregating insights from various local models, traffic prediction can be enhanced without centralizing data from individual vehicles or sensors.

4. Financial Fraud Detection:

Financial institutions use Federated Learning to improve fraud detection models collaboratively. Each institution trains its local model on transaction data, contributing to a more robust global fraud detection system without sharing sensitive details.

5. Smart Home Devices:

Federated Learning is employed in smart home devices for personalizing user experiences. Local models on individual devices learn user preferences without transmitting data to a central server, ensuring privacy in smart home applications.

Challenges and Considerations:

1. Communication Overhead:

Federated Learning introduces communication overhead, because every round requires devices to download the global model and upload their updates, often over slow or metered connections. Efficient strategies for minimizing communication while maintaining model accuracy are essential.
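One family of strategies for reducing communication is update compression. The sketch below shows top-k sparsification, in which a client sends only the k largest-magnitude entries of its update as index/value pairs. The function names and the example update are illustrative assumptions, not a specific library's API.

```python
from typing import Dict, List, Sequence


def top_k_sparsify(update: Sequence[float], k: int) -> Dict[int, float]:
    """Keep only the k entries with the largest absolute value, as index -> value."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in ranked[:k]}


def densify(sparse: Dict[int, float], dim: int) -> List[float]:
    """Server side: rebuild a full-length update, treating missing entries as zero."""
    dense = [0.0] * dim
    for i, value in sparse.items():
        dense[i] = value
    return dense


update = [0.01, -0.8, 0.05, 0.3, -0.02, 0.6]
sent = top_k_sparsify(update, k=2)      # only 2 of 6 values are transmitted
print(sent)                              # {1: -0.8, 5: 0.6}
print(densify(sent, dim=len(update)))    # [0.0, -0.8, 0.0, 0.0, 0.0, 0.6]
```

Practical schemes often keep the dropped entries as a local residual and add them to the next round's update (error feedback), so small coordinates are delayed rather than lost permanently.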

2. Non-IID Data Distribution:

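Data on different devices is typically non-IID (not independent and identically distributed): each device reflects its own user's behavior, so local datasets can differ sharply in label mix and size. This can slow convergence and degrade the global model, and addressing it requires careful training and aggregation techniques to prevent bias.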
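A common way to simulate label-skewed, non-IID clients in experiments is to sort examples by label, cut them into shards, and give each client only a couple of shards. The sketch assumes a toy dataset of 1,000 labels spread evenly over 10 classes; `shard_partition` is a hypothetical helper.

```python
import random
from collections import Counter
from typing import List


def shard_partition(labels: List[int], num_clients: int,
                    shards_per_client: int, seed: int = 0) -> List[List[int]]:
    """Split example indices into label-sorted shards and deal them to clients."""
    rng = random.Random(seed)
    order = sorted(range(len(labels)), key=lambda i: labels[i])  # group by label
    num_shards = num_clients * shards_per_client
    shard_size = len(order) // num_shards
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    rng.shuffle(shards)  # random shard-to-client assignment
    return [[i for shard in shards[c * shards_per_client:(c + 1) * shards_per_client]
             for i in shard]
            for c in range(num_clients)]


labels = [i % 10 for i in range(1000)]  # toy data: 100 examples per class
clients = shard_partition(labels, num_clients=5, shards_per_client=2)
for c, indices in enumerate(clients):
    counts = Counter(labels[i] for i in indices)
    print(f"client {c}: class counts {dict(sorted(counts.items()))}")
```

With two shards per client, each client sees at most two classes, so a model trained on any single client's data is heavily biased toward those classes. This is exactly the situation the aggregation step has to cope with.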

3. Model Poisoning Attacks:

Adversarial attacks, such as model poisoning, pose a challenge in Federated Learning. Robust defenses against malicious contributions are crucial for maintaining the integrity of the global model.
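One simple defense against model poisoning is to replace the plain average with a robust statistic. The sketch below uses hypothetical updates to compare the coordinate-wise mean with the coordinate-wise median when a single malicious client submits an extreme update; it illustrates robust aggregation and is not a complete defense.

```python
import statistics
from typing import List, Sequence


def mean_aggregate(updates: Sequence[Sequence[float]]) -> List[float]:
    """Plain coordinate-wise average (FedAvg with equal client weights)."""
    return [statistics.fmean(col) for col in zip(*updates)]


def median_aggregate(updates: Sequence[Sequence[float]]) -> List[float]:
    """Coordinate-wise median: each parameter takes the middle client value."""
    return [statistics.median(col) for col in zip(*updates)]


honest = [[0.9, 1.1], [1.0, 1.0], [1.1, 0.9], [1.0, 1.05]]
poisoned = honest + [[100.0, -100.0]]  # one hypothetical malicious client

print(mean_aggregate(poisoned))
print(median_aggregate(poisoned))  # [1.0, 1.0]
```

The mean is dragged far away from the honest updates, while the median stays at 1.0 in both coordinates. Median-style rules only tolerate a minority of malicious clients and can still be evaded by more carefully crafted attacks.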

4. Differential Privacy:

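Model updates can still leak information about the data they were trained on, so keeping raw data on the device is not by itself a privacy guarantee. Differential privacy addresses this by bounding each participant's influence on the model, typically by clipping updates and adding calibrated noise, but applying it without sacrificing too much model accuracy is a complex task.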
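A minimal sketch of the usual recipe, similar in spirit to DP-FedAvg: each client update is clipped to a maximum L2 norm, the clipped updates are summed, and Gaussian noise proportional to that norm is added before averaging. The parameter names (`max_norm`, `noise_multiplier`) are our own, and turning a noise multiplier into a concrete (ε, δ) privacy guarantee requires a privacy accountant that is not shown here.

```python
import math
import random
from typing import List, Sequence


def clip_update(update: Sequence[float], max_norm: float) -> List[float]:
    """Scale an update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def dp_average(updates: Sequence[Sequence[float]], max_norm: float,
               noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip each update, add Gaussian noise to the sum, then average."""
    clipped = [clip_update(u, max_norm) for u in updates]
    sigma = noise_multiplier * max_norm  # noise scaled to one client's max influence
    noisy_sum = [sum(col) + rng.gauss(0.0, sigma) for col in zip(*clipped)]
    return [v / len(updates) for v in noisy_sum]


rng = random.Random(42)
updates = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
print(clip_update([3.0, 4.0], max_norm=1.0))
print(dp_average(updates, max_norm=1.0, noise_multiplier=1.0, rng=rng))
```

Clipping limits how much any single client can move the result, which is what makes a fixed amount of noise meaningful; larger `noise_multiplier` values give stronger privacy at the cost of a noisier global model.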

Future Prospects:

1. Advancements in Privacy-Preserving Techniques:

Ongoing research is focused on developing advanced privacy-preserving techniques within Federated Learning. This includes improved methods for secure aggregation, cryptographic techniques, and better protection against privacy attacks.
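Secure aggregation lets the server learn only the sum of client updates. Its core idea, pairwise masking, can be sketched as follows: each pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the sum. This toy assumes integer (quantized) updates, uses a single seeded random generator as a stand-in for pairwise key agreement, and ignores clients that drop out mid-round, which real protocols must handle.

```python
import random
from typing import Dict, List, Sequence

MODULUS = 2 ** 32  # all arithmetic is done modulo this value


def pairwise_masks(client_ids: List[str], dim: int, seed: int = 0) -> Dict[str, List[int]]:
    """For each pair (i, j) with i before j, i adds a shared random vector and j subtracts it."""
    rng = random.Random(seed)  # stand-in for a key agreement between each pair
    masks = {c: [0] * dim for c in client_ids}
    for pos, i in enumerate(client_ids):
        for j in client_ids[pos + 1:]:
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            masks[i] = [(m + s) % MODULUS for m, s in zip(masks[i], shared)]
            masks[j] = [(m - s) % MODULUS for m, s in zip(masks[j], shared)]
    return masks


def mask_update(update: Sequence[int], mask: Sequence[int]) -> List[int]:
    """Client side: what the server receives looks uniformly random on its own."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def server_sum(masked_updates: List[List[int]]) -> List[int]:
    """Server side: the pairwise masks cancel, leaving only the true sum."""
    return [sum(col) % MODULUS for col in zip(*masked_updates)]


updates = {"a": [5, 1, 7], "b": [2, 2, 2], "c": [0, 3, 4]}
ids = sorted(updates)
masks = pairwise_masks(ids, dim=3)
masked = [mask_update(updates[c], masks[c]) for c in ids]
print(server_sum(masked))  # [7, 6, 13]
```

The server never sees any individual client's update in the clear, yet the aggregate it computes is exactly the element-wise sum of all three.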

2. Standardization and Frameworks:

The development of standardized frameworks for Federated Learning will facilitate broader adoption. Industry-wide standards will streamline implementation, making it easier for organizations to incorporate Federated Learning into their machine learning workflows.

3. Interdisciplinary Collaboration:

Interdisciplinary collaboration between researchers, privacy experts, and industry professionals will play a crucial role in advancing Federated Learning. A collective effort is necessary to address challenges, enhance security, and unlock the full potential of this privacy-preserving paradigm.

4. Edge Computing Integration:

Federated Learning is expected to integrate seamlessly with edge computing environments. As edge devices become more powerful, the collaborative training of machine learning models on decentralized devices will become increasingly efficient and widespread.

Conclusion:

Federated Learning stands as a beacon of innovation in the quest for privacy-preserving machine learning. By distributing the model training process across decentralized devices, Federated Learning not only enhances privacy but also empowers users with control over their data. As industries embrace this transformative approach, the landscape of machine learning is evolving towards a future where innovation and privacy coexist harmoniously. Federated Learning is not just a technological advancement; it is a testament to the potential of collaborative, privacy-conscious approaches that prioritize the individual while driving progress in the field of machine learning.