When people ask me what I do for a living, I say that I work on machine learning and natural language processing in the field of text analytics. But that tends to earn a blank stare and an awkward sip of a drink before a switch to another topic. So I often just say, “I teach computers how to read.”

In the right circles, however, NLP and AI/ML have taken center stage. Amid the ongoing buzz over chatbots and digital assistants, a common discussion is how we can train computers to understand human communication.

This latest buzz makes life more enjoyable for those of us in this business. After all, these are challenges that we have been working on for years. But at the same time, we have to filter out the noise to understand what other people in the industry are doing and how our approaches compare with theirs.

Getting our hands dirty with Parsey McParseface

Google has been making progress in machine learning with its TensorFlow framework, and earlier this month announced the release of an open-source language understanding framework implemented in TensorFlow called SyntaxNet. As a demonstration of SyntaxNet's capabilities, Google released an English parser called Parsey McParseface. The announcement on the Google Research blog is an interesting read because it does a great job of explaining why this problem is so difficult, and so hard to get right.

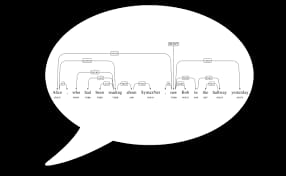

At this point, we have a sophisticated machine learning framework, an open-source language understanding framework, and an English parser with a humorous name. What can we do with all of this? Let’s get our hands dirty and take Parsey for a spin. I chose the “easy” route and downloaded a Docker image with SyntaxNet already built and ready to run. This gives me a container with everything Parsey needs. My first step was to run the example given in the documentation.

First, some background. Parsey McParseface is a syntactic parsing model released by Google as part of SyntaxNet. Here is a brief look at what it does, where a parser like it is useful, and where it falls short.

- Understanding Parsey McParseface: Parsey McParseface is a neural-network-based syntactic parser. It analyzes the grammatical structure of a sentence and determines the relationships between its words. Trained on annotated text, it labels each word's part of speech and the dependency relations between words.

- Applications of Parsey McParseface: A syntactic parser of this kind can feed downstream tasks such as machine translation, sentiment analysis, question answering, and information extraction, where an accurate parse helps a system interpret human language. Parsers are also used in linguistics and related research to study language structure and usage.

- Impact on NLP research and development: An open-source parser gives researchers and developers a ready-made tool for investigating linguistic phenomena and building language-processing applications, and a baseline for further neural-network-based parsing models.

- Limitations and future directions: Parsey McParseface is not without limitations. It can struggle with ambiguous sentences and with text that differs from its training data. Generalizing to other languages and domains remains an open research area, as does capturing semantic information alongside the syntactic parse.

With that background, back to the example from the documentation:

    echo 'Bob brought the pizza to Alice.' | syntaxnet/demo.sh

Piping each piece of text into a demo shell script is a bit clunky, but that is bound to get better over time. This command generates a boatload of output, and at the bottom I find the following:

    Input: Bob brought the pizza to Alice.
    Parse:
    brought VBD ROOT
     +-- Bob NNP nsubj
     +-- pizza NN dobj
     |   +-- the DT det
     +-- to IN prep
     |   +-- Alice NNP pobj
     +-- . . punct
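
To work with a parse like this programmatically, the indented tree can be read back into head/dependent pairs. The following is a minimal sketch, not part of SyntaxNet: `parse_tree` and `Node` are hypothetical names, and the code assumes the usual ASCII layout in which each child is introduced by `+--` and each nesting level is four columns wide.

```python
import re
from typing import List, NamedTuple, Optional


class Node(NamedTuple):
    word: str
    tag: str
    label: str
    depth: int
    head: Optional[int]  # index of the parent node, None for the root


# Matches the "+--" connector that introduces each child line.
_CONNECTOR = re.compile(r"\+--\s*")


def parse_tree(text: str) -> List[Node]:
    """Turn an indented ASCII dependency tree into a flat list of nodes."""
    nodes: List[Node] = []
    stack: List[int] = []  # stack[d] = index of the latest node seen at depth d
    for line in text.splitlines():
        if not line.strip():
            continue
        match = _CONNECTOR.search(line)
        if match is None:
            depth, rest = 0, line.strip()  # the root has no connector
        else:
            # Assumption: the first connector sits at column 1 and every
            # further nesting level adds 4 columns.
            depth = (match.start() - 1) // 4 + 1
            rest = line[match.end():].strip()
        word, tag, label = rest.split()
        head = stack[depth - 1] if depth > 0 else None
        nodes.append(Node(word, tag, label, depth, head))
        del stack[depth:]  # forget deeper nodes from earlier branches
        stack.append(len(nodes) - 1)
    return nodes


SAMPLE = """\
brought VBD ROOT
 +-- Bob NNP nsubj
 +-- pizza NN dobj
 |   +-- the DT det
 +-- to IN prep
 |   +-- Alice NNP pobj
 +-- . . punct
"""

for node in parse_tree(SAMPLE):
    parent = "-" if node.head is None else parse_tree(SAMPLE)[node.head].word
    print(f"{node.word:8} {node.tag:4} {node.label:6} head={parent}")
```

Keeping the result as a flat list with head indices makes it easy to answer questions such as “which word is the subject of the root verb?” without walking the text again.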

SyntaxNet is trained on the Penn Treebank, so the part-of-speech tags look familiar, and the tree shows us which parts of the sentence are related. Let’s compare that with Salience, because benchmarking against a new approach like this is an important part of the whole process.

In the Salience Demo app, I enter the same content and choose to view “Chunk Tagged Text”. This gives me Salience's part-of-speech tags as well as a similar grouping of related words called “chunks.” Salience produces the following output:

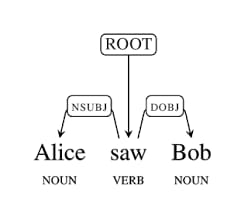

Before looking at the Salience result, it is worth spelling out what the Parsey McParseface parse of the example sentence tells us.

- Syntactic parsing of the sentence: The parser identifies the parts of speech: proper nouns (Bob, Alice), a noun (pizza), a verb (brought), and a preposition (to). It also establishes the dependencies between the words: “brought” is the root verb, “Bob” is its subject (nsubj), “pizza” is its direct object (dobj), and “Alice” is the object of the preposition “to” (pobj).

- Understanding the action and relationships: Bob is the agent performing the action of bringing, the pizza is the thing being brought, and the preposition “to” introduces the recipient, Alice. Together these dependencies describe the transfer of the pizza from Bob to Alice.

- Contextual interpretation: The parse describes structure, not meaning in context. Whether Bob was being generous or fulfilling a request is not something the syntax can tell us; that takes broader context.

- Implications and real-world scenarios: Syntactic structure of this kind can feed natural language understanding and machine translation systems. In practice, the same analysis could support tasks such as extracting relevant information from customer orders.

In short, the parse gives us the sentence's syntactic structure and dependencies, and interpretation is layered on top. For comparison, here is the Salience chunk-tagged output for the same sentence:

[Bob_NNP brought_VBN] [the_DT pizza_NN] [to Alice_NNP] [._.]
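
Chunk-tagged text in this bracketed `word_TAG` form is easy to read back into a data structure. The sketch below is only an illustration of the format shown above, not a Salience API: `parse_chunks` is a hypothetical helper, and it assumes that each chunk is wrapped in square brackets and that the tag follows the last underscore of each item.

```python
import re
from typing import List, Optional, Tuple

Token = Tuple[str, Optional[str]]  # (word, tag); tag is None when absent


def parse_chunks(text: str) -> List[List[Token]]:
    """Split "[word_TAG word_TAG] [...]" into chunks of (word, tag) pairs."""
    chunks: List[List[Token]] = []
    for body in re.findall(r"\[([^\]]*)\]", text):
        chunk: List[Token] = []
        for item in body.split():
            # Split on the LAST underscore so "._." becomes (".", ".").
            word, sep, tag = item.rpartition("_")
            # No underscore at all: keep the whole item as an untagged word.
            chunk.append((word, tag) if sep else (item, None))
        chunks.append(chunk)
    return chunks


SAMPLE = "[Bob_NNP brought_VBN] [the_DT pizza_NN] [._.]"
for chunk in parse_chunks(SAMPLE):
    print(chunk)
```

Unlike the dependency tree, this representation is flat: it records which words group together, but not which chunk depends on which.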

Most of the part-of-speech tags are the same, and the groupings look almost identical, though the notable piece missing from the Salience output is the dependency tree. Salience does use lightweight dependency parsing internally, for higher-level tasks such as sentiment analysis and entity extraction. But for practical purposes, it is a much more lightweight process. And that is another point to consider. When it runs, Parsey McParseface also reports the following:

    INFO:tensorflow:Processed 1 documents
    INFO:tensorflow:Total processed documents: 1
    INFO:tensorflow:num correct tokens: 0
    INFO:tensorflow:num tokens: 7
    INFO:tensorflow:Seconds elapsed in evaluation: 0.12, eval metric: 0.00%
    INFO:tensorflow:Processed 1 documents
    INFO:tensorflow:Total processed documents: 1
    INFO:tensorflow:num correct tokens: 1
    INFO:tensorflow:num tokens: 6
    INFO:tensorflow:Seconds elapsed in evaluation: 0.43, eval metric: 16.67%
    INFO:tensorflow:Read 1 documents

This tells us that in total, evaluating this sample sentence took 0.55 seconds (0.12 + 0.43 across the two passes). By comparison, Salience performs “text preparation” (tokenizing, part-of-speech tagging, and chunking) and produces POS-tagged output for the same sentence in 0.059 seconds.
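
The total can be pulled out of the log automatically rather than added up by hand. This is a rough sketch under one assumption: each pass logs a line containing the word “Seconds”, some label, a colon, and a number. The exact wording of the log line, and the helper name `total_seconds`, are illustrative only.

```python
import re

# Assumption: one timing line per pass, shaped like
# "... Seconds <label>: <float>, ..."; the label wording may differ.
_SECONDS = re.compile(r"Seconds[^:]*:\s*([0-9]+(?:\.[0-9]+)?)")


def total_seconds(log_text: str) -> float:
    """Sum the per-pass timings found in the log output."""
    return sum(float(m.group(1)) for m in _SECONDS.finditer(log_text))


LOG = """\
INFO:tensorflow:num tokens: 7
INFO:tensorflow:Seconds elapsed in evaluation: 0.12, eval metric: 0.00%
INFO:tensorflow:num tokens: 6
INFO:tensorflow:Seconds elapsed in evaluation: 0.43, eval metric: 16.67%
"""

parsey = total_seconds(LOG)  # sum of the two passes
salience = 0.059             # figure quoted in the text
print(f"Parsey: {parsey:.2f}s  Salience: {salience:.3f}s  "
      f"ratio: {parsey / salience:.1f}x")
```

On these figures Parsey takes roughly nine times as long as Salience for the single sentence, though a one-sentence run is dominated by startup cost and says little about sustained throughput.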

What can Parsey do with the Gettysburg Address?

Let’s look at another example, something with more meat on the bones. What happens when we process the text of the Gettysburg Address? Parsey splits the content into three “documents” and parses 271 words in 1.86 seconds. Salience processes the same content in 0.093 seconds.

At the end of the day, however, sample sentences and the Gettysburg Address are all well and good for learning. What we really want is to use this technology for specific purposes. Voice of Customer and Social Media Monitoring analytics are two major, and very challenging, applications of natural language processing. If you thought plain English was hard to parse, try Twitter!
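
Expressed as throughput, those two timings look like this. The snippet only restates the figures quoted above; `words_per_second` is a hypothetical helper.

```python
def words_per_second(words: int, seconds: float) -> float:
    """Throughput for a run that processed `words` words in `seconds`."""
    return words / seconds


WORDS = 271  # word count quoted in the text
timings = {"Parsey McParseface": 1.86, "Salience": 0.093}

for name, secs in timings.items():
    print(f"{name}: {words_per_second(WORDS, secs):,.0f} words/sec")

ratio = timings["Parsey McParseface"] / timings["Salience"]
print(f"Salience is about {ratio:.0f}x faster on this text")
```

The two systems do different amounts of work (Parsey produces a full dependency tree), so the ratio is a cost comparison rather than a like-for-like benchmark.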

A parser like Parsey McParseface can also be used to study the linguistic structure of well-known texts, and the Gettysburg Address, one of the most famous speeches in American history, is a natural candidate.

- Syntactic analysis of the Gettysburg Address: Parsing the speech yields parts of speech, dependencies between words, and the grammatical organization of each sentence. That output could be used to examine structural features such as parallel constructions and repetition.

- Identifying key themes and arguments: A parse does not identify themes by itself, but the syntactic relationships it exposes can support further analysis of the speech’s central ideas, such as unity, equality, and the sacrifices made during the Civil War.

- Rhetorical analysis and persuasive techniques: Sentence structures and grammatical patterns are the raw material for studying devices like parallelism and antithesis. Word-level effects such as alliteration and emotive language need analysis beyond syntax.

- Linguistic context and historical significance: Parsed output also makes it possible to compare the syntactic features of the speech with other speeches and writing of the period.

Still, this kind of study is a long way from the everyday text that Voice of Customer and social media analytics have to handle. Here is a tweet:

#MyOnePhoneCallGoesTo JAKE from @StateFarm because he's #HereToHelp 🐈

    Input: #MyOnePhoneCallGoesTo JAKE from @ StateFarm because he 's #HereToHelp
    Parse:
    JAKE NN ROOT
     +-- MyOnePhoneCallGoesTo NNP nn
     |   +-- # NN nn
     +-- from IN prep
     |   +-- StateFarm NNP pobj
     |       +-- @ NNP nn
     +-- HereToHelp NN dep
         +-- because IN mark
         +-- he PRP nsubj
         +-- 's VBZ cop
         +-- # NN nn

Performance-wise, Parsey McParseface parses this tweet in 0.32 seconds, but it has misinterpreted some of the Twitter-specific features such as hashtags and mentions. Salience processes this same content in 0.07 seconds:

[#MyOnePhoneCallGoesTo_HASHTAG] [JAKE_NNP] [from_IN] [@StateFarm_MENTION] [because_IN he_PRP] ['s_VBZ] [#HereToHelp_HASHTAG]
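
One way to keep hashtags and mentions intact is to recognize them before general tokenization, so that `#` and `@` are never split off as separate tokens. The sketch below illustrates that idea with a single regular expression; it is not how Salience or SyntaxNet work internally, and the `WORD` class and the `tokenize_tweet` name are assumptions made for this example.

```python
import re
from typing import List, Tuple

# HASHTAG and MENTION mirror the labels in the output above; WORD is a
# catch-all class used only in this sketch. Alternatives are tried in order,
# so "#tag" and "@name" win before "#" or "@" can match as punctuation.
_TOKEN = re.compile(
    r"(?P<HASHTAG>#\w+)"
    r"|(?P<MENTION>@\w+)"
    r"|(?P<WORD>\w+|['’]\w+|[^\w\s])"
)


def tokenize_tweet(text: str) -> List[Tuple[str, str]]:
    """Return (token, token_class) pairs, keeping #tags and @names whole."""
    return [(m.group(), m.lastgroup) for m in _TOKEN.finditer(text)]


TWEET = "#MyOnePhoneCallGoesTo JAKE from @StateFarm because he's #HereToHelp"
for token, kind in tokenize_tweet(TWEET):
    print(f"{kind:8} {token}")
```

Tokens classed as `WORD` could then be passed to a tagger or parser as usual, with the hashtags and mentions carried through as single, pre-labeled units.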

This is where the rubber really meets the road. A syntactic English parser is an integral part of any text analysis system. However, a lot needs to be built on top of it to realize its potential for high-level tasks such as entity extraction (including Twitter mentions and hashtags) and sentiment analysis. I found another article online that came to the same conclusion. In it, Matthew Honnibal gives a good analogy: a syntactic parser is a drill bit.

So a better parser is a better drill bit, but by itself, it does not give you oil. Having a better drill bit can improve the whole system, but it is one component that works together with the other pieces, each doing its own job.
