The Top 6 Business Advantages of the ETL Process

The ETL Process

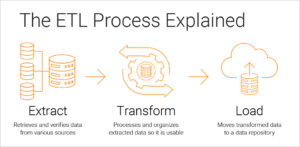

The ETL process, short for Extract, Transform, and Load, pulls data out of source systems, transforms it (cleaning, standardizing, and reshaping it), and loads it into a central repository such as a data warehouse, where it is available for downstream analysis. This is one way organizations move data from large operational databases into a cloud-based data warehouse whose architecture can be streamlined and adapted as business needs change.

Data lakes, data warehouses, and cloud platforms now make it possible to use storage effectively and economically while meeting scaled computing demands. Some of the more modern ETL systems use automation to speed up and simplify these processes.

Directly connecting users to data sources compels them to undertake all of the effort of connecting, processing, and understanding the raw data – a job that is beyond the capabilities of most business users and simply not practicable at an enterprise scale.

Hand-coding an ETL process to condense data, standardize data formats, and load it into a target system takes too long and can lead to a fragile nest of code on top of code. ETL has several advantages, including the capacity to extract data from multiple sources and, more recently, the ability to load data into a cloud data warehouse and transform it for analytics using the cloud’s power and scalability.

ETL Process Advantages

Here’s a rundown of some of the commercial advantages that this method provides:

- Database Migration

Many outsourcing and data-solutions providers rely on ETL for accurate analysis of claims or transactions. In this, its most common use, the process aggregates and highlights transactions from a given server, a cloud platform, or another resource so that stakeholders can examine the insights behind them in depth.

Traditionally, this method has been used to move data in full from legacy systems to modern warehouses. Many businesses also use it to collect and integrate data from external suppliers or partners, consolidating insights into their own performance and operations.

- Incorporate Change

The importance of digital transformation is already well established, and much of its value comes from the information contained in data: organizations that hold the most of it tend to lead. This is why companies in eCommerce, retail, manufacturing, supply chain, automotive, education, healthcare & wellness, finance & marketing, and banking are willing to spend heavily on web scraping for content from videos, social media, the internet of things (IoT), server logs, timestamps, and open or crowdsourced data, among other sources.

Easy access to these sources can provide a competitive advantage, opening up a wide range of possibilities for spotting breakthroughs that set a firm apart. Trends, technology upgrades, and innovations are all part of this change, which aims to increase productivity and reduce turnaround time.

- Data Warehousing

Data warehousing serves data mining goals: ETL takes over cleaning and moving data, freeing up resources and pushing the results through the analysis and strategy-making funnel. ETL tools can load and transform both structured and unstructured data into the cloud or Hadoop.

ETL also makes merging data for digital transformation much simpler. Cloud data can support the transformation of customer interactions, transactions, operational insights, BI platforms, and master data, helping to identify distinctive patterns that AI models can learn from.

- Data Access through Self-Service

Self-service data ingestion is the process of using a tool to retrieve chunks of data from several sources. It allows non-technical employees to connect to specific data at a given location, which they can access remotely for self-service analysis and intelligence building.

This is fast becoming a trend that improves a company’s or organization’s efficiency and agility. Because the tool handles routine data access, IT staff have more time to analyze data and dig for breakthroughs that benefit the company. This is how productivity grows and scalability increases.

- Analyze the Data Quality

ETL supports data quality work by cleansing, profiling, and auditing datasets to check their authenticity, validity, and value. Specialists can then integrate data while assessing the lineage and quality of the output.
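Profiling can start with very simple checks. The following Python sketch is a minimal illustration, not any particular tool’s method: the function name `profile_rows` is hypothetical, and it assumes records arrive as dictionaries with hashable values. It counts missing values per column and exact duplicate records in a batch.

```python
from collections import Counter
from typing import Any


def profile_rows(rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Summarize basic quality signals for a batch of records (hypothetical helper).

    Reports, per column, how many values are missing (None or empty string),
    plus the number of exact duplicate records in the batch.
    """
    columns = sorted({key for row in rows for key in row})
    missing = {
        col: sum(1 for row in rows if row.get(col) in (None, ""))
        for col in columns
    }
    # Represent each record by its sorted items so identical rows compare equal.
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    # Every copy beyond the first occurrence counts as a duplicate.
    duplicates = sum(n - 1 for n in counts.values() if n > 1)
    return {"row_count": len(rows), "missing": missing, "duplicates": duplicates}
```

For example, profiling `[{"id": 1, "email": "a@example.com"}, {"id": 2, "email": ""}, {"id": 1, "email": "a@example.com"}]` reports three rows, one missing email, and one duplicate record.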

- Make a Metadata diagram

Because data is drawn from external sources, its metadata provides a critical overview of data lineage. Managing and mining data is complicated enough that it is easy to lose track of what the original data represent, so the ETL process keeps track of how different kinds of data are used and how they relate to one another.
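One way to picture this bookkeeping is a small lineage record written alongside each load. The sketch below assumes a hypothetical `LineageRecord` structure with made-up field names and illustrative values; real ETL tools keep lineage in their own metadata stores and formats.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageRecord:
    """Hypothetical lineage entry; field names are illustrative only."""
    source: str                      # where the data was extracted from
    target: str                      # where the transformed data was loaded
    steps: list[str] = field(default_factory=list)
    rows_in: int = 0
    rows_out: int = 0
    loaded_at: str = ""

    def add_step(self, description: str) -> None:
        """Append a human-readable description of one transformation."""
        self.steps.append(description)

    def finish(self, rows_out: int) -> None:
        """Record the output row count and a UTC timestamp for the load."""
        self.rows_out = rows_out
        self.loaded_at = datetime.now(timezone.utc).isoformat()


# Illustrative values only; the table names are hypothetical.
record = LineageRecord(source="crm.customers", target="warehouse.dim_customer", rows_in=120)
record.add_step("dropped duplicate customer rows")
record.add_step("normalized email addresses")
record.finish(rows_out=116)
```

Comparing `rows_in` with `rows_out` and reading the list of steps later makes it easier to explain why a warehouse table differs from its source.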

- Make a visual flow

Modern ETL systems have a graphical user interface (GUI) that allows users to construct ETL procedures with little or no programming knowledge. Rather than wrangling with SQL, Python, or Bash scripts, stored procedures, and other technologies, users only have to set rules and map data flows using a drag-and-drop interface.

Being able to see each step between the source systems and the data warehouse gives users a better understanding of the rationale behind the data flow. These self-service tools also provide good collaboration options, allowing more people in the business to contribute to developing and maintaining the data warehouse.
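Behind a drag-and-drop canvas, a mapping rule is essentially a declaration of which source field feeds which target field and how it is converted. The Python sketch below imitates that idea with a plain dictionary; the `MAPPING` rules, the column names, and `apply_mapping` are hypothetical and do not reflect how any specific tool stores its flows.

```python
from typing import Any, Callable

# Hypothetical mapping rules: target column -> (source column, converter).
MAPPING: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "customer_id": ("CustID", int),
    "full_name": ("Name", str.strip),
    "country": ("Country", str.upper),
}


def apply_mapping(row: dict[str, Any], mapping=MAPPING) -> dict[str, Any]:
    """Build a target record by applying each rule to its source field."""
    return {target: convert(row[source]) for target, (source, convert) in mapping.items()}


print(apply_mapping({"CustID": "42", "Name": "  Ada Lovelace ", "Country": "uk"}))
```

Keeping the rules as data rather than code is what lets a tool display, edit, and validate them visually.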

ETL tools are designed for sophisticated data integration operations such as transferring data, creating a data warehouse, and combining data from numerous sources. They also provide information about the data they handle, assist with data governance, support data quality procedures, and help even inexperienced teams build and expand data warehouses.

Extract, transform, and load (ETL) is a process of copying data from one or more sources into a destination system while transforming the data to fit the destination system’s data model. The process is most often used to move data from operational databases and other source systems into a data warehouse or data mart, and it can also feed data lakes.

The ETL process typically consists of the following steps:

- Extract: The first step is to extract the data from the source system. This can be done using a variety of methods, such as exporting data from a database, copying files from a file system, or extracting data from a web service.

- Transform: The second step is to transform the data to fit the destination system’s data model. This may involve cleaning the data, removing duplicate records, or converting data types.

- Load: The third step is to load the data into the destination system. This can be done using a variety of methods, such as inserting data into a database, copying files to a file system, or loading data into a data lake.
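The three steps above can be sketched end to end in Python. This is a minimal illustration, assuming a small CSV export as the source and an in-memory SQLite database as the destination; the sample data and the function names `extract`, `transform`, and `load` are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export from an operational system.
SOURCE_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
2,bob,5.00
3,carol,
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw records from the CSV export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: convert types, drop duplicate orders, skip rows missing an amount."""
    seen: set[int] = set()
    clean = []
    for row in rows:
        order_id = int(row["order_id"])
        if order_id in seen or not row["amount"]:
            continue
        seen.add(order_id)
        clean.append((order_id, row["customer"].title(), float(row["amount"])))
    return clean


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Load: insert the cleaned records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
```

In this sample, the duplicate order 2 and order 3 (which has no amount) are filtered out during the transform step, so two rows reach the destination table.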

ETL is a common process in data warehousing and data integration. It is used to move data from a variety of sources into a single repository, where it can be analyzed and used for reporting and decision-making.

Here are some of the benefits of using ETL:

- Data consolidation: ETL can be used to consolidate data from multiple sources into a single repository. This makes it easier to manage and analyze the data.

- Data cleaning: ETL can be used to clean data, removing duplicate records, correcting errors, and filling in missing values. This ensures that the data is accurate and consistent.

- Data integration: ETL can be used to integrate data from multiple sources into a single data model. This makes it easier to use the data for reporting and decision-making.
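These three benefits often show up together. The sketch below assumes two hypothetical sources (`crm` and `billing`) that describe the same customers with different field names. It consolidates them into one record per email address, corrects a formatting error by normalizing emails, and fills a missing country first from the second source and then with a default value.

```python
from typing import Optional

# Two hypothetical sources describing the same customers with different field names.
crm = [
    {"Email": " Ada@Example.com ", "Name": "Ada Lovelace", "Country": None},
    {"Email": "alan@example.com", "Name": "Alan Turing", "Country": None},
]
billing = [
    {"email_address": "ada@example.com", "country_code": "UK"},
]


def normalize_email(value: str) -> str:
    """Correct a common formatting error: stray whitespace and mixed case."""
    return value.strip().lower()


def consolidate(crm_rows, billing_rows, default_country: str = "unknown"):
    """Merge both sources into a single data model keyed by email address."""
    merged: dict[str, dict[str, Optional[str]]] = {}
    for row in crm_rows:
        key = normalize_email(row["Email"])
        merged[key] = {"email": key, "name": row["Name"], "country": row["Country"]}
    for row in billing_rows:
        key = normalize_email(row["email_address"])
        record = merged.setdefault(key, {"email": key, "name": None, "country": None})
        # Fill a missing value from the second source instead of overwriting.
        if record["country"] is None:
            record["country"] = row["country_code"]
    for record in merged.values():
        if record["country"] is None:
            record["country"] = default_country
    return sorted(merged.values(), key=lambda r: r["email"])


customers = consolidate(crm, billing)
```

Here Ada’s missing country is filled from the billing source, while Alan, who has no country in either source, receives the default `"unknown"`.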

Here are some of the challenges of using ETL:

- Data complexity: ETL can be challenging to implement in complex data environments. This is because the data may be in different formats, or it may be stored in different systems.

- Data volume: ETL can be challenging to implement in data environments with large volumes of data, because each stage of the process must scale to handle that volume within the time available for a run.

- Data latency: ETL can introduce latency into the data pipeline. This is because the ETL process may need to be run periodically, which can delay the availability of the data.
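A common way to ease the volume and latency challenges is incremental extraction: each run pulls only the rows that changed since the previous run, tracked by a high-water mark. The Python sketch below assumes a hypothetical `events` table with an ISO 8601 `updated_at` column (so string comparison matches time order) and uses an in-memory SQLite database as the source.

```python
import sqlite3

# Hypothetical source table with an updated_at column used as a high-water mark.
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT, updated_at TEXT)")
src.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "a", "2024-01-01T00:00:00"),
        (2, "b", "2024-01-02T00:00:00"),
        (3, "c", "2024-01-03T00:00:00"),
    ],
)


def extract_since(conn: sqlite3.Connection, watermark: str, batch_size: int = 2):
    """Yield only rows changed after the watermark, in fixed-size batches."""
    cursor = conn.execute(
        "SELECT id, payload, updated_at FROM events "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    )
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield batch


watermark = "2024-01-01T00:00:00"  # value saved by the previous (hypothetical) run
batches = list(extract_since(src, watermark))
# Advance the watermark to the newest timestamp seen, for use by the next run.
if batches:
    watermark = batches[-1][-1][2]
```

Because each run reads only new rows in bounded batches, runs can be scheduled more often, which shortens (but does not remove) the delay before data becomes available. A strict `>` comparison can miss late-arriving rows that share the last timestamp, so production pipelines may need an overlap window or a change-data-capture feed instead.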

Overall, ETL is a valuable process for consolidating, cleaning, and integrating data from multiple sources. However, it is important to be aware of the challenges of ETL before implementing it in a data environment.

Here is a more detailed explanation of each step:

Extract: The extract step involves identifying the data that needs to be extracted from the source system. This may involve identifying specific tables, fields, or records. Once the data has been identified, it can be extracted using a variety of methods.

Transform: The transform step involves cleaning the data, removing duplicate records, or converting data types. This ensures that the data is in the correct format and that it is ready to be loaded into the destination system.

Load: The load step involves loading the data into the destination system. This may involve inserting data into a database, copying files to a file system, or loading data into a data lake.
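When loading into a database, it often helps to make the load idempotent, so that re-running a batch after a failure does not create duplicate records. The sketch below uses SQLite’s `INSERT ... ON CONFLICT ... DO UPDATE` (upsert) syntax, available in SQLite 3.24.0 and later; the `customers` table and the `upsert_customers` function are hypothetical.

```python
import sqlite3


def upsert_customers(conn: sqlite3.Connection, rows: list[tuple[int, str]]) -> None:
    """Load rows so that re-running the same batch does not create duplicates."""
    conn.executemany(
        """
        INSERT INTO customers (id, name) VALUES (?, ?)
        ON CONFLICT(id) DO UPDATE SET name = excluded.name
        """,
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
upsert_customers(conn, [(1, "Ada"), (2, "Alan")])
# A second load updates id 2 in place and adds id 3, rather than failing or duplicating.
upsert_customers(conn, [(2, "Alan Turing"), (3, "Grace")])
```

A plain `INSERT` would raise an integrity error on the second load because id 2 already exists; the upsert turns that conflict into an update.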

ETL can be complex and time-consuming to build. However, it is a valuable way to consolidate, clean, and integrate data from multiple sources.
