Different Ways to Perform Tokenization 2021


Want to start with Natural Language Processing (NLP)? Here is a good first step

Learn how to tokenize text, a key step in preparing data for building an NLP model

We present six different ways to tokenize text data.

Tokenization is a fundamental task in natural language processing (NLP) that involves breaking down text into smaller units called tokens. This article explores different methods and techniques employed for tokenization in 2021, showcasing advancements and their impact on text analysis tasks. By understanding these approaches, NLP practitioners can enhance their text processing pipelines.

Rule-Based Tokenization

Rule-based tokenization relies on predefined rules to segment text into tokens. These rules may consider punctuation, white spaces, or specific patterns. Regular expressions are commonly used to define these rules. While rule-based approaches are straightforward, they may not handle all tokenization scenarios accurately, especially when dealing with informal text or complex linguistic structures.

Statistical Tokenization

Statistical tokenization utilizes machine learning techniques to learn tokenization patterns from annotated data. This approach involves training models on large corpora, allowing them to predict token boundaries based on statistical patterns. Statistical tokenizers can handle various languages, adapt to different text domains, and improve accuracy by considering context and word frequencies.

Neural Network-Based Tokenization

Neural network-based tokenization leverages deep learning models, such as recurrent neural networks (RNNs) or transformers, to perform tokenization. These models learn token boundaries based on sequential or contextual information present in the text. Neural network-based approaches can handle complex tokenization cases and adapt to different languages and domains. They can capture contextual dependencies and are effective for tokenizing noisy or informal text.

Hybrid Approaches and Customization

Hybrid approaches combine multiple tokenization techniques to capitalize on their strengths. They may use rule-based methods as a starting point and apply statistical or neural network-based models to refine token boundaries. Furthermore, practitioners can customize tokenization by incorporating domain-specific dictionaries, handling specific abbreviations or tokenization challenges, and incorporating domain knowledge into the process.
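As a minimal sketch of the customization idea above, the snippet below starts from a plain whitespace rule and then consults a small dictionary of abbreviations that must stay whole. The `PROTECTED` set and the function name `tokenize_with_dictionary` are hypothetical, chosen only for illustration; a real domain dictionary would be much larger.

```python
import string

# Hypothetical domain dictionary: strings that must stay in one piece.
PROTECTED = {"Dr.", "e.g.", "U.S."}

def tokenize_with_dictionary(text, protected=PROTECTED):
    tokens = []
    for chunk in text.split():        # stage 1: whitespace rule
        if chunk in protected:        # stage 2: dictionary override
            tokens.append(chunk)
            continue
        # Otherwise peel trailing punctuation off into separate tokens.
        trailing = []
        while chunk and chunk[-1] in string.punctuation:
            trailing.append(chunk[-1])
            chunk = chunk[:-1]
        if chunk:
            tokens.append(chunk)
        tokens.extend(reversed(trailing))
    return tokens

print(tokenize_with_dictionary("Dr. Smith moved to the U.S. in 2019."))
# ['Dr.', 'Smith', 'moved', 'to', 'the', 'U.S.', 'in', '2019', '.']
```

Without the dictionary, the final periods of "Dr." and "U.S." would be split off just like the one that ends the sentence.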

In 2021, tokenization has seen advancements with rule-based, statistical, and neural network-based approaches. NLP practitioners can choose the most suitable method or utilize hybrid approaches to achieve accurate tokenization. Customization and adaptation to specific domains and languages further enhance tokenization’s effectiveness in various text analysis tasks, enabling more robust and precise NLP applications.

Introduction to Tokenization

Are you fascinated by the amount of text information available online? Looking for ways to work with this text data but don’t know where to start? Machines, of course, recognize numbers, not the letters of our language. And that can be a tricky place to navigate in machine learning.

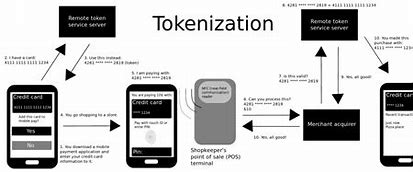

Tokenization is a fundamental process in natural language processing (NLP) that involves breaking down text into smaller units called tokens. In 2021, tokenization continues to play a crucial role in various NLP tasks, such as text analysis, sentiment analysis, machine translation, and information retrieval. This article provides an overview of tokenization techniques and their significance in the ever-evolving landscape of text processing.

The Importance of Tokenization

Tokenization serves as a foundational step in NLP, enabling the transformation of raw text into a structured format that can be processed by computers. By breaking text into tokens, NLP models can analyze, understand, and extract meaningful information from textual data. Tokenization allows for more accurate text analysis, improved language modeling, and efficient computation, as it reduces the complexity of working with raw text.

Rule-Based Tokenization

Rule-based tokenization relies on predefined rules to segment text into tokens. These rules may consider punctuation, whitespace, or specific patterns. Regular expressions are commonly used to define tokenization rules. While rule-based tokenization is straightforward and computationally efficient, it may struggle with handling irregular text patterns or informal language.
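To make this concrete, here is a small sketch of a rule-based tokenizer built on a single regular expression. The rule itself (a token is a run of word characters or one punctuation symbol) and the name `rule_based_tokenize` are assumptions made for this example, not a standard.

```python
import re

# One rule: a token is either a run of word characters or a single
# character that is neither a word character nor whitespace.
TOKEN_RULE = re.compile(r"\w+|[^\w\s]")

def rule_based_tokenize(text):
    return TOKEN_RULE.findall(text)

print(rule_based_tokenize("This is a cat."))
# ['This', 'is', 'a', 'cat', '.']

print(rule_based_tokenize("I don't know :)"))
# ['I', 'don', "'", 't', 'know', ':', ')']
```

The second call shows the weakness with informal language: the contraction and the emoticon are both broken into pieces that carry little meaning on their own.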

Statistical Tokenization

Statistical tokenization utilizes machine learning techniques to learn tokenization patterns from annotated data. These methods involve training models on large corpora to predict token boundaries based on statistical patterns. Statistical tokenizers can handle various languages, adapt to different text domains, and improve accuracy by considering contextual information and word frequencies.

Neural Network-Based Tokenization

Neural network-based tokenization employs deep learning models, such as recurrent neural networks (RNNs) or transformers, to perform tokenization. These models learn token boundaries based on sequential or contextual information present in the text. Neural network-based approaches excel in handling complex tokenization scenarios, adapting to different languages and domains, and capturing contextual dependencies effectively.

In 2021, tokenization remains a critical component of text processing in NLP. Rule-based, statistical, and neural network-based tokenization approaches offer various strengths and trade-offs. Choosing the appropriate tokenization method depends on the specific task and dataset. Understanding and implementing the right tokenization technique is essential for unlocking the full potential of NLP and driving advancements in text analysis.

So how can we control and refine this text data to create a model? The answer lies in the wonderful world of Natural Language Processing (NLP).

Solving an NLP problem is a multistep process. We need to clean the unstructured text data first before we even consider reaching the modeling stage. Cleaning the data involves a few important steps:

Tokenization

Predicting the part of speech of each token

Lemmatizing the text

Identifying and removing stop words, and more

In this article, we will talk about the first of these steps, tokenization. We will first see what tokenization is and why it is needed in NLP. We will then look at six different ways to perform tokenization in Python.

This article has no prerequisites. Anyone with an interest in NLP or data science will be able to follow along. If you are looking for an end-to-end course for NLP, you should check out our comprehensive course:

Natural Language Processing using Python

What is Tokenization in NLP?

Why is Tokenization required?

Different Ways to Perform Tokenization in Python

Tokenization using Python’s split() function

Tokenization using Regular Expressions

Tokenization using NLTK

Tokenization using spaCy

Tokenization using Keras

Tokenization using Gensim

What is Tokenization in NLP?

Tokenization is one of the most common tasks when it comes to working with text data. But what does the word ‘tokenization’ actually mean?

Tokenization splits a phrase, sentence, paragraph, or whole text document into smaller units, such as individual words or terms. Each of these smaller units is called a token.

The tokens could be words, numbers or punctuation marks. In tokenization, smaller units are created by locating word boundaries. Wait – what are word boundaries?

Word boundaries are the ending point of one word and the beginning of the next. Tokenization is considered the first step before stemming and lemmatization (the next stage in text preprocessing, which we will cover in the next article).
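One way to see word boundaries is to print where each word starts and ends as character offsets. This is only an illustrative sketch; it assumes that a "word" is a run of word characters (`\w+`), which is a simplification.

```python
import re

text = "This is a cat."

# Each match of \w+ is one word; start() and end() are its boundaries,
# expressed as character offsets into the original string.
spans = [(m.start(), m.end()) for m in re.finditer(r"\w+", text)]
print(spans)
# [(0, 4), (5, 7), (8, 9), (10, 13)]

# Slicing the text at those boundaries recovers the tokens.
print([text[start:end] for start, end in spans])
# ['This', 'is', 'a', 'cat']
```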

Does this sound difficult? Do not worry! The 21st century has made learning and knowledge easily accessible, and any Natural Language Processing course can help you learn these concepts.

Token production is a crucial step in natural language processing (NLP) that involves transforming raw text into discrete units known as tokens. This article explores the concept of token production, its significance in NLP, and the various techniques employed to generate tokens. By understanding this process, readers can gain insights into the fundamental building blocks of NLP analysis.

Defining Token Production

Token production, also known as tokenization, is the process of segmenting textual data into individual units called tokens. Tokens are typically words, but they can also represent phrases, characters, or subword units. Tokenization aims to break down text in a way that facilitates further analysis and processing in NLP tasks such as text classification, information retrieval, and machine translation.

Importance of Tokenization in NLP

Tokenization is essential in NLP because it transforms unstructured text into structured data that can be analyzed by machines. It forms the foundation for numerous downstream tasks by enabling efficient text processing, language modeling, and feature extraction. Token production facilitates accurate word counting, syntactic analysis, and statistical modeling, improving the overall performance of NLP systems.

Techniques for Token Production

Various techniques are employed for token production in NLP. Rule-based tokenization relies on predefined rules, such as punctuation marks or white spaces, to identify tokens. Statistical tokenization utilizes machine learning algorithms trained on annotated data to predict token boundaries based on statistical patterns. Neural network-based tokenization leverages deep learning models to learn token boundaries from sequential or contextual information present in the text.

Challenges and Considerations

Token production can present challenges in certain scenarios, such as handling informal language, unknown words, or ambiguous token boundaries. Abbreviations, compound words, and out-of-vocabulary words all require careful handling. Additionally, multilingual tokenization and domain-specific tokenization may require customized approaches to address specific challenges in different contexts.

Token production is a fundamental step in NLP, enabling the transformation of raw text into meaningful units for analysis. Through rule-based, statistical, or neural network-based techniques, tokenization facilitates accurate language processing, enhances NLP system performance, and enables various downstream tasks. Understanding the process of token production is vital for effective text analysis and unlocking the potential of NLP applications.

Why is Tokenization required in NLP?

I want you to think about the English language here. Pick up any sentence you can think of and hold that in your mind as you read this section. This will help you understand the importance of tokenization in a much easier manner.

Before processing a natural language, we need to identify the words that constitute a string of characters. That’s why tokenization is the most basic step to proceed with NLP (text data). This is important because the meaning of the text could easily be interpreted by analyzing the words present in the text.

Let’s take an example. Consider the below string:

“This is a cat.”

What do you think will happen after we perform tokenization on this string? We get [‘This’, ‘is’, ‘a’, ‘cat’].

There are numerous uses of doing this. We can use this tokenized form to:

Count the number of words in the text

Count the frequency of the word, that is, the number of times a particular word is present

And so on. We can extract a lot more information which we’ll discuss in detail in future articles. For now, it’s time to dive into the meat of this article – the different methods of performing tokenization in NLP.
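Before moving on, the two uses listed above (counting words and counting how often each word occurs) can be sketched in a few lines. The sample sentence is made up for this example, and tokens are produced with a plain whitespace split.

```python
from collections import Counter

text = "the cat sat on the mat and the dog sat too"
tokens = text.split()

word_count = len(tokens)         # total number of tokens
frequencies = Counter(tokens)    # occurrences of each distinct token

print(word_count)
# 11

print(frequencies.most_common(2))
# [('the', 3), ('sat', 2)]
```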

Tokenization is a critical step in natural language processing (NLP) that involves breaking down raw text into smaller units called tokens. This article explores the importance of tokenization in NLP, its relevance in 2021, and how it empowers text analysis tasks. Understanding the role of tokenization is essential for extracting meaningful insights from textual data.

Structuring Textual Data

Tokenization is required in NLP to structure textual data and convert it into a manageable format. By breaking down text into tokens, such as words, phrases, or subword units, tokenization provides a foundation for further analysis. It allows for efficient processing, simplifies language modeling, and enables various NLP tasks, including sentiment analysis, information extraction, and machine translation.

Enabling Language Understanding

Tokenization plays a vital role in facilitating language understanding in NLP models. Tokens serve as the input units for training machine learning algorithms, allowing models to learn the relationships between words, their context, and syntactic structures. Tokenization helps capture the meaning of words, identify parts of speech, and extract relevant features for accurate language processing.

Handling Text Complexity

Textual data often exhibits complexity through punctuation, abbreviations, compound words, and linguistic variations. Tokenization addresses these challenges by segmenting text into meaningful units. It helps handle sentence boundaries, punctuation marks, and special characters. Furthermore, tokenization aids in handling unknown or out-of-vocabulary words by creating separate tokens, enabling better coverage and understanding of diverse language patterns.
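Since models work with numbers rather than letters, tokens are usually mapped to integer ids, and words never seen before need somewhere to go. The sketch below shows one common convention, a reserved placeholder token; the name `<unk>` and the helper functions `build_vocab` and `encode` are assumptions for illustration, not something the article prescribes.

```python
UNK = "<unk>"  # hypothetical placeholder for out-of-vocabulary tokens

def build_vocab(tokens):
    """Assign each distinct token an integer id, reserving 0 for UNK."""
    vocab = {UNK: 0}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

def encode(tokens, vocab):
    """Replace each token with its id, falling back to the UNK id."""
    return [vocab.get(token, vocab[UNK]) for token in tokens]

vocab = build_vocab("this is a cat".split())
print(encode("this is a dog".split(), vocab))
# [1, 2, 3, 0]
```

Here "dog" was not in the text used to build the vocabulary, so it is encoded with the id reserved for unknown tokens.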

Advancements in Tokenization

In 2021, tokenization techniques continue to evolve across rule-based approaches, statistical models, and neural network-based methods. Rule-based tokenization utilizes predefined patterns to split text, while statistical models learn tokenization patterns from annotated data. Neural network-based approaches leverage deep learning models to capture contextual information for accurate tokenization. These advancements enhance the accuracy and adaptability of tokenization, enabling better analysis of various text types and domains.

Tokenization is a critical component of NLP, enabling structured text analysis and language understanding. In 2021, tokenization continues to be indispensable for empowering various text analysis tasks. By breaking down text into tokens, tokenization facilitates efficient processing, enhances language modeling, and enables the extraction of meaningful insights from textual data, paving the way for advancements in NLP applications.

Methods to Perform Tokenization in Python

We are going to look at six unique ways we can perform tokenization on text data. I have provided the Python code for each method so you can follow along on your own machine.

1. Tokenization using Python’s split() function

Let’s start with the split() method as it is the most basic one. It returns a list of strings after breaking the given string at the specified separator. By default, split() breaks a string at each run of whitespace. We can change the separator to anything.
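A short sketch of both uses follows: word tokens with the default separator, and rough sentence tokens with an explicit one. The sample text is made up for this example.

```python
text = "Tokenization breaks text into tokens. Each token is a unit of meaning."

# Word tokens: with no argument, split() cuts on any run of whitespace.
words = text.split()
print(words)
# ['Tokenization', 'breaks', 'text', 'into', 'tokens.', 'Each', 'token',
#  'is', 'a', 'unit', 'of', 'meaning.']

# Sentence tokens: pass an explicit separator instead.
sentences = text.split(". ")
print(sentences)
# ['Tokenization breaks text into tokens', 'Each token is a unit of meaning.']
```

Note that punctuation stays attached to the neighbouring word ('tokens.' and 'meaning.'), and that split() accepts only one separator at a time. These are the main drawbacks of this method.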

Tokenization is a crucial step in natural language processing (NLP) that involves breaking down text into individual tokens. Python, with its rich ecosystem of libraries, offers several methods for performing tokenization. This article explores various tokenization techniques in Python, highlighting their features, implementation, and use cases to empower text processing and analysis.

NLTK Library

The Natural Language Toolkit (NLTK) is a popular Python library for NLP tasks, including tokenization. NLTK provides various tokenization methods, such as word tokenization, sentence tokenization, and regular expression-based tokenization. It offers pre-trained tokenizers for different languages and enables customization to handle specific requirements. NLTK’s tokenization methods are widely used and provide a solid foundation for text analysis in Python.

spaCy Library

spaCy is a powerful Python library known for its efficient and fast NLP capabilities. It includes a tokenization module that provides high-performance tokenization algorithms. spaCy’s tokenizers handle sentence segmentation, word tokenization, and other tokenization tasks seamlessly. It also incorporates advanced linguistic features, such as part-of-speech tagging and named entity recognition, making it a comprehensive tool for text analysis.

Tokenization with Regular Expressions

Python’s built-in regular expression (regex) module allows for flexible and customizable tokenization using pattern matching. Regular expressions can define rules to split text based on specific patterns, such as whitespace, punctuation, or special characters. Regex-based tokenization provides fine-grained control over the tokenization process and is useful when dealing with specialized tokenization requirements or non-standard text formats.
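As a small sketch using the standard `re` module, the snippet below extracts word tokens with `re.findall()` and sentence tokens with `re.split()`. Both patterns are illustrative assumptions and would need tuning for real text.

```python
import re

text = "Hello there! How are you? I am fine."

# Word tokens: keep runs of letters, digits, underscores, and apostrophes.
words = re.findall(r"[\w']+", text)
print(words)
# ['Hello', 'there', 'How', 'are', 'you', 'I', 'am', 'fine']

# Sentence tokens: split on whitespace that follows ., ! or ?
sentences = re.split(r"(?<=[.!?])\s+", text)
print(sentences)
# ['Hello there!', 'How are you?', 'I am fine.']
```

Unlike split(), a single pattern can treat several separators at once, which is why the sentence split works for all three end marks.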

Customized Tokenization

Python enables developers to implement customized tokenization approaches tailored to specific needs. By leveraging string manipulation, pattern matching, or external libraries, developers can design tokenization methods suited for domain-specific tasks or unique data formats. Custom tokenization is particularly useful when dealing with unconventional text structures or when existing tokenization libraries do not meet specific requirements.

Python offers a rich set of tools and libraries for tokenization, empowering text processing and analysis in NLP. NLTK and spaCy provide comprehensive and efficient tokenization capabilities, while regular expressions and customized approaches offer flexibility and control. By leveraging these methods, Python developers can extract valuable insights from textual data, enabling a wide range of NLP applications.
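For instance, social media text contains URLs, hashtags, and mentions that a generic tokenizer would break apart. The sketch below is one possible custom tokenizer for that kind of text; the pattern and the name `custom_tokenize` are hypothetical and cover only the cases named in the comments.

```python
import re

# Alternatives are tried left to right, so the specialised patterns
# must come before the generic word pattern.
CUSTOM_PATTERN = re.compile(
    r"https?://\S+"      # URLs, up to the next whitespace
    r"|[@#]\w+"          # @mentions and #hashtags
    r"|\w+(?:'\w+)?"     # words, with an optional apostrophe part
    r"|[^\w\s]"          # any other single symbol
)

def custom_tokenize(text):
    return CUSTOM_PATTERN.findall(text)

print(custom_tokenize("Don't miss #NLP tips from @alice at https://example.com/nlp !"))
# ["Don't", 'miss', '#NLP', 'tips', 'from', '@alice', 'at',
#  'https://example.com/nlp', '!']
```

One limitation of this sketch is that punctuation written directly after a URL, with no space, would be absorbed into the URL token.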
