In artificial intelligence and machine learning, data is the foundation on which algorithms and models are built. Raw data on its own, however, lacks the context and organization that machines need to learn from it. Data labeling bridges that gap between raw data and actionable intelligence. In this guide, we look at what data labeling is and explore its significance, methodologies, challenges, and future implications.

Understanding Data Labeling

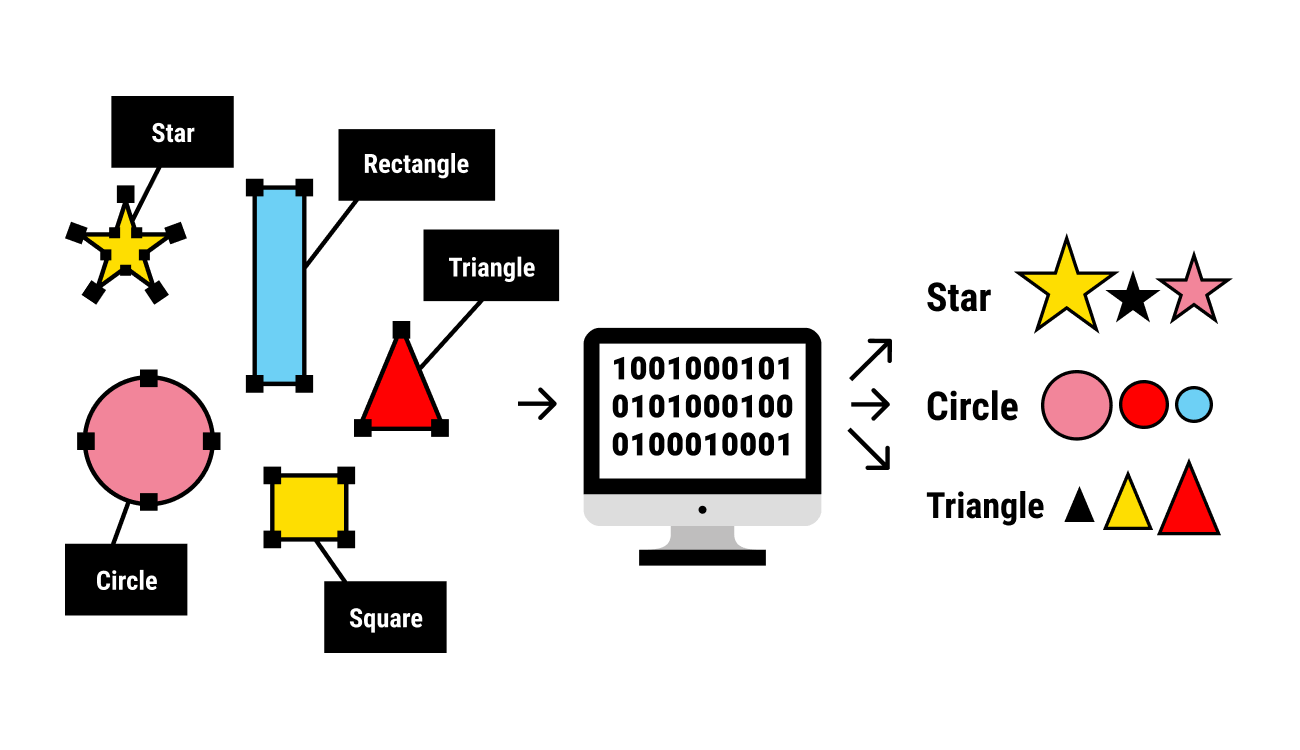

At its core, data labeling is the process of annotating or tagging data with relevant metadata or labels so that machines can learn to recognize patterns, classify information, and make informed decisions. It is a fundamental step in supervised learning, where algorithms are trained on labeled datasets so that they can generalize from the examples and predict outcomes for new inputs. From image recognition and natural language processing to autonomous driving and healthcare diagnostics, data labeling underpins a wide range of AI applications.

Significance of Data Labeling

The significance of data labeling cannot be overstated, as it directly influences the performance and reliability of machine learning models. Here’s why data labeling holds immense importance:

- Enhanced Model Accuracy: Labeled datasets provide supervised learning algorithms with ground truth annotations, facilitating the development of accurate predictive models.

- Improved Generalization: Models trained on diverse, well-labeled data generalize better and make more accurate predictions on unseen instances.

- Domain-Specific Insights: Data labeling enables the extraction of domain-specific insights, aiding organizations in making informed decisions and gaining a competitive edge.

- Quality Control: Through meticulous labeling and annotation, data quality issues such as noise, bias, and inconsistencies can be identified and rectified, ensuring robust model performance.

- Ethical Considerations: Proper labeling also plays a crucial role in addressing ethical concerns, such as bias mitigation and fairness in AI systems, thereby fostering trust and accountability.

Methodologies of Data Labeling

Data labeling encompasses a diverse array of methodologies, each tailored to suit the unique characteristics and requirements of different datasets and applications. Some common methodologies include:

- Manual Labeling: Human annotators manually assign labels to data points based on predefined criteria. While labor-intensive, manual labeling offers high accuracy and flexibility, making it ideal for complex tasks requiring human judgment.

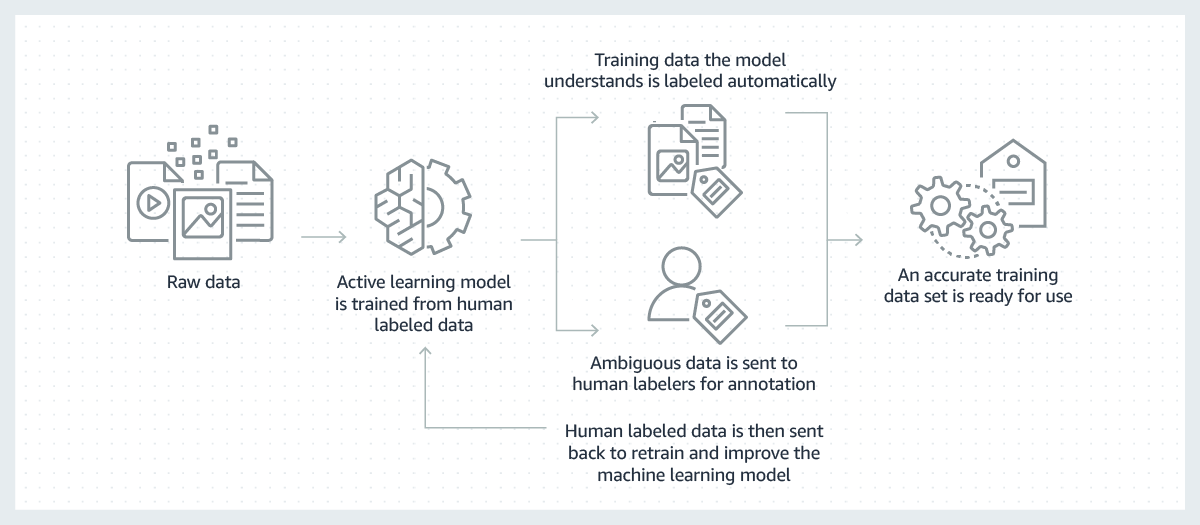

- Semi-Supervised Labeling: In this approach, a portion of the dataset is labeled manually, while the remaining data is annotated using automated techniques such as clustering or labels predicted by a model trained on the manually labeled portion. Semi-supervised labeling strikes a balance between efficiency and accuracy, leveraging human expertise where necessary while minimizing manual effort.

- Active Learning: Active learning frameworks select the most informative data points for human annotation, so that each new label improves the model as much as possible. By prioritizing instances the current model is uncertain about, active learning reduces the number of labels needed to reach a given level of model performance.

- Crowdsourcing: Crowdsourcing platforms enable organizations to harness the collective intelligence of a distributed workforce for data labeling tasks. While cost-effective and scalable, crowdsourcing may introduce quality control challenges, necessitating robust validation mechanisms.

- Transfer Learning: Transfer learning leverages pre-trained models or existing labeled datasets to bootstrap the labeling process for new tasks or domains. By transferring knowledge from related tasks, transfer learning accelerates model development and reduces the labeling burden.
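
To make the active learning idea above concrete, here is a minimal sketch of uncertainty sampling, one common selection strategy. It assumes a model has already produced class probabilities for a pool of unlabeled items; the item ids, the probabilities, and the function names `entropy` and `select_for_labeling` are hypothetical and chosen only for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Return the ids of the `budget` most uncertain items, most uncertain first."""
    ranked = sorted(
        predictions,
        key=lambda item_id: entropy(predictions[item_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs: item id -> predicted class probabilities.
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident prediction, low priority
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # nearly uniform, highest priority
}

queue = select_for_labeling(predictions, budget=2)
print(queue)  # ['img_004', 'img_002']
```

In a real pipeline, the selected items would be sent to annotators, the model retrained on the enlarged labeled set, and the selection repeated. Entropy is only one possible uncertainty measure; margin-based or least-confidence scores are common alternatives.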

Challenges and Considerations

Despite its inherent benefits, data labeling poses several challenges and considerations that warrant attention:

- Labeling Bias: Human annotators may introduce bias into labeled datasets, leading to skewed model predictions and ethical concerns. Mitigating labeling bias requires diverse annotator pools, rigorous validation protocols, and algorithmic fairness techniques.

- Scalability: Scaling data labeling operations to handle large volumes of data poses logistical and cost challenges. Automation, crowdsourcing, and efficient labeling strategies are essential for addressing scalability concerns without compromising quality.

- Annotation Consistency: Ensuring consistency across annotations is crucial for training reliable machine learning models. Standardized annotation guidelines, inter-annotator agreement metrics, and quality assurance protocols help maintain annotation consistency.

- Data Privacy and Security: Data labeling often involves sensitive information, necessitating robust privacy and security measures to safeguard against unauthorized access, breaches, and misuse. Compliance with data protection regulations such as GDPR and CCPA is paramount.

- Domain Expertise: Certain labeling tasks require domain-specific knowledge and expertise to accurately annotate data. Collaborating with subject matter experts and domain specialists ensures the quality and relevance of labeled datasets.
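
As an illustration of the inter-annotator agreement metrics mentioned above, the following sketch computes Cohen's kappa for two annotators who labeled the same items. Kappa compares the observed agreement with the agreement expected by chance given each annotator's label frequencies. The annotator label lists are made-up example data, and the function name `cohens_kappa` is our own.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)

    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used one and the same label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels from two annotators for the same eight images.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(kappa)  # 0.5
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance. Here the annotators agree on 6 of 8 items (0.75), chance agreement is 0.5, so kappa is 0.5. A low value is usually a signal to revisit the annotation guidelines before labeling more data.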

Future Implications and Trends

As AI continues to evolve, data labeling will play an increasingly pivotal role in shaping its trajectory. Several emerging trends and advancements are poised to influence the future of data labeling:

- Automated Labeling Technologies: Advancements in computer vision, natural language processing, and machine learning are driving the development of automated labeling technologies, reducing the reliance on manual annotation and accelerating the labeling process.

- Federated Learning: Federated learning frameworks enable collaborative model training across distributed data sources while preserving data privacy. Federated labeling techniques extend this paradigm to data labeling, allowing organizations to collectively label data without centralizing sensitive information.

- Ethical Labeling Frameworks: The integration of ethical principles and guidelines into data labeling practices is gaining traction, fostering transparency, fairness, and accountability in AI systems. Ethical labeling frameworks encompass considerations such as bias mitigation, privacy preservation, and stakeholder engagement.

- Meta-Learning and Few-Shot Learning: Meta-learning and few-shot learning techniques empower models to generalize from limited labeled data, reducing the labeling requirements for training AI systems. By leveraging meta-knowledge and prior experience, meta-learning algorithms adapt rapidly to new tasks and domains.

- Blockchain-enabled Labeling Platforms: Blockchain technology offers decentralized and tamper-resistant record keeping for data labeling, which can enhance transparency, auditability, and trust in labeling processes. By recording who labeled what and when, blockchain-enabled labeling platforms can support the integrity and traceability of labeled datasets and help mitigate concerns about data manipulation and fraud, although they do not by themselves verify that a label is correct.

In conclusion, data labeling serves as the cornerstone of AI development, facilitating the transformation of raw data into actionable insights and intelligent systems. By adopting diverse methodologies, addressing inherent challenges, and keeping pace with emerging trends, organizations can harness the full potential of data labeling to drive innovation, enhance decision-making, and shape a future powered by AI.

As we embark on this transformative journey, the role of data labeling will only continue to evolve, shaping the trajectory of artificial intelligence and its impact on society. Through collaboration, innovation, and ethical stewardship, we can harness the power of data labeling to build a future where AI empowers human potential and augments our collective intelligence.