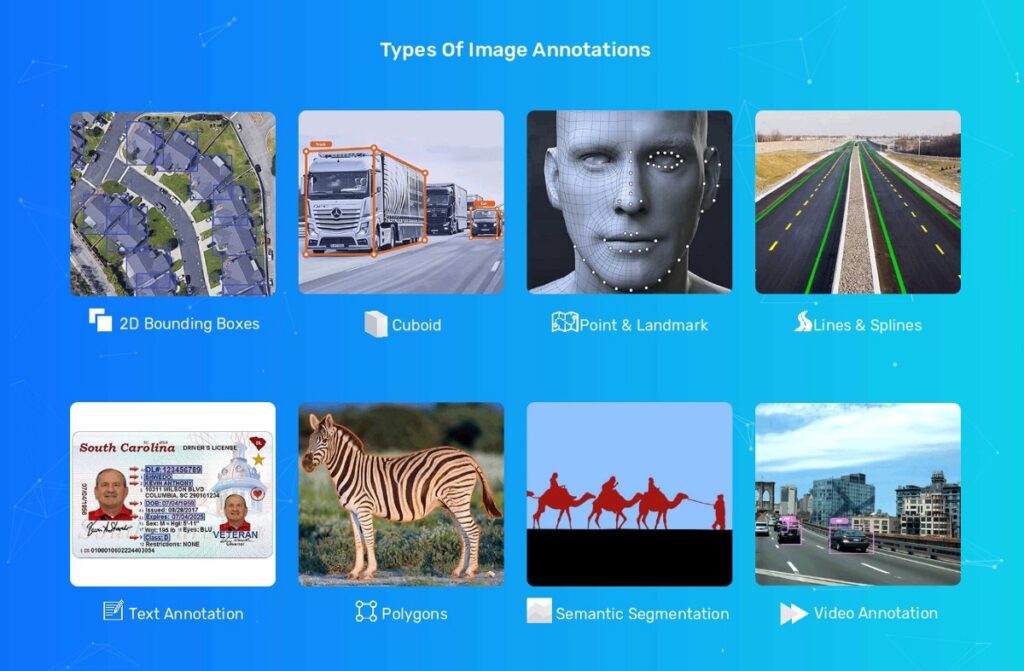

What is Image Annotation?

Image annotation is the process of adding a layer of metadata to an image. It’s a way for people to describe what they see in an image, and that information can be used for various purposes. For example, it can help identify objects in an image or provide additional context about them. It can also provide helpful information on how those objects relate to one another spatially or temporally.

Image annotation tools let you create annotations manually or through machine learning algorithms (MLAs). The most popular MLA method currently in use is deep learning, which uses artificial neural networks (ANNs) to identify features within images and generate text descriptions based on those features.

Two common annotated image datasets are Google’s OID (Open Images Dataset) collection and Microsoft’s COCO (Common Objects in Context) collection; COCO contains 2.5 million labeled instances in 328k images.

How does Image Annotation work?

Images can be annotated using any open-source or freeware data annotation tool. However, the best-known open-source image annotation tool is the Computer Vision Annotation Tool (CVAT).

A thorough understanding of the type of data being annotated and the task at hand is necessary to choose the appropriate annotation tool.

You should pay close attention to:

The data’s delivery method

The required type of annotation

The file format in which annotations should be stored

Because image annotation tasks and storage formats vary so widely, several technologies can be used for annotation, from basic annotation on open-source platforms like CVAT and LabelImg to complex annotation of large-scale data using tools like V7.

Furthermore, annotation can be done at an individual or group level, or it can be contracted out to freelancers or companies that provide annotation services.

An overview of how to start annotating images is given here.

Source your raw image or video data

This is the first step in any project, and it’s essential to make sure that you’re using the right tools. When working with image data, there are two main things you need to keep in mind:

The file format of your image or video: whether it’s JPEG or TIFF, and whether it’s RAW (DNG, CR2) or JPEG.

The capture device: whether you’re working with images from a camera or video clips from a mobile phone (e.g., iPhone/Android), there are many kinds of cameras out there, each with its own proprietary file formats. To bring all kinds of files into one place and annotate them, start by importing only those formats that work well together (e.g., JPEG stills + H.264 videos).
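As a rough illustration of importing only compatible formats, the sketch below sorts incoming files by extension. The extension sets and the function name `select_importable` are assumptions made for this example; which formats actually work together depends on the annotation tool you choose.

```python
from pathlib import Path

# Assumed sets of formats that "work well together"; adjust for your project.
IMAGE_EXTENSIONS = {".jpg", ".jpeg"}
VIDEO_EXTENSIONS = {".mp4"}  # assumption: H.264 video stored in an MP4 container


def select_importable(paths):
    """Split file paths into images, videos, and skipped files by extension."""
    images, videos, skipped = [], [], []
    for path in map(Path, paths):
        extension = path.suffix.lower()
        if extension in IMAGE_EXTENSIONS:
            images.append(path.name)
        elif extension in VIDEO_EXTENSIONS:
            videos.append(path.name)
        else:
            # RAW or other proprietary formats are set aside for conversion.
            skipped.append(path.name)
    return images, videos, skipped


images, videos, skipped = select_importable(
    ["camera/IMG_1.JPG", "phone/clip.mp4", "camera/raw.CR2", "scans/page.tiff"]
)
```

Files that land in `skipped` can be converted to a supported format before they are brought into the annotation project.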

Determine what label types you should use

The type of task the algorithm is being trained for has a direct bearing on the type of annotation that should be used. For example, when an algorithm is being trained to classify images, the labels take the form of numerical representations of the different classes. On the other hand, semantic masks and bounding box coordinates would be used as annotations if the system were learning image segmentation or object detection.
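To make the difference between label types concrete, here is a minimal sketch of how one annotation might look for each task. The class names, coordinate convention (x_min, y_min, x_max, y_max), and variable names are illustrative assumptions, not a format prescribed by any particular tool.

```python
# Hypothetical class list shared by all three examples.
CLASS_NAMES = ["cat", "dog"]

# Image classification: one numerical class index for the whole image.
classification_label = CLASS_NAMES.index("dog")

# Object detection: a class index plus bounding box coordinates,
# here assumed to be (x_min, y_min, x_max, y_max) in pixels.
detection_label = {"class_id": CLASS_NAMES.index("cat"), "bbox": (12, 20, 48, 40)}

# Image segmentation: a mask with one class index per pixel
# (a tiny 3x4 "image"; -1 marks pixels that belong to no class).
segmentation_mask = [
    [-1, 0, 0, -1],
    [-1, 0, 0, -1],
    [1, 1, -1, -1],
]
```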

Create a class for each object you want to label

The next step is to create a class for each object you want to label. Each class should be unique and represent an object with distinct characteristics in your image. For example, if you’re annotating a picture of a cat, one class could be called “catFace” or “catHead.” Likewise, if your image has two people in it, one class could be named “Person1” and the other “Person2”.
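One simple way to keep classes unique is to build a name-to-id map and reject duplicates. The helper below is a hypothetical sketch; the class names are taken from the examples above.

```python
def build_class_map(class_names):
    """Map each class name to an integer id, rejecting duplicate names."""
    class_map = {}
    for name in class_names:
        if name in class_map:
            raise ValueError(f"duplicate class name: {name}")
        class_map[name] = len(class_map)
    return class_map


class_map = build_class_map(["catFace", "Person1", "Person2"])
```

Most annotation tools keep an equivalent list of labels internally; the point of the sketch is only that every class name resolves to exactly one id.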

To do this correctly (and avoid making mistakes), we recommend using an image editor such as GIMP or Photoshop to create an additional layer on top of the original photo for each object you want to label separately, so that when the images are exported later they will not get mixed up with objects from other photos.

Annotate with the right tools

The right tool for the job is critical when it comes to image annotation. Some services support both text and image annotation, or only audio, or only video; the possibilities are endless. Using a service that works with your preferred medium is important.

There are also tools available for specific data types, so you should pick one that supports what you have in mind. For example, if you’re annotating time series data (i.e., a series of events over time), you’ll want a tool specifically designed for this purpose; if there isn’t such a tool on the market yet, then consider building one yourself!

Version your dataset and export it

Once you’ve annotated the images, you can use version control to manage your data. This involves creating a separate file for each dataset version and including a timestamp in its filename. Then, when importing data into another program or analysis tool, there will be no ambiguity about which version is being used.

For example, we could call our first image annotation file “ImageAnnotated_V2”, followed by “ImageAnnotated_V3” when we make changes, and so on. Then, after exporting the final version of the dataset using this naming scheme (and saving it as a .csv file), it’ll be easy enough to import it back into the image annotation tool later if needed.
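The naming scheme can be sketched with the standard library alone. The function name `export_annotations`, the two CSV columns, and the exact timestamp layout are assumptions for illustration; only the “ImageAnnotated_V…” prefix and the .csv extension come from the text.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path


def export_annotations(rows, out_dir, version, timestamp=None):
    """Write annotation rows to a versioned, timestamped CSV and return its path."""
    timestamp = timestamp or datetime.now(timezone.utc)
    stamp = timestamp.strftime("%Y%m%dT%H%M%S")
    path = Path(out_dir) / f"ImageAnnotated_V{version}_{stamp}.csv"
    with path.open("w", newline="") as handle:
        # Assumed columns: the image file name and its label.
        writer = csv.DictWriter(handle, fieldnames=["image", "label"])
        writer.writeheader()
        writer.writerows(rows)
    return path
```

Calling `export_annotations(rows, "exports", 2)` would write a file such as `ImageAnnotated_V2_<timestamp>.csv` into an existing `exports` directory, so each export is kept alongside the earlier ones rather than overwriting them.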

Tasks that need annotated data

Here, we’ll look at the various computer vision tasks that require the use of annotated image data.

Image classification

Image classification is a machine learning task in which you have a set of images and a label for each image. The goal is to train a machine learning algorithm to recognize objects in images.

You need annotated data for image classification because it’s hard for machines to learn how to classify images without knowing what the correct labels are. It would be like going blindfolded into a room with 100 objects, picking one up at random, and trying to guess what it was; you’d do better if someone showed you the answers beforehand.

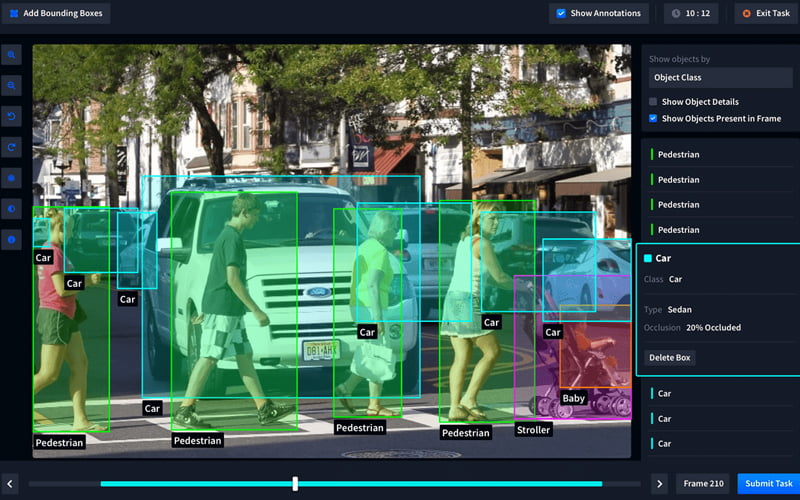

Object detection and recognition

Object detection is the task of finding specific objects in an image, while object recognition involves identifying those objects. Detecting an object that you have not seen before is known as novel detection, while recognizing an object that you have seen previously is known as familiar detection.

Object detection can be further divided into bounding box estimation (which finds a box enclosing the pixels that belong to one object) and class-specific localization (which determines which class each detected region belongs to). Specific tasks include:

Identifying objects in images.

Estimating their location.

Estimating their size.
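Location and size can both be read off a bounding box annotation. The sketch below assumes boxes are stored as (x_min, y_min, x_max, y_max) in pixels; other conventions, such as (x, y, width, height), are also common, so check what your tool exports.

```python
def box_center_and_size(bbox):
    """Return ((center_x, center_y), (width, height)) for an
    (x_min, y_min, x_max, y_max) bounding box."""
    x_min, y_min, x_max, y_max = bbox
    width, height = x_max - x_min, y_max - y_min
    center = (x_min + width / 2, y_min + height / 2)
    return center, (width, height)


# Hypothetical detection annotation for one object.
detection = {"label": "car", "bbox": (10, 20, 50, 80)}
center, size = box_center_and_size(detection["bbox"])
```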

Image segmentation

Image segmentation is the process of splitting an image into multiple segments. This can be done to isolate different objects in the image or to isolate a particular object from its background. Image segmentation is used in many industries and applications, including computer vision and art history.

Image segmentation has several advantages over manual editing: it’s faster and more accurate than hand-drawn outlines; it doesn’t require additional training time; you can use one set of rules for multiple images with slightly different lighting conditions; and automated algorithms don’t make mistakes as readily as humans do (and when they do make mistakes, they’re easier to fix).

Semantic segmentation

Semantic segmentation is the process of labeling every pixel in an image with a class label. This may seem similar to classification, but there is an important distinction: classification assigns a single label (or class) to an entire image, whereas semantic segmentation assigns a label (or category) to each individual pixel within the image.
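The distinction can be shown on a tiny example: a classification label is one value for the whole image, while a semantic mask holds one class id per pixel. The class ids and names below are hypothetical.

```python
from collections import Counter

# Hypothetical class ids for this example.
CLASS_NAMES = {0: "background", 1: "cat", 2: "grass"}

# Classification: a single label for the entire image.
image_label = 1  # "cat"

# Semantic segmentation: one class id per pixel of a 3x4 image.
semantic_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [2, 2, 2, 2],
]


def pixels_per_class(mask):
    """Count how many pixels carry each class label."""
    counts = Counter(value for row in mask for value in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in sorted(counts.items())}


pixel_counts = pixels_per_class(semantic_mask)
```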

Like edge detection, semantic segmentation identifies spatial boundaries between objects in an image. This helps computers better understand what they’re looking at, allowing them to categorize new images and videos more effectively later on. It’s also used for object tracking (identifying where specific objects are located within a scene over time) and action recognition (recognizing actions performed by people or animals in photos or videos).

Instance segmentation

Instance segmentation is a type of segmentation that involves identifying the boundaries between objects in an image. It differs from other segmentation types in that it requires you to determine where each individual object begins and ends, rather than simply assigning a single label to every region. For example, if you were given an image with several people standing next to their cars in a parking lot, instance segmentation would be used to separate each person and each car from the others, rather than merging them into one “person” region and one “car” region.

Instances are often used as the input features for classification models because they contain more visual information than standard RGB images. Also, they can be processed efficiently, since they only need to be grouped into sets based on their common properties (e.g., colors) rather than requiring optical flow techniques for motion detection.

Panoptic segmentation

Panoptic segmentation combines semantic segmentation and instance segmentation into a single view of the image, which can be helpful for tasks like image classification, object detection and recognition, and semantic segmentation. Every pixel is assigned a class label, and pixels that belong to countable objects (such as people or cars) are additionally assigned to a specific instance, while background regions (such as sky or road) receive only a class label.
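Panoptic segmentation is commonly described as pairing each pixel’s class label with an instance id. The following sketch builds such pairs from a semantic mask and an instance mask; the class ids, the use of 0 for “no instance”, and the function names are assumptions for this example rather than a standard file format.

```python
# Hypothetical class ids: 0 = road (background), 1 = car, 2 = person.
semantic_mask = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [0, 2, 0, 0, 0],
]

# Instance ids number the objects within each class; 0 means "no instance".
instance_mask = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
    [0, 1, 0, 0, 0],
]


def to_panoptic(semantic, instance):
    """Pair each pixel's class id with its instance id."""
    return [
        list(zip(semantic_row, instance_row))
        for semantic_row, instance_row in zip(semantic, instance)
    ]


def count_instances(panoptic):
    """Count distinct objects per class, ignoring pixels without an instance."""
    seen = {pair for row in panoptic for pair in row if pair[1] != 0}
    counts = {}
    for class_id, _ in seen:
        counts[class_id] = counts.get(class_id, 0) + 1
    return counts


panoptic = to_panoptic(semantic_mask, instance_mask)
instances_per_class = count_instances(panoptic)
```

Here the semantic mask alone cannot tell the two cars apart, while the combined representation can.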

Commercial Image Annotation Solution

Commercial image annotation is a specialized service. It requires specific knowledge and experience. It also requires special equipment to perform the annotation. For this reason, you should consider outsourcing this task to a commercial image annotation partner.

Viso Suite, a computer vision platform, has a CVAT-based image annotation environment as part of its core functionality. The Suite is built for the cloud and can be accessed from any web browser. The Viso Suite is a comprehensive tool for professional teams to annotate images and videos. Collaborative video data collection, image annotation, AI model training and management, no-code application development, and large-scale computer vision infrastructure operations are all possible.

Using no-code and low-code technologies, Viso can speed up the otherwise slow integration process across the entire application development lifecycle.

How long does Image Annotation take?

The time needed for annotation depends heavily on the amount of data required and the complexity of the annotation itself. For example, annotations that contain only a few items from one or two classes can be processed far more quickly than those that have objects from thousands of classes.

Annotations that only require the image itself to be tagged can be completed more quickly than ones that involve pinpointing several objects and key points.

How to find quality image data?

Gathering high-quality annotated data is challenging.

If data of a particular type isn’t freely available, annotations must be built from raw acquired data. This usually involves a set of tests to rule out any possibility of error or contamination in the processed data.

The quality of image data depends on the following parameters:

Number of annotated images: The more annotated images you have, the better. Also, the larger your dataset is, the more likely it is to capture diverse conditions and scenarios that can be used for training.

Distribution of annotated images: A uniform distribution among different classes isn’t necessarily desirable because it limits the variety available in your dataset and, consequently, its utility. You’ll want a lot of examples from each class so you can train a model that performs well under all conditions (even if they’re rare).

Diversity in annotators: Annotators who know what they’re doing can provide high-quality annotations with little error; one bad apple will spoil the whole bunch! In addition, having multiple annotators provides redundancy and helps ensure consistency across different groups or countries where terminology or conventions may vary.
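Two of these parameters are easy to check with a few lines of code: how annotations are distributed across classes, and how strongly several annotators agree on a label. The sketch below uses simple class fractions and a majority vote; both function names are hypothetical, and majority voting is only one of several ways to reconcile annotators.

```python
from collections import Counter


def class_distribution(labels):
    """Return the fraction of annotations that belong to each class."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def majority_label(votes):
    """Return the most common label among annotators and its agreement ratio.

    On a tie, the label that was seen first wins.
    """
    label, n = Counter(votes).most_common(1)[0]
    return label, n / len(votes)


distribution = class_distribution(["car", "car", "truck", "car"])
label, agreement = majority_label(["car", "car", "truck"])
```

A very small fraction for a class suggests collecting more examples of it, and a low agreement ratio flags an image that may need review.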

Open datasets

When it comes to image data, there are two main types: open and closed. Open datasets are freely available for download online, without any restrictions or licensing agreements. Closed datasets, on the other hand, can only be used after applying for a license and paying a fee, and even then may require additional paperwork from the user before access is granted.

Some examples of open datasets include Flickr and Wikimedia Commons (both are collections of photos contributed by people from all over the world). In contrast, examples of closed datasets include commercial satellite imagery sold by companies like DigitalGlobe or Airbus Defence and Space (these companies offer high-resolution photos but require extensive contracts).

Scrape web data

Web scraping is the process of searching the internet for specific kinds of photos using a script that automatically runs many searches and downloads the results.

The data obtained by web scraping is usually in a very raw state and requires extensive cleaning before any algorithm or annotation can be applied, but it is easily accessible and quick to collect. For example, using scraping, we can gather photos that are already tagged as belonging to a particular class or subject area based on the query we provide.

Classification, which only needs a single tag for each image, is greatly facilitated by this kind of annotation.
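As a small illustration of query-based tagging, the sketch below assumes the scraping itself has already run and produced a mapping from each search query to the file names it returned. It tags each file with its query and, as one basic cleaning step, drops files returned by more than one query. The function name and the input structure are hypothetical.

```python
def label_from_queries(downloads):
    """Tag each file with the query that found it, dropping ambiguous files.

    `downloads` maps a search query (used as the class tag) to the list of
    file names that query returned.
    """
    first_label = {}
    ambiguous = set()
    for query, file_names in downloads.items():
        for name in file_names:
            if name in first_label and first_label[name] != query:
                # The same file matched two different queries.
                ambiguous.add(name)
            first_label.setdefault(name, query)
    return {name: tag for name, tag in first_label.items() if name not in ambiguous}


tags = label_from_queries({"cat": ["a.jpg", "b.jpg"], "dog": ["b.jpg", "c.jpg"]})
```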

Self-annotated data

Another type of data is self-annotated. In this case, the owner of the data has manually labeled it with their own labels. For example, you may want to annotate images of cars and trucks with their current model year. You can scrape images from manufacturer websites and combine them with your dataset using a tool like Microsoft Cognitive Services.

This type of annotation is more reliable than crowdsourced labeling because people are less likely to mislabel or make mistakes when they’re annotating their own data than when they are labeling someone else’s data. However, it also costs more, since you have to spend money on human labor for these annotations.