Best 2022 Public Social Media Datasets?

We start with definitions of some of the key techniques used to analyze unstructured textual data in public social media datasets:

Natural language processing (NLP): a field of computer science, artificial intelligence, and linguistics concerned with the interactions between computers and human (natural) languages. Specifically, it is the process of a computer extracting meaningful information from natural language input and/or producing natural language output.

News analytics: the measurement of the various qualitative and quantitative attributes of textual (unstructured) news stories. Some of these attributes are sentiment, relevance, and novelty.

Opinion mining: opinion mining (also called sentiment mining or opinion/sentiment extraction) is the area of research that attempts to build automatic systems that determine human opinion from text written in natural language.

Scraping: collecting online data from social media and other websites in the form of unstructured text; also known as site scraping, web harvesting, and web data extraction.

Sentiment analysis: the application of natural language processing, computational linguistics, and text analytics to identify and extract subjective information from source materials.

Text analytics: involves information retrieval (IR), lexical analysis to study word frequency distributions, pattern recognition, tagging/annotation, information extraction, data mining techniques including link and association analysis, visualization, and predictive analytics.
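As a minimal illustration of the scraping step defined above, the sketch below pulls the text of every `<p>` element out of an HTML page using only Python's standard-library `html.parser`. It assumes the page has already been downloaded (fetching, rate limiting, and each site's terms of service are outside its scope), and `ParagraphExtractor` and `extract_paragraphs` are hypothetical names chosen for this example. Real pages usually need site-specific handling.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collects the text content of every <p> element in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self._in_paragraph = False
        self._buffer: list[str] = []
        self.paragraphs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_paragraph = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "p" and self._in_paragraph:
            # Join text fragments (e.g. split by <b> tags) and collapse whitespace.
            text = " ".join("".join(self._buffer).split())
            if text:
                self.paragraphs.append(text)
            self._in_paragraph = False

    def handle_data(self, data):
        if self._in_paragraph:
            self._buffer.append(data)


def extract_paragraphs(html: str) -> list[str]:
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return parser.paragraphs


if __name__ == "__main__":
    page = "<html><body><h1>Feed</h1><p>First <b>post</b> here.</p><p>  Second\n post </p></body></html>"
    print(extract_paragraphs(page))
```

Nested paragraphs and text outside `<p>` elements are ignored by design; a production scraper would typically target the specific elements a given site uses for posts.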

Public social media datasets contain vast amounts of unstructured text, presenting both opportunities and challenges for analysis. The sections below examine the key techniques used to analyze this text and their potential applications.

Natural Language Processing

Natural Language Processing (NLP) plays a central role in analyzing unstructured text in social media datasets. NLP techniques extract meaningful information from text through tasks such as sentiment analysis, topic modeling, and named entity recognition. These techniques help uncover patterns, sentiments, and trends within the data.
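To make the sentiment analysis task concrete, here is a minimal lexicon-based scorer: it sums per-word polarity weights and flips the sign of a word that directly follows a negator. The tiny `LEXICON` and `NEGATORS` sets are invented for illustration; practical systems rely on curated lexicons or trained models, and simple word lists handle sarcasm and slang poorly.

```python
import re

# Hypothetical toy lexicon: word -> polarity weight.
LEXICON = {"good": 1.0, "great": 2.0, "love": 2.0, "bad": -1.0, "terrible": -2.0, "hate": -2.0}
NEGATORS = {"not", "no", "never"}


def sentiment_score(text: str) -> float:
    """Sum lexicon weights, flipping the sign of a word preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, token in enumerate(tokens):
        weight = LEXICON.get(token, 0.0)
        if weight and i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight  # "not good" counts as negative
        score += weight
    return score


def label(text: str) -> str:
    s = sentiment_score(text)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"


if __name__ == "__main__":
    for post in ["I love this phone", "The service was not good", "Delivery arrived on Tuesday"]:
        print(post, "->", label(post))
```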

Text Preprocessing

Text preprocessing is an essential first step in analyzing unstructured text. Techniques such as tokenization, stop-word removal, stemming, and lemmatization transform raw text into a more structured, analyzable form. By standardizing the text and reducing noise, preprocessing improves the accuracy and efficiency of later analysis steps.
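The preprocessing steps above can be sketched with the standard library alone. This assumes a small hand-picked stop-word list and uses a crude suffix-stripping `stem` function as a stand-in for a real stemmer such as Porter's; lemmatization, which needs a dictionary of word forms, is not shown.

```python
import re

# Small illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "to", "of", "and", "in", "on", "for", "this", "it"}
# Suffixes are tried in this order. This is a crude rule set, not the Porter algorithm.
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def stem(token: str) -> str:
    for suffix in SUFFIXES:
        # Keep at least three characters so short words are not mangled.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Tokenize, drop stop words, then stem what remains."""
    return [stem(t) for t in tokenize(text) if t not in STOP_WORDS]
```

For example, `preprocess("The users are posting photos and liked the updates")` returns `['user', 'post', 'photo', 'lik', 'updat']`. Stems such as `lik` show why real projects use an established stemmer or a lemmatizer instead of ad hoc suffix rules.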

Text Classification and Clustering

Text classification and clustering techniques either assign labels to texts based on their content or group similar texts together. Classification algorithms, such as support vector machines (SVM) and Naive Bayes, assign text to predefined classes. Clustering algorithms, such as k-means and hierarchical clustering, group similar texts without predefined labels. Together, these techniques support the organization, summarization, and retrieval of information from social media text.
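As an illustration of the classification side, the following is a from-scratch multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The four training posts and the `pos`/`neg` labels are made up for the example, and words never seen during training are simply ignored at prediction time. Libraries such as scikit-learn provide tested implementations of this and of the SVM and k-means methods mentioned above.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        for doc, y in zip(docs, labels):
            self.word_counts[y].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {y: sum(c.values()) for y, c in self.word_counts.items()}
        return self

    def predict(self, doc):
        n_docs = sum(self.class_counts.values())
        best_label, best_logp = None, -math.inf
        for y, n_y in self.class_counts.items():
            logp = math.log(n_y / n_docs)  # log prior P(y)
            denom = self.total_words[y] + len(self.vocab)
            for w in tokenize(doc):
                if w in self.vocab:  # ignore words never seen in training
                    logp += math.log((self.word_counts[y][w] + 1) / denom)
            if logp > best_logp:
                best_label, best_logp = y, logp
        return best_label


if __name__ == "__main__":
    docs = ["great phone love it", "love the camera",
            "terrible battery hate it", "screen broke terrible"]
    labels = ["pos", "pos", "neg", "neg"]
    model = NaiveBayes().fit(docs, labels)
    print(model.predict("love this camera"))
    print(model.predict("terrible screen"))
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied together.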

Entity Extraction and Named Entity Recognition

Entity extraction and named entity recognition techniques focus on identifying and extracting specific information such as names, organizations, locations, and dates from unstructured text. These techniques are valuable for applications like information retrieval, social network analysis, and event detection, as they enable the identification of relevant entities and their relationships within the data.
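A deliberately simple, rule-based stand-in for named entity recognition combines a gazetteer (a lookup list of known names) with a regular expression for dates. The `GAZETTEER` entries below are illustrative, only ISO 8601 calendar dates are recognized, and overlapping matches are not resolved; statistical NER models, by contrast, generalize to names that appear in no list.

```python
import re

# Hypothetical gazetteer: known name -> entity type.
GAZETTEER = {
    "Thomson Reuters": "ORG",
    "Bloomberg": "ORG",
    "London": "LOC",
    "New York": "LOC",
}
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # ISO 8601 dates such as 2022-03-15


def extract_entities(text):
    """Return (entity text, type) pairs in order of appearance; overlaps are not resolved."""
    found = []
    for name, entity_type in GAZETTEER.items():
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((m.start(), name, entity_type))
    for m in DATE_PATTERN.finditer(text):
        found.append((m.start(), m.group(), "DATE"))
    # Sort by character offset, then drop the offset.
    return [(span, entity_type) for _, span, entity_type in sorted(found)]


if __name__ == "__main__":
    print(extract_entities("Bloomberg opened an office in London on 2022-03-15."))
```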

Together, these techniques are central to analyzing unstructured text in public social media datasets. Natural language processing, text preprocessing, classification, clustering, and entity extraction turn raw posts into usable information, supporting applications in sentiment analysis, information retrieval, event detection, and social network analysis. Understanding and applying these techniques is essential for effective analysis of public social media datasets.

Research Challenges

Social media scraping and analytics provide a rich source of academic research challenges for social scientists, computer scientists, and funding bodies. Challenges include:

Scraping: although social media data is accessible through APIs, the commercial value of the data has led many of the major sources, such as Facebook and Google, to make it increasingly difficult for academics to obtain comprehensive access to their "raw" data; very few social data sources offer affordable data access to academia and researchers.

News services such as Thomson Reuters and Bloomberg typically charge a premium for access to their data. In contrast, Twitter announced the Twitter Data Grants program, through which researchers could apply for access to Twitter's public tweets and historical data in order to gain insights from its massive data set (Twitter sees more than 500 million tweets per day).

Data cleansing: cleaning unstructured textual data (e.g., normalizing text), especially high-frequency, real-time streamed data, still presents numerous problems and research challenges.

Holistic data sources: researchers are increasingly bringing together and combining novel data sources for analysis: social media data, real-time market and customer data, and geospatial data.

Data protection: once you have created a "big data" resource, the data needs to be secured, ownership and IP issues resolved (storing scraped data, for instance, violates most publishers' terms of service), and users given different levels of access; otherwise, users may attempt to "suck" all the valuable data out of the database.

Data analytics: sophisticated analysis of social media data for opinion mining (e.g., sentiment analysis) still raises a host of challenges because of foreign languages, new words, jargon and slang, spelling errors, and the natural evolution of language.

Analytics dashboards: many social media platforms require clients to write code against APIs to access feeds, or to program analytics models in a programming language such as Java. While reasonable for computer scientists, these skills are typically beyond most (social science) researchers. Non-programming interfaces are needed to give what might be called "deep" access to "raw" data, for example, configuring APIs, merging social media feeds, combining holistic sources, and developing analytical models.

Data visualization: the visual representation of data, in which information that has been abstracted into some schematic form is communicated clearly and effectively through graphical means. Given the magnitude of the data involved, visualization is becoming increasingly important.
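The data cleansing challenge listed above mentions normalizing text. A minimal sketch of what that can involve for short posts: Unicode NFKC normalization, replacing URLs and @mentions with placeholder tokens, lowercasing, squeezing letters repeated three or more times, and collapsing whitespace. Replacing rather than deleting URLs and mentions is an assumption, and the placeholder strings `<url>` and `<user>` are arbitrary choices.

```python
import re
import unicodedata

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
REPEAT_RE = re.compile(r"([a-z])\1{2,}")  # a letter repeated three or more times


def normalize_message(text: str) -> str:
    """Normalize a short social media post for downstream analysis."""
    text = unicodedata.normalize("NFKC", text)  # e.g. full-width letters become ASCII
    text = URL_RE.sub("<url>", text)            # replace links with a placeholder token
    text = MENTION_RE.sub("<user>", text)       # replace @mentions with a placeholder token
    text = text.lower()
    text = REPEAT_RE.sub(r"\1\1", text)         # "soooo" -> "soo"
    return " ".join(text.split())               # collapse runs of whitespace


if __name__ == "__main__":
    print(normalize_message("@Alice this is SOOOO   good!!! https://example.com/x"))
```

Restricting the repeat rule to letters keeps numbers such as `1000` intact; for a high-frequency stream the same function can be applied to each message as it arrives.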

Analyzing public social media data presents distinctive challenges because of the size and complexity of the datasets. The following sections address the main research challenges: data access, data quality, privacy and ethics, and bias.

Data Access and Availability

One of the primary challenges in analyzing public social media data is accessing and obtaining the data. While social media platforms provide APIs and tools for data retrieval, limitations on data access and the need for permissions can hinder the acquisition process. Additionally, public social media data availability may vary across platforms and regions, posing challenges in creating comprehensive datasets for analysis.
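Many of the APIs used to retrieve social media data return JSON. The sketch below parses one page of a response with Python's `json` module, keeps posts in a chosen language, and returns a pagination cursor. The payload layout (`data`, `text`, `lang`, `next_page`) is a hypothetical example, not the schema of any particular platform, and the HTTP request itself (plus authentication and rate limits) is omitted.

```python
import json

# Hypothetical response body; each real API defines its own field names and nesting.
SAMPLE_RESPONSE = """
{
  "data": [
    {"id": "1", "text": "Markets rally on earnings", "lang": "en"},
    {"id": "2", "text": "Les marchés progressent", "lang": "fr"}
  ],
  "next_page": "abc123"
}
"""


def parse_posts(body: str, lang: str = "en"):
    """Return (posts in the requested language, cursor for the next page or None)."""
    payload = json.loads(body)
    posts = [item for item in payload.get("data", []) if item.get("lang") == lang]
    return posts, payload.get("next_page")


if __name__ == "__main__":
    posts, cursor = parse_posts(SAMPLE_RESPONSE)
    print([p["text"] for p in posts], cursor)
```

A collector would typically call the API repeatedly, passing the returned cursor back until it is `None`.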

Data Quality and Noise

Public social media data often contains noise, which can affect the accuracy and reliability of analysis results. Noise can arise from various sources, including spam, fake accounts, irrelevant content, and user-generated noise such as typographical errors or slang. Ensuring data quality requires robust preprocessing techniques, filtering mechanisms, and careful consideration of data sources to minimize the impact of noise on analysis outcomes.
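One way to act on this is a cheap first-pass filter that drops exact duplicates (ignoring case and whitespace) and posts that look like link or hashtag spam. The thresholds `MAX_LINKS` and `MAX_HASHTAGS` are hypothetical and would need tuning against labeled examples from the dataset at hand; detecting fake accounts requires account-level features and is not shown.

```python
import re

MAX_LINKS = 2     # hypothetical thresholds; tune per dataset
MAX_HASHTAGS = 5


def is_probable_spam(post: str) -> bool:
    links = len(re.findall(r"https?://\S+", post))
    hashtags = len(re.findall(r"#\w+", post))
    return links > MAX_LINKS or hashtags > MAX_HASHTAGS


def filter_posts(posts: list[str]) -> list[str]:
    """Drop probable spam and exact duplicates (compared case- and whitespace-insensitively)."""
    seen = set()
    kept = []
    for post in posts:
        key = " ".join(post.lower().split())
        if key in seen or is_probable_spam(post):
            continue
        seen.add(key)
        kept.append(post)
    return kept


if __name__ == "__main__":
    sample = ["Great game tonight", "great  game tonight",
              "#a #b #c #d #e #f buy now", "Traffic on the bridge again"]
    print(filter_posts(sample))
```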

Privacy and Ethical Considerations

Analyzing public social media data raises privacy concerns and ethical considerations. Although the data is publicly accessible, personal and sensitive information may inadvertently be exposed during analysis. Respecting user privacy and adhering to legal and ethical guidelines are crucial aspects of working with public social media data. Researchers must handle data responsibly, anonymize sensitive information, and obtain necessary ethical approvals when working with human subjects.
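A minimal sketch of two common anonymization steps: replacing user IDs with keyed pseudonyms (HMAC-SHA256 via Python's `hmac` and `hashlib` modules) so that posts by the same user can still be linked, and redacting email addresses and phone-number-like strings. The regular expressions are loose approximations assumed for illustration, and pseudonymization alone does not guarantee anonymity, since the text of a post can still identify its author.

```python
import hashlib
import hmac
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # loose pattern; expect some misses and false hits


def pseudonymize(user_id: str, key: bytes) -> str:
    """Stable keyed pseudonym: the same ID and key always give the same token,
    and the token cannot be linked back to the ID without the secret key."""
    digest = hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "user_" + digest[:16]


def redact(text: str) -> str:
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


if __name__ == "__main__":
    secret = b"store-this-key-separately"  # placeholder; keep the real key out of the dataset
    print(pseudonymize("12345", secret))
    print(redact("Contact me at jane.doe@example.org or +44 20 7946 0958"))
```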

Data Bias and Representation

Public social media data may exhibit biases due to factors such as demographic skews, platform usage patterns, or sampling biases. It is important to acknowledge these biases and understand their potential impact on analysis results. Mitigating biases involves considering sampling techniques, diversifying data sources, and validating findings across different datasets to ensure comprehensive and representative analyses.
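Stratified sampling is one of the sampling techniques that can keep over-represented groups from dominating an analysis. The sketch draws up to a fixed number of records from each stratum (for example, region or platform). The `region` field in the usage example is hypothetical, and equal allocation is only one option; proportional or weighted schemes may suit a given research question better.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, per_stratum, seed=0):
    """Draw up to `per_stratum` records from each group so no single group dominates."""
    groups = defaultdict(list)
    for record in records:
        groups[key(record)].append(record)
    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    sample = []
    for stratum in sorted(groups):
        members = groups[stratum]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


if __name__ == "__main__":
    # Skewed toy data: 90 posts from one region, 10 from another.
    posts = [{"id": i, "region": "US"} for i in range(90)] + \
            [{"id": i, "region": "UK"} for i in range(90, 100)]
    sample = stratified_sample(posts, key=lambda r: r["region"], per_stratum=10, seed=1)
    print(len(sample), sum(r["region"] == "UK" for r in sample))
```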

Investigating public social media data comes with several challenges that require careful consideration. Overcoming challenges related to data access, data quality, privacy, and ethics is essential for conducting meaningful analyses and drawing reliable insights. Addressing these challenges advances the field of social media analytics, enabling researchers to make informed decisions and contribute to a deeper understanding of online social dynamics.

Social media data: social media data types (e.g., social network media, wikis, blogs, RSS feeds, and news) and formats (e.g., XML and JSON). This includes data sets and increasingly important real-time data feeds, such as financial data, customer transaction data, telecoms, and spatial data.

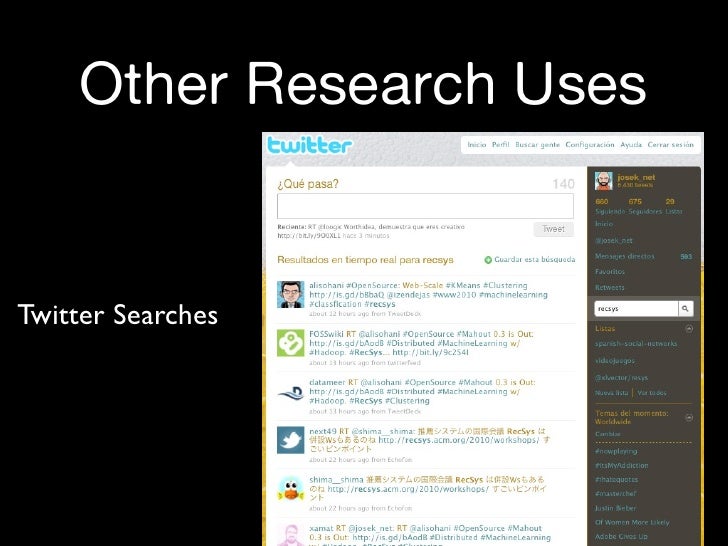

Social media programmatic access: data services and tools for sourcing and scraping (textual) data from social networking media, wikis, RSS feeds, news, etc. These can be usefully subdivided into:

Data sources, services, and tools: where data is accessed through tools that protect the raw data or provide simple analytics. Examples include Google Trends, SocialMention, SocialPointer, and SocialSeek, which provide a stream of information aggregating various social media feeds.

Data feeds via APIs: where data sets and feeds are accessible via programmable HTTP-based APIs that return tagged data in XML, JSON, etc. Examples include Wikipedia, Twitter, and Facebook.

Text cleaning and storage tools: tools for cleaning and storing textual data. Google Refine and DataWrangler are examples of data cleaning tools.

Text analysis tools: individual tools or libraries of tools for analyzing social media data once it has been scraped and cleaned. These are mainly natural language processing, analysis, and classification tools.

Transformation tools: simple tools that can transform textual data into tables, maps, charts (line, pie, scatter, bar, etc.), timelines, or even motion (animation over a timeline), such as Google Fusion Tables, Zoho Reports, Tableau Public, or IBM's Many Eyes.

Analysis tools: more advanced analytics tools for analyzing social data, identifying connections, and building networks, such as Gephi (open source) or the Excel plug-in NodeXL.

Social media platforms: environments that provide comprehensive social media data and libraries of tools for analytics. Examples include Thomson Reuters Machine Readable News, Radian 6, and Lexalytics.

Social network media platforms: platforms that provide data mining and analytics on Twitter, Facebook, and a wide range of other social network media sources.

News platforms: platforms such as Thomson Reuters that provide commercial news archives/feeds and related analytics.
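Analysis tools such as Gephi and NodeXL compute network measures over social graphs. As a small stand-alone illustration of one such measure, the sketch below builds an undirected graph linking each author to the accounts they @mention and computes normalized degree centrality (degree divided by n − 1, where n is the number of nodes). The `(author, text)` input format is an assumption, and only accounts that take part in at least one mention appear as nodes.

```python
import re
from collections import defaultdict


def mention_graph(posts):
    """Build an undirected graph: an edge links an author to each account they @mention.
    Accounts with no mentions in either direction do not appear as nodes."""
    graph = defaultdict(set)
    for author, text in posts:
        for mentioned in re.findall(r"@(\w+)", text):
            if mentioned != author:  # ignore self-mentions
                graph[author].add(mentioned)
                graph[mentioned].add(author)
    return graph


def degree_centrality(graph):
    """Degree divided by (n - 1), the maximum possible degree in a simple graph."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}


if __name__ == "__main__":
    posts = [("alice", "Thanks @bob and @carol"),
             ("bob", "@alice agreed"),
             ("dave", "Hello @carol")]
    for node, score in sorted(degree_centrality(mention_graph(posts)).items()):
        print(f"{node}: {score:.2f}")
```

Using sets for neighbors means repeated mentions between the same pair count as a single edge; a weighted graph would keep counts instead.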

Online public social interactions have become an integral part of our digital lives, shaping the way we connect, communicate, and share information. This article delves into the multifaceted nature of online public social interactions, examining their characteristics, impact, and the dynamics that govern these virtual social spaces.

Digital Platforms and Social Networks

Online public social interactions primarily occur on digital platforms and social networking sites. These platforms, such as Facebook, Twitter, Instagram, and LinkedIn, provide avenues for individuals to connect, share content, and engage in conversations on a global scale. The accessibility and reach of these platforms have revolutionized the way people communicate and form online communities.

Communication and Engagement

Online public social interactions facilitate various forms of communication, including text-based messages, multimedia sharing, and real-time conversations. Users engage in discussions, comment on posts, like or share content, and participate in virtual communities centered around shared interests or topics. These interactions offer opportunities for collaboration, information dissemination, and social support.

Influence and Impact

Online public social interactions have a profound influence on individuals, communities, and society at large. They shape public opinion, drive cultural trends, and can mobilize collective action. The viral spread of information, the power of influencers, and the impact of social movements are all manifestations of the transformative potential of online public social interactions.

Challenges and Opportunities

While online public social interactions offer numerous benefits, they also present challenges. Issues like online harassment, misinformation, privacy concerns, and the spread of hate speech necessitate ongoing efforts to foster responsible online behavior and ensure a safe digital environment. However, these challenges also provide opportunities for technological advancements, policy interventions, and educational initiatives to address and mitigate negative aspects.

Online public social interactions have revolutionized the way we connect, communicate, and share information. Understanding the dynamics and impact of these interactions is crucial in navigating the digital landscape. By harnessing the potential of online public social interactions and addressing the associated challenges, we can foster a more inclusive, informed, and engaging digital world.

The digital era has brought forth a plethora of challenges and opportunities in the realm of online interactions. This article explores the multifaceted nature of these challenges and opportunities, examining their impact on individuals, communities, and society at large in an increasingly connected and virtual world.

Cybersecurity and Privacy Concerns

As online interactions proliferate, the importance of cybersecurity and privacy becomes paramount. Challenges include protecting personal data, safeguarding against hacking and identity theft, and countering online threats. Opportunities arise through advancements in encryption technologies, user awareness campaigns, and the development of secure platforms that prioritize privacy.

Digital Divide and Inclusivity

The digital divide poses a significant challenge, as not everyone has equal access to online platforms and opportunities. Bridging this divide requires efforts to provide affordable internet access, digital literacy programs, and inclusive design principles. Embracing inclusivity enables marginalized communities to participate fully in online interactions, fostering equality and reducing social disparities.

Misinformation and Fake News

The proliferation of misinformation and fake news presents a challenge to online interactions. False information spreads rapidly, influencing public opinion and undermining trust. Addressing this challenge involves promoting media literacy, fact-checking initiatives, and the responsible sharing of information. Opportunities lie in the development of algorithms and tools that can identify and combat misinformation effectively.

Digital Well-being and Mental Health

The digital era poses challenges to individuals’ well-being and mental health, with issues such as online addiction, cyberbullying, and social media-induced anxiety. Opportunities arise through promoting digital well-being practices, fostering healthy online habits, and providing mental health support services. Building resilience, promoting digital empathy, and encouraging digital detoxes can enhance the positive aspects of online interactions.

The digital era has revolutionized the way we live, work, and connect. However, it has also introduced challenges to our mental health and well-being. This article delves into the concept of digital well-being and explores strategies for promoting mental health in the digital age.

The Impact of Digital Technology

Digital technology has brought numerous benefits, but it has also altered our lifestyles and behaviors. Excessive screen time, social media comparison, and constant connectivity can contribute to stress, anxiety, and a sense of disconnection. Understanding the impact of digital technology on mental health is crucial for addressing the challenges it poses.

Promoting Digital Well-being

Promoting digital well-being involves cultivating healthy digital habits and developing strategies to maintain a positive relationship with technology. Setting boundaries on screen time, practicing digital mindfulness, and fostering meaningful offline connections are essential for maintaining balance and mental well-being in the digital era.

Digital Detox and Mindful Technology Use

Digital detoxes, periodic breaks from digital devices and online activities, can help rejuvenate mental health. Engaging in offline activities, practicing self-care, and engaging in hobbies or physical exercise can reduce digital dependency and improve overall well-being. Additionally, practicing mindful technology use involves being intentional and aware of how and when technology is used, avoiding mindless scrolling and fostering a healthier digital environment.

Technology-Assisted Mental Health Support

Digital technology also offers opportunities for mental health support. Online therapy platforms, mental health apps, and virtual support communities provide convenient access to resources, counseling, and peer support. These digital tools can complement traditional mental health services and improve access to care, especially in areas with limited resources.

Digital well-being is a vital aspect of maintaining mental health in the digital era. By cultivating healthy digital habits, practicing mindfulness, and embracing digital detoxes, individuals can navigate the digital landscape more effectively. Utilizing technology for mental health support further enhances well-being, emphasizing the potential of technology to promote positive mental health outcomes.

The digital landscape presents a myriad of challenges and opportunities in the realm of online interactions. By addressing cybersecurity concerns, promoting inclusivity, combating misinformation, and prioritizing digital well-being, we can harness the transformative potential of the digital era. Navigating these challenges and embracing the opportunities paves the way for a more inclusive, informed, and responsible digital future.
