Data ingestion is part of the Big Data architectural layer in which components are decoupled so that analytics capabilities can begin. It concerns storing data and then analyzing it, which can be done with various tools and design patterns, and it brings a few challenges of its own. In the era of the Internet of Things and mobility, huge volumes of data are becoming available rapidly, which creates the need for an efficient analytics system. Managing Big Data properly through data ingestion, pipelines, tools, design patterns, use cases, best practices, and modern batch processing makes everything quantified and tracked.

www.24x7offshoring.com

The data ingestion process is easiest to understand through the layered architecture of Big Data. The layered architecture of a Big Data ingestion pipeline is divided into several layers, each of which performs a specific function.

The Big Data architecture helps design a data pipeline that meets the requirements of either a batch processing system or a stream processing system. The architecture consists of six layers, which ensure a secure flow of data.

Big Data Architecture

Data Ingestion Layer

This layer is the first step for data coming from various sources to begin its journey. Here the data is prioritized and categorized so that it flows smoothly through the later layers of the process.

Data Collector Layer

In this layer, the focus is on transporting data from the ingestion layer to the rest of the data pipeline. It is the layer where components are decoupled so that analytics capabilities can begin.

Data Processing Layer

In this primary layer, the focus is on the pipeline's processing system. The data gathered in the previous layer is processed here: it is routed to different destinations and the data flow is classified. This is the first point where analytics may take place.

Data Storage Layer

Storage becomes a challenge when the volume of data you are dealing with grows large. Several possible solutions, such as data patterns, can help with such problems, and finding a suitable storage solution becomes critical as your data keeps growing. This layer focuses on “where to store such large data efficiently.”

Data Query Layer

This is the layer where active analytic processing takes place. Here, the primary focus is to aggregate the data's value so that it becomes more useful for the next layer.

Data Visualization Layer

The visualization, or presentation, tier is probably the most prestigious one; it is where users of the data pipeline can feel the VALUE of the data. We need something that will grab people's attention, draw them in, and make the findings clearly understood.
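To make the flow through the six layers concrete, here is a minimal Python sketch in which each layer is a plain function that hands its output to the next. It is illustrative only: the function names, the record fields (`type`, `value`), and the dictionary standing in for a storage system are our own assumptions, not part of any particular framework.

```python
from collections import defaultdict

def ingest(sources):
    """Ingestion layer: pull raw records from every source, tagging each with its origin."""
    for source_name, records in sources.items():
        for record in records:
            yield {"source": source_name, **record}

def collect(records):
    """Collector layer: buffer records so ingestion is decoupled from processing."""
    return list(records)

def process(records):
    """Processing layer: route each record to a destination based on its type."""
    routed = defaultdict(list)
    for record in records:
        routed[record["type"]].append(record)
    return routed

def store(routed, storage):
    """Storage layer: persist routed records (a dict stands in for real storage)."""
    for kind, records in routed.items():
        storage.setdefault(kind, []).extend(records)
    return storage

def query(storage):
    """Query layer: aggregate stored values so they are useful to the next layer."""
    return {kind: sum(r["value"] for r in records) for kind, records in storage.items()}

def visualize(summary):
    """Visualization layer: present the aggregates as a simple text report."""
    return "\n".join(f"{kind}: {total}" for kind, total in sorted(summary.items()))

sources = {
    "sensors": [{"type": "temperature", "value": 21}, {"type": "humidity", "value": 40}],
    "mobile_app": [{"type": "temperature", "value": 23}],
}
report = visualize(query(store(process(collect(ingest(sources))), {})))
print(report)
```

In a real deployment each function would be a separate system (for example, a message broker as the collector and a data warehouse as storage), but the order of responsibilities stays the same.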

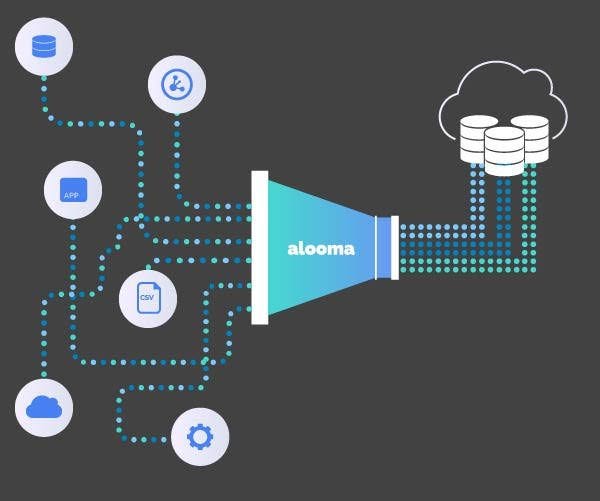

Data ingestion is about moving data, and especially unstructured data, from where it originated into a system where it can be stored and analyzed.

Data ingestion refers to transferring data from one location to another (for example, from a source system into a data lake) for a specific purpose. It does not involve any modification or manipulation of the data during that process: the data is simply extracted from one point and loaded into another.

Each organization has a different data ingestion framework, depending on its purpose.

Data ingestion has three modes: batch, real-time, and streaming. Let's look at each one in more detail.

Batch Data Processing

In batch processing, data is ingested in batches. Suppose an organization wants to transfer data from a variety of sources to a repository every Monday morning. The best way to make that transfer would be batch processing, which is an effective way to process large amounts of data at regular intervals.
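A batch job like the Monday-morning transfer above can be sketched as follows. This is a simplified illustration under stated assumptions: the sources are in-memory lists, the `repository` list stands in for a real data store, and `run_batch_job`, `batched`, and `batch_size` are hypothetical names. Scheduling the job (for example with cron) is left out.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List

def batched(records: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Yield consecutive lists of at most batch_size records."""
    it = iter(records)
    while batch := list(islice(it, batch_size)):
        yield batch

def run_batch_job(sources: Dict[str, List[dict]], repository: List[List[dict]], batch_size: int = 3) -> int:
    """Load everything accumulated since the last run, one write per batch.

    Returns the number of batches written."""
    all_records = (record for records in sources.values() for record in records)
    written = 0
    for batch in batched(all_records, batch_size):
        repository.append(batch)   # stands in for one bulk insert into the repository
        written += 1
    return written

sources = {"crm": [{"id": i} for i in range(4)], "billing": [{"id": i} for i in range(4, 7)]}
repository: List[List[dict]] = []
n = run_batch_job(sources, repository)
print(n, [len(batch) for batch in repository])  # 3 [3, 3, 1]
```

Writing one batch at a time, rather than one record at a time, is what makes this approach efficient for large periodic transfers.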

Real-time Processing

In real-time processing, data is processed in small pieces, one after another, as it arrives.

Real-time ingestion is mostly preferred by organizations that rely on real-time data processing, such as national power grids, banks, and financial companies.
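In the sketch below, each reading is handled the moment it is taken off a queue, rather than being saved up for a later batch. The power-grid scenario, the field names, and the 500 MW threshold are hypothetical; in practice a separate producer thread or service would feed the queue, and `None` is used here as an assumed end-of-stream marker.

```python
import queue

def process_in_real_time(events, threshold_mw):
    """Consume readings one at a time, acting on each as soon as it is dequeued."""
    alerts = []
    while True:
        reading = events.get()          # blocks until the next reading arrives
        if reading is None:             # sentinel: the producer has stopped
            break
        if reading["load_mw"] > threshold_mw:
            alerts.append(f"{reading['station']}: {reading['load_mw']} MW")
    return alerts

events = queue.Queue()
for reading in ({"station": "north", "load_mw": 480}, {"station": "south", "load_mw": 530}):
    events.put(reading)
events.put(None)
alerts = process_in_real_time(events, threshold_mw=500)
print(alerts)  # ['south: 530 MW']
```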

Stream Processing

Unlike real-time processing, streaming is a continuous process. This type of data ingestion is widely used for forex and stock market quotes, predictive analytics, and online recommendation engines.
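Streaming treats data as an unbounded sequence and updates results continuously. As an illustration built on our own assumptions, the generator below keeps a running moving average over a stream of prices, the kind of calculation a market-data feed might need; the window size and the synthetic price stream are made up for the example.

```python
from collections import deque
from itertools import count, islice
from typing import Iterable, Iterator

def moving_average(prices: Iterable[float], window: int) -> Iterator[float]:
    """Emit an updated moving average for every price as it arrives on the stream.

    Only the last `window` prices are kept, so memory stays constant no matter
    how long the stream runs."""
    recent = deque(maxlen=window)
    total = 0.0
    for price in prices:
        if len(recent) == window:
            total -= recent[0]   # this value is about to be evicted by append()
        recent.append(price)
        total += price
        yield total / len(recent)

# count(10, 2) is an endless stream (10, 12, 14, ...); islice just stops the demo.
averages = list(islice(moving_average(count(10, 2), window=2), 4))
print(averages)  # [10.0, 11.0, 13.0, 15.0]
```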

Lambda Architecture

Next, we have the Lambda architecture. It combines batch and stream processing to balance latency and throughput. Use of the Lambda architecture is increasing with the growth of Big Data and the need for real-time analytics.
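The core idea of the Lambda architecture (a batch layer that periodically recomputes complete results, a speed layer that covers recent data, and a serving layer that merges the two) can be sketched in a few lines. The page-view counting use case and all names below are hypothetical, and a production system would use distributed batch and stream engines rather than in-memory counters.

```python
from collections import Counter

def build_batch_view(master_dataset):
    """Batch layer: periodically recompute page-view counts from all historical events."""
    return Counter(event["page"] for event in master_dataset)

class SpeedLayer:
    """Speed layer: incrementally count events that arrived since the last batch run."""

    def __init__(self):
        self.recent_counts = Counter()

    def on_event(self, event):
        self.recent_counts[event["page"]] += 1

def query(batch_view, speed_layer, page):
    """Serving layer: answer queries by merging the batch view with the speed layer."""
    return batch_view[page] + speed_layer.recent_counts[page]

master = [{"page": "home"}, {"page": "home"}, {"page": "pricing"}]
batch_view = build_batch_view(master)
speed = SpeedLayer()
for event in ({"page": "home"}, {"page": "docs"}):
    speed.on_event(event)
print(query(batch_view, speed, "home"), query(batch_view, speed, "docs"))  # 3 1
```

When the next batch run absorbs the recent events, the speed layer's counts for that period can be discarded.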

Challenges that Companies Face While Ingesting Data

Now that you know the ways data can be ingested, here is a list of problems companies often face when ingesting data and how a data ingestion tool can help solve each challenge.

Maintaining Data Quality

The biggest challenge of ingesting data from any source is maintaining its quality and completeness, which matters for the business intelligence activities you will perform on your data. However, because ingested data is often used for BI on an ad hoc basis, quality issues frequently go unnoticed. You can reduce this risk by using a data ingestion tool that provides additional data quality features.
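The quality features of a data ingestion tool usually come down to checks like the ones below, applied before data reaches BI. This sketch assumes a hypothetical schema (`REQUIRED_FIELDS`) and simply separates valid records from rejected ones while reporting a completeness ratio; real tools offer far richer rule sets.

```python
# Hypothetical schema for one source: field name -> expected Python type.
REQUIRED_FIELDS = {"customer_id": int, "email": str, "amount": float}

def validate(record):
    """Return a list of quality problems for one record (empty list means valid)."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        value = record.get(field)
        if value is None:
            problems.append(f"missing {field}")
        elif not isinstance(value, expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems

def quality_gate(records):
    """Split records into valid and rejected, and report the completeness ratio."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append({"record": record, "problems": problems})
        else:
            valid.append(record)
    completeness = len(valid) / len(records) if records else 1.0
    return valid, rejected, completeness

records = [
    {"customer_id": 1, "email": "a@example.com", "amount": 9.5},
    {"customer_id": 2, "email": None, "amount": 3.0},
    {"customer_id": "3", "email": "c@example.com", "amount": 1.0},
]
valid, rejected, completeness = quality_gate(records)
print(len(valid), [r["problems"] for r in rejected], round(completeness, 2))
# 1 [['missing email'], ['customer_id should be int']] 0.33
```

Keeping rejected records together with their reasons, instead of silently dropping them, makes it possible to fix problems at the source.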

Synchronizing Data from Multiple Sources

Data exists in many formats across an organization. As the organization grows, more data accumulates, and it soon becomes difficult to manage. Synchronizing all of this data, or consolidating it in one repository, is the solution. But because the data comes from many sources, extracting it can be a problem. This can be solved with data ingestion tools that provide multiple connectors to extract, transform, and load data.

Creating a Uniform Structure

To make your business intelligence operations more efficient, you need to build a uniform structure using mapping features that can transform data points. A data ingestion tool can clean, transform, and map data into the right place.
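Mapping records from different sources onto one uniform structure might look like this. The source names, field mappings, and normalization rules are hypothetical examples chosen for illustration, and the sketch assumes every record carries the mapped fields (a missing field raises `KeyError`).

```python
# Hypothetical per-source mappings from source field names to the common schema.
FIELD_MAPS = {
    "crm": {"CustomerID": "customer_id", "EmailAddress": "email"},
    "webshop": {"cust_id": "customer_id", "mail": "email"},
}

def to_common_schema(source, record):
    """Rename fields, normalize values, and tag the record with its source."""
    mapping = FIELD_MAPS[source]
    unified = {target: record[src] for src, target in mapping.items() if src in record}
    unified["customer_id"] = int(unified["customer_id"])    # one type everywhere
    unified["email"] = unified["email"].strip().lower()     # one casing everywhere
    unified["source"] = source
    return unified

rows = [
    to_common_schema("crm", {"CustomerID": "42", "EmailAddress": " Ann@Example.com "}),
    to_common_schema("webshop", {"cust_id": 7, "mail": "BOB@example.com"}),
]
print(rows)
```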

Batch ingestion, a widely used model, collects data in large jobs, or batches, for periodic transfer. Data teams can set the job to run based on logical ordering or simple scheduling.

Companies often use batch ingestion for large datasets that do not require near-real-time analysis. For example, a business that wants to explore the relationship between SaaS subscription renewals and customer support tickets can ingest the related data daily; it does not need to access and analyze the data the moment a support ticket is resolved.

Streaming ingestion collects data in real time so that it can be loaded quickly into a target location.

This is a more costly ingestion method, because it requires systems to monitor sources continuously, but it is necessary where instant information and insight are particularly valuable.

For example, online advertising scenarios that require split-second decisions, such as which ad to serve, need streaming ingestion for data access and analysis.

Micro-batch ingestion captures small batches of data at very short intervals, usually less than a minute. This approach makes data available in near real time, much like streaming. In fact, the terms micro-batching and streaming are often used interchangeably in data architecture and software platform descriptions.
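Micro-batching can be sketched as grouping time-stamped events into short, fixed windows and emitting each window as a small batch. The 30-second interval, the event format, and the assumption that events arrive in timestamp order are all choices made for this example.

```python
from typing import Iterable, Iterator, List, Tuple

def micro_batches(events: Iterable[Tuple[float, str]], interval_s: float) -> Iterator[List[str]]:
    """Group (timestamp_seconds, payload) events into fixed windows of interval_s.

    Assumes events arrive in timestamp order; each non-empty window is emitted
    as soon as the first event of a later window shows up."""
    batch: List[str] = []
    current_window = None
    for timestamp, payload in events:
        window = int(timestamp // interval_s)
        if batch and window != current_window:
            yield batch          # hand the completed micro-batch to the loader
            batch = []
        current_window = window
        batch.append(payload)
    if batch:
        yield batch              # flush the last, possibly partial, window

events = [(1.0, "a"), (10.0, "b"), (31.0, "c"), (45.0, "d"), (75.0, "e")]
print(list(micro_batches(events, interval_s=30)))  # [['a', 'b'], ['c', 'd'], ['e']]
```

Shrinking `interval_s` moves this approach closer to true streaming, at the cost of more, smaller loads.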

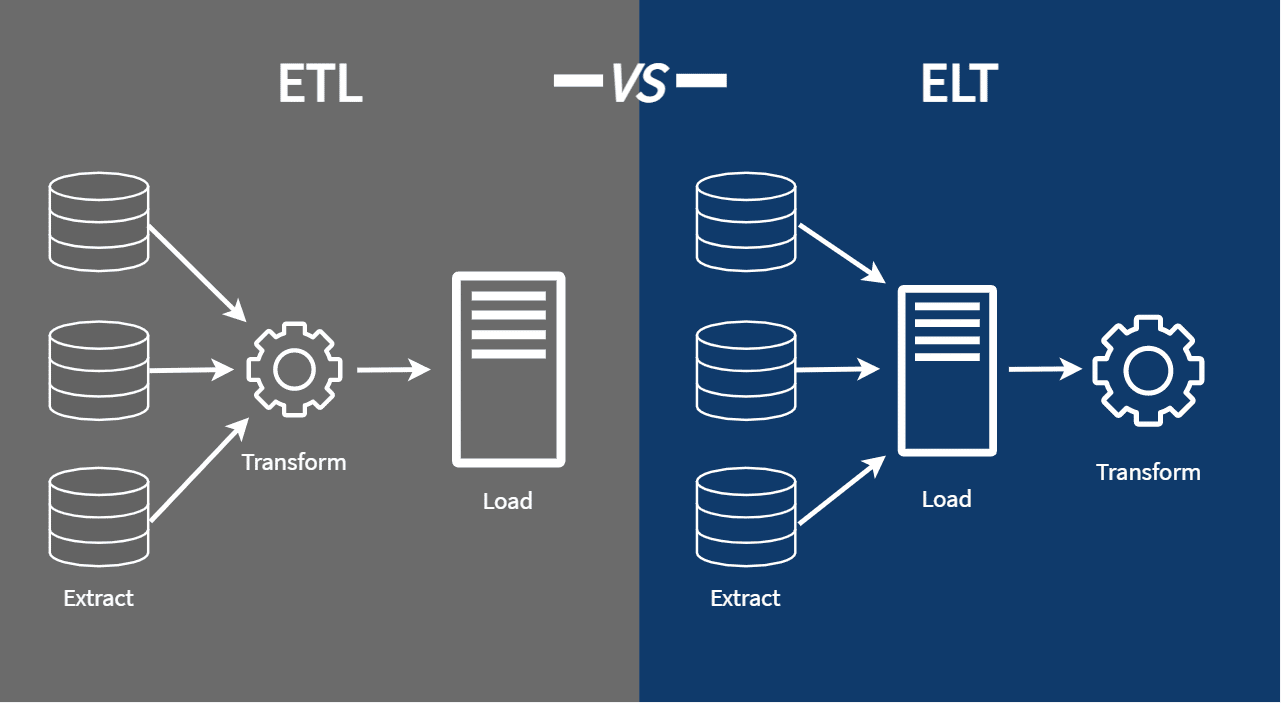

For data ingestion, a simple pipeline extracts data from where it was created or stored and loads it into a selected destination or set of destinations. When the paradigm includes data transformation steps, such as aggregation, cleansing, or deduplication, it is considered an Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT) process. The two most important components of a data ingestion pipeline are:
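The difference between ETL and ELT is mostly where the transformation step runs. In this minimal sketch (a dictionary stands in for the warehouse, and the `customers` table is hypothetical), ETL transforms in the pipeline and loads only clean rows, while ELT loads the raw rows first and transforms them inside the destination.

```python
def extract(source):
    """Extract: read rows from the source as-is."""
    return [dict(row) for row in source]

def transform(rows):
    """Transform: drop rows without an id, deduplicate by id, and clean names."""
    seen, clean = set(), []
    for row in rows:
        if row.get("id") is None or row["id"] in seen:
            continue
        seen.add(row["id"])
        clean.append({**row, "name": row["name"].strip().title()})
    return clean

def etl(source, warehouse):
    """ETL: transform in the pipeline, load only the cleaned result."""
    warehouse["customers"] = transform(extract(source))

def elt(source, warehouse):
    """ELT: load raw rows first, then transform inside the destination."""
    warehouse["raw_customers"] = extract(source)
    warehouse["customers"] = transform(warehouse["raw_customers"])

source = [
    {"id": 1, "name": " ada lovelace "},
    {"id": 1, "name": "Ada"},            # duplicate id
    {"id": None, "name": "unknown"},     # missing id
    {"id": 2, "name": "alan turing"},
]
etl_wh, elt_wh = {}, {}
etl(source, etl_wh)
elt(source, elt_wh)
print(etl_wh["customers"] == elt_wh["customers"], sorted(elt_wh))
# True ['customers', 'raw_customers']
```

Both approaches end with the same clean table; ELT additionally keeps the raw data in the destination, which lets you re-run or change transformations later.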

Sources

Sources can extend beyond the company's enterprise data center. In addition to internal systems and databases, ingestion sources can include IoT devices, third-party platforms, and data collected from the web.

Destinations

Data lakes, data warehouses, and document stores are common destinations for data ingestion. An ingestion pipeline can also simply send data to an application or a messaging system.

Common examples of data ingestion include:

Moving data from in-house systems to a reporting or analytics environment so that the wider business can use it

Ingesting continuous data feeds from various sources as part of a marketing campaign

Collecting data from different providers to create an internal product line

Ingesting a large volume of daily records from an internal Salesforce instance

Allowing customers to ingest and compile data through an application programming interface (API)

Capturing data from a Twitter feed for further analysis

Building an in-house ingestion pipeline comes with complex challenges. In the past, organizations could hand-code scripts or manually map their own processes. But given the size and diversity of today's data, older methods are often not enough for fast-moving businesses.

First, the data companies need to ingest is often controlled by third parties, which can be difficult to work with, especially if the data is not fully documented. If the marketing team needs to load data from an external system into a marketing application, for example, considerations include:

Quality: Is the data of adequate quality? What are the quality metrics?

Format: Can the ingestion pipeline handle all the different data formats?

Reliability: Is the data delivery reliable?

Access: How will the pipeline access the data source? How much internal IT work will that require?

Updates: How often is source data updated?

Additionally, pipeline management will require significant time and resources if manual monitoring and process management are involved. Human intervention in this process greatly increases the risk of error and, ultimately, of pipeline failure.

And as always, data governance and security are major concerns. This is especially important when determining how to expose data to users. When building an ingestion pipeline, organizations should consider:

Whether the data will be exposed internally, externally, or both

Who will have access to the data and what kind of access they will have

Whether the data is sensitive and what level of security it requires

What regulations apply to the data and how to comply with them
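One common way to act on the considerations above is to decide, per audience, how sensitive fields are exposed. The sketch below assumes a hypothetical list of sensitive fields and three hypothetical audience levels, and it uses a salted SHA-256 hash from Python's `hashlib` for pseudonymization. It illustrates the idea only and is not by itself sufficient for regulatory compliance.

```python
import hashlib

# Hypothetical classification: which fields count as sensitive in this dataset.
SENSITIVE_FIELDS = {"email", "phone"}

def pseudonymize(value, salt):
    """Replace a value with a salted SHA-256 token (pseudonymization, not anonymization)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]

def expose(record, audience, salt):
    """Return a copy of the record appropriate for the given audience.

    - "privileged": full access to the original values
    - "internal":   sensitive fields replaced by consistent tokens (still joinable)
    - "external":   sensitive fields removed entirely
    """
    if audience == "privileged":
        return dict(record)
    if audience not in ("internal", "external"):
        raise ValueError(f"unknown audience: {audience}")
    exposed = {}
    for key, value in record.items():
        if key not in SENSITIVE_FIELDS:
            exposed[key] = value
        elif audience == "internal":
            exposed[key] = pseudonymize(str(value), salt)
    return exposed

record = {"customer_id": 7, "email": "ann@example.com", "plan": "pro"}
print(expose(record, "external", salt="demo-salt"))  # {'customer_id': 7, 'plan': 'pro'}
```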

For faster, automated, and secure data ingestion, businesses are increasingly turning to cloud-based solutions. With platforms designed to easily extract data from multiple sources and load it into a cloud data warehouse, companies avoid the costs, complexity, and risks associated with ingestion pipelines built and maintained by internal IT teams.

Matillion provides cloud-based products to help businesses quickly prepare their data for analytics and business intelligence:

Matillion Data Loader, an ingestion tool, helps companies continuously extract data and load it into their chosen cloud data warehouse

Matillion ETL is designed for companies that need powerful data transformation capabilities

Some of the challenges of data ingestion are:

Slow:

In the past, when Extract, Transform, and Load (ETL) tools first appeared, it was not difficult to write scripts or manually map data in order to extract, clean, and load it. Since then, however, data has become very large, diverse, and complex, and the old methods of ingesting data are not fast enough to keep up with the volume and variety of current data sources.

Insecure:

Security is always an issue when transmitting data. Data is often staged at several points during ingestion, which makes it difficult to meet compliance standards consistently throughout the process.

Cost:

Several factors combine to make data ingestion expensive. The infrastructure needed to support a variety of data sources and proprietary tools can be costly to maintain over time, and retaining expert staff to support the ingestion pipeline is not cheap.

Complexity:

With the explosion of rich and innovative data sources such as sensors, smart meters, smartphones, and other connected devices, organizations sometimes find it difficult to get value from that data.

A well-designed data ingestion process, by contrast, offers the following benefits:

Flexible and fast:

When you need to make big decisions, it is important to have the data available when you need it. An efficient pipeline can also add timestamps or cleanse your data during ingestion. Using the Lambda architecture, you can ingest data in batches or in real time.
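Adding timestamps and cleansing records during ingestion can be as simple as the function below. The specific cleaning rules (lowercased keys, trimmed strings, dropped empty values) and the `ingested_at` field name are assumptions for illustration; passing `now` explicitly keeps the output reproducible.

```python
from datetime import datetime, timezone

def enrich_and_clean(record, now=None):
    """Normalize keys, trim strings, drop empty fields, and stamp the ingestion time."""
    now = now or datetime.now(timezone.utc)
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
        if value in ("", None):
            continue                     # drop empty values instead of loading them
        cleaned[key.strip().lower()] = value
    cleaned["ingested_at"] = now.isoformat()
    return cleaned

fixed = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(enrich_and_clean({" Name ": " Ada ", "Notes": "", "Age": 36}, now=fixed))
# {'name': 'Ada', 'age': 36, 'ingested_at': '2024-01-01T00:00:00+00:00'}
```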

Secure:

Data transfer is always a security concern. A secure ingestion solution should support OAuth 2.0 and compliance with frameworks such as the EU-US Privacy Shield Framework, GDPR, HIPAA, and SOC 2 Type II.

Cost effective:

Well-designed data ingestion should save your organization money by automating processes that are currently time-consuming and costly. In addition, data ingestion can be significantly cheaper if your organization does not have to pay for the infrastructure to support it.

Less complex:

While you may have a wide range of sources with a variety of data schemas and data types, a well-planned data ingestion pipeline should help remove the complexity of integrating these sources.

The growing popularity of cloud-based storage solutions has brought new techniques for replicating data for analysis.

Until recently, data ingestion models required an Extract, Transform, and Load (ETL) process, in which data is extracted from a source, transformed to fit the properties of the destination system or the needs of the business, and then loaded into the destination.

When organizations used expensive in-house analytics systems, it made sense to do as much preparatory work as possible, including transformation, before loading data into a repository.

Today, however, cloud data warehouses such as Microsoft Azure, Snowflake, Google BigQuery, and Amazon Redshift can scale storage and compute resources cost-effectively, with latency measured in minutes or seconds.

A sound data strategy is future-ready, compliant, efficient, flexible, and responsive, and it starts with good ingestion. Building an Extract, Transform, and Load (ETL) platform from scratch would require database controls, transformation logic, formatting procedures, SQL or NoSQL queries, API calls, web requests, and more.

Nobody wants to do that, because DIY ETL takes developers away from user-facing products and puts the flexibility, accessibility, and accuracy of the analytics environment at risk.

Data Ingestion Tools

Apache NiFi

Elastic Logstash

Gobblin by LinkedIn

Apache Storm

Apache Flume

To complete the data ingestion process successfully, we must take the following principles and factors into account:

Network Bandwidth

Various systems and technologies

Reliable Network Support

Data Distribution

Select the Right Data Format

Maintain Scalability

Business Decisions

Latency

High Accuracy

Choosing the Right Data Ingestion Tool

Now that you know the various data ingestion challenges, let's look at the best tools you can use.

Astera Centerprise is a visual data management and integration tool for building bi-directional integrations, sophisticated data mappings, and data validation functions to simplify data ingestion. It provides a high-performance, low-latency batch processing platform and near-real-time data feeds. It also enables faster data transfer to Salesforce, MS Dynamics, SAP, Power BI, and other visualization software with OLAP. In addition, its workflow automation capabilities let users streamline, synchronize, and run tasks with ease. It is used by businesses and banks, including the financial giant Wells Fargo.

Apache Kafka is an open-source, fault-tolerant, highly scalable event streaming platform that provides high-throughput messaging across systems. It helps connect multiple data sources to one or more destinations in a structured format. It is already used by tech giants such as Netflix and Walmart.

Fluentd is another open-source data ingestion platform, which lets you unify data collection into a single layer. It provides data processing functions such as filtering, merging, buffering, and logging, and it structures data as JSON for multiple sources and destinations. However, its drawback is that it does not support automated workflows, which limits the software to certain use cases.

Wavefront is a paid platform for data management tasks such as ingestion, storage, visualization, and transformation. It uses stream processing to analyze high-volume data and can ingest millions of data points per second. It provides features such as big data intelligence, business application integration, data quality, and core data management. Data ingestion is one of the many data management features Wavefront offers.

Conclusion

Data ingestion is essential for effective data management and business intelligence. It allows medium and large businesses to maintain a unified data repository by ingesting data in real time and to make informed decisions based on up-to-date data.

Data ingestion and data integration sound similar, and many people confuse the two.

Data integration involves combining data that resides in different sources and providing users with a unified view of it. This process becomes important in a variety of situations, both commercial (such as when two similar companies need to merge their databases) and scientific (for example, combining research results from different bioinformatics repositories). Data integration occurs with increasing frequency as data volumes grow (that is, big data) and the need to share existing data explodes. It has become the focus of extensive theoretical work, and many open problems remain unsolved. Data integration supports collaboration between internal and external users. The data being integrated must be received from heterogeneous database systems and transformed into a single coherent data store that provides synchronized data across a network of files for clients. A common use of data integration is in data mining, where data from existing databases is analyzed and extracted to produce useful business information.

So what is the difference between them?

Data integration and data ingestion may sound similar, but there is one major difference, and it all comes down to the number of systems you work with.

When you combine data from multiple systems, that is data integration. When you simply move your data from X to Y, that is data ingestion.

Of course, that is only the short version of what you need to know.

So, let's take a closer look at these two processes and how businesses approach them.

Data integration vs. data ingestion

Our Senior Product Manager, Pavel Svec, reviewed the definitions that come up in Google search results and provided his own.

To make life easier, we will deal with one term at a time.

‘Data integration involves combining data residing in different sources and providing users with a unified view of them.’ – [Wikipedia]

Data integration is often more complex than data ingestion, because it involves harmonizing data. You usually do not end up with two different datasets pushed into the target; instead, one dataset is built from multiple sources. These sources can be applications, APIs, or files.

In short, the main difference is that integration combines multiple sources together.
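The X-to-Y distinction can be shown in code. In this hypothetical sketch, `ingest` copies rows from one source into one target unchanged, while `integrate` merges several sources into a single view keyed on a shared `customer_id`; the source names and fields are invented for the example.

```python
def ingest(source_rows, target):
    """Ingestion: move rows from one source (X) into one target (Y) unchanged."""
    target.extend(dict(row) for row in source_rows)
    return target

def integrate(*sources):
    """Integration: merge rows from several sources into one view per customer_id."""
    unified = {}
    for rows in sources:
        for row in rows:
            unified.setdefault(row["customer_id"], {}).update(row)
    return unified

crm = [{"customer_id": 1, "name": "Ada"}]
billing = [{"customer_id": 1, "plan": "pro"}, {"customer_id": 2, "plan": "free"}]
print(ingest(crm, []))            # [{'customer_id': 1, 'name': 'Ada'}]
print(integrate(crm, billing))
# {1: {'customer_id': 1, 'name': 'Ada', 'plan': 'pro'}, 2: {'customer_id': 2, 'plan': 'free'}}
```

Note that when sources disagree on a field, `integrate` simply lets the later source win; real integration work needs explicit rules for resolving such conflicts.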

The internet's definition of data ingestion (and why it is wrong)

‘Data ingestion is the process of obtaining raw data from various silos or files and integrating it into a data lake on the data processing platform, e.g., a Hadoop data lake.’ – ScienceDirect

Unfortunately, this definition that Google surfaces is not accurate.

First, it refers to ‘integrating’, which (as we have already explained) is a different process. Beyond that, the definition is also too narrow: you can collect data from any system, not just silos or files.

A more accurate definition would be: ‘Data ingestion is the process of obtaining raw data and loading it into a target data repository, e.g., a Hadoop data lake.’

That being said, it is important to note that the target does not have to be a data lake. It can be anything, for example an e-commerce platform like Shopify. In essence, data ingestion involves taking data from a source, reshaping it to fit the target so that the source and target can ‘speak’ to each other, and then loading it into the target.

Assessing how businesses approach these processes

Now let's break down how organizations generally deal with data integration and data ingestion, respectively.

Because data integration is complex, many businesses use programming languages such as Python, PHP, and Perl as a starting point.

These languages work well because they have libraries and database connectors that make them easy to work with.

Businesses may also choose to embed cloud SDKs (software development kits) in their integration processes. These kits work well alongside programming languages and cloud services such as AWS S3 or Azure File Storage.

However, while many businesses manage data integration this way for a while, cracks eventually start to appear.

Usually, this is the result of missing or outdated documentation. For example, Person A built an integration years ago and left the company without handing over that knowledge. This gap in documentation and skills ultimately creates risk and results in unreliable data integration.

Many data ingestion processes start with Excel spreadsheets or Google Sheets.

When these manually maintained spreadsheets become too big to handle, however, businesses sometimes turn to bulk uploads: for example, a setup that lets you drop a file in a location where a script picks it up and loads it into a database.

This works well until the database becomes very large. When that happens, businesses often migrate to a different database. But migrating bulk upload scripts is difficult, to say the least. Usually, at this point, they look for an ETL or ELT solution instead.

When is it time to automate?

As we have seen in both cases, there comes a point when problems become increasingly difficult to deal with, and many businesses find themselves constantly firefighting.

But when exactly is it time to embrace automation? Before we answer that, here's how we define automation at CloverDX. For many people, automation is really just an augmented manual process. But for us:

💡 Automation is a completely independent process, which can work without the intervention of users at all.

Many organizations follow a ‘rule of four’ for automation. Simply put, the rule states that if you need to do something four or more times, you should automate it.

By automating repetitive processes, you can save valuable time: weeks, months, or even years that you can spend focusing on higher-value work.

For integration or ingestion, the tools you can use include:

Structured web interfaces. These are very easy to set up and straightforward to use. Once you have paid for or registered for the tool, you can use it immediately.

Visual web designers. These are a little more complex, but they are usually component-based: you put prebuilt components together to build a transformation.

IDEs (integrated development environments). Usually, you install these tools yourself. Like visual web designers, they rely on a component-based approach, but they offer the most advanced editing features, which require more skilled users.

Libraries. You can also use programming libraries to process and transform your data flows (and you can use them within IDEs). However, these offer few visual aids or drag-and-drop features, so again, this option is best suited to technical staff.

Any of these options work, but your choices will depend on your skills and your unique business needs.

Although data ingestion and integration have one major difference, both processes can create many similar challenges.

These challenges become more apparent as your integration or ingestion projects grow.

If you rely on manual processes for either, you run the risk of human error, lost documentation, and wasted resources. Therefore, we recommend that you use automation wherever you can.

There are more details on data ingestion, data integration, and how to deal with each in the full video: Data Ingestion vs. Data Integration: What Is the Difference?

Data ingestion is increasingly essential for enterprises. In a world of ever-growing data, tools that successfully ingest and process that data are more than just assets; they are a strategic advantage.

The bottom line is that you need to get your data ingestion tools right if you want to succeed in the next decade.

However, as demand for data ingestion has increased, so has the number of data ingestion tools on the market, and finding the right solution can be a challenge. We are here to help you navigate the options and find the tools that make the most sense for you.

1. StarQuest Data Replicator

Granted, we are a little biased. But we believe that our data ingestion software is the best on the market for a variety of business data ingestion needs.

StarQuest Data Replicator (SQDR) sits between source and destination, enabling real-time replication, flexible DBMS vendor pairings, and compatibility with NoSQL databases through an intuitive GUI. It can leverage Apache Kafka, and it supports use cases on newer DBMS platforms such as Salesforce and Snowflake.

Notably, SQDR has a near-zero footprint on production servers. It is also designed to resume replication automatically after a communication loss, without compromising processes (something other solutions struggle with).

SQDR is also backed by our industry-leading support team, which customers call “the best vendor support I have ever had.”

Lastly, SQDR generally delivers cost savings of up to 90% compared with market competitors. This is due to our straightforward pricing model and our customized deployments, designed to meet your needs (not to sell you services you do not need).

To learn more about how SQDR can meet your organization's data ingestion requirements, contact us to schedule a free demo.

Key point: SQDR is a robust and cost-effective solution for data ingestion and replication in almost any use case.

2. IBM InfoSphere Data Replication

No one ever got fired for buying IBM, as the saying goes; the company is always, at the very least, a safe choice. As you might expect from one of the world's leading computing companies, its data replication product, InfoSphere, is a solid option, especially for IBM Db2 data.

Here is how IBM describes the platform:

“IBM InfoSphere Data Replication provides log-based change data capture to support big data integration and consolidation, warehousing, and analytics initiatives at scale.”
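Log-based change data capture, which the description above refers to, reads inserts, updates, and deletes from the source database's transaction log and replays them on the target in order. The sketch below is a generic, in-memory illustration of that idea, not a description of how InfoSphere or any other product works; the `Change` record and `Replica` class are hypothetical. Tracking the last applied log position lets the replica skip changes it has already seen when the log is re-read after a dropped connection.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional

@dataclass
class Change:
    lsn: int                     # log sequence number: position in the source's log
    op: str                      # "insert", "update" or "delete"
    key: int                     # primary key of the affected row
    row: Optional[dict] = None   # new row image (None for deletes)

class Replica:
    """Target that applies captured changes in log order and checkpoints its position."""

    def __init__(self):
        self.rows: Dict[int, dict] = {}
        self.last_lsn = 0            # checkpoint: highest LSN applied so far

    def apply(self, changes: Iterable[Change]) -> None:
        for change in changes:
            if change.lsn <= self.last_lsn:
                continue             # already applied, e.g. log re-read after a reconnect
            if change.op in ("insert", "update"):
                self.rows[change.key] = change.row
            elif change.op == "delete":
                self.rows.pop(change.key, None)
            else:
                raise ValueError(f"unknown operation: {change.op}")
            self.last_lsn = change.lsn

log = [
    Change(1, "insert", 1, {"name": "Ada"}),
    Change(2, "insert", 2, {"name": "Alan"}),
    Change(3, "update", 1, {"name": "Ada L."}),
    Change(4, "delete", 2),
]
replica = Replica()
replica.apply(log[:2])   # connection drops after two changes...
replica.apply(log)       # ...and the log is replayed from the start on reconnect
print(replica.rows, replica.last_lsn)  # {1: {'name': 'Ada L.'}} 4
```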

It is built for continuous availability and works with a variety of data pairings, but it is best suited to IBM Db2. In addition, although it is a solid enterprise solution, you may want to look elsewhere if you need to save costs: it does, unsurprisingly, carry an enterprise-level price tag.

Lastly, choosing IBM InfoSphere Data Replication may lead to vendor lock-in on the IBM platform, which may not be desirable depending on your use case. The solution was made by IBM, after all, so it is not vendor-agnostic.

Key point: IBM InfoSphere Data Replication is an enterprise-level solution for IBM Db2 that carries an enterprise-level price tag.

3. Oracle GoldenGate

Oracle is another Fortune 500 company best known for providing reliable computing solutions. Its solution in the data ingestion space is Oracle GoldenGate. Here is how the company describes the solution:

“Oracle GoldenGate is a comprehensive software package for real-time data integration and replication in heterogeneous IT environments.”

As with IBM's solution, GoldenGate's biggest advantage is its tight integration with its companion DBMS product, in this case Oracle Database. That said, it is designed to support a variety of data pairings. Like IBM's offering, Oracle's is a good solution to consider if cost-effectiveness is not a priority and vendor lock-in is not a concern.

Key point: Oracle GoldenGate works well with Oracle Database but carries an enterprise-level price tag and may result in vendor lock-in.

4. Microsoft SQL Server

Microsoft rounds out what we consider the “big three” vendors of data replication solutions. The company's SQL Server, although sometimes dismissed as a “legacy” solution by newer market entrants, is still one of the most widely used databases in the world.

Microsoft does not offer a standalone data replication product, but SQL Server has built-in capabilities for scenarios that involve replication. As Microsoft explains, “SQL Server provides a powerful and flexible system for synchronizing data across your enterprise.”

5. HVR Software

HVR Software is a newer solution. Initially, the company focused on ingesting and replicating Oracle databases, but the solution has grown to support all the standard platforms (you can view them on its homepage). Here is how the company describes its capabilities on its website:

“[HVR] is everything you need for efficient high-volume data replication and integration, whether you need to move your data between databases, to the cloud, or across multiple clouds, into a data lake or data warehouse.”

If you are considering a simple data ingestion option that is well suited to Oracle Database, HVR Software might make your list.

Key point: HVR is best for Oracle Database and cloud environments, but it may not perform as strongly in other environments.

6. Qlik (formerly Attunity)

Qlik is another company that, although not as widely known as IBM or Oracle, has gained name recognition through its data management offerings. The company acquired Attunity a few years ago and now offers Attunity Replicate under the brand name Qlik Replicate.

Here is how Qlik describes its capabilities:

“Qlik Replicate empowers organizations to accelerate data replication, ingestion, and streaming across a wide variety of heterogeneous databases, data warehouses, and big data platforms.”

The product is one of several tools (including Qlik Sense, QlikView, Qlik Compose, and more) offered by the company. It may work well, but like the big three mentioned above, its place in a comprehensive data management suite could lead to vendor lock-in.

Key point: Qlik Replicate may be worth considering if you are willing to invest in Qlik's data management ecosystem. If you only want data ingestion as a standalone component, it may not be the best fit.

Ready to Get Started with Data Ingestion?

There are many data ingestion tools on the market; hopefully, the information above will help you determine which options are most likely to meet your business needs. If you want a solid data ingestion solution at an affordable price, let's talk.

At StarQuest, we specialize in data ingestion. As mentioned above, our powerful SQDR software can be used for replication and ingestion from a wide range of data sources.

If you need data migration, data warehousing, application development, analytics processing, disaster recovery, or another use case, we can help.

Contact us to discuss your data ingestion requirements. We can set up a free trial of our software using the DBMS of your choice and help you take the first step toward a solution that will benefit your business.

Data ingestion is one of the first steps in the data management process. With the right data ingestion tools, companies can quickly collect, import, process, and store data from a variety of sources.

Another way to ingest data is to hand-code the data pipeline, assuming you are able to write the code and are familiar with the required languages. This gives you a lot of control, but if you do not know the answers to the “what if” questions above, you could spend a lot of time writing and reworking your code.
