How Much Data Is Required for Machine Learning?


If you ask any data scientist how much data is needed for machine learning, you’ll most probably get either “It depends” or “The more, the better.” And the thing is, both answers are correct.

It really depends on the type of project you’re working on, and it’s always a good idea to have as many relevant and reliable examples in the datasets as you can get to receive accurate results. But the question remains: how much is enough? And if there isn’t enough data, how can you deal with its lack?

Our experience with various projects that involved artificial intelligence (AI) and machine learning (ML) allowed us at Postindustria to come up with the most optimal ways to approach the data quantity issue. This is what we’ll talk about in the article below.

Factors that influence the size of datasets you need

Each ML project has a set of specific factors that affect the size of the AI training data sets required for successful modeling. Here are the most important of them.

The complexity of a model

Simply put, it’s the number of parameters that the algorithm has to learn. The more features, size, and variability of the expected output it has to take into account, the more data you need to input.

For example, you want to train the model to predict housing prices. You are given a table where each row is a house, and the columns are the location, the neighborhood, the number of bedrooms, floors, bathrooms, etc., and the price. In this case, you train the model to predict prices based on the change of variables in the columns. And to learn how each additional input feature influences the output, you’ll need more data examples.

The complexity of the learning algorithm

More complex algorithms generally require a larger amount of data. If your project needs standard ML algorithms that use structured learning, a smaller amount of data will be enough. Even if you feed the algorithm with more data than is sufficient, the results won’t improve drastically.

The situation is different when it comes to deep learning algorithms. Unlike traditional machine learning, deep learning doesn’t require feature engineering (i.e., constructing input values for the model to fit into) and is still able to learn the representation from raw data. These algorithms work without a predefined structure and figure out all the parameters themselves. In this case, you’ll need more data that is relevant for the algorithm-generated categories.

Labeling needs

Depending on how many labels the algorithm has to predict, you may need various amounts of input data. For example, if you want to sort out the pictures of cats from the pictures of dogs, the algorithm needs to learn some representations internally, and to do so, it converts input data into these representations. But if it’s just finding images of squares and triangles, the representations that the algorithm has to learn are simpler, so the amount of data it’ll require is much smaller.

Acceptable error margin

The type of project you’re working on is another factor that impacts the amount of data you need, since different projects have different levels of tolerance for errors. For example, if your task is to predict the weather, the algorithm’s prediction can be off by some 10 or 20%. But when the algorithm has to tell whether a patient has cancer or not, the degree of error may cost the patient their life. So you need more data to get more accurate results.

Input diversity

In some cases, algorithms have to learn to function in unpredictable situations. For example, when you develop an online virtual assistant, you naturally want it to understand what a visitor to a company’s website asks. But people don’t usually write perfectly correct sentences with standard requests. They may ask thousands of different questions, use different styles, make grammar mistakes, and so on. The more uncontrolled the environment is, the more data you need for your ML project.

Based on the factors above, you can define the size of the data sets you need to achieve good algorithm performance and reliable results. Now let’s dive deeper and find an answer to our main question: how much data is required for machine learning?

What is the optimal size of AI training data sets?

When planning an ML project, many worry that they don’t have a lot of data, and the results won’t be as reliable as they could be. But only a few actually know how much data is “too little,” “too much,” or “enough.”


The most common way to define whether a data set is sufficient is to apply the 10 times rule. This rule means that the amount of input data (i.e., the number of examples) should be ten times more than the number of degrees of freedom a model has. Usually, degrees of freedom mean parameters in your data set.

So, for example, if your algorithm distinguishes images of cats from images of dogs based on 1,000 parameters, you need 10,000 pictures to train the model.
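The arithmetic behind the rule is simple enough to put into a helper. The sketch below is only an illustration: the function name and the configurable `factor` argument are our own assumptions, not part of any library.

```python
def min_examples_ten_times_rule(num_parameters: int, factor: int = 10) -> int:
    """Rough lower bound on training examples under the 10 times rule.

    `factor` defaults to 10; it is exposed only so the heuristic can be
    relaxed or tightened for a rough estimate.
    """
    if num_parameters < 0:
        raise ValueError("num_parameters must be non-negative")
    return factor * num_parameters


# The cats-vs-dogs example from the text: 1,000 parameters.
print(min_examples_ten_times_rule(1_000))  # 10000
```

Treat the result as a starting point for planning rather than a guarantee of model quality.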

Even though the 10 times rule in machine learning is quite popular, it only works for small models. Larger models do not follow this rule, as the number of collected examples doesn’t necessarily reflect the actual amount of training data. In our case, we’ll need to count not only the number of rows but the number of columns, too. The right approach would be to multiply the number of images by the size of each image by the number of color channels.
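As a rough illustration of that multiplication, here is a minimal sketch. The 64x64 image size and the three RGB channels are assumptions made for the example, not figures from a real project.

```python
def image_dataset_values(num_images: int, height: int, width: int, channels: int = 3) -> int:
    """Count the raw input values in an image data set.

    Multiplies the number of images by the pixels per image by the
    number of color channels.
    """
    return num_images * height * width * channels


# Hypothetical case: 10,000 RGB images of 64x64 pixels each.
total = image_dataset_values(10_000, 64, 64, channels=3)
print(total)  # 122880000
```

So 10,000 “rows” of images already amount to more than a hundred million individual input values, which is why counting examples alone can be misleading for larger models.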

You can use the 10 times rule for rough estimation to get the project off the ground. But to figure out how much data is required to train a particular model within your specific project, you need to find a technical partner with relevant expertise and consult with them.

On top of that, you always have to remember that AI models don’t study the data itself but rather the relationships and patterns behind the data. So it’s not only quantity that will influence the results, but also quality.

But what can you do if the datasets are scarce?

There are a few strategies to deal with this issue.

How to deal with the lack of data

A lack of data makes it impossible to establish the relations between the input and output data, thus causing what’s known as “underfitting.” If you lack input data, you can either create synthetic data sets, augment the existing ones, or apply the knowledge and data generated earlier to a similar problem. Let’s review each case in more detail below.

Data augmentation

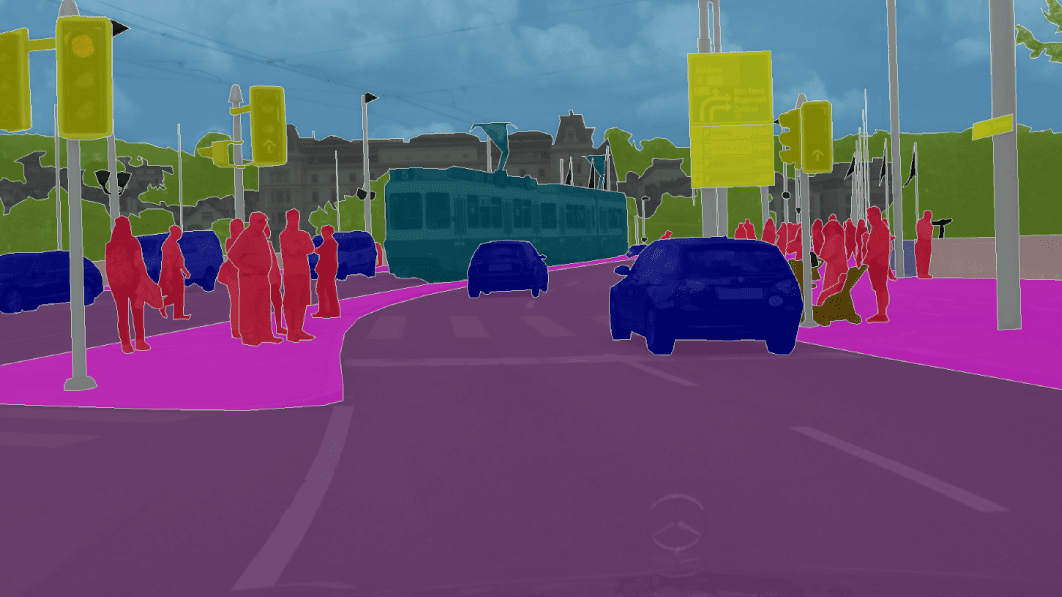

Data augmentation is a process of expanding an input dataset by slightly changing the existing (original) examples. It’s widely used for image segmentation and classification. Typical image alteration techniques include cropping, rotation, zooming, flipping, and color modifications.
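To make the idea concrete, here is a minimal sketch of three of these alterations (flipping, rotation, and cropping) on a tiny grayscale image stored as nested lists. Real projects would normally use an image library; the function names and the 3x3 toy image are our own assumptions for illustration.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def augment(image):
    """Return three altered copies of one original image."""
    return [
        flip_horizontal(image),
        rotate_90_clockwise(image),
        crop(image, 0, 0, 2, 2),
    ]


# Toy 3x3 "image"; each number stands for a pixel intensity.
image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

for variant in augment(image):
    print(variant)
```

Each original example yields several new ones, but all of them carry the same underlying content, which is why augmentation cannot fix a biased source dataset.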


In general, data augmentation helps in solving the problem of limited data by scaling the available datasets. Besides image classification, it can be used in a number of other cases. For example, here’s how data augmentation works in natural language processing (NLP):

Back translation: translating the text from the original language into a target one and then from the target one back to the original

Easy data augmentation (EDA): replacing synonyms, random insertion, random swap, random deletion, and shuffling sentence order to receive new samples and exclude the duplicates

Contextualized word embeddings: training the algorithm to use the word in different contexts (e.g., when you need to understand whether “mouse” means an animal or a device)
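Two of the EDA operations listed above, random swap and random deletion, can be sketched with the standard library alone. This is a simplified illustration under our own assumptions (whitespace tokenization, a fixed seed, hypothetical function names), not a reference implementation of EDA.

```python
import random


def random_swap(words, rng):
    """Return a copy of the word list with two random positions swapped."""
    words = list(words)
    if len(words) < 2:
        return words
    i, j = rng.sample(range(len(words)), 2)
    words[i], words[j] = words[j], words[i]
    return words


def random_deletion(words, p, rng):
    """Drop each word with probability p, but always keep at least one word."""
    words = list(words)
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]


def augment_sentence(sentence, n_rounds=4, p_delete=0.2, seed=0):
    """Generate new samples and exclude duplicates and the original sentence."""
    rng = random.Random(seed)
    words = sentence.split()
    samples = set()
    for _ in range(n_rounds):
        samples.add(" ".join(random_swap(words, rng)))
        samples.add(" ".join(random_deletion(words, p_delete, rng)))
    samples.discard(sentence)
    return sorted(samples)


for sample in augment_sentence("the quick brown fox jumps"):
    print(sample)
```

Collecting the results in a set is what excludes the duplicates; the seed only makes the run repeatable.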

Data augmentation adds more versatile data to the models, helps resolve class imbalance issues, and increases generalization ability. However, if the original dataset is biased, so will be the augmented data.

Synthetic data generation

Synthetic data generation in machine learning is sometimes considered a type of data augmentation, but these concepts are different. During augmentation, we change the qualities of data (e.g., blur or crop the image so we can have three images instead of one), while synthetic generation means creating new data with similar but not identical properties (e.g., creating new images of cats based on the previous images of cats).

During synthetic data generation, you can label the data right away and then generate it from the source, knowing exactly the data you’ll receive, which is useful when not much data is available. When working with real data sets, however, you need to first collect the data and then label each example. The synthetic data generation approach is widely applied when developing AI-based healthcare and fintech solutions, since real-life data in these industries is subject to strict privacy laws.
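The “label first, then generate” idea can be shown with a toy generator. In the sketch below, the label (the position and size of a square) is chosen before the image is drawn, so every example is labeled for free. The grid size, the label format, and the function names are hypothetical choices for this illustration.

```python
import random


def make_synthetic_square(size, rng):
    """Draw one filled square on a size x size grid; the label is chosen first."""
    side = rng.randint(2, size // 2)
    top = rng.randint(0, size - side)
    left = rng.randint(0, size - side)
    label = {"top": top, "left": left, "side": side}  # known before drawing

    image = [[0] * size for _ in range(size)]
    for r in range(top, top + side):
        for c in range(left, left + side):
            image[r][c] = 1
    return image, label


def make_dataset(n, size=8, seed=0):
    """Generate n labeled synthetic examples with a repeatable seed."""
    rng = random.Random(seed)
    return [make_synthetic_square(size, rng) for _ in range(n)]


dataset = make_dataset(3)
for image, label in dataset:
    print(label)
```

No manual annotation step is needed here, which is the main practical advantage over collecting and labeling real images.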

At Postindustria, we also apply a synthetic data approach in ML. Our recent virtual jewelry try-on is a prime example of it. To develop a hand-tracking model that would work for various hand sizes, we’d need to get a sample of 50,000-100,000 hands. Since it would be unrealistic to get and label such a number of real images, we created them synthetically by drawing the images of different hands in various positions in a special visualization program. This gave us the necessary datasets for training the algorithm to track the hand and make the ring fit the width of the finger.

While synthetic data may be a great solution for many projects, it has its flaws.

Synthetic data vs real data problem

One of the problems with synthetic data is that it can lead to results that have little application in solving real-life problems once real-life variables step in.

For example, if you develop a virtual makeup try-on using the photos of people with one skin color and then generate more synthetic data based on the existing samples, then the app wouldn’t work well on other skin colors. The result? The clients won’t be satisfied with the feature, so the app will cut the number of potential buyers instead of growing it.

Another issue of having predominantly synthetic data deals with producing biased outcomes. The bias can be inherited from the original sample or arise when other factors are overlooked. For example, if we take ten people with a certain health condition and create more data based on those cases to predict how many people can develop the same condition out of 1,000, the generated data will be biased because the original sample is biased by the choice of number (ten).
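One way to see why ten cases are a shaky foundation is to look at the margin of error of a proportion estimated from a sample. The sketch below uses the textbook normal approximation, which is itself only rough for a sample as small as ten; the observed rate of 30% is a made-up number for illustration.

```python
import math


def proportion_margin(p_hat: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an observed proportion.

    Uses the normal approximation z * sqrt(p * (1 - p) / n).
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p_hat * (1 - p_hat) / n)


p_hat = 0.3  # hypothetical observed rate

small = proportion_margin(p_hat, 10)     # roughly 0.28
large = proportion_margin(p_hat, 1_000)  # roughly 0.03

print(f"n=10:   {p_hat:.0%} +/- {small:.0%}")
print(f"n=1000: {p_hat:.0%} +/- {large:.0%}")
```

With ten cases the uncertainty is about as large as the estimate itself, and any synthetic data generated from those cases inherits that uncertainty no matter how many examples are produced.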

Transfer learning

Transfer learning is another technique for solving the problem of limited data. This method is based on applying the knowledge gained when working on one task to a new, similar task. The idea of transfer learning is that you train a neural network on a particular data set and then use the lower “frozen” layers as feature extractors.

Then, the top layers are trained on other, more specific data sets. For example, the model was trained to recognize photos of wild animals (e.g., lions, giraffes, bears, elephants, tigers). Next, it can extract features from further images to do more specific analysis and recognize animal species (i.e., it can be used to distinguish the photos of lions and tigers).

The transfer learning technique accelerates the training stage since it allows you to use the backbone network output as features in further stages. But it can be used only when the tasks are similar; otherwise, this method can hurt the effectiveness of the model.
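The mechanics of “frozen backbone plus trainable top layer” can be sketched without any deep learning framework. In the toy example below, a fixed layer with hand-picked weights stands in for the pretrained backbone, and only a small logistic-regression head is trained on its outputs. All weights, data points, and names are hypothetical; a real project would load a pretrained network instead.

```python
import math

# Hypothetical "pretrained" backbone weights. In practice these come from a
# network trained on a large, related data set. They are never updated here.
FROZEN_WEIGHTS = [
    [0.8, -0.5],
    [-0.3, 0.9],
    [0.5, 0.5],
]

# Tiny task-specific data set: two inputs per example, binary labels.
INPUTS = [[1.0, 0.0], [0.9, 0.2], [0.0, 1.0], [0.2, 0.9]]
LABELS = [1, 1, 0, 0]


def extract_features(x):
    """Frozen lower layer: a fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(head, bias, feature):
    return sigmoid(sum(h * f for h, f in zip(head, feature)) + bias)


def log_loss(head, bias, features, labels):
    """Mean binary cross-entropy of the head on precomputed features."""
    total = 0.0
    for f, y in zip(features, labels):
        p = predict(head, bias, f)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(labels)


def train_head(inputs, labels, epochs=200, lr=0.5):
    """Train only the new top layer with full-batch gradient descent."""
    features = [extract_features(x) for x in inputs]  # backbone output, computed once
    head, bias = [0.0] * len(FROZEN_WEIGHTS), 0.0
    for _ in range(epochs):
        grad_h, grad_b = [0.0] * len(head), 0.0
        for f, y in zip(features, labels):
            error = predict(head, bias, f) - y
            grad_h = [g + error * fi for g, fi in zip(grad_h, f)]
            grad_b += error
        head = [h - lr * g / len(labels) for h, g in zip(head, grad_h)]
        bias -= lr * grad_b / len(labels)
    return head, bias, features


head, bias, features = train_head(INPUTS, LABELS)
print("loss before training:", log_loss([0.0, 0.0, 0.0], 0.0, features, LABELS))
print("loss after training: ", log_loss(head, bias, features, LABELS))
```

Because the backbone output is computed once and reused, each training step touches only four numbers (three head weights and a bias), which is where the speed-up described above comes from.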

Importance of quality data in healthcare projects

The availability of big data is one of the biggest drivers of ML advances, including in healthcare. The potential it brings to the domain is evidenced by some high-profile deals that closed over the past decade. In 2015, IBM purchased a company called Merge, which specialized in medical imaging software, for $1bn, acquiring huge amounts of medical imaging data for IBM.

In 2018, the pharmaceutical giant Roche acquired a New York-based company focused on oncology, called Flatiron Health, for $2bn, to fuel data-driven personalized cancer care.

However, the availability of data itself is often not enough to successfully train an ML model for a medtech solution. The quality of data is of utmost importance in healthcare projects. Heterogeneous data types are a challenge to analyze in this field. Data from laboratory tests, medical images, vital signs, and genomics all come in different formats, making it hard to apply ML algorithms to all the data at once.

Another issue is the lack of widespread access to medical datasets. MIT, for example, which is considered to be one of the pioneers in the field, claims to have the only substantially sized database of critical care health records that is publicly accessible.

Its MIMIC database stores and analyzes health data from over 40,000 critical care patients. The data include demographics, laboratory tests, vital signs collected by patient-worn monitors (blood pressure, oxygen saturation, heart rate), medications, imaging data, and notes written by clinicians. Another solid dataset is the Truven Health Analytics database, which holds data from 230 million patients collected over 40 years based on insurance claims. However, it’s not publicly available.

Another problem is the small amount of data for some diseases. Identifying disease subtypes with AI requires a sufficient amount of data for each subtype to train ML models. In some cases the data are too scarce to train an algorithm. In these cases, scientists try to develop ML models that learn as much as possible from healthy patient data. We must use care, however, to make sure we don’t bias algorithms toward healthy patients.

Need data for an ML project? We’ve got you covered!

The size of AI training data sets is critical for machine learning projects. To define the optimal amount of data you need, you have to consider a lot of factors, including project type, algorithm and model complexity, error margin, and input diversity. You can also apply the 10 times rule, but it’s not always reliable when it comes to complex tasks.

If you conclude that the available data isn’t enough and it’s impossible or too costly to gather the required real-world data, try to apply one of the scaling techniques. It can be data augmentation, synthetic data generation, or transfer learning, depending on your project needs and budget.

Be ready for AI built for business.

Dataset in Machine Learning

24x7offshoring provides AI capabilities built into our applications, empowering your business processes with AI. It is as intuitive as it is flexible and powerful. And 24x7offshoring’s unwavering commitment to responsibility ensures thoughtfulness and compliance in every interaction.

24x7offshoring’s enterprise AI, tailored to your particular data landscape and the nuances of your industry, enables smarter decisions and efficiencies at scale:

- AI embedded in the context of your business processes.

- AI trained on the industry’s broadest enterprise data sets.

- AI based on ethics and data privacy standards.

The traditional definition of artificial intelligence is the science and engineering required to make intelligent machines. Machine learning is a subfield or branch of AI that applies complex algorithms, including neural networks, decision trees, and large language models (LLMs), to structured and unstructured data to determine outcomes.

From these algorithms, classifications or predictions are made based on certain input criteria. Examples of machine learning applications are recommendation engines, facial recognition systems, and autonomous vehicles.

Product Benefits

Whether you’re looking to improve your customer experience, boost productivity, optimize business processes, or accelerate innovation, Amazon Web Services (AWS) offers the most comprehensive set of artificial intelligence (AI) services to meet your business needs.

Pre-trained and ready to use

AI services deliver pre-trained models that are ready to use in your applications and workflows.

Continuously learning APIs

Because we use the same deep learning technology that powers Amazon.com and our machine learning services, you get the best accuracy from continuously learning APIs.

No ML experience required

With AI services readily available, you can add AI capabilities to your business applications (no ML experience required) to address common business challenges.

An introduction to machine learning: data sets and resources

Machine learning is one of the hottest topics in technology. The concept has been around for decades, but the conversation is now heating up around its use in everything from Internet search and spam filters to autonomous vehicles. Machine learning training is a process by which machine intelligence is trained with data sets.

To do this well, it is essential to have a wide variety of data sets at your disposal. Fortunately, there are many sources of data sets for machine learning, including public databases and proprietary data sets.

What are machine learning data sets?

Machine learning data sets are essential for machine learning algorithms to learn from. A data set is a collection of examples that machine learning uses to make predictions, with labels that represent the outcome of a given prediction (success or failure). The best way to get started with machine learning is by using libraries like Scikit-learn or Tensorflow, which let you accomplish most tasks without writing the algorithms yourself.

There are three main types of machine learning methods: supervised (learning from examples), unsupervised (learning through clustering), and reinforcement learning (learning through rewards). Supervised learning is the practice of teaching a computer how to recognize patterns in data. Supervised learning algorithms include random forests, nearest neighbors, and support vector machines (SVM).

Machine learning data sets come in many different forms and can be obtained from a variety of places. Text logs, image data, and sensor data are the three most common types of machine learning data sets. A data set is essentially a collection of data that can be used to make predictions about future events or outcomes based on historical records. Data sets are typically labeled before they can be used by machine learning algorithms so that the algorithm knows what outcome to predict or classify as an anomaly.

For example, if you want to predict whether a customer might churn or not, you can label your data set as “churned” and “not churned” so that the machine learning algorithm can learn from past records. Machine learning data sets can be created from any data source, even if that data is unstructured. For example, you can take all the tweets that mention your company and use them as a machine learning data set.

To learn more about machine learning and its origins, read our blog post on machine learning.

What are the types of data sets?

- A machine learning data set is a collection of data that has been organized into training, validation, and test sets. Machine learning commonly uses these data sets to teach algorithms how to recognize patterns in data.

- The training set is the data used to teach the algorithm what to look for and how to recognize it when it sees it in other sets of data.

- A validation set is a collection of known, correct data against which the algorithm can be checked while it is being tuned.

- The test set is the final collection of unseen data on which performance is measured.
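The three-way split above can be sketched in a few lines. The 70/15/15 proportions, the fixed seed, and the function name are assumptions for this illustration; libraries such as Scikit-learn offer their own splitting utilities.

```python
import random


def split_dataset(examples, train=0.7, validation=0.15, seed=42):
    """Shuffle the examples and split them into training, validation, and test sets.

    Whatever is left after the training and validation fractions
    becomes the test set.
    """
    if not 0 < train < 1 or not 0 <= validation < 1 or train + validation >= 1:
        raise ValueError("fractions must leave room for a test set")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


train_set, validation_set, test_set = split_dataset(range(100))
print(len(train_set), len(validation_set), len(test_set))  # 70 15 15
```

Shuffling before splitting matters when the source data is ordered (for example, by date or by class), since an unshuffled split could leave a whole class out of the training set.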

Why do you need data sets for your AI model?

Machine learning data sets are essential for two reasons: they let you train your machine learning models, and they provide a benchmark for measuring the accuracy of your models. Data sets come in a variety of sizes and types, so it is important to select one that is appropriate for the task at hand.

Machine learning models are only as good as the data they are trained on. In general, the more relevant data you have, the better your model will be. That’s why it’s crucial to have a large amount of processed data when working on AI projects, so you can train your model properly and get top-notch results.

Use cases for machine learning data sets

There are many different types of machine learning data sets. Some of the most common include text data, audio data, video data, and image data. Each type of data has its own specific set of use cases.

Text data is a great option for applications that need to understand natural language. Examples include chatbots and sentiment analysis.

Audio data sets are used for a wide range of purposes, including bioacoustics and sound modeling. They may also be useful in computer vision, speech recognition, or music information retrieval.

Video data sets are used to build advanced digital video production software, including motion tracking, facial recognition, and 3D rendering. They can also be created for the purpose of collecting data in real time.

Image data sets are used for a variety of different purposes, including image compression and recognition, speech synthesis, natural language processing, and more.

What makes a good data set?

A good machine learning data set has a few key characteristics: it is large enough to be representative, of high quality, and relevant to the task at hand.

Features of a good machine learning data set

Quantity is important because you need enough data to train your algorithm properly. Quality is essential to avoid problems of bias and blind spots in the data.

If you don’t have enough data, you risk overfitting your model; that is, training it so closely on the available data that it performs poorly when applied to new examples. In such cases, it is always a good idea to consult a data scientist. Relevance and coverage are key factors that should not be forgotten when collecting data. Use real data if possible to avoid problems with bias and blind spots in the data.

In summary: a good machine learning data set contains variables and features that are appropriately structured, has minimal noise (no irrelevant information), is scalable to a large number of data points, and is easy to work with.

Where can I get machine learning data sets?

When it comes to data, there are many different sources that you can use for your machine learning data set. The most common sources of data are the web and AI-generated data. However, other sources include data sets from public and private organizations or individual groups that collect and share data online.

An important factor to keep in mind is that the format of the data will affect how easy or difficult it is to use. Different file formats can be used to collect data, but not all formats are suitable for machine learning models. For example, text documents are easy to read but do not contain information about the variables being collected.

On the other hand, CSV (comma-separated values) files hold both text and numeric data in one place, making them convenient for machine learning models.
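As a small illustration of why CSV is convenient, the sketch below parses a file that mixes text and numeric columns using Python’s standard `csv` module. The column names and the two sample rows are invented for the example.

```python
import csv
import io

# Hypothetical CSV content: the header row names each variable.
RAW_CSV = """area_sqm,bedrooms,neighborhood,price
54.5,2,Downtown,210000
120.0,4,Riverside,455000
"""


def load_rows(text):
    """Parse CSV text into dictionaries, converting the numeric columns."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        rows.append({
            "area_sqm": float(record["area_sqm"]),
            "bedrooms": int(record["bedrooms"]),
            "neighborhood": record["neighborhood"],  # stays as text
            "price": int(record["price"]),
        })
    return rows


for row in load_rows(RAW_CSV):
    print(row)
```

The header row is what a plain text document lacks: it tells both people and code which variable each value belongs to.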

It’s also critical to ensure that your data set’s formatting remains consistent when different people update it manually. This prevents discrepancies from occurring when using a data set that has been updated over the years. For your machine learning model to be accurate, you need consistent input data.

Top 20 free machine learning data set resources

In machine learning, data is key. Without data, there can be no trained models or knowledge gained. Fortunately, there are many sources from which you can obtain free data sets for machine learning.

The more data you have for training, the better, although data alone is not enough. It is equally important to make sure that data sets are task-relevant, accessible, and of high quality. To start, you need to make sure that your data sets are not bloated. You’ll probably need to spend some time cleaning up the data if it has too many rows or columns for what you want to accomplish.
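A first cleaning pass of this kind often means dropping columns the task doesn’t need and removing duplicate rows. Here is a minimal sketch with plain dictionaries; the column names and sample rows are hypothetical, and it assumes all values are hashable (strings or numbers).

```python
def clean_rows(rows, keep_columns):
    """Keep only the needed columns and drop duplicate rows, preserving order."""
    seen = set()
    cleaned = []
    for row in rows:
        trimmed = tuple((col, row[col]) for col in keep_columns)
        if trimmed not in seen:
            seen.add(trimmed)
            cleaned.append(dict(trimmed))
    return cleaned


# Hypothetical raw data with an irrelevant column and a repeated record.
raw_rows = [
    {"customer_id": 1, "plan": "basic", "churned": "no", "internal_note": "a"},
    {"customer_id": 2, "plan": "pro", "churned": "yes", "internal_note": "b"},
    {"customer_id": 1, "plan": "basic", "churned": "no", "internal_note": "c"},
]

for row in clean_rows(raw_rows, ["customer_id", "plan", "churned"]):
    print(row)
```

Note that rows are compared only after the unneeded columns are removed, so two records that differ only in an irrelevant field count as duplicates.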

To save you the hassle of sifting through all the options, we’ve compiled a list of the top 20 free data sets for machine learning.

The 24x7offshoring platform’s data sets are ready for use with many popular machine learning frameworks. The data sets are well organized and updated regularly, making them a valuable resource for anyone looking for interesting data.

If you are looking for data sets to train your models, then there is no better place than 24x7offshoring. With more than 1 TB of data available and continually updated by a committed community that contributes new code and input files that also help shape the platform, it will be hard for you not to find what you need right here!

UCI Machine Learning Repository

The UCI Machine Learning Repository is a data set source that contains a selection of popular data sets in the machine learning community. The data sets in this repository are of high quality and can be used for numerous tasks. Their user-contributed nature means that not all data sets are 100% clean, but most have been carefully curated to meet specific needs without any major issues.

If you are looking for large data sets that are ready for use with 24x7offshoring services, look no further than the 24x7offshoring public data set repository. The data sets here are organized around specific use cases and come preloaded with tools that pair with the 24x7offshoring platform.

Google Dataset Search

Google Dataset Search is a relatively new tool that makes it easy to locate data sets regardless of their source. Data sets are indexed based on their published metadata, making it easy to find what you’re looking for. While the selection is not as robust as some of the other options on this list, it is growing every day.

EU Open Data Portal

The European Union Open Data Portal is a one-stop shop for all your data needs. It provides data sets published by many different institutions within Europe and across 36 different countries. With an easy-to-use interface that lets you search by specific categories, this website has everything a researcher could want when searching for public domain data.

Finance and economics data sets

The financial sector has embraced machine learning with open arms, and it’s no wonder why. Compared to other industries where data can be harder to find, finance and economics offer a trove of data that is ideal for AI models that aim to predict future outcomes based on past performance.

Data sets of this kind allow you to predict things like stock prices, economic indicators, and exchange rates.

24x7offshoring provides access to financial, economic, and alternative data sets. Data is available in different formats:

● time series (date/time stamp) and

● tables: numeric/categorical types, including strings for those who need them

The World Bank is a useful resource for anyone who wants to get a sense of world events, and its data bank has everything from population demographics to key indicators that may be relevant in development work. It is open without registration, so you can access it easily.

World Bank Open Data is a suitable source for large-scale analyses. The information it contains includes population demographics, macroeconomic data, and key development indicators that will help you understand how the world’s countries are faring on various fronts.

Image Datasets/Computer Vision Datasets

A picture is worth a thousand words, and this is especially true in the field of computer vision. Self-driving cars are growing in popularity, and facial recognition software is increasingly used for security purposes. The medical imaging industry also relies on databases containing images and videos to effectively diagnose patient conditions.

Free image data sets can be used for facial recognition.

The 24x7offshoring data set contains hundreds of thousands of color images that are ideal for training image classification models. While this data set is most often used for academic research, it can also be used to train machine learning models for commercial purposes.

Natural Language Processing Datasets

The current state of the art in device understanding has been applied to a wide variety of fields including voice and speech reputation, language translation, and text analysis. Data sets for natural language processing are typically large in size and require a lot of computing power to teach machine learning models.

It is important to remember before purchasing a data set when it comes to system learning, statistics is key. The more statistics you have, the better your models will perform. but not all information is equal. Before purchasing a data set for your system learning project, there are several things to remember:

Guidelines before purchasing a data set

Plan your mission carefully before purchasing a data set because of the reality: not all data sets are created equal. Some data sets are designed for research purposes, while others are intended for program manufacturing. Make sure the data set you purchase fits your wishes.

Type and friendliness of statistics: not all data is of the same type either. Make sure the data set contains information so that one can be applicable to your company.

Relevance to your business: Data sets can be extraordinarily massive and complicated, so make sure the records are relevant to your specific mission. If you’re working on a facial popularity system, for example, don’t buy a photo dataset that’s best made up of cars and animals.

In machine learning, the phrase “one size does not fit all” is especially relevant. That’s why we offer custom-built data sets that can fit the unique needs of your business.

High-quality data sets for machine learning

Data sets for machine learning and artificial intelligence are crucial to producing results. To achieve this, you need access to large quantities of data that meet all the requirements of your particular learning goal. This is often one of the most difficult tasks when running a machine learning project.

At 24x7offshoring, we understand the importance of data and have assembled a massive international crowd of 4.5 million 24x7offshoring contributors to help you prepare your data sets. We offer a wide variety of data sets in different formats, including text, images, and videos. Best of all, you can get a quote for your custom machine learning data set by clicking the link below.

There are also links to learn more about machine learning datasets, plus information about our team of specialists who can help you get started quickly and easily.

Quick Tips for Your Machine Learning Project

1. Make sure all data is labeled correctly. This includes the input and output variables in your model.

2. Avoid using non-representative samples when training your models.

3. Use a variety of data sets if you want to train your models effectively.

4. Select data sets that are applicable to your problem domain.

5. Preprocess your data so that it is ready for modeling.

6. Be careful when choosing machine learning algorithms; not all algorithms are suitable for all types of data sets.

Machine learning is becoming increasingly vital in our society.

It’s not just for the big players, though: all businesses can benefit from machine learning. To get started, you need to find a good data set and database. Once you have them, your data scientists and data engineers can take their work to the next level. If you’re stuck at the data collection stage, it may be worth reconsidering how you collect your data.

What is a machine learning dataset and why is it critical to your AI model?

According to the Oxford Dictionary, a data set in machine learning is “a collection of data that is treated as a single unit by a computer.” This means that a data set contains many separate pieces of data, but can be used to train an algorithm to find predictable patterns within the data set as a whole.

Data is a vital component of any AI model and basically the main reason for the rise in popularity of machine learning that we are witnessing today. Thanks to the availability of data, scalable ML algorithms became viable as real products that can generate revenue for a business, rather than as a by-product of its primary processes.

Your business has always been data-driven. Factors such as what customers bought, product popularity, and the seasonality of customer flow have always been essential to running a business. However, with the advent of machine learning, it is now essential to collect this data into data sets.

Sufficient volumes of data will allow you to analyze hidden trends and patterns and make decisions based on the data set you have built. However, although it may seem simple enough, working with data is more complex than that. It requires proper treatment of the available data, from defining the purpose of a data set to preparing the raw data so that it is actually usable.

Splitting Your Data: Training, Testing, and Validation Data Sets

In machine learning, a data set is typically not used only for training. A single data set that has already been processed is usually divided into several types of data sets, which is necessary to check how well the model training went.

For this reason, a test data set is usually held out from the data. In addition, a validation data set, while not strictly necessary, is very useful for avoiding training your algorithm on the same type of data and making biased predictions.
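As a rough illustration of the split described above, here is a minimal Python sketch that uses only the standard library. The function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are assumptions chosen for this example, not values prescribed by the article.

```python
import random


def split_dataset(records, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle records and split them into training, validation, and test sets.

    The default fractions and the seed are illustrative assumptions.
    """
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")

    shuffled = list(records)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle keeps the split reproducible

    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)

    test = shuffled[:n_test]                # held out until the final evaluation
    val = shuffled[n_test:n_test + n_val]   # used to tune and compare models
    train = shuffled[n_test + n_val:]       # everything else is used for training
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_dataset(range(100))
    print(len(train), len(val), len(test))
```

Shuffling before slicing matters when the records are stored in some meaningful order (for example by date or by class); without it, the test set could end up containing only one kind of example.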

Gather. The first thing you need to do when looking for a data set is to choose the sources you will use for ML data collection. There are generally three types of sources you can choose from: freely available open-source data sets, the Internet, and synthetic data generators. Each of these sources has its pros and cons and should be used in specific cases. We will talk about this step in more detail in the next section of this article.

Preprocess. There is a principle in data science that every trained professional adheres to. Start by answering this question: has the data set you are using been used before? If not, assume that this data set is flawed. If so, there is still a high probability that you will need to re-adapt the set to your specific needs. After we cover the sources, we’ll talk more about the features that characterize a suitable data set.
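A first pass over an unfamiliar data set might look something like the sketch below, which counts missing values and duplicate rows. The helper `audit_records` and the housing-style field names (`area`, `bedrooms`, `price`) are hypothetical and exist only for this illustration; the sketch assumes each record is a dictionary with hashable values.

```python
from collections import Counter


def audit_records(records, required_fields):
    """Count missing required fields and duplicate rows in a list of dicts."""
    missing = Counter()
    seen = set()
    duplicates = 0

    for row in records:
        for field in required_fields:
            value = row.get(field)
            if value is None or value == "":
                missing[field] += 1

        # A row counts as a duplicate when all required fields match an earlier row.
        key = tuple(row.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    return {"rows": len(records), "missing": dict(missing), "duplicates": duplicates}


if __name__ == "__main__":
    # Hypothetical rows for a housing price data set.
    rows = [
        {"area": 120, "bedrooms": 3, "price": 250000},
        {"area": 120, "bedrooms": 3, "price": 250000},
        {"area": 80, "bedrooms": None, "price": 180000},
        {"area": 95, "bedrooms": 2},
    ]
    print(audit_records(rows, ["area", "bedrooms", "price"]))
```

A report like this does not fix anything by itself, but it gives a quick sense of how much cleaning a data set needs before it can be used for training.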

Annotate. Once you’ve made sure your data is clean and relevant, you also need to make sure it’s understandable to a computer. Machines do not understand data in the same way humans do (they cannot assign the same meaning to images or words as we do).

This step is where many companies decide to outsource the task to experienced data labeling services, when hiring a trained annotation professional is not feasible. We have a great article on building an in-house labeling team versus outsourcing this task to help you understand which way is best for you.