What is Data Labeling?

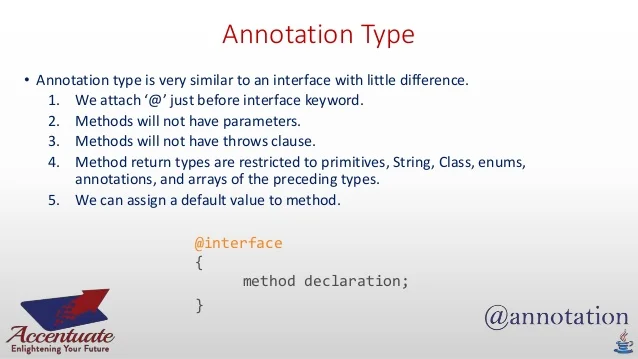

In machine learning, data labeling is the process of identifying raw data (images, text files, videos, etc.) and adding one or more meaningful labels to provide context so that a machine learning model can learn from it. For example, labels can indicate whether a photo contains a bird or a car, which words are spoken in an audio recording, or whether an X-ray image contains a tumor. Data annotation is required for a variety of use cases, including computer vision, natural language processing, and speech recognition.

How Data Labeling Works

Today, most practical machine learning models use supervised learning, which applies an algorithm to map an input to an output. For supervised learning to work, you need a set of labeled data from which the model can learn to make the right decisions.

The starting point for data labeling is usually to ask humans to make judgments about given unlabeled data. For example, an annotator might need to label all images in the dataset for which the answer to “does the photo contain a bird” is “yes”. Adding labels can be as coarse as a simple yes/no, or as fine-grained as identifying the pixels in an image associated with birds.
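
The two ends of that range can be sketched in a few lines of Python. This is only an illustration: the `ImageLabels` container and the `coarse_from_mask` helper are hypothetical names, and the tiny 3×4 mask stands in for a real image.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ImageLabels:
    """Hypothetical container holding two label granularities for one image."""
    contains_bird: bool          # coarse, image-level yes/no label
    bird_mask: List[List[int]]   # fine-grained label: 1 where a pixel belongs to a bird


def coarse_from_mask(mask: List[List[int]]) -> bool:
    """Derive the yes/no label from a pixel mask: any bird pixel means 'yes'."""
    return any(pixel == 1 for row in mask for pixel in row)


# A toy 3x4 "image" in which three pixels were marked as bird by an annotator.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]
labels = ImageLabels(contains_bird=coarse_from_mask(mask), bird_mask=mask)
bird_pixels = sum(sum(row) for row in labels.bird_mask)
```

The pixel mask carries strictly more information than the yes/no label (the coarse label can be derived from it, but not the other way around), which is why fine-grained labeling usually costs more annotator time.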

Machine learning models learn underlying patterns using human-supplied labels in a process called model training. The model trained in this way can be used to make predictions on new data.

In machine learning, a correctly labeled dataset that you use as an objective standard to train and evaluate a given model is often called a “ground truth”. The accuracy of the trained model will depend on the accuracy of the ground truth, so it is critical to dedicate some time and resources to ensure high accuracy data labeling.
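
As a minimal sketch of how ground truth serves as the objective standard during evaluation, the snippet below compares a model's predictions against human-supplied labels. The `accuracy` helper and the example labels are assumptions made for illustration, not part of any particular library.

```python
def accuracy(predictions, ground_truth):
    """Fraction of predictions that match the ground-truth labels."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    correct = sum(p == t for p, t in zip(predictions, ground_truth))
    return correct / len(ground_truth)


# Illustrative labels only: five images, one of which the model gets wrong.
ground_truth = ["bird", "car", "bird", "car", "bird"]
predictions = ["bird", "car", "car", "car", "bird"]
score = accuracy(predictions, ground_truth)
```

Note that the score is only as trustworthy as the ground truth itself: if a human label is wrong, a correct prediction is counted as an error and vice versa.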

What are the common types of data labeling?

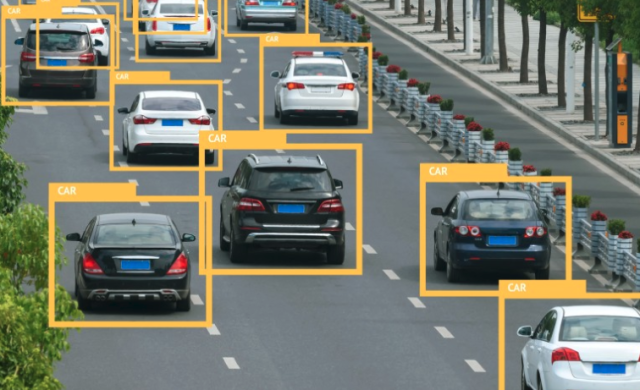

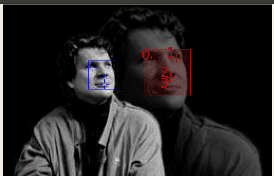

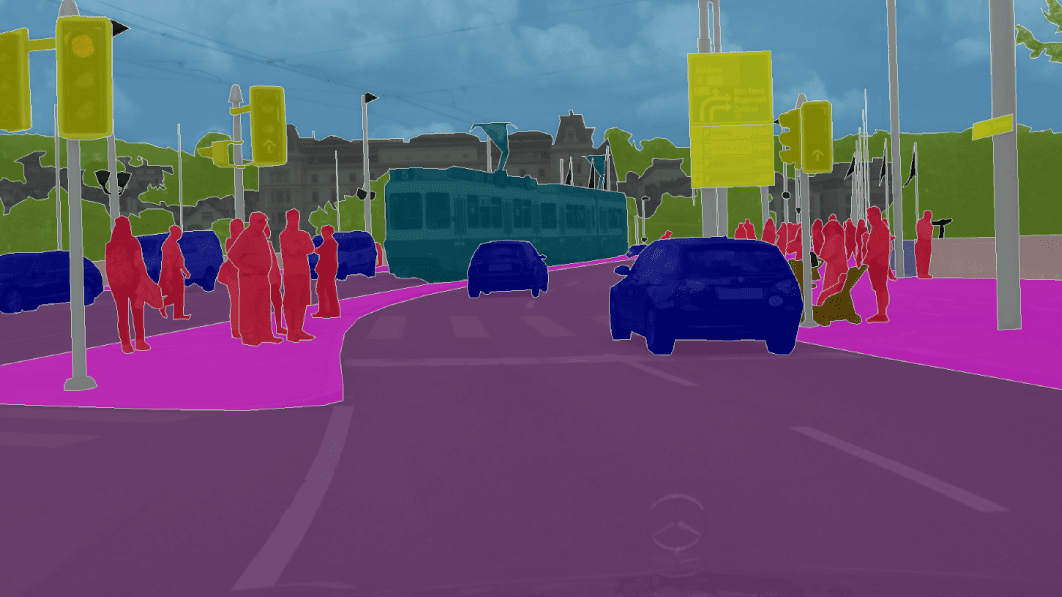

Computer Vision: When building a computer vision system, you first need to label images, pixels, or keypoints, or create boundaries (called bounding boxes) that completely surround a digital image to generate a training dataset. For example, you can categorize images by quality type (for example, product and lifestyle images) or content (what the image itself actually contains), and you can segment images at specified pixel levels.

You can then use this training data to build computer vision models that can be used to automatically classify images, detect the location of objects, identify key points in images, or segment images.

Natural language processing: Natural language processing requires you to first manually identify important parts of the text or annotate the text with specific labels to generate your training dataset. For example, you might want to determine the sentiment or intent of a text snippet, recognize parts of speech, classify proper nouns such as places and people’s names, and recognize text in images, PDFs, or other files.

You can do this by drawing bounding boxes around the text and manually transcribing it into the training dataset. Natural language processing models are used for sentiment analysis, named entity recognition, and optical character recognition.

Audio processing: Audio processing converts all types of sounds, such as speech, wildlife noises (barks, howls, or birdsong), and construction sounds (shattering glass, scans, or sirens) into a structured format for use in machine learning. Audio processing often requires that you first manually transcribe it into written text. You can then find deeper data about the audio by adding tags and categorizing the audio. This classified audio becomes your training dataset.

What are the best practices for data labeling?

There are many techniques that can be used to improve the efficiency and accuracy of data annotation. These include:

An intuitive and streamlined task interface helps minimize cognitive load and context switching for human annotators.

Annotator consensus helps to offset errors/biases of individual annotators. Annotator consensus involves sending each dataset object to multiple annotators, and then merging their responses (called “annotations”) into a single label.

Label audits are used to verify the accuracy of labels and update them as needed.

Active learning can use machine learning to identify the most useful data that humans should label, making data labeling more efficient.
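
Annotator consensus, described above, is often implemented as a majority vote. The sketch below assumes that approach; the `consensus_label` function, the `min_agreement` threshold, and the item IDs are hypothetical, and real labeling tools may use more elaborate merging schemes.

```python
from collections import Counter


def consensus_label(annotations, min_agreement=0.5):
    """Merge several annotators' labels for one item by majority vote.

    Returns (label, agreement). The label is None when the most common
    label does not exceed min_agreement, signalling that the item needs review.
    """
    if not annotations:
        raise ValueError("at least one annotation is required")
    label, votes = Counter(annotations).most_common(1)[0]
    agreement = votes / len(annotations)
    if agreement <= min_agreement:
        return None, agreement
    return label, agreement


# Illustrative data: each dataset object was sent to several annotators.
item_annotations = {
    "img_001": ["bird", "bird", "car"],  # two of three annotators agree
    "img_002": ["car", "bird"],          # tie, so no consensus
}
merged = {item: consensus_label(labels) for item, labels in item_annotations.items()}
```

Items that come back with no label are natural candidates for a label audit.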

How to complete data labeling efficiently?

Successful machine learning models are built on a large amount of high-quality training data. However, the process of creating the training data needed to build these models is often expensive, complex, and time-consuming. Most models created today require humans to manually annotate data so the model can learn how to make the right decisions. To address this challenge, machine learning models can be used to automatically label data to make labeling more efficient.

In this process, the machine learning model used to annotate the data is first trained on a subset of the raw data annotated by humans. When the labeling model has high confidence in its results based on what it has learned so far, it automatically applies labels to the raw data. When its confidence is low, it passes the data to a human labeler.

Then, the human-generated labels are fed back to the labeling model for it to learn from and improve its ability to automatically label the next set of raw data. Over time, the model can automatically label more and more data and greatly speed up the creation of training datasets.
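
The routing step of this loop can be sketched as follows. Everything here is an assumption made for illustration: `route_items`, the 0.9 threshold, and `toy_predict` (a stand-in for a trained labeling model that returns a label and a confidence score) are hypothetical.

```python
def route_items(items, predict, threshold=0.9):
    """Split items into auto-labeled results and a queue for human labelers.

    `predict` is any callable returning (label, confidence) for an item.
    """
    auto_labeled, needs_human = {}, []
    for item in items:
        label, confidence = predict(item)
        if confidence >= threshold:
            auto_labeled[item] = label   # model is confident: apply its label
        else:
            needs_human.append(item)     # model is unsure: send to a person
    return auto_labeled, needs_human


def toy_predict(item):
    """Stand-in for a trained labeling model (hard-coded scores for the demo)."""
    scores = {
        "img_a": ("bird", 0.97),
        "img_b": ("car", 0.55),
        "img_c": ("bird", 0.91),
    }
    return scores[item]


auto_labeled, needs_human = route_items(["img_a", "img_b", "img_c"], toy_predict)

# Human labels for the low-confidence items join the training data; in a real
# system the labeling model would then be retrained on this larger set.
human_labels = {"img_b": "car"}
training_set = {**auto_labeled, **human_labels}
```

The threshold is the main design choice: a higher value sends more items to humans but reduces the risk of confidently wrong automatic labels entering the training set.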

What is data labeling?

In machine learning, data labeling is the process of identifying raw data (images, text files, videos, and so on) and adding one or more meaningful and informative labels to provide context so that a machine learning model can learn from it. For example, labels might indicate whether a photo contains a bird or a car, which words were spoken in an audio recording, or whether an X-ray contains a tumor. Data labeling is required for a variety of use cases, including computer vision, natural language processing, and speech recognition.

How does data labeling work?

Today, most practical machine learning models use supervised learning, which applies an algorithm to map one input to one output. For supervised learning to work, you need a labeled set of data that the model can learn from to make correct decisions. Data labeling typically starts by asking humans to make judgments about a given piece of unlabeled data. For example, labelers might be asked to tag all the images in a dataset where “does the photo contain a bird” is true. The tagging can be as rough as a simple yes/no or as granular as identifying the specific pixels in the image associated with the bird. The machine learning model uses human-provided labels to learn the underlying patterns in a process called “model training.” The result is a trained model that can be used to make predictions on new data.

In machine learning, a properly labeled dataset that you use as the objective standard to train and assess a given model is often called “ground truth.” The accuracy of your trained model will depend on the accuracy of your ground truth, so spending the time and resources to ensure highly accurate data labeling is essential.

What are some common types of data labeling?

Computer Vision: When building a computer vision system, you first need to label images, pixels, or key points, or create a border that fully encloses a digital image, known as a bounding box, to generate your training dataset. For example, you can classify images by quality type (like product vs. lifestyle images) or content (what’s actually in the image itself), or you can segment an image at the pixel level. You can then use this training data to build a computer vision model that can be used to automatically categorize images, detect the location of objects, identify key points in an image, or segment an image.

Natural Language Processing: Natural language processing requires you to first manually identify important sections of text or tag the text with specific labels to generate your training dataset. For example, you may want to identify the sentiment or intent of a text blurb, identify parts of speech, classify proper nouns like places and people, and identify text in images, PDFs, or other files. To do this, you can draw bounding boxes around text and then manually transcribe the text in your training dataset. Natural language processing models are used for sentiment analysis, named entity recognition, and optical character recognition.

Audio Processing: Audio processing converts all kinds of sounds such as speech, wildlife noises (barks, whistles, or chirps), and building sounds (breaking glass, scans, or alarms) into a structured format so it can be used in machine learning. Audio processing often requires you to first manually transcribe it into written text. From there, you can uncover deeper information about the audio by adding tags and categorizing the audio. This categorized audio becomes your training dataset.

What are some best practices for data labeling?

There are many techniques to improve the efficiency and accuracy of data labeling. Some of these techniques include:

Intuitive and streamlined task interfaces to help minimize cognitive load and context switching for human labelers.

Labeler consensus to help counteract the error/bias of individual annotators. Labeler consensus involves sending each dataset object to multiple annotators and then consolidating their responses (called “annotations”) into a single label.

Label auditing to verify the accuracy of labels and update them as necessary.

Active learning to make data labeling more efficient by using machine learning to identify the most useful data to be labeled by humans.

How can data labeling be done efficiently?

Successful machine learning models are built on the shoulders of large volumes of high-quality training data. But the process of creating the training data needed to build these models is often expensive, complicated, and time-consuming. The majority of models created today require a human to manually label data in a way that allows the model to learn how to make correct decisions. To overcome this challenge, labeling can be made more efficient by using a machine learning model to label data automatically.

In this process, a machine learning model for labeling data is first trained on a subset of your raw data that has been labeled by humans. Where the labeling model has high confidence in its results based on what it has learned so far, it will automatically apply labels to the raw data. Where the labeling model has lower confidence in its results, it will pass the data to humans to do the labeling. The human-generated labels are then provided back to the labeling model for it to learn from and improve its ability to automatically label the next set of raw data. Over time, the model can label more and more data automatically and substantially speed up the creation of training datasets.

Global conversations on artificial intelligence and machine learning usually revolve around two things: data and algorithms. To stay on top of this rapidly advancing technology, you want to understand both.

To describe their relationship briefly, AI models use algorithms to learn from what is called training data and then apply that knowledge to meet the model objectives.

For the purposes of this article, we will focus on data only. Raw data by itself does not mean much to a supervised model, and poorly labeled data can cause your model to go down in flames.

In this article, we’ll cover everything you need to know about data labeling to make informed decisions for your business and ultimately build high-performance AI and machine learning models:

What is data labeling?

What is it used for?

How does data labeling work?

Common types of data labeling

What are some of the best practices for data labeling?

What should I look for when choosing a data labeling platform?

Key takeaways

What is data labeling?

Data labeling is the task of identifying objects in raw data, such as images, video, text, or lidar, and tagging them with labels that help your machine learning model make accurate predictions and estimations. Identifying objects in raw data sounds simple in theory. In practice, it is more about using the right annotation tools to outline objects of interest very carefully, leaving as little room for error as possible, and doing that across a dataset of thousands of items.

What is it used for?

Labeled datasets are especially important to supervised learning models, where they help a model to process and understand the input data. Once the patterns in the data are analyzed, the predictions either match the objective of your model or they don’t. This is where you determine whether your model needs further tuning and testing.

Data annotation, when fed into the model and applied for training, can help autonomous vehicles stop at pedestrian crossings, digital assistants recognize voices, security cameras detect suspicious behavior, and much more. If you want to learn more about use cases for labeling, have a look at our post on the real-life use cases of image annotation.

How does data labeling work?

Here’s a walkthrough of the specific steps involved in the data labeling process:

Data collection

It all starts with getting the right amount and variety of data to satisfy your model requirements. There are several ways you can go here:

Manual data collection:

A large and diverse amount of data generally yields more accurate results than a small amount of data. One real-world example is Tesla collecting large amounts of data from its vehicle owners. However, using human resources for data assembly is not practical for all use cases.

For example, if you’re developing an NLP model and need reviews of multiple products from multiple channels/sources as data samples, it might take you days to find and access the information you need. In this case, it makes more sense to use a web scraping tool, which can help automatically find, collect, and update the data for you.

Open-source datasets:

An alternative option is using open-source datasets, which can enable you to perform training and data analysis at scale. Accessibility and cost-effectiveness are the two primary reasons specialists may opt for open-source datasets. Besides, incorporating an open-source dataset is a great way for smaller companies to capitalize on what is already available to large organizations.

With this in mind, beware that with open-source datasets, your data can be prone to vulnerabilities: there’s the risk of incorrect use of data or potential gaps that will ultimately affect your model performance. So, it all boils down to identifying the value open-source brings to your model and weighing the tradeoffs of adopting the ready-made dataset.

Synthetic data generation:

Synthetic data/datasets are both a blessing and a curse, as they can be controlled in simulated environments by developers. They are not as costly as they may seem at first: the primary costs associated with synthetic data are essentially the initial simulation expenses. Synthetic datasets are common across two broad categories, computer vision and tabular data (e.g., healthcare and security data). Autonomous driving companies are often at the forefront of synthetic data consumption, as they regularly have to deal with invisible or occluded objects. Hence the need for a faster way to recreate data featuring objects that real-life datasets miss.

Other advantages of using synthetic datasets include boundless scalability and coverage of edge cases where manual collection would be dangerous (given the possibility of always generating more data vs. aggregating manually).

Data tagging

Once you have your raw (unlabeled) data up and ready, it’s time to give your objects a tag. Data tagging consists of human labelers identifying elements in unlabeled data using a data labeling platform. They can be asked to determine whether an image contains a person or not, or to track a ball in a video. For all these tasks, the end result serves as a training dataset for your model.

At this point, you probably have concerns about your data security. Indeed, security is a major concern, especially if you’re dealing with a sensitive project. To address these concerns, SuperAnnotate complies with industry regulations.

Benefit: With SuperAnnotate, you keep your data on-premise, which provides greater control and privacy, as no sensitive information is shared with third parties. You can connect our platform with any data source, allowing multiple people to collaborate and create the most accurate annotations in no time. You can also whitelist IP addresses, adding extra protection to your dataset.

Quality assurance

Your labeled data must be informative and accurate to create top-performing machine learning models. So, having a quality assurance (QA) process in place to check the accuracy of your labeled data goes a long way. By improving the instruction flow for the QA, you can significantly improve QA efficiency, eliminating any possible ambiguity in the data labeling process.

Keep in mind that locations and cultures matter when it comes to perceiving objects/text that are subject to annotation. So, if you have a remote international team of annotators, make sure they’ve undergone proper training to establish consistency in contextualizing and understanding project guidelines.

QA training can become a long-term investment and pay off in the future. Training alone, though, might not ensure consistent quality in delivery for all use cases. That’s where live QA steps to the fore, as it helps detect and prevent potential errors on the spot and raises productivity levels for data labeling tasks.

Model training

To train an ML model, you need to feed the machine learning algorithm with labeled data that contains the correct answer. With your newly trained model, you can make predictions on a new set of data. However, there are a number of questions to ask yourself before and after training to ensure the accuracy of the predictions/output:

1) Do I have enough data?

2) Do I get the expected outcomes?

3) How do I monitor and evaluate the model’s performance?

4) What is the ground truth?

5) How do I know if the model misses anything?

6) How do I find these cases?

7) Should I use active learning to find better samples?

8) Which ones should I select to label again?

9) How do I decide if the model is successful in the end?

Rule of thumb: It’s not enough to deploy your model in production. You also need to keep an eye on how it’s performing. There’s a great resource that we put together to further guide you on how to build not just a training dataset but premium-quality SuperData for your AI. Be sure to check it out.

Common types of data labeling

We suggest viewing data labeling through the lens of two major categories:

Computer vision

By using high-quality training data (such as image, video, lidar, and DICOM) and covering intersections of machine learning and AI, computer vision models cover a wide range of tasks. That includes object detection, image classification, face recognition, visual relationship detection, instance and semantic segmentation, and much more.

However, data labeling for computer vision has its own nuances when compared to that of NLP. The common differences between data labeling for computer vision vs. NLP mostly pertain to the applied annotation techniques. In computer vision applications, for example, you will encounter polygons, polylines, and semantic and instance segmentation, which are not typical for NLP.

Natural language processing (NLP)

NLP is where computational linguistics, machine learning, and deep learning meet to extract insights from textual data. Data labeling for NLP is a bit different in that here, you’re either adding a tag to the file or using bounding boxes to outline the part of the text you intend to label (you can typically annotate files in PDF, TXT, and HTML formats). There are different approaches to data labeling for NLP, often broken down into syntactic and semantic groups. More on that in our post on natural language processing techniques and use cases.

What are some of the best practices for data labeling?

There’s no one-size-fits-all approach. From our experience, we recommend these tried and tested data labeling practices to run a successful project.

Collect diverse data

You want your data to be as diverse as possible to minimize dataset bias. Suppose you want to train a model for autonomous vehicles. If the training data was collected in a city, then the car will have trouble navigating in the mountains. Or take another case: your model simply won’t detect obstacles at night if your training data was collected during the day. For this reason, make sure you get images and videos from different angles and lighting conditions.
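
One simple way to spot this kind of gap before labeling starts is to count how often each capture condition appears in the dataset metadata. The sketch below assumes each sample carries a lighting tag; the `underrepresented` helper, the `min_share` threshold, and the tag names are hypothetical.

```python
from collections import Counter


def underrepresented(values, expected, min_share=0.2):
    """Return the expected conditions whose share of the dataset is below min_share.

    Conditions that never appear are flagged too, because their share is zero.
    """
    if not values:
        raise ValueError("values must not be empty")
    counts = Counter(values)
    total = len(values)
    return [cond for cond in expected if counts[cond] / total < min_share]


# Illustrative metadata: nine daytime samples, one at night, none at dusk.
lighting = ["day"] * 9 + ["night"]
flagged = underrepresented(lighting, expected=["day", "night", "dusk"])
```

Passing the list of expected conditions explicitly matters: a condition with zero samples would otherwise go unnoticed, and those are exactly the cases a model trained only on daytime data would miss.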

Depending on the characteristics of your data, you can prevent bias in different ways. If you’re collecting data for natural language processing, you may be dealing with assessment and measurement, which in turn can introduce bias. For instance, you cannot attribute a higher likelihood of committing heinous crimes to members of a minority group simply by taking the arrest rates within their population. So, removing bias from your collected data up front is a critical pre-processing step that precedes data annotation.

Collect specific/representative data

Feeding the model with the exact data it needs to operate successfully is a game-changer. Your collected data has to be as specific as you want your prediction results to be. You may wonder what we mean by “specific data”. To clear things up, if you’re training a model for a robot waiter, use data that was collected in restaurants. Feeding the model with training data collected in a mall, airport, or hospital will cause unnecessary confusion.

Set up an annotation guideline

In today’s cut-throat AI and machine learning environment, composing informative, clear, and concise annotation guidelines pays off more than you might expect. Annotation instructions help avoid potential mistakes during data labeling before they affect the training data.

Bonus tip: How can you improve annotation guidelines further? Consider illustrating the labels with examples: visuals help annotators and QAs understand the annotation requirements better than written explanations. The guideline should also include the end goal to show the workforce the bigger picture and motivate them to strive for perfection.

Establish a QA process

Integrate a QA method into your project pipeline to assess the quality of the labels and ensure successful project results. There are a few ways you can do that:

Audit tasks: Include “audit” tasks among regular tasks to test the human worker’s work quality. “Audit” tasks should not differ from other work items to avoid bias.

Targeted QA: Prioritize work items that contain disagreements between annotators for review.

Random QA: Regularly check a random sample of work items for each annotator to test the quality of their work.

Apply these methods and use the findings to improve your guidelines or train your annotators.
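
Targeted QA and random QA can both be expressed as simple selection rules over the collected annotations. The following is a minimal sketch under assumed data structures: `annotations_by_item` maps each work item to the label given by each annotator, and the function names, annotator IDs, and sample size are all hypothetical.

```python
import random


def targeted_qa(annotations_by_item):
    """Return work items on which annotators disagree, for priority review."""
    return [
        item
        for item, labels in annotations_by_item.items()
        if len(set(labels.values())) > 1
    ]


def random_qa(annotations_by_item, sample_size, seed=None):
    """Pick up to sample_size random work items per annotator for spot checks."""
    rng = random.Random(seed)
    per_annotator = {}
    for item, labels in annotations_by_item.items():
        for annotator in labels:
            per_annotator.setdefault(annotator, []).append(item)
    return {
        annotator: rng.sample(items, min(sample_size, len(items)))
        for annotator, items in per_annotator.items()
    }


# Illustrative data: three documents, each labeled by two annotators.
annotations_by_item = {
    "doc_1": {"ann_a": "positive", "ann_b": "positive"},
    "doc_2": {"ann_a": "negative", "ann_b": "positive"},
    "doc_3": {"ann_a": "neutral", "ann_b": "neutral"},
}
to_review = targeted_qa(annotations_by_item)
spot_checks = random_qa(annotations_by_item, sample_size=2, seed=0)
```

The two rules complement each other: targeted QA catches visible disagreements, while random QA can surface errors on items where annotators happened to agree on the wrong label.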

Find the most suitable annotation pipeline

Implement an annotation pipeline that fits your project needs to maximize efficiency and minimize delivery time. For example, you can set the most popular label at the top of the list so that annotators don’t waste time searching for it. You can also set up an annotation workflow at SuperAnnotate to define the annotation steps and automate the class and tool selection process.

Keep communication open

Keeping in touch with managed data labeling teams can be tough. Especially if the team is remote, there is more room for miscommunication or keeping important stakeholders out of the loop. Productivity and project efficiency come with establishing a solid and easy-to-use line of communication with the workforce. Set up regular meetings and create group channels to exchange critical insights in minutes.

Provide regular feedback

Communicate annotation errors in labeled data with your workforce for a more streamlined QA process. Regular feedback helps them get a better understanding of the guidelines and ultimately deliver high-quality data labeling. Make sure your feedback is consistent with the provided annotation guidelines. If you encounter an error that was not clarified in the guidelines, consider updating them and communicating the change to the team.

Run a pilot project

Always test the waters before jumping in. Put your workforce, annotation guidelines, and project processes to the test by running a pilot project. This will help you determine the completion time, evaluate the performance of your labelers and QAs, and improve your guidelines and processes before starting your project. Once your pilot is complete, use performance results to set reasonable targets for the workforce as your project progresses.

Note: Task complexity is a major indicator of whether you need to run a pilot project. Complex projects often benefit more from a pilot, as you get to gauge the success of your project on a budget. Run a free pilot project with SuperAnnotate and get to label data 10x faster.

What should I look for when choosing a data labeling platform?

High-quality data requires an expert data labeling team paired with robust tooling. You can either buy the platform, build it yourself if you can’t find one that suits your use case, or alternatively use data labeling services. So, what should you look for when choosing a platform for your data labeling project?

Inclusive tools

Before looking for a data labeling platform, think about the tools that fit your use case. Maybe you need the polygon tool to label cars or perhaps a rotating bounding box to label containers. Make sure the platform you choose includes the tools you need to create the highest-quality labels.

Think a couple of steps ahead and consider the labeling tools you might need in the future, too. Why invest time and resources in a labeling platform that you won’t be able to use for future projects? Training employees on a new platform costs time and money, so planning a couple of steps ahead will save you a headache.

Integrated management system

Effective management is the building block of a successful data labeling project. For this reason, the selected data labeling platform should contain an integrated management system to manage projects, data, and users. A robust data labeling platform should also enable project managers to track project progress and user productivity, communicate with annotators regarding mislabeled data, implement an annotation workflow, review and edit labels, and monitor quality assurance.

Powerful project management features contribute to the delivery of equally powerful prediction results. Some of the typical features of successful project management systems include advanced filtering and real-time analytics, which you should keep in mind when selecting a platform.

Quality assurance process

The accuracy of your data determines the quality of your machine learning model. Make sure that the labeling platform you choose features a quality assurance process that lets the project manager control the quality of the labeled data. Note that in addition to a solid quality assurance system, the data annotation services that you choose should be trained, vetted, and professionally managed to help you achieve top performance.

Guaranteed privacy and security

The privacy of your data should be your utmost priority. Choose a secure labeling platform that you can trust with your data. If your data is extremely niche-specific, request a workforce that knows how to handle your project needs, eliminating concerns about mislabeling or leakage. It’s also a good idea to check out the security standards and regulations your platform of interest complies with. Other questions to ask for guaranteed security include but are not limited to:

1) How is data access controlled?

2) How are passwords and credentials stored on the platform?

3) Where is the data hosted on the platform?

Technical support and documentation

Make sure the data annotation platform you choose provides technical support through complete and up-to-date documentation and an active support team to guide you throughout the data labeling process. Technical issues may arise, and you want the support team to be available to address them and minimize disruption. Consider asking the support team how they provide troubleshooting assistance before subscribing to the platform.

Key takeaways

AI is revolutionizing the way we do things, and your business should get on board as soon as possible. The endless possibilities of AI are making industries smarter: from agriculture to medicine, sports, and more. Data annotation is the first step towards innovation. Now that you know what data labeling is, how it works, its best practices, and what to look for when choosing a data annotation platform, you can make informed decisions for your business and take your operations to the next level.

Everything You Need to Know About Data Labeling - Featuring Meeta Dash

Artificial intelligence (AI) is only as good as the data it is trained with. With the quality and quantity of training data directly determining the success of an AI algorithm, it’s no surprise that, on average, 80% of the time spent on an AI project goes to wrangling training data, including data labeling. When building an AI model, you’ll start with a massive amount of unlabeled data. Labeling that data is an integral step in data preparation and preprocessing for building AI.

But what exactly is data labeling in the context of machine learning (ML)? It’s the process of detecting and tagging data samples, which is especially important when it comes to supervised learning in ML. Supervised learning occurs when both data inputs and outputs are labeled to improve future learning of an AI model. The whole data labeling workflow often includes data annotation, tagging, classification, moderation, and processing. You’ll need to have a comprehensive process in place to convert unlabeled data into the training data needed to teach your AI models which patterns to recognize to produce a desired outcome. For example, training data for a facial recognition model may require tagging images of faces with specific features, such as eyes, nose, and mouth. Alternatively, if your model needs to perform sentiment analysis (as in a case where you need to detect whether someone’s tone is sarcastic), you’ll need to label audio files with various inflections.

How to Get Labeled Data

Data labels must be highly accurate in order to teach your model to make correct predictions. The data labeling process requires several steps to ensure quality and accuracy.

Data Labeling Approaches

It’s important to select the right data labeling approach for your organization, as this is the step that requires the greatest investment of time and resources. Data labeling can be done using a number of methods (or a combination of methods), which include:

In-house: Use existing staff and resources. While you’ll have more control over the results, this method can be time-consuming and expensive, especially if you need to hire and train annotators from scratch.

Outsourcing: Hire temporary freelancers to label data. You’ll be able to evaluate the skills of these contractors but will have less control over how the workflow is organized.

Crowdsourcing: You may choose instead to crowdsource your data labeling needs through a trusted third-party data partner, an ideal option if you don’t have the resources internally. A data partner can provide expertise throughout the model build process and offer access to a large crowd of contributors who can handle massive amounts of data quickly. Crowdsourcing is ideal for companies that expect to ramp up toward large-scale deployments.

By machine: Data labeling can also be done by machine. ML-assisted data labeling should be considered, especially when training data must be prepared at scale. It can also be used to automate business processes that require data categorization.

The approach your organization takes will depend on the complexity of the problem you’re trying to solve, the skill level of your employees, and your budget.

Quality Assurance

Quality assurance (QA) is an often overlooked but critical component of the data labeling process. Be sure to have quality checks in place if you’re managing data preparation in-house. If you’re working with a data partner, they’ll have a QA process already in place. Why is QA so important? Labels on data must satisfy several characteristics: they must be informative, unique, and independent. The labels should also reflect a ground truth level of accuracy. For example, when labeling images for a self-driving car, all pedestrians, signs, and other vehicles must be correctly labeled within the image for the model to work successfully.

Train and Test

Once you have labeled data for training and it has passed QA, it is time to train your AI model using that data. From there, test it on a new set of unlabeled data to see if the predictions it makes are accurate. Your accuracy expectations will depend on what your model needs to do. If your model is processing radiology images to identify infection, the accuracy level may need to be higher than for a model that identifies products in an online shopping experience, as one could be a matter of life and death. Set your confidence threshold accordingly.

Use Human-in-the-Loop

When testing your data, humans should be involved in the process to provide ground truth monitoring. Using human-in-the-loop allows you to check that your model is making the right predictions, identify gaps in the training data, give feedback to the model, and retrain it as needed when it makes low-confidence or incorrect predictions.
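
As a rough illustration of this idea, the sketch below sends any prediction whose confidence falls below a threshold to a human review queue. The function name `route_predictions`, the tuple layout, and the 0.8 threshold are assumptions made for this example rather than features of any particular platform.

```python
def route_predictions(predictions, threshold=0.8):
    """Split predictions into auto-accepted items and items for human review.

    predictions: iterable of (item_id, label, confidence) tuples
    (a hypothetical layout chosen for this sketch).
    """
    accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            accepted.append((item_id, label))
        else:
            # Low-confidence predictions go to a human annotator.
            needs_review.append((item_id, label))
    return accepted, needs_review


predictions = [
    ("img_001", "pedestrian", 0.97),
    ("img_002", "sign", 0.55),
    ("img_003", "car", 0.80),
]
accepted, needs_review = route_predictions(predictions, threshold=0.8)
```

Labels corrected by reviewers can then be added back to the training set before the model is retrained. The right threshold depends on how costly a wrong prediction is for the use case.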

Scale

Create flexible data labeling processes that enable you to scale. Expect to iterate on these processes as your needs and use cases evolve.

Appen’s Own Data Labeling Expert: Meeta Dash

At Appen, we rely on our team of experts to help provide the best possible data annotation platform. Meeta Dash, our VP of Product Management, a Forbes Tech Council Contributor, and recent winner of VentureBeat’s AI in Mentorship award, helps ensure the Appen Data Annotation Platform exceeds industry standards in providing accurate data labeling services. Her top three insights on data labeling include:

The most successful teams start with a clear definition of use cases, target personas, and success metrics. This helps identify training data needs, ensure coverage across different scenarios, and mitigate potential bias due to a lack of diverse datasets. Including a diverse pool of contributors for data labeling can also help avoid bias introduced during the labeling process.

Data drift is more common than you might think. In the real world, the data your model sees changes every day, and a model you trained a month ago may not perform as you expect. So it’s important to build a scalable, automated training data pipeline to continuously train your model with new data.

Security and privacy considerations should be dealt with head-on and not as an afterthought. Wherever possible, redact sensitive data that is not needed to train an optimal model. Use a secure, enterprise-grade data labeling platform and, when working on data labeling projects with sensitive data, pick a secure contributor workforce that is trained to handle such data.
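
One simple way to redact data before it reaches annotators is a pattern-based pass over the text. The sketch below masks email addresses only. The pattern and the `[EMAIL]` placeholder are illustrative assumptions, and a real pipeline would need to cover many more kinds of sensitive fields.

```python
import re

# Deliberately simple pattern (an assumption for illustration): it will not
# match every valid address and may over-match in unusual text.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_emails(text, placeholder="[EMAIL]"):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


redacted = redact_emails("Contact jane.doe@example.com today.")
```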

What We Can Do For You

We provide data labeling services to improve machine learning at scale. As a global leader in our field, we give our clients the benefit of our ability to quickly deliver large volumes of high-quality data across multiple data types, including image, video, speech, audio, and text, for your specific AI program needs. Find out how high-quality data labeling can give you the confidence to deploy AI. Contact us to speak with an expert.

Data is the currency of the future.

With technology and AI steadily permeating our everyday lives, data and its proper use can have a significant impact on modern society.

Properly annotated data can be used effectively by ML algorithms to detect problems and propose practical solutions, which makes data annotation an integral part of this transformation.

Here’s what we’ll cover:

What is data labeling?

Unlabeled data vs labeled data

Data labeling approaches

Common types of data labeling

How does data labeling work

Best practices for data labeling

Data labeling: TL;DR

Pro tip: Speed up your labeling 10x by using the V7 auto-annotation tool.


What is data labeling?

Data labeling refers to the process of adding tags or labels to raw data such as images, videos, text, and audio.

These tags represent the class of objects the data belongs to and help a machine learning model learn to identify that class of objects when it encounters them in untagged data.

What is “training data” in machine learning?

Training data refers to data that has been collected to be fed to a machine learning model to help the model learn more about the data.

Training data can take different forms, including images, voice, text, or features, depending on the machine learning model being used and the task to be solved.

It can be annotated or unannotated. When training data is annotated, the corresponding label is referred to as ground truth.

Pro tip: Are you looking for quality datasets to label and train your models? Check out the list of 65+ datasets for machine learning.

“Ground truth” is a term used for information that is known ahead of time to be true.

Unlabeled data vs labeled data

The training dataset depends entirely on the type of machine learning task we want to focus on. Machine learning and deep learning algorithms can be broadly divided into three classes according to the type of data they require.

Supervised learning

Supervised learning, the most common type, is a kind of machine learning algorithm that requires data and corresponding annotated labels to train. Popular tasks like image classification and image segmentation fall under this paradigm.

The typical training procedure involves feeding annotated data to the machine to help the model learn, and testing the learned model on unannotated data.

To measure the accuracy of such a method, annotated data with hidden labels is typically used in the testing stage of the algorithm. Annotated data is therefore an absolute necessity for training machine learning models in a supervised manner.
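
The hold-out idea described above can be sketched in a few lines: part of the annotated data is set aside, its labels are hidden from the model, and the model's predictions are later compared against them. The helper names `split_dataset` and `accuracy`, and the 20% test fraction, are assumptions chosen for this example.

```python
import random


def split_dataset(examples, test_fraction=0.2, seed=0):
    """Shuffle annotated examples and split them into train and test sets."""
    rng = random.Random(seed)
    shuffled = examples[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(predicted, actual):
    """Share of predictions that match the hidden ground-truth labels."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


train_set, test_set = split_dataset(list(range(10)))
score = accuracy(["cat", "dog", "cat", "bird"], ["cat", "dog", "dog", "bird"])
```

During testing, only the inputs of `test_set` are shown to the model, and the withheld labels are used solely to compute the score.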

Unsupervised learning

In unsupervised learning, unannotated input data is provided and the model trains without any knowledge of the labels that the input data might have.

Common unsupervised training algorithms include autoencoders, whose target outputs are the same as their inputs. Unsupervised learning methods also include clustering algorithms that group the data into ‘n’ clusters, where ‘n’ is a hyperparameter.

Supervised vs. Unsupervised Learning.

Semi-supervised learning

In semi-supervised learning, a combination of both annotated and unannotated data is used to train the model.

While this reduces the cost of data annotation by using both kinds of data, training typically relies on a number of strong assumptions about the training data. Use cases of semi-supervised learning include protein sequence classification and Internet content analysis.

Pro tip: Dive deeper and check out Supervised vs. Unsupervised Learning: What’s the Difference?

What is ‘Human-in-the-Loop’ (HITL)?

The term Human-in-the-Loop most commonly refers to continuous supervision and validation of the AI model’s results by a human.

There are two main ways in which humans become part of the machine learning loop:

Labeling training data: Human annotators are needed to label the training data that is fed to (supervised or semi-supervised) machine learning models.

Training the model: Data scientists train the model by constantly monitoring model metrics such as the loss function and predictions. At times, model performance and predictions are validated by a human, and the results of the validation are fed back to the model.

Data labeling approaches

Data labeling can be done with different approaches, depending on the problem statement, the time frame of the project, and the number of people involved in the work.

While in-house labeling and crowdsourcing are very common, the term can also extend to newer forms of labeling and annotation that use AI and active learning for the task.

The most common approaches for annotation of data are listed below.

In-house data labeling

In-house data labeling secures the highest possible labeling quality and is generally done by data scientists and data engineers employed by the organization.

High-quality labeling is crucial for industries like insurance or healthcare, and it often requires consultation with experts in the corresponding fields to label the data correctly.

Pro tip: Check out 21+ Best Healthcare Datasets for Computer Vision if you are looking for medical data.

As is expected for in-house labeling, the time required to annotate increases drastically as annotation quality increases, which makes the entire data labeling and cleaning process very slow.

Crowdsourcing

Crowdsourcing refers to the process of obtaining annotated data with the help of a large number of freelancers registered on a crowdsourcing platform.

The datasets annotated this way consist mostly of trivial data, like images of animals, plants, and the natural environment, that requires no additional expertise. The task of annotating a simple dataset is therefore often crowdsourced to platforms that have tens of thousands of registered data annotators.

Outsourcing

Outsourcing is a middle ground between crowdsourcing and in-house data labeling, where the task of data annotation is outsourced to an organization or an individual.

One of the advantages of outsourcing to individuals is that they can be assessed on the particular topic before the work is handed over.

This approach to building annotated datasets is ideal for projects that do not have much funding yet need a significant quality of data annotation.

Pro tip: V7 works with a highly qualified network of expert annotators to help you label your data quicker. Discover more about V7 Labeling Services.

Machine-based annotation

One of the most novel forms of annotation is machine-based annotation. Machine-based annotation refers to the use of annotation tools and automation, which can drastically increase the speed of data annotation without sacrificing quality.

The good news is that recent automation developments in traditional machine annotation tools, using unsupervised and semi-supervised machine learning algorithms, have helped significantly reduce the workload on human labelers.

Unsupervised algorithms like clustering, and recently developed semi-supervised algorithms for AI data labeling like active learning, are tools that can reduce annotation times by leaps and bounds.
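
To give a feel for how active learning can cut annotation effort, the sketch below uses least-confidence sampling, one common selection strategy: the model scores the unlabeled pool, and only the items it is least sure about are sent to human annotators. The function name, the `budget` parameter, and the probability values are assumptions made for this example.

```python
def select_for_labeling(probabilities, budget):
    """Pick the `budget` items whose top predicted probability is lowest.

    probabilities: dict mapping item ID to a list of class probabilities
    produced by the current model (hypothetical values in this sketch).
    """
    uncertainty = {
        item_id: 1.0 - max(probs) for item_id, probs in probabilities.items()
    }
    # Most uncertain items first.
    ranked = sorted(uncertainty, key=uncertainty.get, reverse=True)
    return ranked[:budget]


probabilities = {
    "doc_a": [0.98, 0.01, 0.01],
    "doc_b": [0.40, 0.35, 0.25],
    "doc_c": [0.70, 0.20, 0.10],
}
to_label = select_for_labeling(probabilities, budget=2)
```

Items the model is already confident about (such as `doc_a` here) are skipped, so human effort is spent where a label is likely to teach the model the most.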

Common types of data labeling

From what we have seen so far, data labeling is all about the task we want a machine learning algorithm to perform with our data.

For example:

If we want a machine learning algorithm for the task of defect inspection, we feed it data such as images of rust or cracks. The corresponding annotation would be polygons for localizing those cracks or rust, and tags for naming them.

Here are some common AI domains and their respective data annotation types.

Computer Vision

Computer vision (or the study of helping computers “see” the world around them) requires annotated visual data in the form of images. Data annotations in computer vision can be of different types, depending on the visual task that we want the model to perform.

Common data annotation types based on the task are listed below.

Image Classification: Data annotation for image classification requires adding a tag to the image being worked on. The number of unique tags in the entire database is the number of classes that the model can classify.

Classification problems can be further divided into:

Binary classification (which contains only two tags)

Multiclass classification (which contains multiple tags)

In addition, multi-label classification, in which each image has more than one tag, can also be seen, especially in the case of disease detection.
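
The difference between single-label and multi-label classification shows up in how the tags are encoded. The sketch below is a minimal illustration with a made-up class list: a single-label image gets a one-hot vector, while a multi-label image gets a multi-hot vector with one position set per tag.

```python
# Hypothetical class list used only for this example.
CLASSES = ["cat", "dog", "bird"]


def one_hot(label, classes=CLASSES):
    """Encode a single tag (binary or multiclass classification)."""
    return [1 if c == label else 0 for c in classes]


def multi_hot(labels, classes=CLASSES):
    """Encode several tags for one image (multi-label classification)."""
    present = set(labels)
    return [1 if c in present else 0 for c in classes]


single = one_hot("dog")
multiple = multi_hot(["cat", "bird"])
```

The length of each vector equals the number of unique tags in the dataset, which matches the number of classes the model can predict.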

Image Segmentation: In image segmentation, the task of the computer vision algorithm is to separate objects in the images from their backgrounds and from other objects in the same image. The annotation is generally a pixel map of the same size as the image, containing 1 where the object is present and 0 elsewhere, where no annotation has been created.

For multiple objects to be segmented in the same image, the pixel maps for each object are concatenated channel-wise and used as ground truth for the model.
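
As a small sketch of this layout, the code below starts from an integer label map (an assumed input format in which each pixel holds an object ID and 0 means background) and produces one binary pixel map per object, stacked as channels. Plain nested lists stand in for image arrays to keep the example dependency-free.

```python
def label_map_to_channels(label_map, object_ids):
    """Build one binary mask per object and stack them channel-wise.

    Returns a list shaped [channel][row][column], where each channel holds
    1 where that object is present and 0 elsewhere.
    """
    return [
        [[1 if pixel == object_id else 0 for pixel in row] for row in label_map]
        for object_id in object_ids
    ]


# A tiny 2 x 3 "image" containing two annotated objects (IDs 1 and 2).
label_map = [
    [0, 1, 1],
    [2, 2, 0],
]
channels = label_map_to_channels(label_map, object_ids=[1, 2])
```

Every channel has the same height and width as the image, so the stacked result can be compared pixel by pixel with a model's per-object output.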

Object Detection: Object detection refers to detecting objects and their locations by means of computer vision.

Data annotation in object detection is vastly different from that in image classification, with each object annotated using a bounding box. A bounding box is the smallest rectangle that contains the object in the image. Bounding box annotations are generally accompanied by tags, with each bounding box in the image given a label.

Usually, the coordinates of these bounding boxes and their corresponding tags are stored in a separate JSON file in dictionary format, with the image number or image ID as the dictionary key.
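
A minimal sketch of such an annotation file follows. The field names (`label`, `bbox`), the `[x_min, y_min, x_max, y_max]` coordinate order, and the image ID are assumptions for illustration, since real tools and dataset formats each define their own schema.

```python
import json


def tight_bbox(points):
    """Smallest axis-aligned rectangle containing all (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return [min(xs), min(ys), max(xs), max(ys)]


# Pixels known to belong to one object (hypothetical values).
object_pixels = [(34, 60), (120, 50), (80, 96)]

# Dictionary keyed by image ID; each image maps to a list of tagged boxes.
annotations = {
    "img_0001": [
        {"label": "rust", "bbox": tight_bbox(object_pixels)},
    ],
}

serialized = json.dumps(annotations, indent=2)
restored = json.loads(serialized)
```

Keying the dictionary by image ID makes it cheap to look up every box for a given image when building training batches.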

Pose Estimation: Pose estimation refers to the use of computer vision tools to estimate the pose of a person in an image. Pose estimation works by detecting key points in the body and correlating these key points to obtain the pose. The corresponding ground truth for a pose estimation model would therefore be the key points from an image. This is simple coordinate data labeled with tags, where each coordinate gives the location of a particular key point, identified by its tag, in the image.
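
The sketch below shows one possible shape for key point ground truth: a mapping from a tag to an (x, y) coordinate, plus a list of tag pairs describing which key points are connected. The tag names, coordinates, and the `SKELETON` list are hypothetical and not taken from any specific dataset format.

```python
pose_annotation = {
    "image_id": "img_0001",
    "keypoints": {
        "left_shoulder": (110, 80),
        "left_elbow": (120, 130),
        "left_wrist": (125, 175),
    },
}

# Pairs of key point tags that are joined to form limbs (assumed layout).
SKELETON = [("left_shoulder", "left_elbow"), ("left_elbow", "left_wrist")]


def limb_segments(keypoints, skeleton):
    """Return coordinate pairs for every limb whose two key points are labeled."""
    return [
        (keypoints[a], keypoints[b])
        for a, b in skeleton
        if a in keypoints and b in keypoints
    ]


segments = limb_segments(pose_annotation["keypoints"], SKELETON)
```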

Pro tip: Check out 15+ Top Computer Vision Project Ideas for Beginners to build your own computer vision model in less than an hour.

Natural Language Processing

Natural language processing (or NLP for short) refers to the analysis of human languages and their forms during interaction both with other humans and with machines. Originally a part of computational linguistics, NLP has developed further with the help of artificial intelligence and deep learning.

Here are some of the data labeling approaches for labeling NLP data.

Entity annotation and linking: Entity annotation refers to the annotation of entities or particular features in the unlabeled data corpus.

The word ‘entity’ can take different forms depending on the task at hand.

For the annotation of proper nouns, we have named entity annotation, which refers to the identification and tagging of names in the text. For the analysis of phrases, we refer to the process as keyphrase tagging, where keywords or keyphrases from the text are annotated. For the analysis and annotation of functional elements of a text, like verbs, nouns, and prepositions, we use part-of-speech tagging, abbreviated as POS tagging.

POS tagging is used in parsing, machine translation, and the generation of linguistic data.

Entity annotation is followed by entity linking, where the annotated entities are linked to data repositories around them to assign a unique identity to each of these entities. This is particularly important when the text contains data that can be ambiguous and needs to be disambiguated.

Entity linking is often used for semantic annotation, where the semantic information of entities is added as annotations.
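
Entity annotations are often stored as character spans over the raw text, with an optional identifier that links each entity to an external repository. The sketch below assumes that layout. The field names and the `kb_id` values are hypothetical placeholders, not identifiers from a real knowledge base.

```python
text = "Ada Lovelace was born in London."

entities = [
    {"start": 0, "end": 12, "label": "PERSON", "kb_id": "example:person/1"},
    {"start": 25, "end": 31, "label": "LOCATION", "kb_id": "example:place/7"},
]


def surface_forms(text, entities):
    """Return the text covered by each annotated span."""
    return [text[e["start"]:e["end"]] for e in entities]


def has_overlap(entities):
    """Check whether any two spans overlap, a common annotation error."""
    ordered = sorted(entities, key=lambda e: e["start"])
    return any(a["end"] > b["start"] for a, b in zip(ordered, ordered[1:]))


forms = surface_forms(text, entities)
```

Keeping offsets instead of copied strings means the same mention can be located exactly, even when a name appears more than once in the text.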

Text classification: Similar to image classification, where we assign a label to image data, in text classification we assign one or more labels to blocks of text.

While in entity annotation and linking we separate out entities inside each line of the text, in text classification the text is considered as a whole and a set of tags is assigned to it. Types of text classification include classification on the basis of sentiment (for sentiment analysis) and classification on the basis of the topic the text wants to convey (for topic categorization).

Phonetic annotation: Phonetic annotation refers to the labeling of commas and semicolons present in the text and is especially necessary in chatbots that generate textual information based on the input provided to them. Commas and stops in unintended places can change the structure of a sentence, which adds to the importance of this step.

Audio annotation

Audio annotation is necessary for the proper use of audio data in machine learning tasks like speaker identification and the extraction of linguistic tags from audio data. While speaker identification is the simple addition of a label or a tag to an audio file, annotating linguistic data involves a more complex procedure.

To annotate linguistic data, the linguistic regions are annotated first, since no audio is expected to contain 100 percent speech. Surrounding sounds are tagged, and a transcript of the speech is created for further processing with the help of NLP algorithms.
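
One way to picture this kind of annotation is as a list of time-stamped segments, each marked as speech or background sound, with a transcript attached to the speech regions. The segment fields and values below are assumptions made for this sketch.

```python
# Times are in seconds from the start of the recording (hypothetical data).
segments = [
    {"start": 0.0, "end": 1.5, "kind": "noise", "tag": "traffic"},
    {"start": 1.5, "end": 4.0, "kind": "speech", "speaker": "spk_1",
     "transcript": "hello there"},
    {"start": 4.0, "end": 5.0, "kind": "noise", "tag": "door"},
]


def speech_ratio(segments):
    """Fraction of the annotated duration that is speech."""
    total = sum(s["end"] - s["start"] for s in segments)
    speech = sum(s["end"] - s["start"] for s in segments if s["kind"] == "speech")
    return speech / total


def full_transcript(segments):
    """Join the transcripts of all speech segments in time order."""
    ordered = sorted(segments, key=lambda s: s["start"])
    return " ".join(s["transcript"] for s in ordered if s["kind"] == "speech")


ratio = speech_ratio(segments)
transcript = full_transcript(segments)
```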

How does data labeling work?

Data labeling processes work in the following chronological order:

Data collection: Raw data that will be used to train the model is collected. This data is cleaned and processed to form a database that can be fed directly to the model.

Data tagging: Various data labeling approaches are used to tag the data and associate it with meaningful context that the machine can use as ground truth.

Quality assurance: The quality of data annotations is often determined by how accurate the tags are for a particular data point and how accurate the coordinate points are for bounding box and keypoint annotations. QA algorithms like the consensus algorithm and the Cronbach’s alpha test are very useful for determining the average accuracy of these annotations.
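
The QA checks mentioned above can be sketched with three small helpers. Majority voting is used here as one simple form of consensus. Cronbach's alpha is computed by treating each annotator as an "item", and intersection over union (IoU), which the text does not name, is added as a common way to compare bounding box coordinates. The function names and inputs are assumptions for illustration.

```python
from collections import Counter
from statistics import pvariance


def majority_vote(labels):
    """Return the most common label and the share of annotators who chose it.

    Ties are resolved in favor of the label seen first.
    """
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


def cronbach_alpha(scores_by_annotator):
    """Cronbach's alpha for numeric scores, one inner list per annotator.

    Assumes at least two annotators and non-constant total scores.
    """
    k = len(scores_by_annotator)
    item_variance = sum(pvariance(scores) for scores in scores_by_annotator)
    totals = [sum(point) for point in zip(*scores_by_annotator)]
    return (k / (k - 1)) * (1 - item_variance / pvariance(totals))


def iou(box_a, box_b):
    """Intersection over union of two [x_min, y_min, x_max, y_max] boxes."""
    width = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    height = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = width * height
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return intersection / (area_a + area_b - intersection)


label, agreement = majority_vote(["cat", "cat", "dog"])
alpha = cronbach_alpha([[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]])
overlap = iou([0, 0, 10, 10], [5, 5, 15, 15])
```

Items with low agreement or low IoU between annotators are natural candidates for a second review pass.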

Pro tip: Check out 20+ Open Source Computer Vision Datasets to find more quality data.

Labeling data with V7

V7 provides a wide range of tools that are essential for data annotation and tagging, allowing us to perform accurate annotations for segmentation, classification, object detection, or pose estimation at lightning-fast speeds.

V7 also lets you train your models on the web itself, making the entire process of building an AI model quick and easy.

Here is a short step-by-step guide you can follow to learn how to label your data with V7.

Find quality data: The first step towards high-quality training data is high-quality raw data. The raw data must be pre-processed and cleaned before it is sent for annotation.

Upload your data: After data collection, upload your raw data to V7. Go to New Dataset and give it a name.

Add your data in the next section, then add the classes you want along with the type of annotation each one requires.

Forgot to add a class you need?

Don’t worry: you can always add them later!

Annotate: V7 Labs provides a myriad of data labeling tools to help you annotate your machine learning data and complete your data labeling tasks.

Let us take a look at the bounding box tool and the auto-annotate tool on some data we uploaded.

Bounding box tool

The bounding box tool is used to fit bounding boxes onto objects and tag them accordingly.

Here is an example of its use:

Find out more: Annotating With Bounding Boxes: Quality Best Practices.

Auto-annotate tool

The auto-annotate tool is a specialized feature of V7 that sets it apart from other annotators. It can automatically capture fine-grained segmentation maps from images, making it one of the most useful tools for creating segmentation ground truth.

An example of the powerful auto-annotate tool can be seen here:

Train your model: Create your neural network and name it accordingly. Train your model on the annotated data you generated.

Best practices for data labeling

With supervised learning being the most common form of machine learning today, data labeling finds itself in almost every workplace that talks about AI.

Here are some of the best practices for data labeling for AI to make sure your model isn’t crumbling due to poor data:

Proper dataset collection and cleaning: When it comes to ML, one of the primary things we should take care of is the data. The data should be diverse but highly specific to the problem statement. Diverse data allows us to run inference with ML models in multiple real-world scenarios, while maintaining specificity reduces the chance of errors. Similarly, appropriate bias checks prevent the model from overfitting to a particular scenario.

Proper annotation approach: The next most important thing for data labeling is the assignment of the labeling task. The data has to be labeled via in-house labeling, outsourcing, or crowdsourcing. Choosing the right data labeling approach helps keep the budget in check without cutting down the annotation accuracy.

QA checks: Quality Assurance checks are absolutely mandatory for data that has been labeled via crowdsourcing or outsourcing means. QA checks prevent false labels and improperly labeled data from being fed to ML algorithms.